Occlusion in augmented reality hides virtual objects behind real-world objects that are closer to the viewer, enhancing realism by maintaining proper spatial relationships. Clipping removes the parts of virtual objects that fall outside a boundary such as the edge of the view or a clip plane; when the cut is visible and unintended, it breaks immersion and reduces visual coherence. Effective AR systems rely on occlusion to integrate digital content with the physical environment and reserve clipping for deliberately bounding what is drawn.

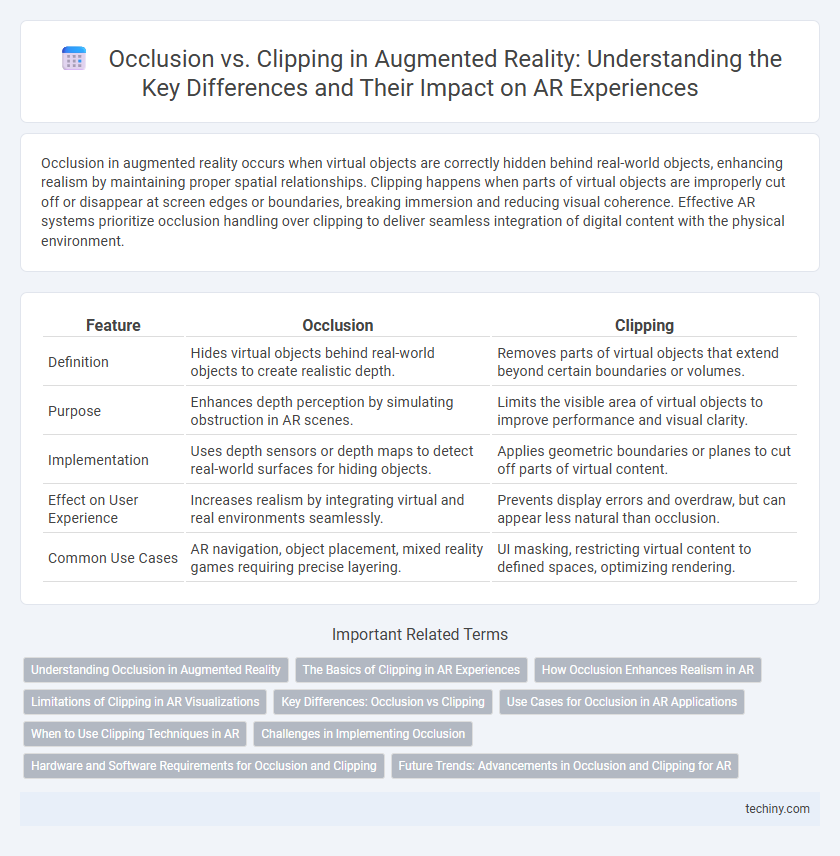

Table of Comparison

| Feature | Occlusion | Clipping |
|---|---|---|
| Definition | Hides virtual objects behind real-world objects to create realistic depth. | Removes parts of virtual objects that extend beyond certain boundaries or volumes. |
| Purpose | Enhances depth perception by simulating obstruction in AR scenes. | Limits the visible area of virtual objects to improve performance and visual clarity. |
| Implementation | Uses depth sensors or depth maps to detect real-world surfaces for hiding objects. | Applies geometric boundaries or planes to cut off parts of virtual content. |
| Effect on User Experience | Increases realism by integrating virtual and real environments seamlessly. | Prevents display errors and overdraw, but can appear less natural than occlusion. |
| Common Use Cases | AR navigation, object placement, mixed reality games requiring precise layering. | UI masking, restricting virtual content to defined spaces, optimizing rendering. |

Understanding Occlusion in Augmented Reality

Occlusion in augmented reality occurs when virtual objects are correctly hidden behind real-world objects, enhancing the realism of the mixed environment by maintaining proper depth perception. Accurate occlusion requires per-pixel depth sensing and real-time spatial mapping, using technologies such as LiDAR or stereo cameras, so the renderer can tell whether a real surface or a virtual object is closer to the viewer. Clipping, by contrast, cuts virtual objects off at rendering boundaries such as the camera's near plane or a clip plane; it can disrupt immersion when the cut is visible, but it involves no knowledge of the physical surroundings.
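The depth comparison behind occlusion can be sketched in a few lines. This is a minimal illustration, not the method of any particular AR framework: the function name `composite_with_occlusion`, the nested-list image layout, and the use of `None` for "no virtual content at this pixel" are all assumptions made for the example.

```python
from typing import List, Optional

Pixel = str  # stand-in for a color value; a real renderer would use RGB(A)


def composite_with_occlusion(
    camera: List[List[Pixel]],
    virtual_color: List[List[Optional[Pixel]]],
    virtual_depth: List[List[float]],
    real_depth: List[List[float]],
) -> List[List[Pixel]]:
    """Show a virtual pixel only where it is closer than the real surface.

    All four inputs are assumed to share the same resolution, with depths
    measured in the same unit from the same camera.
    """
    output = []
    for y, camera_row in enumerate(camera):
        output_row = []
        for x, camera_pixel in enumerate(camera_row):
            virtual_pixel = virtual_color[y][x]
            # Per-pixel depth test: the virtual pixel wins only if it exists
            # and lies in front of the measured real-world surface.
            if virtual_pixel is not None and virtual_depth[y][x] < real_depth[y][x]:
                output_row.append(virtual_pixel)
            else:
                output_row.append(camera_pixel)
        output.append(output_row)
    return output
```

In practice this test usually runs on the GPU against a depth texture, but the logic per pixel is the same comparison.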

The Basics of Clipping in AR Experiences

Clipping in augmented reality restricts the display of virtual objects to the camera's view or to designated boundaries, discarding any geometry that falls outside. It keeps the scene clean by preventing virtual elements from rendering outside their intended region, for example a hologram confined to a tabletop or a panel masked to a frame. Clipping is purely geometric: it works against boundaries defined in the renderer, whereas occlusion deals with layering and depth ordering between real and virtual objects.
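As a rough sketch of what "cutting at a boundary" means geometrically, the example below clips a convex polygon against a single plane, which is one step of the classic Sutherland-Hodgman approach. The names `signed_distance` and `clip_polygon` and the plane convention (keep points where `dot(normal, p) - offset >= 0`) are assumptions chosen for illustration.

```python
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


def signed_distance(point: Vec3, normal: Vec3, offset: float) -> float:
    """Distance-like value: non-negative on the kept side of the plane."""
    return sum(point[k] * normal[k] for k in range(3)) - offset


def clip_polygon(polygon: List[Vec3], normal: Vec3, offset: float) -> List[Vec3]:
    """Return the part of a convex polygon on the kept side of one plane."""
    clipped: List[Vec3] = []
    count = len(polygon)
    for i in range(count):
        a = polygon[i]
        b = polygon[(i + 1) % count]
        da = signed_distance(a, normal, offset)
        db = signed_distance(b, normal, offset)
        if da >= 0:
            clipped.append(a)  # vertex is inside or exactly on the plane
        if da * db < 0:
            # The edge strictly crosses the plane: add the intersection point.
            t = da / (da - db)
            clipped.append(tuple(a[k] + t * (b[k] - a[k]) for k in range(3)))
    return clipped
```

Clipping a triangle with vertices `(0, 0, 0)`, `(2, 0, 0)`, `(0, 2, 0)` to the half-space `x <= 1` (normal `(-1, 0, 0)`, offset `-1`) yields a four-sided polygon with a straight cut along `x = 1`. Note that nothing in the calculation refers to the real environment.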

How Occlusion Enhances Realism in AR

Occlusion in augmented reality improves realism by accurately hiding virtual objects behind real-world elements, creating seamless depth perception that mimics natural vision. Unlike clipping, which simply cuts off parts of virtual objects, occlusion uses spatial mapping and depth sensing to ensure virtual and physical objects interact convincingly. This precise synchronization between digital content and the physical environment significantly increases immersion and user engagement in AR applications.

Limitations of Clipping in AR Visualizations

Clipping cannot represent depth relationships with the real world, because clip boundaries are fixed planes or volumes rather than measurements of the scene. Used as a substitute for occlusion, it leaves virtual objects intersecting real-world elements or cut off along straight edges that do not match the real geometry, which makes the experience look unrealistic and disruptive. Occlusion techniques based on depth sensing layer virtual content per pixel and so handle the complex real-world geometry that clipping cannot.

Key Differences: Occlusion vs Clipping

Occlusion in augmented reality refers to objects being visually blocked by other real or virtual elements that are closer to the viewer, preserving natural depth perception and spatial relationships within the scene. Clipping cuts off parts of virtual objects at a boundary: the edge of the camera's view, the near or far plane, or a clip region set by the application. When it is unintended, for instance because of improper rendering setup or misaligned tracking, the abrupt cut breaks immersion. The key difference is that occlusion depends on the measured depth of the environment and enhances realism by representing overlaps accurately, while clipping depends only on renderer-defined geometry and can produce visual artifacts that hinder the integration of virtual content with the physical environment.

Use Cases for Occlusion in AR Applications

Occlusion in augmented reality (AR) enhances realism by allowing virtual objects to be accurately hidden behind real-world elements, improving spatial awareness and user interaction. Use cases for occlusion include navigation apps where virtual arrows appear behind physical obstacles, interactive gaming experiences with seamless object layering, and industrial maintenance where digital instructions are hidden behind machinery parts. This technique significantly boosts immersion and functionality by aligning virtual content precisely within the physical environment.

When to Use Clipping Techniques in AR

Clipping techniques in augmented reality are useful when virtual content must stay confined to a defined region without obstructing the rest of the user's view. Use clipping when digital elements should be only partially visible, such as a cutaway view or a panel masked to a frame, since limiting rendering to specific viewports or object bounds prevents content from spilling outside its region. Clipping also helps where performance is critical, because geometry and pixels outside the clipping region are excluded and never have to be shaded.
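The performance benefit comes from rejecting work early. One simple way to picture it, assuming axis-aligned bounding boxes and an axis-aligned clipping region (both simplifications, and the names `Box` and `classify` are hypothetical), is to sort objects into three groups before rendering:

```python
from dataclasses import dataclass
from typing import Tuple

Vec3 = Tuple[float, float, float]


@dataclass(frozen=True)
class Box:
    """Axis-aligned box given by its minimum and maximum corners."""
    lo: Vec3
    hi: Vec3


def classify(obj: Box, region: Box) -> str:
    """Classify an object's bounds against the clipping region.

    "outside": skip the object entirely (no draw call needed).
    "inside":  draw normally, no per-pixel clipping needed.
    "partial": draw with clipping enabled so the overhang is cut away.
    """
    # Separated on any axis means there is no overlap at all.
    if any(obj.hi[k] < region.lo[k] or obj.lo[k] > region.hi[k] for k in range(3)):
        return "outside"
    # Fully contained on every axis.
    if all(region.lo[k] <= obj.lo[k] and obj.hi[k] <= region.hi[k] for k in range(3)):
        return "inside"
    return "partial"
```

Only the "partial" group pays the cost of actual clipping; the "outside" group costs nothing beyond the bounds check.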

Challenges in Implementing Occlusion

Implementing occlusion in augmented reality faces challenges such as accurately detecting complex real-world surfaces and ensuring depth consistency between virtual and physical objects. Limitations in sensor precision and environmental variability often lead to misalignment and visual artifacts, disrupting immersion. High computational demands for real-time occlusion processing also constrain performance on mobile AR devices.
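One common source of the artifacts described above is a depth map that is noisy or has missing samples, which makes occlusion edges flicker from frame to frame. The sketch below shows one possible mitigation, an exponential moving average over time that ignores missing samples. The function name `smooth_depth`, the blend factor `alpha`, and the convention that `0.0` marks a missing sample are assumptions for this example; real sensors and SDKs report invalid depth in different ways.

```python
from typing import List

DepthMap = List[List[float]]


def smooth_depth(
    previous: DepthMap,
    current: DepthMap,
    alpha: float = 0.3,
    invalid: float = 0.0,  # assumed marker for "no reading at this pixel"
) -> DepthMap:
    """Blend the new depth frame into the running estimate, pixel by pixel."""
    smoothed = []
    for prev_row, cur_row in zip(previous, current):
        row = []
        for prev, cur in zip(prev_row, cur_row):
            if cur == invalid:
                row.append(prev)  # no new reading: keep the old estimate
            elif prev == invalid:
                row.append(cur)   # first valid reading: take it as is
            else:
                row.append(prev + alpha * (cur - prev))  # move part of the way
        smoothed.append(row)
    return smoothed
```

A smaller `alpha` gives steadier edges but reacts more slowly when real objects move, so the value is a trade-off rather than a fixed constant.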

Hardware and Software Requirements for Occlusion and Clipping

Occlusion in augmented reality demands depth-capable hardware, such as depth sensors and LiDAR, to detect and map real-world objects, alongside software that supports real-time 3D environment reconstruction and object recognition. Clipping needs no additional sensing: it is a rendering operation, so the standard cameras and IMUs already used for tracking are sufficient, and the software only manages rendering boundaries and viewport constraints to prevent virtual objects from rendering outside intended areas. Efficient occlusion relies on powerful GPUs and optimized spatial mapping software to blend virtual elements with the physical world, while clipping runs on lightweight rendering engines using standard operations such as clip planes, scissor rectangles, and stencil masks.

Future Trends: Advancements in Occlusion and Clipping for AR

Future trends in augmented reality emphasize enhanced occlusion and clipping techniques, leveraging deep learning algorithms to accurately map real-world environments and seamlessly integrate virtual objects. Innovations in sensor fusion and real-time 3D reconstruction enable precise depth perception, minimizing visual artifacts and improving user immersion. Continued development in hardware capabilities, such as LiDAR and advanced cameras, supports more dynamic and context-aware occlusion and clipping, driving forward AR applications in gaming, education, and industrial design.

Occlusion vs Clipping Infographic

techiny.com
