Visual odometry uses camera data to estimate a device's position and orientation by analyzing how visual features move through the environment, and it is highly accurate in feature-rich settings. Inertial odometry relies on accelerometer and gyroscope sensors to track movement by measuring acceleration and angular velocity, which makes it robust in environments where visual data is unreliable or unavailable. Combining both methods improves augmented reality experiences by keeping spatial tracking precise and continuous even under challenging conditions.

Comparison Table

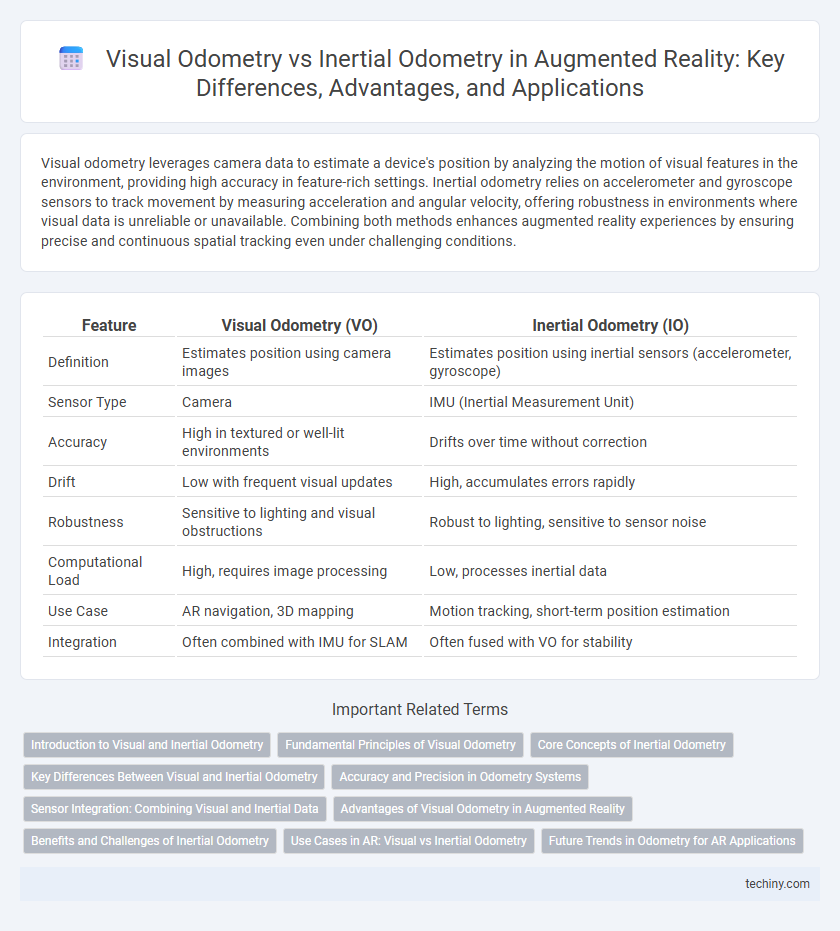

| Feature | Visual Odometry (VO) | Inertial Odometry (IO) |
|---|---|---|
| Definition | Estimates pose (position and orientation) from camera images | Estimates pose from inertial sensors (accelerometer, gyroscope) |
| Sensor Type | Camera | IMU (Inertial Measurement Unit) |
| Accuracy | High in textured, well-lit environments | High over short intervals; degrades as errors accumulate |
| Drift | Low to moderate; accumulates slowly with distance traveled | High; integration errors accumulate rapidly without correction |
| Robustness | Sensitive to lighting, low texture, and visual obstructions | Robust to lighting; sensitive to sensor noise and bias |
| Computational Load | High; requires image processing | Low; processes compact inertial samples |
| Use Case | AR navigation, 3D mapping | Motion tracking, short-term position estimation |
| Integration | Often combined with an IMU for visual-inertial odometry and SLAM | Often fused with VO for stability |

Introduction to Visual and Inertial Odometry

Visual odometry uses camera images to estimate a device's position and orientation by analyzing sequential frames for motion cues, enabling precise localization in visually rich environments. Inertial odometry relies on data from accelerometers and gyroscopes to compute movement by measuring linear acceleration and angular velocity, providing robust tracking even in visually challenging conditions. Combining both methods leverages visual features for accuracy and inertial sensors for stability, enhancing overall augmented reality tracking performance.

Fundamental Principles of Visual Odometry

Visual odometry analyzes sequential camera images to estimate a device's position and orientation. It does this by tracking visual features and calculating how they move between frames. The pipeline typically consists of four steps:

- feature detection
- feature matching across frames
- relative-motion estimation
- triangulation of matched features to recover 3D structure

Together these steps enable accurate localization in augmented reality environments. Inertial odometry uses accelerometers and gyroscopes instead, whereas visual odometry draws on the rich spatial information in images for precise spatial awareness.
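
The motion-calculation step can be made concrete with a minimal Python sketch, under a strong simplifying assumption: features are already detected and matched, and motion is treated as planar. Each feature is then a 2D point, and the motion between frames is a single rotation plus translation. The function name `estimate_rigid_motion_2d` is hypothetical. Real visual odometry recovers full 3D camera motion from image features (for example via essential-matrix estimation), so this only illustrates the least-squares idea.

```python
import math

def estimate_rigid_motion_2d(prev_pts, curr_pts):
    """Least-squares rotation angle and translation mapping prev_pts onto curr_pts.

    Hypothetical helper. prev_pts[i] and curr_pts[i] are the same feature
    (an (x, y) tuple) matched across two consecutive frames.
    """
    n = len(prev_pts)
    if n < 2 or n != len(curr_pts):
        raise ValueError("need at least two matched point pairs")
    # Centroids of each point set
    px = sum(p[0] for p in prev_pts) / n
    py = sum(p[1] for p in prev_pts) / n
    qx = sum(q[0] for q in curr_pts) / n
    qy = sum(q[1] for q in curr_pts) / n
    # Sum dot and cross products of the centered pairs
    s_dot = s_cross = 0.0
    for (x1, y1), (x2, y2) in zip(prev_pts, curr_pts):
        ax, ay = x1 - px, y1 - py
        bx, by = x2 - qx, y2 - qy
        s_dot += ax * bx + ay * by
        s_cross += ax * by - ay * bx
    # Rotation angle that best aligns the centered sets in the least-squares sense
    theta = math.atan2(s_cross, s_dot)
    c, s = math.cos(theta), math.sin(theta)
    # Translation moves the rotated previous centroid onto the current centroid
    tx = qx - (c * px - s * py)
    ty = qy - (s * px + c * py)
    return theta, (tx, ty)
```

A single mismatched feature can skew a least-squares fit. Practical pipelines therefore usually wrap a step like this in an outlier-rejection scheme such as RANSAC.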

Core Concepts of Inertial Odometry

Inertial odometry relies on inertial measurement units (IMUs), which combine accelerometers and gyroscopes, to estimate device motion by tracking acceleration and angular velocity. It needs no external references, so it provides continuous short-term position tracking. This matters for augmented reality applications where GPS signals are unavailable or unreliable. However, integrating the measurements also integrates their errors. Inertial odometry is therefore usually paired with sensor fusion algorithms such as Kalman filtering, which correct drift using additional measurements and improve accuracy over time.
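
The core of inertial odometry is dead reckoning: integrate angular velocity once for heading, and acceleration twice for position. The Python sketch below makes several assumptions:

- the device is planar and moves along its heading
- gravity has already been removed from the accelerometer reading
- simple Euler integration is used

`dead_reckon_2d` is a hypothetical helper, not an API from any particular library.

```python
import math

def dead_reckon_2d(samples, dt):
    """Integrate planar IMU samples into a pose track (hypothetical helper).

    samples: list of (forward_accel_mps2, yaw_rate_radps) in the body frame,
             assumed gravity-compensated.
    dt: sample period in seconds.
    Returns a list of (x, y, heading) after each sample.
    """
    x = y = heading = 0.0
    speed = 0.0
    track = []
    for accel, yaw_rate in samples:
        heading += yaw_rate * dt              # gyroscope: integrate angular velocity once
        speed += accel * dt                   # accelerometer: first integration gives speed
        x += speed * math.cos(heading) * dt   # second integration gives position
        y += speed * math.sin(heading) * dt
        track.append((x, y, heading))
    return track
```

Feeding it samples from a stationary device with a small constant accelerometer bias exposes the drift problem. The estimated position keeps growing even though nothing moves.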

Key Differences Between Visual and Inertial Odometry

Visual odometry relies on camera images to estimate motion by analyzing changes in visual features. It offers high spatial accuracy but performs poorly in low-light or textureless environments. Inertial odometry uses data from accelerometers and gyroscopes to track movement. It provides fast, continuous pose estimates but is prone to drift over time due to sensor noise and bias. Because their strengths are complementary, combining the two through sensor fusion improves robustness and accuracy for augmented reality applications.
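
One simple way to exploit these complementary strengths is a complementary filter, a lighter-weight alternative to Kalman filtering. The Python sketch below fuses two heading sources:

- a gyroscope's yaw rate: high rate, but drifting
- a heading supplied by visual odometry: drift-free, but missing in some frames

The following are illustrative assumptions, not part of any standard API:

- the function name
- the convention of passing `None` for frames without a visual estimate
- the default weight `alpha=0.98`

Angle wraparound is ignored for brevity.

```python
def complementary_heading(gyro_rates, visual_headings, dt, alpha=0.98):
    """Fuse gyro yaw rate with visual-odometry heading (hypothetical helper).

    gyro_rates: yaw-rate samples in rad/s.
    visual_headings: heading in radians per sample, or None when vision
        provides no estimate (e.g. a dark or blurred frame).
    alpha: weight on the gyro-propagated estimate (assumed value).
    Angle wraparound is not handled in this sketch.
    """
    heading = visual_headings[0] if visual_headings[0] is not None else 0.0
    fused = []
    for rate, vis in zip(gyro_rates, visual_headings):
        predicted = heading + rate * dt   # fast inertial prediction
        if vis is None:
            heading = predicted           # coast on the gyro alone
        else:
            # Gyro dominates short-term; vision slowly pulls accumulated drift back
            heading = alpha * predicted + (1.0 - alpha) * vis
        fused.append(heading)
    return fused
```

Consider a constant gyro bias b = 0.05 rad/s, samples at 100 Hz, and a steady visual heading of 0. The fused estimate settles near 0.0245 rad, that is α·b·dt/(1 − α). Integrating the gyro alone drifts by 0.05 rad every second.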

Accuracy and Precision in Odometry Systems

Visual odometry uses camera data to estimate device position with high accuracy in well-lit, textured environments. Under low light or in feature-poor scenes, however, its estimates become noisy or tracking fails altogether. Inertial odometry relies on accelerometers and gyroscopes to track motion. It delivers smooth, consistent estimates in dynamic or low-visibility settings, but without external corrections its accuracy degrades as drift accumulates. Combining both systems improves overall odometry performance by pairing the long-term accuracy of visual inputs with the short-term consistency of inertial measurements.
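
Accuracy and precision are easy to conflate. One common way to separate them when evaluating an odometry system against ground truth uses three statistics:

- mean error: the systematic offset, an accuracy measure
- standard deviation of the error: the scatter, a precision measure
- RMSE: an overall figure that combines both

The sketch below computes these for 1D positions. The function name is hypothetical, and real evaluations usually compare full 3D trajectories.

```python
import math

def accuracy_and_precision(estimates, truth):
    """Summarize 1D position errors against ground truth (hypothetical helper).

    Returns (mean_error, rmse, std_dev):
      mean_error: systematic offset (bias), an accuracy measure
      rmse:       overall error magnitude, combining bias and scatter
      std_dev:    spread of the errors around their mean, a precision measure
    """
    errors = [e - t for e, t in zip(estimates, truth)]
    n = len(errors)
    mean_error = sum(errors) / n
    rmse = math.sqrt(sum(err * err for err in errors) / n)
    std_dev = math.sqrt(sum((err - mean_error) ** 2 for err in errors) / n)
    return mean_error, rmse, std_dev
```

RMSE² equals the squared mean error plus the variance. A biased system with little scatter and an unbiased but noisy system can therefore report the same RMSE.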

Sensor Integration: Combining Visual and Inertial Data

Visual odometry estimates motion by tracking visual features in camera images, while inertial odometry relies on accelerometers and gyroscopes to measure linear and angular movement. Integrating the two improves pose estimation because each compensates for the other's limitations: inertial data bridges moments of visual occlusion, and visual data corrects inertial drift. Sensor fusion algorithms such as the Extended Kalman Filter (EKF) weight visual and inertial data by their estimated uncertainties. The result is robust, real-time localization for augmented reality applications.
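
The fusion idea can be sketched with a linear Kalman filter on a single axis. IMU acceleration drives the prediction step at a high rate, and a position estimate from visual odometry corrects it whenever one arrives. This is a simplified stand-in for an EKF, which reduces to the standard Kalman filter when the model is linear. Production visual-inertial systems also estimate 3D orientation and sensor biases. The class name and noise parameters below are hypothetical.

```python
class PositionVelocityKF:
    """1D Kalman filter: IMU acceleration predicts, visual position corrects (hypothetical)."""

    def __init__(self, accel_var, vis_var):
        self.p = 0.0   # position estimate (m)
        self.v = 0.0   # velocity estimate (m/s)
        # Symmetric covariance [[P00, P01], [P01, P11]], initially uncertain
        self.P00, self.P01, self.P11 = 1.0, 0.0, 1.0
        self.q = accel_var   # assumed variance of acceleration noise, (m/s^2)^2
        self.r = vis_var     # assumed variance of visual position fixes, m^2

    def predict(self, accel, dt):
        # Constant-acceleration kinematics over one IMU step
        self.p += self.v * dt + 0.5 * accel * dt * dt
        self.v += accel * dt
        # P = F P F^T with F = [[1, dt], [0, 1]]
        P00 = self.P00 + 2 * dt * self.P01 + dt * dt * self.P11
        P01 = self.P01 + dt * self.P11
        P11 = self.P11
        # Add Q for acceleration noise entering through G = [dt^2/2, dt]
        self.P00 = P00 + self.q * dt ** 4 / 4
        self.P01 = P01 + self.q * dt ** 3 / 2
        self.P11 = P11 + self.q * dt ** 2

    def update(self, z):
        # Visual odometry observes position only: H = [1, 0]
        s = self.P00 + self.r      # innovation variance
        k0 = self.P00 / s          # gain applied to position
        k1 = self.P01 / s          # gain applied to velocity
        y = z - self.p             # innovation
        self.p += k0 * y
        self.v += k1 * y
        # P = (I - K H) P
        P11 = self.P11 - k1 * self.P01
        self.P00 = (1 - k0) * self.P00
        self.P01 = (1 - k0) * self.P01
        self.P11 = P11
```

In a simulated test, a stationary device has a 0.05 m/s² accelerometer bias, 100 Hz IMU samples, and a visual position fix every 0.1 s. IMU-only prediction drifts 2.5 m in 10 s, while the fused estimate stays close to the true position.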

Advantages of Visual Odometry in Augmented Reality

Visual odometry captures rich visual features from real-world scenes, giving augmented reality applications a detailed understanding of the environment and enabling precise localization and mapping. In feature-rich environments, and especially over longer periods, it tracks more accurately than inertial sensors alone, which suffer from accumulated drift and noise. Using camera data also lets virtual objects be anchored to real-world surfaces, improving the overall AR experience.
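
Anchoring a virtual object depends on the estimated camera pose. The object's 3D anchor point is projected into the image with a pinhole camera model, so any pose error appears directly as misplacement on screen. The sketch below makes these assumptions:

- the camera frame has z pointing forward
- the world-to-camera pose is given as a rotation matrix `R` and translation `t`
- the intrinsics `fx`, `fy`, `cx`, `cy` are in pixels

The function name is hypothetical.

```python
def project_point(point_world, R, t, fx, fy, cx, cy):
    """Project a 3D world point to pixel coordinates (hypothetical helper).

    R (3x3 nested lists) and t (length 3) map world to camera coordinates:
    X_cam = R * X_world + t, with z pointing forward out of the camera.
    Returns (u, v), or None if the point lies behind the camera.
    """
    xc = sum(R[0][j] * point_world[j] for j in range(3)) + t[0]
    yc = sum(R[1][j] * point_world[j] for j in range(3)) + t[1]
    zc = sum(R[2][j] * point_world[j] for j in range(3)) + t[2]
    if zc <= 0:
        return None  # behind the camera: nothing to draw
    # Perspective division, then scaling and offset by the intrinsics
    return fx * xc / zc + cx, fy * yc / zc + cy
```

For example, take fx = 500 px and an anchor 2 m away. A 1 cm error in the estimated camera translation shifts the anchor by 2.5 px (500 × 0.01 / 2), which is why accurate visual tracking matters for stable overlays.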

Benefits and Challenges of Inertial Odometry

Inertial odometry offers significant benefits for augmented reality: it tracks motion robustly without relying on external references, so it keeps working in GPS-denied environments. The key challenge is drift. Position is obtained by integrating acceleration twice, so small biases and noise in the inertial measurement unit (IMU) accumulate and degrade positional accuracy over time. Effective sensor fusion algorithms and periodic recalibration are essential to limit this error and maintain long-term tracking performance.
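
A short worked example shows why this drift grows so quickly. Assume, purely for illustration, that the only error is a constant accelerometer bias b; real IMUs also have noise and time-varying bias. Integrating the bias once gives a velocity error, and integrating again gives a position error:

```latex
\delta v(t) = \int_0^t b \, d\tau = b\,t,
\qquad
\delta p(t) = \int_0^t \delta v(\tau) \, d\tau = \tfrac{1}{2}\, b\, t^2
```

With an illustrative bias of b = 0.01 m/s², the position error is 0.5 m after 10 s but 18 m after 60 s. Six times the duration yields thirty-six times the error.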

Use Cases in AR: Visual vs Inertial Odometry

Visual odometry excels in AR applications requiring precise environmental mapping and detailed spatial understanding, such as indoor navigation and object placement in complex settings. Inertial odometry is preferred for scenarios demanding robust performance in low-visibility conditions or rapid motion tracking, including AR gaming and fitness tracking. Combining both methods enhances overall tracking accuracy and reliability across diverse AR use cases.

Future Trends in Odometry for AR Applications

Future trends in odometry for augmented reality emphasize combining the rich environmental mapping of visual odometry with the fast motion detection of inertial odometry to improve accuracy and robustness. Machine learning algorithms are increasingly used to fuse sensor data, improving real-time pose estimation in dynamic and occluded environments. Edge computing and AI accelerators aim to reduce latency and power consumption, enabling seamless AR experiences on mobile and wearable devices.

Visual Odometry vs Inertial Odometry Infographic

techiny.com
