Plane detection in augmented reality identifies flat surfaces like floors and tables, enabling virtual objects to be placed realistically within a physical space. Point detection, on the other hand, tracks individual feature points on objects or environments to anchor virtual elements with precision. Both techniques are essential for stable and immersive AR experiences, with plane detection prioritizing spatial understanding and point detection enhancing detailed interaction.

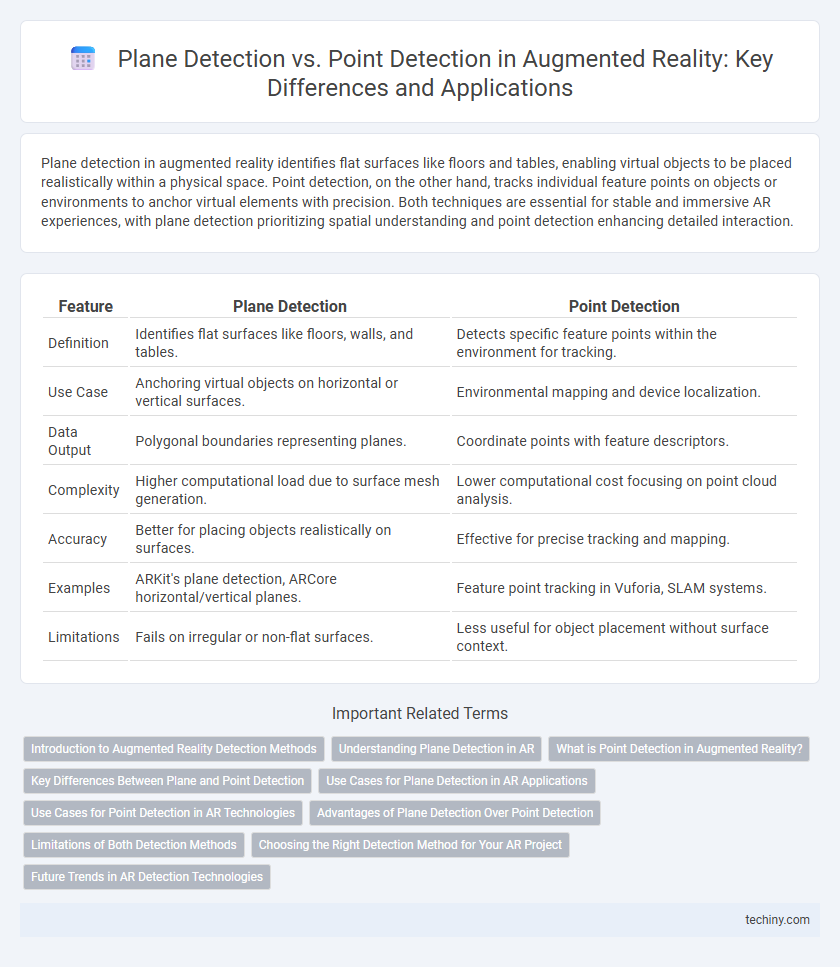

Table of Comparison

| Feature | Plane Detection | Point Detection |
|---|---|---|
| Definition | Identifies flat surfaces such as floors, walls, and tables. | Detects distinctive feature points in the environment for tracking. |
| Use Case | Anchoring virtual objects on horizontal or vertical surfaces. | Environmental mapping and device localization. |
| Data Output | Polygonal boundaries representing planes. | Coordinate points with feature descriptors. |
| Complexity | Higher computational load, since planes must be fitted to tracked points and their boundary geometry generated. | Lower computational cost; works directly on the sparse point cloud. |
| Strengths | Realistic placement of objects on surfaces. | Precise tracking and mapping. |
| Examples | ARKit plane detection; ARCore horizontal/vertical planes. | Feature point tracking in Vuforia and SLAM systems. |
| Limitations | Fails on irregular or non-flat surfaces. | Less useful for object placement without surface context. |

Introduction to Augmented Reality Detection Methods

Plane detection identifies flat surfaces like floors and walls to anchor virtual objects realistically, creating immersive AR experiences. Point detection tracks distinct feature points in the environment to map spatial positions, enabling precise object placement and interaction. Both methods are fundamental in augmented reality, providing complementary data for accurate scene understanding and robust visualization.

Understanding Plane Detection in AR

Plane detection in augmented reality identifies flat surfaces such as floors, walls, and tabletops so that virtual objects can be anchored realistically. Advanced AR systems combine depth sensing with feature point analysis to map these planes accurately, enabling stable placement of, and interaction with, digital content in physical space. By giving the system a model of the surrounding surfaces, plane detection improves spatial awareness and helps virtual elements blend seamlessly into the real environment.
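
ARKit and ARCore do not document exactly how they extract planes from tracked points, but a common textbook technique is RANSAC: repeatedly fit a plane through three randomly chosen points and keep the candidate that the most points agree with. The sketch below assumes the input is a list of 3D feature points in metres; the function names, the 1 cm `threshold`, and the iteration count are illustrative choices, not any framework's API.

```python
# Illustrative RANSAC plane fit; names and parameters are hypothetical,
# not part of ARKit or ARCore.
import random
from typing import List, Optional, Tuple

Vec3 = Tuple[float, float, float]
Plane = Tuple[Vec3, float]  # (unit normal n, offset d) for the plane n·p + d = 0


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def plane_through(p1: Vec3, p2: Vec3, p3: Vec3) -> Optional[Plane]:
    """Plane through three points, or None if they are (nearly) collinear."""
    n = cross(sub(p2, p1), sub(p3, p1))
    length = dot(n, n) ** 0.5
    if length < 1e-9:
        return None
    n = (n[0] / length, n[1] / length, n[2] / length)
    return n, -dot(n, p1)


def ransac_plane(points: List[Vec3], threshold: float = 0.01,
                 iterations: int = 200, seed: int = 0):
    """Return (plane, inliers) for the candidate plane supported by the most points."""
    rng = random.Random(seed)
    best_plane, best_inliers = None, []
    for _ in range(iterations):
        plane = plane_through(*rng.sample(points, 3))
        if plane is None:
            continue
        n, d = plane
        # A point supports the plane if its distance to it is below the threshold.
        inliers = [p for p in points if abs(dot(n, p) + d) < threshold]
        if len(inliers) > len(best_inliers):
            best_plane, best_inliers = plane, inliers
    return best_plane, best_inliers


# Example: 50 points on a floor at y = 0 plus 10 points of clutter above it.
rng = random.Random(1)
floor = [(rng.uniform(-1, 1), 0.0, rng.uniform(-1, 1)) for _ in range(50)]
clutter = [(rng.uniform(-1, 1), rng.uniform(0.2, 1.0), rng.uniform(-1, 1)) for _ in range(10)]
plane, inliers = ransac_plane(floor + clutter)
print(plane[0], len(inliers))  # normal along ±y, 50 inliers
```

Because the winning plane is chosen by vote, the clutter points above the floor do not pull the fit off the surface, which is the main reason RANSAC-style fitting is popular for noisy point clouds.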

What is Point Detection in Augmented Reality?

Point detection in augmented reality identifies specific feature points or landmarks in the environment to anchor virtual objects accurately. It analyzes distinctive visual cues such as corners, edges, and textured areas to track spatial positions in real time. Because these point features are continuously updated as the user moves, the method supports detailed interaction with complex surfaces and dynamic scenes.
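
To make "corners" concrete, the sketch below implements the classic Harris corner detector on a tiny grayscale image stored as nested lists. It is one well-known way to find such cues; ARKit and ARCore do not publish which detector they use, so treat this as an illustration. The function name, the 3x3 window, `k = 0.04`, and the threshold are illustrative assumptions.

```python
# Illustrative Harris corner detector; not the detector used by any AR framework.
from typing import List, Tuple

Image = List[List[float]]


def harris_corners(img: Image, k: float = 0.04,
                   threshold: float = 1e-3) -> List[Tuple[int, int]]:
    """Return (row, col) pixels whose Harris response exceeds threshold and is a local max."""
    h, w = len(img), len(img[0])
    # Central-difference image gradients (left at zero on the border).
    ix = [[0.0] * w for _ in range(h)]
    iy = [[0.0] * w for _ in range(h)]
    for r in range(1, h - 1):
        for c in range(1, w - 1):
            ix[r][c] = (img[r][c + 1] - img[r][c - 1]) / 2
            iy[r][c] = (img[r + 1][c] - img[r - 1][c]) / 2
    # Response R = det(M) - k * trace(M)^2, with M summed over a 3x3 window.
    resp = [[0.0] * w for _ in range(h)]
    for r in range(1, h - 1):
        for c in range(1, w - 1):
            sxx = syy = sxy = 0.0
            for dr in (-1, 0, 1):
                for dc in (-1, 0, 1):
                    gx, gy = ix[r + dr][c + dc], iy[r + dr][c + dc]
                    sxx += gx * gx
                    syy += gy * gy
                    sxy += gx * gy
            resp[r][c] = sxx * syy - sxy * sxy - k * (sxx + syy) ** 2
    # Keep strong responses that are maxima in their 3x3 neighbourhood.
    corners = []
    for r in range(1, h - 1):
        for c in range(1, w - 1):
            v = resp[r][c]
            if v > threshold and all(v >= resp[r + dr][c + dc]
                                     for dr in (-1, 0, 1) for dc in (-1, 0, 1)):
                corners.append((r, c))
    return corners


# Example: a bright 4x4 square on a dark 10x10 background has four corners.
square = [[1.0 if 3 <= r <= 6 and 3 <= c <= 6 else 0.0 for c in range(10)] for r in range(10)]
print(harris_corners(square))  # [(3, 3), (3, 6), (6, 3), (6, 6)]
flat = [[0.5] * 10 for _ in range(10)]
print(harris_corners(flat))    # []
```

Along a straight edge only one gradient direction is present, so the response is negative; only where two directions meet (a corner) does it become strongly positive. The uniform patch yields no points at all, which is the same weakness that makes smooth, texture-less surfaces hard to track.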

Key Differences Between Plane and Point Detection

Plane detection identifies flat surfaces like floors, walls, or tables by analyzing clusters of points to create a continuous geometric plane, enabling realistic placement of virtual objects. Point detection focuses on recognizing distinct feature points or landmarks within the environment without forming surfaces, often used for tracking and mapping purposes. The key difference lies in plane detection's ability to define extended surfaces, while point detection provides discrete reference points critical for spatial understanding and augmented reality stability.
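
The difference in output can be sketched in a few lines: point detection hands back the discrete points themselves, while plane detection turns a cluster of coplanar points into an extended surface with a boundary. The sketch below assumes the inliers lie on a horizontal plane, projects them onto its (x, z) axes, and takes their convex hull (Andrew's monotone chain) as the boundary polygon. A convex hull is only one simple choice; real frameworks may report different or non-convex outlines, and the function names are hypothetical.

```python
# Illustrative boundary extraction for a detected plane; names are hypothetical.
from typing import List, Tuple

P2 = Tuple[float, float]


def cross(o: P2, a: P2, b: P2) -> float:
    return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])


def convex_hull(points: List[P2]) -> List[P2]:
    """Andrew's monotone chain: hull vertices counter-clockwise, collinear points dropped."""
    pts = sorted(set(points))
    if len(pts) <= 2:
        return pts
    lower: List[P2] = []
    for p in pts:
        while len(lower) >= 2 and cross(lower[-2], lower[-1], p) <= 0:
            lower.pop()
        lower.append(p)
    upper: List[P2] = []
    for p in reversed(pts):
        while len(upper) >= 2 and cross(upper[-2], upper[-1], p) <= 0:
            upper.pop()
        upper.append(p)
    return lower[:-1] + upper[:-1]


def polygon_area(poly: List[P2]) -> float:
    """Shoelace formula for the area enclosed by the boundary."""
    pairs = zip(poly, poly[1:] + poly[:1])
    return abs(sum(x1 * y2 - x2 * y1 for (x1, y1), (x2, y2) in pairs)) / 2


# Example: inlier points of a horizontal plane (x, y=0, z) projected onto (x, z).
inliers = [(x, 0.0, z) for x in (0.0, 1.0, 2.0) for z in (0.0, 1.0, 2.0)]
boundary = convex_hull([(x, z) for x, _, z in inliers])
print(boundary, polygon_area(boundary))  # [(0.0, 0.0), (2.0, 0.0), (2.0, 2.0), (0.0, 2.0)] 4.0
```

Nine discrete points become a four-vertex polygon covering 4 square metres, which is the kind of extended surface an application can place content on.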

Use Cases for Plane Detection in AR Applications

Plane detection in augmented reality (AR) is essential for accurate placement of virtual objects on flat surfaces such as floors, tables, and walls, enhancing user interaction and immersion in applications like interior design, gaming, and navigation. Unlike point detection, which identifies specific feature points, plane detection enables stable and realistic object anchoring by recognizing the orientation and boundaries of horizontal and vertical planes. This functionality supports complex AR experiences including spatial mapping, object placement, and real-world environment understanding.
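
Recognizing "orientation and boundaries" boils down to two small geometric checks, sketched below under stated assumptions: the world frame is gravity-aligned with +y pointing up, plane normals are unit length, and the boundary is a 2D polygon in the plane's own coordinates. The 10-degree tolerance, the function names, and the table-top dimensions are hypothetical.

```python
# Illustrative orientation and boundary checks; names and tolerance are hypothetical.
import math
from typing import List, Tuple

Vec3 = Tuple[float, float, float]
P2 = Tuple[float, float]


def classify_plane(normal: Vec3, tolerance_deg: float = 10.0) -> str:
    """Label a plane by the angle between its unit normal and world up (+y)."""
    cos_up = max(-1.0, min(1.0, normal[1]))
    angle = math.degrees(math.acos(abs(cos_up)))  # 0 = horizontal, 90 = vertical
    if angle <= tolerance_deg:
        return "horizontal"
    if angle >= 90.0 - tolerance_deg:
        return "vertical"
    return "tilted"


def inside_polygon(pt: P2, poly: List[P2]) -> bool:
    """Even-odd ray-casting test: is pt within the plane's boundary polygon?"""
    x, y = pt
    inside = False
    for (x1, y1), (x2, y2) in zip(poly, poly[1:] + poly[:1]):
        if (y1 > y) != (y2 > y):  # edge straddles the horizontal line through pt
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


print(classify_plane((0.0, 1.0, 0.0)))  # horizontal (floor or table)
print(classify_plane((1.0, 0.0, 0.0)))  # vertical (wall)
table_top = [(0.0, 0.0), (1.2, 0.0), (1.2, 0.8), (0.0, 0.8)]  # hypothetical boundary, metres
print(inside_polygon((0.6, 0.4), table_top), inside_polygon((1.5, 0.4), table_top))  # True False
```

Taking the absolute value of the normal's y component treats a ceiling (normal pointing down) as horizontal too; an application that only wants floors and tables would additionally require the normal to point up.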

Use Cases for Point Detection in AR Technologies

Point detection in augmented reality (AR) excels at detailed object recognition and surface mapping, enabling applications such as interactive gaming, precise object placement, and real-time environment scanning. Unlike plane detection, which identifies flat surfaces, point detection captures the fine-grained spatial features needed for AR navigation, gesture recognition, and object tracking. Industries such as retail, manufacturing, and healthcare use point detection to provide accurate spatial awareness, so that virtual content stays aligned with the real scene as users interact with it.
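
Tracking an object with point detection means re-finding the same feature points from one frame to the next. A common approach is to describe each point with a binary descriptor (ORB, for example, uses 256 bits), compare descriptors by Hamming distance, and apply Lowe's ratio test to reject ambiguous matches. The sketch below uses toy 16-bit integers as descriptors; the function names and the 0.8 ratio are illustrative assumptions, and production trackers typically add motion prediction and geometric verification on top.

```python
# Illustrative descriptor matching between two frames; names are hypothetical.
from typing import List, Tuple


def hamming(a: int, b: int) -> int:
    """Number of differing bits between two binary descriptors."""
    return bin(a ^ b).count("1")


def match_descriptors(prev: List[int], curr: List[int],
                      ratio: float = 0.8) -> List[Tuple[int, int]]:
    """Match each previous-frame descriptor to its nearest current-frame descriptor.

    A match is kept only if it is clearly better than the second-best candidate
    (the ratio test), so ambiguous points are dropped instead of guessed.
    """
    matches: List[Tuple[int, int]] = []
    if len(curr) < 2:
        return matches  # the ratio test needs at least two candidates
    for i, d in enumerate(prev):
        ranked = sorted((hamming(d, c), j) for j, c in enumerate(curr))
        (best, j), (second, _) = ranked[0], ranked[1]
        if best < ratio * second:
            matches.append((i, j))
    return matches


# Example with toy 16-bit descriptors: the first two points moved and changed
# slightly between frames; the third has no clear counterpart.
prev_frame = [0xF0F0, 0x0F0F, 0xFFFF]
curr_frame = [0x0F0E, 0xF0F1, 0x00FF]
print(match_descriptors(prev_frame, curr_frame))  # [(0, 1), (1, 0)]
```

The third descriptor's best candidate (7 differing bits) is not clearly better than its second-best (8 bits), so it is left unmatched rather than risking a wrong association.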

Advantages of Plane Detection Over Point Detection

Plane detection in augmented reality provides enhanced spatial awareness by identifying flat surfaces such as floors, tables, and walls, enabling more realistic object placement and interaction. Unlike point detection, plane detection facilitates stable anchoring of virtual objects, reducing jitter and improving user experience in AR applications. This capability supports complex scene understanding, which is crucial for gaming, interior design, and industrial applications.
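
One way to see why a plane anchor jitters less than a single point: if each of N feature points is observed with independent, zero-mean noise of standard deviation σ, the least-squares height of a horizontal plane is their mean, whose standard deviation is σ/√N. The simulation below illustrates that argument. The independent Gaussian noise model, the 1 cm noise level, and the function name are assumptions for illustration; real tracking errors are often correlated (for example, drift), so practical gains are smaller.

```python
# Illustrative jitter simulation under an assumed independent-noise model.
import random
import statistics


def anchor_jitter(n_points: int, noise: float = 0.01,
                  frames: int = 500, seed: int = 0) -> float:
    """Frame-to-frame standard deviation of an anchor's height, in metres.

    Each frame observes n_points feature points on a floor at true height 0,
    each with independent Gaussian noise of std `noise`. The anchor height is
    the least-squares horizontal-plane estimate, i.e. the mean of the samples.
    n_points=1 stands in for anchoring to a single feature point.
    """
    rng = random.Random(seed)
    estimates = []
    for _ in range(frames):
        samples = [rng.gauss(0.0, noise) for _ in range(n_points)]
        estimates.append(statistics.fmean(samples))
    return statistics.pstdev(estimates)


single = anchor_jitter(1)
plane = anchor_jitter(100)
print(f"single point: {single:.4f} m, plane from 100 points: {plane:.4f} m")
```

With 100 supporting points the simulated jitter drops by roughly a factor of √100 = 10 compared with a single point.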

Limitations of Both Detection Methods

Plane detection in augmented reality struggles with irregular surfaces and low-texture environments, often missing flat planes or producing inaccurate results. Point detection is limited by its dependency on distinct feature points, which can be sparse in smooth or uniform areas, leading to unstable tracking. Both methods face challenges under varying lighting conditions and dynamic scenes, impacting overall AR performance and user experience.

Choosing the Right Detection Method for Your AR Project

Plane detection excels in identifying flat surfaces such as floors, walls, and tables, making it ideal for AR applications that require stable object placement and interaction within a structured environment. Point detection captures specific feature points or textures in the environment, offering greater flexibility for dynamic or irregular surfaces and enhancing tracking accuracy in complex scenes. Selecting the appropriate detection method depends on your AR project's spatial requirements, the nature of user interactions, and the environmental complexity to ensure optimal performance and user experience.
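
In practice the two methods are often combined rather than chosen exclusively: an app can try to place content on a detected plane first and fall back to a nearby feature point when no plane is hit. The routine below is a hypothetical sketch of that strategy, not a framework API. It assumes planes are given as (point, unit normal) pairs, the camera ray as an origin and direction, and uses an arbitrary 5 cm `max_offset` for the feature-point fallback.

```python
# Hypothetical placement routine: prefer planes, fall back to feature points.
import math
from typing import List, Optional, Tuple

Vec3 = Tuple[float, float, float]
Plane = Tuple[Vec3, Vec3]  # (a point on the plane, unit normal)


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def along(origin: Vec3, direction: Vec3, t: float) -> Vec3:
    return (origin[0] + t * direction[0],
            origin[1] + t * direction[1],
            origin[2] + t * direction[2])


def place_object(origin: Vec3, direction: Vec3, planes: List[Plane],
                 feature_points: List[Vec3],
                 max_offset: float = 0.05) -> Optional[Tuple[str, Vec3]]:
    """Return ("plane", hit) for the nearest plane in front of the camera,
    else ("point", p) for the feature point closest to the ray, else None."""
    best_t = math.inf
    for point, normal in planes:
        denom = dot(direction, normal)
        if abs(denom) > 1e-9:  # skip planes parallel to the ray
            t = dot(sub(point, origin), normal) / denom
            if 0 < t < best_t:  # in front of the camera and nearer than before
                best_t = t
    if best_t < math.inf:
        return "plane", along(origin, direction, best_t)
    # Fallback: perpendicular distance from each feature point to the ray.
    d_len2 = dot(direction, direction)
    best = None
    for p in feature_points:
        t = dot(sub(p, origin), direction) / d_len2
        if t <= 0:
            continue  # behind the camera
        offset = math.dist(p, along(origin, direction, t))
        if offset <= max_offset and (best is None or offset < best[0]):
            best = (offset, p)
    return ("point", best[1]) if best else None


cam = (0.0, 1.5, 0.0)  # camera 1.5 m above the floor
floor_plane = ((0.0, 0.0, 0.0), (0.0, 1.0, 0.0))
print(place_object(cam, (0.0, -1.0, -1.0), [floor_plane], []))
# ('plane', (0.0, 0.0, -1.5))
print(place_object(cam, (0.0, 0.0, -1.0), [floor_plane], [(0.01, 1.5, -2.0)]))
# ('point', (0.01, 1.5, -2.0))
```

The second call looks straight ahead, parallel to the floor, so no plane is hit and the routine settles for the feature point 1 cm off the ray. A structured environment will mostly take the plane branch, while cluttered or irregular scenes rely more on the fallback.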

Future Trends in AR Detection Technologies

Future trends in AR detection technologies emphasize enhanced plane detection through machine learning algorithms that improve the accuracy and speed of spatial mapping in dynamic environments. Point detection is evolving with advanced feature extraction techniques using deep neural networks to enable more precise and scalable 3D object recognition. Combining multimodal sensor data such as LiDAR and RGB cameras will drive the next generation of AR detection, enabling richer contextual awareness and interaction fidelity.

Plane Detection vs Point Detection Infographic

techiny.com