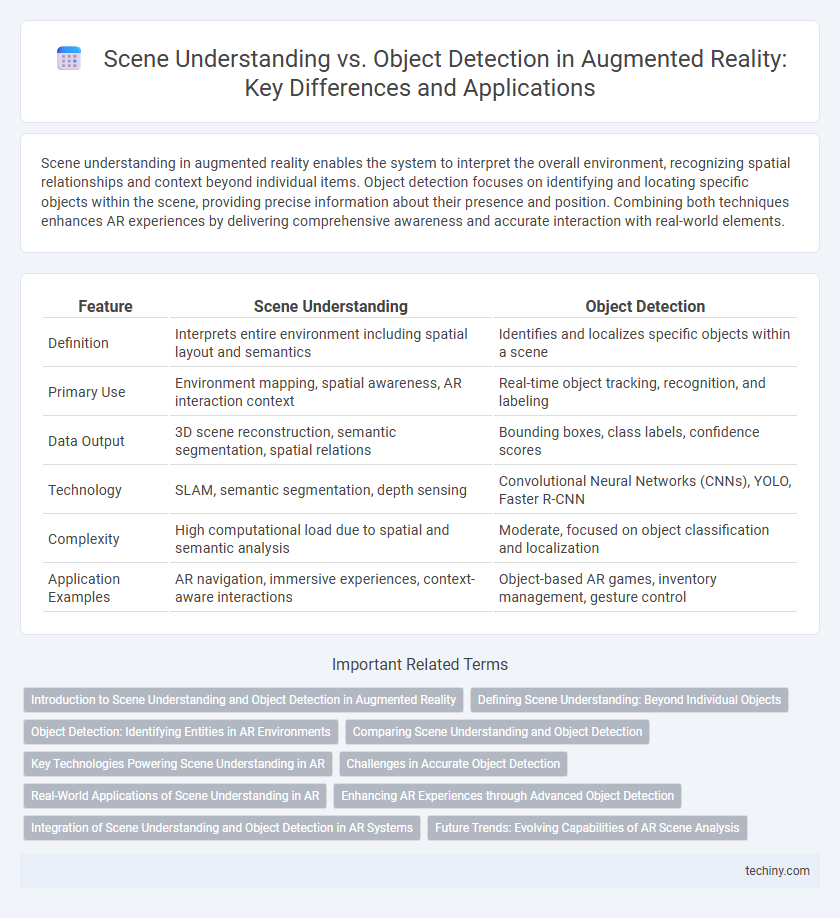

Scene understanding in augmented reality enables the system to interpret the overall environment, recognizing spatial relationships and context beyond individual items. Object detection focuses on identifying and locating specific objects within the scene, providing precise information about their presence and position. Combining both techniques enhances AR experiences by delivering comprehensive awareness and accurate interaction with real-world elements.

Table of Comparison

| Feature | Scene Understanding | Object Detection |
|---|---|---|
| Definition | Interprets entire environment including spatial layout and semantics | Identifies and localizes specific objects within a scene |
| Primary Use | Environment mapping, spatial awareness, AR interaction context | Real-time object tracking, recognition, and labeling |
| Data Output | 3D scene reconstruction, semantic segmentation, spatial relations | Bounding boxes, class labels, confidence scores |
| Technology | SLAM, semantic segmentation, depth sensing | Convolutional Neural Networks (CNNs), YOLO, Faster R-CNN |
| Complexity | High computational load due to spatial and semantic analysis | Moderate, focused on object classification and localization |
| Application Examples | AR navigation, immersive experiences, context-aware interactions | Object-based AR games, inventory management, gesture control |
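
The Data Output row can be made concrete with a pair of record types. The names below (`Detection`, `ScenePlane`, `SceneModel`, `related_to`) are hypothetical and do not come from any particular AR SDK. A detector emits a flat list of labeled boxes with scores, while a scene model holds geometry, semantics, and relations between elements.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Detection:
    """One object-detection result in image space (hypothetical schema)."""
    label: str                                  # class label, e.g. "chair"
    score: float                                # confidence in [0, 1]
    box: tuple[float, float, float, float]      # (x_min, y_min, x_max, y_max) in pixels


@dataclass
class ScenePlane:
    """A reconstructed surface in world space (hypothetical schema)."""
    semantic: str                               # e.g. "floor", "wall", "table"
    normal: tuple[float, float, float]          # unit normal vector
    offset: float                               # plane equation: normal . p + offset = 0


@dataclass
class SceneModel:
    """Scene-level output: geometry, semantics, and relations between elements."""
    planes: list[ScenePlane] = field(default_factory=list)
    # Relations stored as (subject, predicate, object) triples, e.g. ("cup", "on", "table").
    relations: list[tuple[str, str, str]] = field(default_factory=list)

    def related_to(self, name: str) -> list[tuple[str, str, str]]:
        """Return every relation that mentions `name` as subject or object."""
        return [r for r in self.relations if name in (r[0], r[2])]
```

For example, a scene containing the relations `("cup", "on", "table")` and `("table", "on", "floor")` returns both triples from `related_to("table")`. A list of `Detection` records has no equivalent, because it says nothing about how the objects relate.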

Introduction to Scene Understanding and Object Detection in Augmented Reality

Scene understanding in augmented reality involves comprehensively interpreting the physical environment by analyzing spatial relationships, surfaces, and contextual elements so that virtual objects can be integrated realistically. Object detection focuses on identifying and localizing specific items within the environment using computer vision techniques such as convolutional neural networks (CNNs) and other deep learning models. Combining scene understanding and object detection improves AR experiences by providing both accurate environmental mapping and precise interaction with real-world objects.

Defining Scene Understanding: Beyond Individual Objects

Scene understanding in augmented reality involves interpreting the spatial and contextual relationships between multiple elements in an environment, rather than just identifying individual objects. It integrates depth estimation, surface reconstruction, and semantic segmentation to create a coherent representation of the surroundings. This holistic approach enables more immersive and interactive AR experiences by providing meaningful context beyond isolated object detection.
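
Depth estimation is what lets scene understanding move from pixels to 3D geometry. The following is a minimal sketch that assumes an ideal pinhole camera with known intrinsics. The parameters `fx`, `fy`, `cx`, `cy` are assumptions, and lens distortion is ignored. It back-projects a depth map into a point cloud that surface reconstruction could then consume.

```python
def backproject(depth, fx, fy, cx, cy):
    """Convert a depth map (rows of metric depths, 0 = no reading) into 3D points.

    Uses the pinhole model: X = (u - cx) * Z / fx, Y = (v - cy) * Z / fy.
    Points are expressed in the camera frame, with image rows (v) growing downward.
    """
    points = []
    for v, row in enumerate(depth):        # v = pixel row
        for u, z in enumerate(row):        # u = pixel column
            if z <= 0:                     # skip pixels with no valid depth
                continue
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append((x, y, z))
    return points
```

Real depth maps are far larger and would normally be processed with vectorized array code. The loop form is used here only to show the per-pixel arithmetic.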

Object Detection: Identifying Entities in AR Environments

Object detection in augmented reality (AR) focuses on accurately identifying and classifying entities within the environment to enable real-time interaction and enhanced user experiences. Unlike scene understanding, which interprets the overall context and spatial layout, object detection provides precise localization and recognition of individual objects such as furniture, signage, or interactive elements. Single-stage detectors such as YOLO and SSD predict object classes and bounding boxes in a single pass over the image, which makes them fast enough for real-time use in AR applications for retail, gaming, and industrial maintenance.
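
Detectors such as YOLO and SSD typically produce several overlapping candidate boxes for the same object. A post-processing step called non-maximum suppression (NMS) then keeps only the strongest one. The sketch below is a plain greedy NMS over `(x_min, y_min, x_max, y_max)` boxes. The 0.5 IoU threshold is an assumed default, and real frameworks usually run a vectorized, per-class version.

```python
def iou(a, b):
    """Intersection-over-union of two boxes given as (x_min, y_min, x_max, y_max)."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def nms(boxes, scores, iou_threshold=0.5):
    """Greedy non-maximum suppression; returns indices of the boxes to keep."""
    # Visit candidates from highest to lowest confidence.
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        # Keep a box only if it does not overlap too much with any already-kept box.
        if all(iou(boxes[i], boxes[k]) <= iou_threshold for k in keep):
            keep.append(i)
    return keep
```

With boxes `(0, 0, 10, 10)` and `(1, 1, 11, 11)` (IoU of about 0.68) plus a distant third box, the lower-scoring duplicate is suppressed and the other two are kept.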

Comparing Scene Understanding and Object Detection

Scene understanding in augmented reality provides comprehensive spatial awareness by interpreting the entire environment, including surfaces, boundaries, and spatial relationships, while object detection identifies and classifies individual objects within a scene. Scene understanding enables more immersive and interactive AR experiences through semantic mapping and context recognition, whereas object detection focuses on pinpointing and tracking discrete items for targeted interaction. Both rely on computer vision algorithms, but scene understanding fuses information into a holistic model of the environment, while object detection analyzes localized regions of the image.
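
One way to see the difference is that a detector reports each object on its own, while scene understanding reasons about how objects relate. The hypothetical helpers below infer an "on top of" relation from axis-aligned 3D bounding boxes. The box layout, the y-up convention, and the 2 cm tolerance are illustrative assumptions, not a standard definition.

```python
def is_on(top, bottom, tolerance=0.02):
    """Return True if box `top` rests on box `bottom`.

    Boxes are axis-aligned, in metres: (x_min, y_min, z_min, x_max, y_max, z_max),
    with y pointing up. "On" means the base of `top` lies within `tolerance`
    of the upper face of `bottom` and their horizontal footprints overlap.
    """
    touching = abs(top[1] - bottom[4]) <= tolerance
    overlap_x = min(top[3], bottom[3]) > max(top[0], bottom[0])
    overlap_z = min(top[5], bottom[5]) > max(top[2], bottom[2])
    return touching and overlap_x and overlap_z


def on_relations(boxes):
    """Build (subject, "on", object) triples from a dict of named 3D boxes."""
    return [(a, "on", b)
            for a in boxes for b in boxes
            if a != b and is_on(boxes[a], boxes[b])]
```

Given a table box and a cup box resting on it, `on_relations` yields `("cup", "on", "table")`. That is a fact about the scene, not about either object alone.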

Key Technologies Powering Scene Understanding in AR

Scene understanding in augmented reality relies on technologies such as simultaneous localization and mapping (SLAM), depth sensing, and semantic segmentation to build accurate spatial maps and interpret environmental context. Together, these technologies let AR systems identify surfaces, objects, and spatial relationships, supporting immersive and interactive experiences that go beyond simple object detection. Machine learning models further allow the system to continuously adapt and refine its environmental model in real time.
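
Plane detection is one of the first things an AR scene-understanding pipeline extracts from the point cloud that SLAM and depth sensing produce. A common textbook approach is RANSAC plane fitting. The sketch below shows that generic technique, not the method of any particular SDK. The iteration count and the 1 cm inlier threshold are assumptions.

```python
import math
import random


def fit_plane_ransac(points, iterations=200, threshold=0.01, seed=0):
    """Find the dominant plane in a 3D point cloud with RANSAC.

    Returns (normal, d, inliers) for the plane normal . p + d = 0, where
    `normal` is a unit vector and `inliers` are the points within `threshold`.
    """
    rng = random.Random(seed)              # seeded for reproducible results
    best = (None, 0.0, [])
    for _ in range(iterations):
        p1, p2, p3 = rng.sample(points, 3)
        # Plane normal from the cross product of two edge vectors.
        u = [p2[i] - p1[i] for i in range(3)]
        v = [p3[i] - p1[i] for i in range(3)]
        n = [u[1] * v[2] - u[2] * v[1],
             u[2] * v[0] - u[0] * v[2],
             u[0] * v[1] - u[1] * v[0]]
        length = math.sqrt(sum(c * c for c in n))
        if length < 1e-9:                  # samples are (nearly) collinear; try again
            continue
        n = [c / length for c in n]
        d = -sum(n[i] * p1[i] for i in range(3))
        # Count points whose distance to the candidate plane is within the threshold.
        inliers = [p for p in points
                   if abs(sum(n[i] * p[i] for i in range(3)) + d) <= threshold]
        if len(inliers) > len(best[2]):
            best = (tuple(n), d, inliers)
    return best
```

On a point cloud made of a floor grid at `y = 0` plus a few points floating above it, the returned normal points along the y axis and the inliers are exactly the floor points.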

Challenges in Accurate Object Detection

Accurate object detection in augmented reality faces challenges such as varying lighting conditions, occlusions, and complex backgrounds, all of which hinder precise identification and tracking. Contextual information from scene understanding helps distinguish objects with similar features and keep detections spatially consistent in dynamic environments. Deep learning algorithms and sensor fusion (for example, combining camera images with depth data) are key to overcoming these obstacles and improving real-time detection accuracy.
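
Lighting changes and brief occlusions often make per-frame detector confidence flicker, which in AR shows up as labels popping in and out. One common mitigation is to smooth each object's score over time and apply hysteresis, so that a short dip does not immediately hide the overlay. The sketch below assumes its parameters: the smoothing factor and the show/hide thresholds are illustrative, not tuned values.

```python
class StableDetection:
    """Smooths one object's detection confidence across frames (illustrative sketch)."""

    def __init__(self, alpha=0.3, show_at=0.6, hide_at=0.3):
        self.alpha = alpha        # weight of the newest frame in the moving average
        self.show_at = show_at    # smoothed score needed to start showing the overlay
        self.hide_at = hide_at    # smoothed score below which the overlay is hidden
        self.score = 0.0
        self.visible = False

    def update(self, frame_score):
        """Feed this frame's score (0.0 if the object was not detected); return visibility."""
        # Exponential moving average of the per-frame confidence.
        self.score = self.alpha * frame_score + (1 - self.alpha) * self.score
        # Hysteresis: different thresholds for appearing and disappearing.
        if not self.visible and self.score >= self.show_at:
            self.visible = True
        elif self.visible and self.score < self.hide_at:
            self.visible = False
        return self.visible
```

With these defaults, an object detected at 0.9 confidence becomes visible on the fourth frame and survives two fully occluded frames before it is hidden.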

Real-World Applications of Scene Understanding in AR

Scene understanding in augmented reality (AR) enables accurate spatial mapping and environmental context recognition, essential for interactive experiences like indoor navigation, furniture placement, and immersive gaming. Unlike object detection, which identifies and classifies individual items, scene understanding interprets the entire environment, facilitating realistic occlusion, lighting adaptation, and multi-surface interaction. Real-world applications rely heavily on scene understanding to deliver seamless integration of virtual content with physical surroundings, improving user engagement and situational awareness.
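
Realistic occlusion is a concrete case where scene understanding matters, because a virtual object should disappear behind a real one. The minimal sketch below is a per-pixel depth comparison. It assumes the renderer provides a depth value for the virtual content and the AR system provides a real-world depth map at the same resolution, both in metres, with `None` meaning no data.

```python
def occlusion_mask(virtual_depth, real_depth):
    """Per-pixel visibility of virtual content against the real scene.

    A virtual pixel is drawn only if it is closer to the camera than the
    real surface at that pixel. `None` in virtual_depth means no virtual
    content there; `None` in real_depth means no depth reading, in which
    case the virtual pixel is drawn.
    """
    mask = []
    for v_row, r_row in zip(virtual_depth, real_depth):
        row = []
        for v, r in zip(v_row, r_row):
            if v is None:
                row.append(False)          # nothing virtual to draw here
            elif r is None:
                row.append(True)           # unknown real depth: draw anyway
            else:
                row.append(v < r)          # draw only if in front of the real surface
        mask.append(row)
    return mask
```

In practice this comparison usually runs on the GPU as part of the depth test. The Python loop only illustrates the logic.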

Enhancing AR Experiences through Advanced Object Detection

Advanced object detection technologies enhance augmented reality experiences by enabling accurate identification and real-time tracking of multiple objects within complex scenes. Deep learning models such as convolutional neural networks (CNNs) recognize objects across a wide range of shapes and sizes and cope better with partial occlusion. Because detectors separate individual object instances, they complement scene understanding approaches based on semantic segmentation, which label pixels by class without distinguishing one object from another. This object-level awareness allows for more interactive and immersive AR applications and smoother integration of virtual elements with the physical environment.
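
Real-time tracking of multiple objects usually means linking each new frame's detections to existing tracks. A simple, widely used baseline is greedy matching on intersection-over-union (IoU). The sketch below implements that baseline with an assumed 0.3 IoU threshold. It is not the association method of any specific tracker, and many trackers use Hungarian matching together with motion models instead.

```python
def iou(a, b):
    """Intersection-over-union of two (x_min, y_min, x_max, y_max) boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def associate(tracks, detections, min_iou=0.3):
    """Greedily match track boxes to detection boxes by descending IoU.

    `tracks` and `detections` are dicts mapping an id to a box.
    Returns (matches, unmatched_detection_ids); matches maps track id -> detection id.
    """
    pairs = sorted(
        ((iou(tb, db), t, d) for t, tb in tracks.items() for d, db in detections.items()),
        key=lambda p: p[0],
        reverse=True,
    )
    matches, used_dets = {}, set()
    for score, t, d in pairs:
        if score < min_iou:
            break                           # remaining pairs overlap too little
        if t in matches or d in used_dets:
            continue                        # each track and detection is matched at most once
        matches[t] = d
        used_dets.add(d)
    # Unmatched detections would typically start new tracks.
    unmatched = [d for d in detections if d not in used_dets]
    return matches, unmatched
```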

Integration of Scene Understanding and Object Detection in AR Systems

Integration of scene understanding and object detection in AR systems enhances the accuracy and contextual awareness of virtual overlays, enabling more seamless interactions between digital content and the physical environment. Advanced algorithms combine spatial mapping, semantic segmentation, and real-time object recognition to create a cohesive representation of the scene, improving the system's ability to interpret complex environments. This fusion supports dynamic occlusion handling, precise anchor placement, and adaptive content rendering, crucial for immersive augmented reality experiences.
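
Precise anchor placement is a small, concrete example of this fusion. The detector supplies where an object is in the image, and the depth map from scene understanding supplies how far away it is. The hypothetical sketch below assumes a pinhole camera with known intrinsics and a depth map aligned to the camera image. It takes the median valid depth inside the detection box and back-projects the box centre to a 3D anchor point in the camera frame.

```python
import statistics


def anchor_from_detection(box, depth, fx, fy, cx, cy):
    """Estimate a 3D anchor (camera frame, metres) for a 2D detection box.

    box:   (x_min, y_min, x_max, y_max) in pixel coordinates
    depth: non-empty depth map as rows of metric depths, 0 meaning no reading
    Returns None if no valid depth lies inside the box.
    """
    x0, y0, x1, y1 = (int(c) for c in box)
    # Collect valid depth readings inside the box, clipped to the image bounds.
    samples = [depth[v][u]
               for v in range(max(y0, 0), min(y1, len(depth)))
               for u in range(max(x0, 0), min(x1, len(depth[0])))
               if depth[v][u] > 0]
    if not samples:
        return None
    z = statistics.median(samples)      # less sensitive to background pixels than the mean
    u_c = (box[0] + box[2]) / 2          # box centre in pixels
    v_c = (box[1] + box[3]) / 2
    return ((u_c - cx) * z / fx, (v_c - cy) * z / fy, z)
```

The median is a deliberately simple choice. A more careful system might intersect the ray through the box centre with a detected plane, or use the object's segmentation mask instead of the whole box.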

Future Trends: Evolving Capabilities of AR Scene Analysis

Future trends in augmented reality highlight the evolution of scene understanding, enabling AR systems to interpret complex environments beyond individual object detection. Advanced neural networks and spatial computing will enhance holistic scene analysis, allowing seamless interaction with dynamic real-world settings. This progression fosters more immersive and context-aware AR experiences by integrating semantic mapping and real-time environmental comprehension.

Scene Understanding vs Object Detection Infographic

techiny.com
