Simultaneous Localization and Mapping (SLAM) constructs and updates a map of an unknown environment while tracking the device's position, enabling precise navigation and interaction within augmented reality (AR) spaces. Visual-Inertial Odometry (VIO) combines camera images with inertial sensor data to estimate device movement, offering faster and more robust pose estimation but often with less detailed environmental mapping. Choosing between SLAM and VIO depends on the AR application's need for comprehensive environmental understanding versus efficient and responsive motion tracking.

Table of Comparison

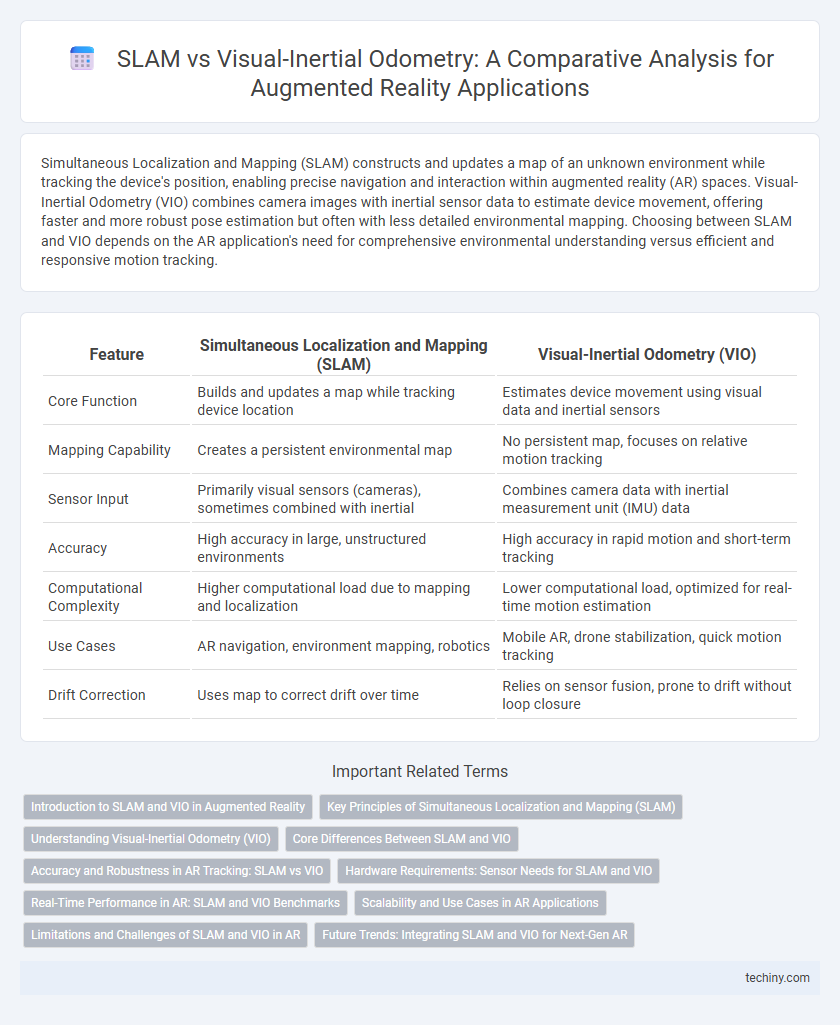

| Feature | Simultaneous Localization and Mapping (SLAM) | Visual-Inertial Odometry (VIO) |
|---|---|---|
| Core Function | Builds and updates a map while tracking device location | Estimates device movement using visual data and inertial sensors |
| Mapping Capability | Creates a persistent environmental map | No persistent map, focuses on relative motion tracking |
| Sensor Input | Primarily visual sensors (cameras), sometimes combined with inertial | Combines camera data with inertial measurement unit (IMU) data |
| Accuracy | High accuracy in large, unstructured environments | High accuracy in rapid motion and short-term tracking |
| Computational Complexity | Higher computational load due to mapping and localization | Lower computational load, optimized for real-time motion estimation |
| Use Cases | AR navigation, environment mapping, robotics | Mobile AR, drone stabilization, quick motion tracking |
| Drift Correction | Uses map to correct drift over time | Relies on sensor fusion, prone to drift without loop closure |

Introduction to SLAM and VIO in Augmented Reality

Simultaneous Localization and Mapping (SLAM) creates and updates a map of an unknown environment while tracking the device's position, critical for immersive augmented reality experiences. Visual-Inertial Odometry (VIO) fuses camera images with inertial sensor data to estimate motion, offering robust and accurate tracking in dynamic AR environments. Both SLAM and VIO enable real-time spatial awareness, underpinning advanced AR applications such as navigation, object placement, and interactive gaming.

Key Principles of Simultaneous Localization and Mapping (SLAM)

Simultaneous Localization and Mapping (SLAM) builds a consistent map of an unknown environment while simultaneously tracking the device's position within it using sensor data such as cameras and LiDAR. The core principle involves data association, loop closure, and probabilistic state estimation, enabling real-time correction of localization errors. SLAM algorithms use feature extraction and matching to incrementally construct spatial maps essential for augmented reality applications requiring precise environmental understanding.
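
The probabilistic state estimation mentioned above can be illustrated with a one-dimensional Kalman-style filter. This is a minimal sketch under simplifying assumptions, not how any particular SLAM library is implemented: the device moves along a single axis, and `Belief`, `predict`, `correct`, and all numeric values are hypothetical names and figures chosen for illustration. Each motion step grows the position uncertainty, and re-observing a landmark at a known position shrinks it again.

```python
from dataclasses import dataclass


@dataclass
class Belief:
    mean: float  # estimated position along one axis, in metres
    var: float   # variance (uncertainty) of that estimate


def predict(b: Belief, motion: float, motion_var: float) -> Belief:
    """Motion step: shift the estimate and grow its uncertainty."""
    return Belief(b.mean + motion, b.var + motion_var)


def correct(b: Belief, measured_pos: float, meas_var: float) -> Belief:
    """Measurement step: blend prediction and observation by their variances."""
    gain = b.var / (b.var + meas_var)  # 1D Kalman gain
    mean = b.mean + gain * (measured_pos - b.mean)
    var = (1.0 - gain) * b.var
    return Belief(mean, var)


# Assumed scenario: five 1 m steps with noisy odometry, then one landmark fix.
belief = Belief(mean=0.0, var=0.01)
for _ in range(5):
    belief = predict(belief, motion=1.0, motion_var=0.04)
before_fix = belief

# Re-observing a mapped landmark implies the device is at 5.2 m.
belief = correct(belief, measured_pos=5.2, meas_var=0.05)

print(f"before landmark fix: mean={before_fix.mean:.3f}, var={before_fix.var:.3f}")
print(f"after landmark fix:  mean={belief.mean:.3f}, var={belief.var:.3f}")
```

The corrected estimate lands between the odometry prediction and the landmark observation, weighted toward whichever is less uncertain. Full SLAM systems apply the same idea jointly over the device pose and many landmarks.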

Understanding Visual-Inertial Odometry (VIO)

Visual-Inertial Odometry (VIO) combines camera images with inertial measurement unit (IMU) data to provide real-time pose estimation and trajectory tracking in augmented reality environments. By fusing visual information with accelerometer and gyroscope measurements, VIO improves accuracy and robustness in fast-moving or feature-scarce scenarios where vision-only SLAM may struggle. Because VIO typically tracks only recent features rather than maintaining a global map, this sensor fusion lets AR devices keep localization stable with lower computational overhead, improving user experience and device responsiveness.
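
The fusion idea can be sketched with a simple complementary-style blend on a single heading angle. This is an illustrative toy, not a production VIO pipeline, which would typically use an extended Kalman filter or sliding-window optimization over the full 6-DoF pose. The function `fuse_yaw`, the sample rates, the gyroscope bias, and the assumption that the camera delivers an error-free heading at each frame are all hypothetical choices made for the example.

```python
def fuse_yaw(gyro_rates, dt, visual_yaws, every, alpha=0.9):
    """Integrate gyro rates and blend in a visual yaw every `every` samples.

    gyro_rates:  angular-rate samples in rad/s (high rate, biased)
    visual_yaws: heading estimates from the camera in rad (low rate)
    alpha:       weight kept on the IMU-propagated estimate at each blend
    """
    yaw = 0.0
    history = []
    frame = 0
    for i, rate in enumerate(gyro_rates):
        yaw += rate * dt  # IMU propagation between camera frames
        if (i + 1) % every == 0 and frame < len(visual_yaws):
            # Camera frame arrived: pull the estimate toward the visual yaw.
            yaw = alpha * yaw + (1.0 - alpha) * visual_yaws[frame]
            frame += 1
        history.append(yaw)
    return history


# Assumed setup: 200 Hz IMU, camera frame every 10th sample, 2 s of rotation.
DT = 0.005
TRUE_RATE = 0.5   # rad/s, the actual rotation
GYRO_BIAS = 0.05  # rad/s, constant gyroscope bias
N = 400
EVERY = 10

gyro = [TRUE_RATE + GYRO_BIAS] * N
visual = [TRUE_RATE * DT * EVERY * k for k in range(1, N // EVERY + 1)]

imu_only = fuse_yaw(gyro, DT, [], EVERY)  # no visual corrections
fused = fuse_yaw(gyro, DT, visual, EVERY)
true_final = TRUE_RATE * DT * N

print(f"IMU-only error: {abs(imu_only[-1] - true_final):.4f} rad")
print(f"fused error:    {abs(fused[-1] - true_final):.4f} rad")
```

The IMU supplies smooth high-rate updates between frames, while the visual estimate keeps the gyroscope bias from accumulating without bound. That division of labor is the reason the two sensors are combined.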

Core Differences Between SLAM and VIO

Simultaneous Localization and Mapping (SLAM) creates a detailed map of the environment while simultaneously tracking the device's position within it, typically using cameras and sometimes depth sensors or LiDAR. Visual-Inertial Odometry (VIO) fuses visual information with inertial measurements from accelerometers and gyroscopes to estimate motion, focusing primarily on pose estimation rather than mapping. The core difference lies in SLAM's dual function of mapping and localization versus VIO's emphasis on rapid, short-term pose tracking through sensor fusion.

Accuracy and Robustness in AR Tracking: SLAM vs VIO

Simultaneous Localization and Mapping (SLAM) offers high accuracy in augmented reality tracking by dynamically constructing and updating a map of the environment, enabling precise localization even in complex scenes. Visual-Inertial Odometry (VIO) combines camera data with inertial sensors, which improves robustness against rapid movements and temporary visual occlusions, but it may drift over extended periods without global map correction. In AR tracking, SLAM excels at maintaining long-term accuracy and environmental context, while VIO is more resilient to motion blur and brief loss of visual features, so the two are complementary and are often combined in practice.
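
Drift and its correction can be made concrete with a small dead-reckoning example. In this sketch a device walks a square loop with a tiny heading error on every step, so the estimated path fails to return to the start. Recognizing the revisited start point (a loop closure) lets the error be spread back along the trajectory. The linear redistribution in `close_loop` is a deliberately simplified stand-in for the pose-graph optimization real SLAM systems use, and the function names, step sizes, and bias value are assumptions made for illustration.

```python
import math


def dead_reckon(steps, step_len, turn_every, heading_bias):
    """Integrate steps around a square, adding a small heading error each step."""
    x = y = heading = 0.0
    path = [(x, y)]
    for i in range(steps):
        heading += heading_bias  # unmodelled error accumulates every step
        x += step_len * math.cos(heading)
        y += step_len * math.sin(heading)
        path.append((x, y))
        if (i + 1) % turn_every == 0:
            heading += math.pi / 2  # intended 90 degree turn at each corner
    return path


def close_loop(path):
    """Spread the end-point error linearly over the path so the end meets the start."""
    n = len(path) - 1
    err_x = path[-1][0] - path[0][0]
    err_y = path[-1][1] - path[0][1]
    return [(x - err_x * i / n, y - err_y * i / n) for i, (x, y) in enumerate(path)]


# Assumed scenario: a 5 m x 5 m square walked in 0.5 m steps.
path = dead_reckon(steps=40, step_len=0.5, turn_every=10, heading_bias=0.002)
drift = math.hypot(path[-1][0], path[-1][1])

corrected = close_loop(path)
residual = math.hypot(corrected[-1][0], corrected[-1][1])

print(f"end-point drift without loop closure: {drift:.3f} m")
print(f"end-point error after loop closure:   {residual:.3e} m")
```

Odometry alone has no way to notice that it has returned to a known place, so a VIO-only pipeline would carry this end-point error forward. A map-based system can detect the revisit and remove it.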

Hardware Requirements: Sensor Needs for SLAM and VIO

Simultaneous Localization and Mapping (SLAM) can run on a single camera, but dense or metrically scaled mapping often relies on depth cameras, LiDAR, or stereo cameras, which increases hardware complexity and cost. Visual-Inertial Odometry (VIO) pairs a monocular or stereo camera with an inertial measurement unit (IMU), so it needs little additional hardware while maintaining robust motion tracking. VIO's lower dependency on specialized sensors makes it a more lightweight and power-efficient option for augmented reality devices than SLAM setups built around depth sensors or LiDAR.

Real-Time Performance in AR: SLAM and VIO Benchmarks

Simultaneous Localization and Mapping (SLAM) and Visual-Inertial Odometry (VIO) are critical for real-time performance in augmented reality, with SLAM providing robust environment mapping and VIO improving motion tracking accuracy using inertial data. In general, SLAM is stronger at large-scale mapping and global consistency, while VIO offers lower latency and better responsiveness in dynamic, resource-constrained AR applications. Optimizing real-time AR experiences often involves integrating SLAM's spatial understanding with VIO's high-frequency pose estimation for seamless user interaction.

Scalability and Use Cases in AR Applications

Simultaneous Localization and Mapping (SLAM) scales well to large augmented reality (AR) environments because it builds detailed environment maps, making it well suited to applications like indoor navigation and complex scene reconstruction. Visual-Inertial Odometry (VIO) excels in smaller-scale, dynamic AR experiences, combining inertial sensors with visual data for fast, real-time tracking suited to mobile AR gaming and wearable devices. SLAM's comprehensive environment mapping supports persistent AR content placement, while VIO's lightweight approach prioritizes low latency and energy efficiency on constrained hardware.

Limitations and Challenges of SLAM and VIO in AR

Simultaneous Localization and Mapping (SLAM) loses tracking accuracy in dynamic environments, where moving objects and changing lighting break the assumption of a static scene. Visual-Inertial Odometry (VIO) suffers from sensor noise and IMU bias, so errors accumulate over time and reduce long-term precision in augmented reality applications. Both compete for limited compute and battery on mobile AR devices, with SLAM's map maintenance typically the heavier load, which affects real-time performance and energy efficiency.

Future Trends: Integrating SLAM and VIO for Next-Gen AR

Next-generation augmented reality systems increasingly integrate Simultaneous Localization and Mapping (SLAM) with Visual-Inertial Odometry (VIO) to enhance real-time tracking accuracy and environmental mapping. This fusion leverages SLAM's robust environmental reconstruction and VIO's high-frequency motion estimation, enabling seamless AR experiences in complex, dynamic environments. Emerging trends focus on optimizing sensor fusion algorithms and exploiting machine learning to improve system robustness and efficiency while minimizing computational load for wearable AR devices.

Simultaneous Localization and Mapping (SLAM) vs Visual-Inertial Odometry (VIO) Infographic

techiny.com
