Centralized processing in the Internet of Things (IoT) involves collecting data from various devices and sending it to a single central server for analysis, which can simplify management and improve control. Decentralized processing, on the other hand, distributes data handling across multiple edge devices or local servers, reducing latency and enhancing system resilience. Choosing between centralized and decentralized processing depends on factors like network bandwidth, real-time requirements, and security considerations.

Table of Comparison

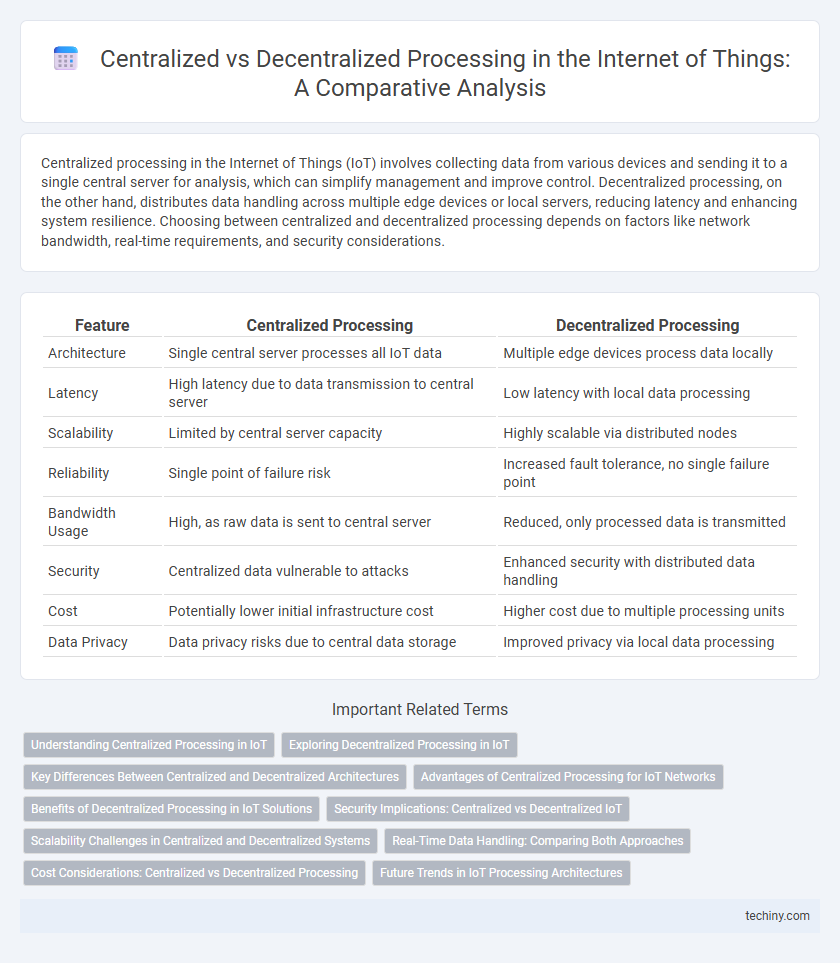

| Feature | Centralized Processing | Decentralized Processing |
|---|---|---|
| Architecture | A single central server (or cloud platform) processes all IoT data | Multiple edge devices or local servers process data near its source |
| Latency | Higher, because data must travel to the central server and back | Lower, because data is processed locally |
| Scalability | Limited by central server capacity | Scales by adding distributed nodes |
| Reliability | The central server is a single point of failure | Higher fault tolerance; no single point of failure |
| Bandwidth Usage | High, because raw data is sent to the central server | Reduced, because typically only processed data is transmitted |
| Security | The central data store is a high-value target for attacks | A single breach exposes less data, but more nodes must be secured |
| Cost | Potentially lower initial infrastructure cost | Higher initial cost due to multiple processing units |
| Data Privacy | Central data storage concentrates privacy risk | Improved privacy, since data can stay where it is collected |

Understanding Centralized Processing in IoT

Centralized processing in IoT involves aggregating data from multiple devices on a single central server or cloud platform for analysis and decision-making. Consolidating resources in one place simplifies management and security, but the round trip to the server adds latency and the server becomes a single point of failure. Centralized architectures suit applications that need extensive computational power and long-term data storage, such as smart city management and industrial IoT analytics.
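The centralized pattern can be sketched as follows. This is a minimal illustration, not a production design: the `CentralServer` class, its method names, and the sample readings are hypothetical. Every raw reading crosses the network to one server, which is exactly what makes fleet-wide analysis simple.

```python
from collections import defaultdict
from statistics import mean


class CentralServer:
    """Hypothetical central server that receives every raw reading."""

    def __init__(self):
        self.readings = defaultdict(list)  # device_id -> list of raw values
        self.messages_received = 0

    def ingest(self, device_id: str, value: float) -> None:
        # Every raw sample is transmitted to this single server.
        self.readings[device_id].append(value)
        self.messages_received += 1

    def fleet_average(self) -> float:
        # Fleet-wide analysis is easy because all data lives in one place.
        all_values = [v for values in self.readings.values() for v in values]
        return mean(all_values)

    def devices_above(self, threshold: float) -> list[str]:
        # Devices whose average reading exceeds the threshold.
        return sorted(d for d, vals in self.readings.items() if mean(vals) > threshold)


server = CentralServer()
for device_id, values in {"s1": [20.0, 22.0], "s2": [30.0, 34.0], "s3": [25.0, 25.0]}.items():
    for v in values:
        server.ingest(device_id, v)

print(server.messages_received)    # 6
print(server.fleet_average())      # 26.0
print(server.devices_above(24.0))  # ['s2', 's3']
```

Note that the message count grows with every sample from every device, which is the source of the bandwidth and scalability pressure discussed later.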

Exploring Decentralized Processing in IoT

Decentralized processing in the Internet of Things (IoT) enhances scalability and reduces latency by distributing data computation across edge devices rather than relying on a central server. This approach improves real-time decision-making and fault tolerance by enabling local data analysis and autonomous device operation. Deploying decentralized architectures helps mitigate network congestion and enhances security through localized data control within IoT ecosystems.
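By contrast, an edge node can act on readings immediately and forward only a compact summary. The sketch below assumes a hypothetical `EdgeNode` with a simple alarm threshold; the node name, threshold, and "shut valve" action are illustrative placeholders.

```python
from dataclasses import dataclass, field


@dataclass
class EdgeNode:
    """Hypothetical edge node that decides locally and uploads only summaries."""
    node_id: str
    alarm_threshold: float
    samples: list = field(default_factory=list)
    actions: list = field(default_factory=list)

    def on_reading(self, value: float) -> None:
        self.samples.append(value)
        # Autonomous local decision: no round trip to a central server.
        if value > self.alarm_threshold:
            self.actions.append(f"{self.node_id}: shut valve (reading={value})")

    def summary(self) -> dict:
        # Only this compact summary is sent upstream, not every raw sample.
        return {
            "node": self.node_id,
            "count": len(self.samples),
            "min": min(self.samples),
            "max": max(self.samples),
            "mean": sum(self.samples) / len(self.samples),
        }


node = EdgeNode("pump-7", alarm_threshold=80.0)
for v in [70.0, 75.0, 85.0, 78.0]:
    node.on_reading(v)

print(node.actions)    # ['pump-7: shut valve (reading=85.0)']
print(node.summary())  # {'node': 'pump-7', 'count': 4, 'min': 70.0, 'max': 85.0, 'mean': 77.0}
```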

Key Differences Between Centralized and Decentralized Architectures

Centralized processing in Internet of Things (IoT) systems relies on a single core system or server to collect, analyze, and manage data, enabling simplified control and maintenance but often causing latency and bandwidth bottlenecks. Decentralized processing distributes data handling across multiple edge devices or local nodes, enhancing scalability, reducing latency, and improving fault tolerance by processing information closer to the data source. Key differences include data flow direction, with centralized architectures funneling data to a central hub, whereas decentralized systems enable local decision-making and parallel processing, optimizing real-time responsiveness and network resilience.
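The difference in data flow can be made concrete with a back-of-the-envelope bandwidth estimate. The workload below (1,000 sensors at 10 Hz with 16-byte samples, versus one 64-byte summary per minute per device) is an assumption chosen for illustration, not a measured figure.

```python
SECONDS_PER_DAY = 86_400


def centralized_bytes_per_day(devices: int, sample_hz: int, bytes_per_sample: int) -> int:
    # Every raw sample is funneled to the central hub.
    return devices * sample_hz * SECONDS_PER_DAY * bytes_per_sample


def decentralized_bytes_per_day(devices: int, summaries_per_day: int, bytes_per_summary: int) -> int:
    # Edge nodes process raw samples locally and forward only summaries.
    return devices * summaries_per_day * bytes_per_summary


# Assumed workload: one 64-byte summary per minute = 1,440 summaries per day.
raw = centralized_bytes_per_day(1_000, 10, 16)
summ = decentralized_bytes_per_day(1_000, 1_440, 64)

print(raw)          # 13824000000  (about 13.8 GB/day)
print(summ)         # 92160000     (about 92 MB/day)
print(raw // summ)  # 150
```

The ratio depends entirely on the assumed sampling rate and summary interval; applications that need the raw stream centrally cannot realize this saving.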

Advantages of Centralized Processing for IoT Networks

Centralized processing in IoT networks can simplify data security by consolidating storage and management behind a single control point, which reduces the number of access points that must be protected. It streamlines system maintenance and updates, so patches and policies can be deployed and enforced across all connected devices from one place. Centralized architectures also support fleet-wide analysis and decision-making on aggregated data, giving operators a complete view of network performance.
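Centralized update and policy enforcement can be sketched as a registry that holds the desired state for the whole fleet. The `DeviceRegistry` class, the version strings, and the device IDs are hypothetical, and the "push" is simulated in memory rather than sent over a network.

```python
class DeviceRegistry:
    """Hypothetical central registry holding the desired firmware/policy version."""

    def __init__(self, desired_version: str):
        self.desired_version = desired_version
        self.devices: dict[str, str] = {}  # device_id -> reported version

    def register(self, device_id: str, version: str) -> None:
        self.devices[device_id] = version

    def set_desired_version(self, version: str) -> None:
        # One change at the control point applies to the whole fleet.
        self.desired_version = version

    def pending_updates(self) -> list[str]:
        # Devices whose reported version differs from the desired one.
        return sorted(d for d, v in self.devices.items() if v != self.desired_version)

    def roll_out(self) -> int:
        # Simulate pushing the desired version to every out-of-date device.
        pending = self.pending_updates()
        for device_id in pending:
            self.devices[device_id] = self.desired_version
        return len(pending)


registry = DeviceRegistry("1.4.0")
for device_id, version in [("cam-1", "1.4.0"), ("cam-2", "1.3.2"), ("lock-9", "1.2.0")]:
    registry.register(device_id, version)

print(registry.pending_updates())  # ['cam-2', 'lock-9']
registry.set_desired_version("1.5.0")
print(registry.roll_out())         # 3
print(registry.pending_updates())  # []
```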

Benefits of Decentralized Processing in IoT Solutions

Decentralized processing in IoT solutions improves system resilience by removing the single point of failure and containing faults within individual nodes. It enables real-time data analysis close to the source, which minimizes latency and bandwidth usage and supports scalable, flexible network architectures. This approach spreads resource use across nodes and enables autonomous decision-making, improving operational efficiency and security in complex IoT ecosystems.
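The fault-tolerance argument can be illustrated by counting how many devices remain served after a failure. This sketch assumes 1,000 devices split evenly across four hypothetical edge nodes and ignores partial degradation.

```python
def devices_served_centralized(num_devices: int, server_up: bool) -> int:
    # All devices depend on one server: it is a single point of failure.
    return num_devices if server_up else 0


def devices_served_decentralized(devices_per_node: dict[str, int], failed_nodes: set[str]) -> int:
    # Each edge node serves only its local devices, so a failure is contained.
    return sum(n for node, n in devices_per_node.items() if node not in failed_nodes)


nodes = {"edge-a": 250, "edge-b": 250, "edge-c": 250, "edge-d": 250}

print(devices_served_centralized(1_000, server_up=False))            # 0
print(devices_served_decentralized(nodes, failed_nodes={"edge-b"}))  # 750
```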

Security Implications: Centralized vs Decentralized IoT

Centralized processing in IoT systems concentrates data and control in a single location, creating bottlenecks and a single point of failure that attackers can target. Decentralized processing distributes data and decision-making across multiple nodes, which improves resilience and limits the impact of any one attack, but it makes security management and data consistency more complex. Evaluating these trade-offs is crucial for IoT security: decentralized models often defend better against targeted breaches because compromising one node exposes only that node's data, even though the total attack surface grows with the number of nodes that must be secured.
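Both sides of this trade-off fit in a toy model. It assumes, purely for illustration, that records are split evenly across nodes and that each node is breached independently with the same probability; real threat models are rarely this uniform.

```python
def breach_profile(total_records: int, nodes: int, p_node_breach: float) -> tuple[float, float]:
    """Return (records exposed by one breached node, P(at least one node is breached)).

    Illustrative model: records are split evenly across nodes, and each node
    is breached independently with probability p_node_breach.
    """
    per_node = total_records / nodes
    p_any = 1 - (1 - p_node_breach) ** nodes
    return per_node, p_any


for nodes in (1, 10, 100):
    per_node, p_any = breach_profile(1_000_000, nodes, 0.01)
    print(nodes, per_node, round(p_any, 3))
# 1 1000000.0 0.01
# 10 100000.0 0.096
# 100 10000.0 0.634
```

Under these assumptions, distributing data shrinks what a single breach exposes, while the chance that some node is compromised rises with the node count, which is why per-node hardening matters more in decentralized designs.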

Scalability Challenges in Centralized and Decentralized Systems

Centralized processing in IoT systems often struggles to scale because a single processing unit becomes a bottleneck, adding latency and capping how much data it can absorb from large numbers of connected devices. Decentralized processing distributes computation across edge nodes, which improves scalability by reducing latency and bandwidth usage, but it introduces complexity in coordination and data consistency. Scaling IoT effectively requires balancing the simplicity of centralized control against the resilience and distributed resource management of decentralized systems.
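The bottleneck can be expressed as server utilization. The capacities below (2 messages per second per device, 50,000 msg/s for the central server, 5,000 msg/s per edge node) are assumed figures, and the sketch deliberately ignores the coordination overhead that the decentralized side adds.

```python
import math


def central_utilization(devices: int, msgs_per_device_per_sec: float,
                        server_capacity_msgs_per_sec: float) -> float:
    # Fraction of the single server's capacity consumed; >= 1.0 means saturated.
    return devices * msgs_per_device_per_sec / server_capacity_msgs_per_sec


def edge_nodes_needed(devices: int, msgs_per_device_per_sec: float,
                      node_capacity_msgs_per_sec: float) -> int:
    # Decentralized: add nodes as the fleet grows, each handling a share of the load.
    return math.ceil(devices * msgs_per_device_per_sec / node_capacity_msgs_per_sec)


for devices in (10_000, 25_000, 40_000):
    print(devices, central_utilization(devices, 2, 50_000), edge_nodes_needed(devices, 2, 5_000))
# 10000 0.4 4
# 25000 1.0 10
# 40000 1.6 16
```

Once utilization reaches 1.0 the central server must be scaled up or queues grow without bound, whereas the decentralized design scales out by adding nodes.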

Real-Time Data Handling: Comparing Both Approaches

Centralized processing in IoT simplifies real-time data handling by aggregating data on a central server, where it can be analyzed efficiently, but network congestion on the way to that server can add latency. Decentralized processing distributes computation across edge devices, significantly reducing latency and improving responsiveness for time-sensitive applications such as industrial automation and smart grids. Real-time IoT systems therefore benefit from decentralized architectures, which minimize data transmission delays and improve fault tolerance compared to centralized models.
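A simple latency budget shows why edge processing helps time-sensitive control loops even on slower hardware. All numbers here are assumptions for illustration: a 20 ms control deadline, a 60 ms cloud round trip plus 15 ms of congestion queueing with 2 ms of processing, versus a 1 ms local hop with 8 ms of processing on an edge gateway.

```python
def end_to_end_ms(network_rtt_ms: float, processing_ms: float, queueing_ms: float = 0.0) -> float:
    # Time from a sensor reading to an actuation decision.
    return network_rtt_ms + queueing_ms + processing_ms


DEADLINE_MS = 20.0  # assumed control-loop deadline for an industrial actuator

central = end_to_end_ms(network_rtt_ms=60.0, processing_ms=2.0, queueing_ms=15.0)
edge = end_to_end_ms(network_rtt_ms=1.0, processing_ms=8.0)

print(central, central <= DEADLINE_MS)  # 77.0 False
print(edge, edge <= DEADLINE_MS)        # 9.0 True
```

The network round trip, not compute speed, dominates the centralized budget in this example.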

Cost Considerations: Centralized vs Decentralized Processing

Centralized processing in IoT often results in lower hardware costs because devices share central resources, but it can incur higher recurring expenses for data transmission and cloud infrastructure. Decentralized processing reduces bandwidth usage, which lowers ongoing operational costs, but it requires investment in edge devices with sufficient computing power. Evaluating the total cost of ownership of an IoT deployment therefore means balancing upfront device procurement against continuous cloud service and bandwidth fees.
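The total-cost-of-ownership comparison reduces to upfront cost plus recurring cost over time, which also gives a break-even point. The prices below are placeholder assumptions in whole dollars (not vendor quotes): $20 per basic device plus $3 per month in data and cloud fees for the centralized option, versus $60 per edge-capable device plus $1 per month.

```python
def total_cost_of_ownership(upfront: int, monthly: int, months: int) -> int:
    # Upfront procurement plus recurring fees over the evaluation period.
    return upfront + monthly * months


def break_even_month(upfront_a: int, monthly_a: int, upfront_b: int, monthly_b: int) -> float:
    # Month at which option B (higher upfront, lower monthly) catches up with option A.
    return (upfront_b - upfront_a) / (monthly_a - monthly_b)


DEVICES, MONTHS = 500, 36
central_up, central_monthly = DEVICES * 20, DEVICES * 3  # cheap sensors; raw data shipped to cloud
edge_up, edge_monthly = DEVICES * 60, DEVICES * 1        # edge-capable hardware; summaries only

centralized = total_cost_of_ownership(central_up, central_monthly, MONTHS)
decentralized = total_cost_of_ownership(edge_up, edge_monthly, MONTHS)

print(centralized, decentralized)                                             # 64000 48000
print(break_even_month(central_up, central_monthly, edge_up, edge_monthly))  # 20.0
```

With these assumed numbers the edge deployment costs more for the first 20 months and less afterwards; a shorter deployment or cheaper bandwidth would tip the balance toward the centralized option.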

Future Trends in IoT Processing Architectures

Future trends in IoT processing architectures emphasize a shift from centralized to decentralized processing to enhance scalability and reduce latency. Edge computing capabilities are increasingly integrated, allowing data to be processed closer to IoT devices, which improves real-time responsiveness and reduces the burden on central servers. Advancements in AI and 5G enable more efficient decentralized systems that support massive IoT deployments with improved security and energy efficiency.

Centralized processing vs Decentralized processing Infographic

techiny.com
