Qubits differ from classical bits in that they can exist in a superposition of the 0 and 1 states, described by amplitudes that set the probability of each measurement outcome. Unlike bits, which are strictly binary, qubits can also be entangled, so that their measurement outcomes are correlated in ways no classical system can reproduce. Combined with interference, these properties let quantum algorithms tackle certain problems in cryptography, optimization, and simulation that are impractical for classical computers.

Table of Comparison

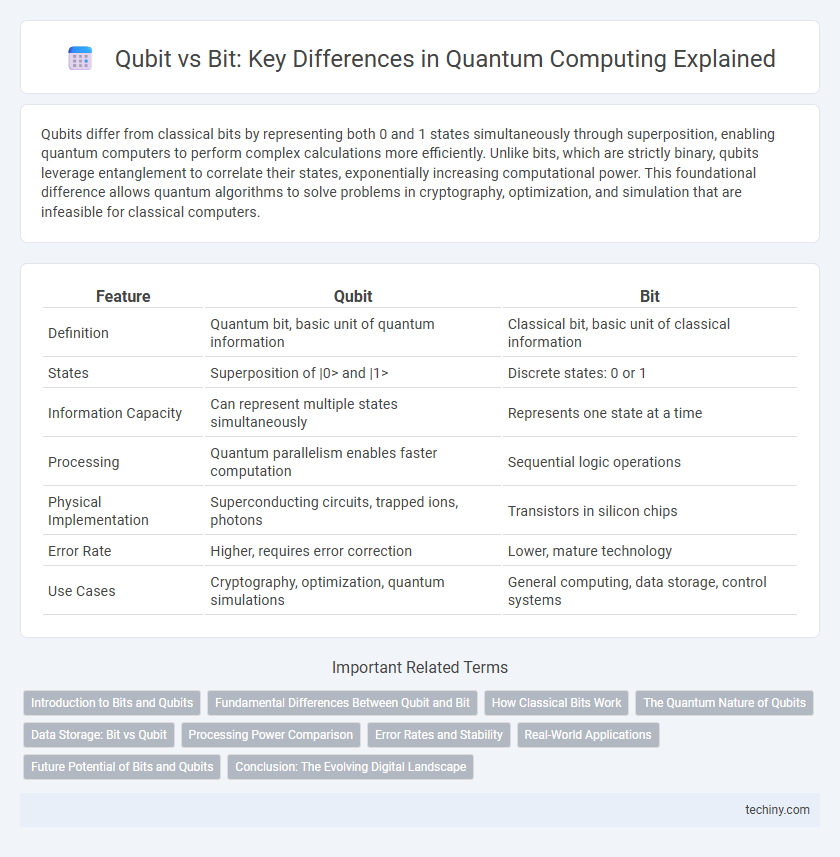

| Feature | Qubit | Bit |
|---|---|---|
| Definition | Quantum bit, basic unit of quantum information | Classical bit, basic unit of classical information |
| States | Superposition α\|0⟩ + β\|1⟩ of the two basis states | Discrete states: 0 or 1 |
| Information Capacity | n qubits are described by 2^n amplitudes, but measuring them yields only n classical bits | n bits hold exactly one of 2^n values at a time |
| Processing | Interference-based algorithms give speedups on specific problems | Deterministic Boolean logic operations |
| Physical Implementation | Superconducting circuits, trapped ions, photons | Transistors in silicon chips |
| Error Rate | Higher, requires error correction | Lower, mature technology |
| Use Cases | Cryptography, optimization, quantum simulations | General computing, data storage, control systems |

Introduction to Bits and Qubits

A bit represents classical information as a binary value of 0 or 1 and serves as the fundamental unit of traditional computing systems. A qubit, in contrast, exploits quantum phenomena such as superposition and entanglement: until it is measured, its state can be a weighted combination of 0 and 1. For specific applications such as cryptography and optimization, this lets quantum computers run algorithms that scale better than the best known classical methods.

Fundamental Differences Between Qubit and Bit

Qubits differ fundamentally from classical bits because they can exist in a superposition of 0 and 1, whereas a bit always holds exactly one of those values. Bits are stored in classical physical states such as voltage levels, while qubits rely on quantum phenomena such as entanglement and coherence. Because the joint state of n qubits is described by 2^n amplitudes, carefully designed algorithms can use interference among those amplitudes to solve certain problems in far fewer steps, which is what makes qubits the core units of quantum computing.
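To make entanglement concrete, here is a minimal statevector sketch in plain Python (no quantum SDK is assumed; the names `bell_state` and `measure` are illustrative). It prepares the Bell state (|00⟩ + |11⟩)/√2 with a Hadamard gate followed by a CNOT gate, then samples measurements: each qubit on its own looks like a fair coin, yet the two results always agree.

```python
import math
import random

def bell_state() -> list[complex]:
    """Prepare (|00> + |11>)/sqrt(2) from |00> using a Hadamard on qubit 0
    followed by a CNOT (control qubit 0, target qubit 1).
    Amplitude index = 2*q0 + q1."""
    amp = [1 + 0j, 0j, 0j, 0j]          # start in |00>
    s = 1 / math.sqrt(2)
    # Hadamard on qubit 0: mixes the pair (|0 q1>, |1 q1>) for each value of q1
    for q1 in (0, 1):
        a0, a1 = amp[q1], amp[2 + q1]
        amp[q1], amp[2 + q1] = s * (a0 + a1), s * (a0 - a1)
    # CNOT: flip qubit 1 when qubit 0 is 1, i.e. swap the |10> and |11> amplitudes
    amp[2], amp[3] = amp[3], amp[2]
    return amp

def measure(amp: list[complex], rng: random.Random) -> tuple[int, int]:
    """Sample one joint outcome (q0, q1) with probability |amplitude|^2."""
    probs = [abs(a) ** 2 for a in amp]
    index = rng.choices(range(4), weights=probs)[0]
    return index >> 1, index & 1

state = bell_state()
print([round(abs(a) ** 2, 3) for a in state])  # [0.5, 0.0, 0.0, 0.5]
rng = random.Random(0)
samples = [measure(state, rng) for _ in range(1000)]
print(all(q0 == q1 for q0, q1 in samples))      # True: the outcomes always agree
```

This correlation alone cannot be used to send information; entanglement becomes computationally useful when an algorithm combines it with interference.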

How Classical Bits Work

Classical bits operate using binary states, representing either a 0 or a 1 through distinct voltage levels or magnetic orientations in digital circuits. These bits form the foundation of conventional computing, which executes logical operations based on Boolean algebra and deterministic processes. Unlike qubits, classical bits cannot exist in superposition: a register of n bits holds exactly one of its 2^n possible values at any moment.
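The Boolean logic described above can be sketched in a few lines of Python. This is an illustrative model of a ripple-carry adder, not how any particular chip is wired: XOR and AND form a half adder, two half adders form a full adder, and chaining full adders adds fixed-width integers one bit at a time, with every intermediate value a definite 0 or 1.

```python
def half_adder(a: int, b: int) -> tuple[int, int]:
    """Add two bits; return (sum, carry) using XOR and AND."""
    return a ^ b, a & b

def full_adder(a: int, b: int, carry_in: int) -> tuple[int, int]:
    """Add two bits plus an incoming carry, built from two half adders."""
    s1, c1 = half_adder(a, b)
    s2, c2 = half_adder(s1, carry_in)
    return s2, c1 | c2

def ripple_add(x: int, y: int, width: int = 8) -> int:
    """Add two unsigned integers bit by bit, like a ripple-carry adder circuit.
    The result wraps modulo 2**width, as a fixed-width register would."""
    carry = 0
    result = 0
    for i in range(width):
        a = (x >> i) & 1          # i-th bit of x
        b = (y >> i) & 1          # i-th bit of y
        s, carry = full_adder(a, b, carry)
        result |= s << i          # place the sum bit at position i
    return result

print(ripple_add(23, 19))    # 42
print(ripple_add(200, 100))  # 44, because 300 wraps modulo 256
```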

The Quantum Nature of Qubits

Qubits rely on the principles of quantum mechanics, such as superposition and entanglement. Unlike a classical bit, which is always in a definite state of 0 or 1, a qubit's state is a combination of both, and measuring it returns a single outcome with a probability set by its amplitudes. Quantum algorithms therefore do not simply try every possibility at once; they use interference to amplify the amplitudes of correct answers and cancel those of wrong ones, which requires the qubits to stay coherent. For some problems this yields speedups that no known classical algorithm matches, although a classical computer can always simulate a quantum one given exponentially more time or memory.
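A minimal sketch of interference, assuming a single qubit stored as a pair of complex amplitudes (the helper names are hypothetical). Applying a Hadamard gate to |0⟩ gives a 50/50 superposition; applying it a second time returns |0⟩ with certainty, because the two paths leading to |1⟩ carry opposite signs and cancel. A classical random coin flip, modelled alongside for contrast, can only mix probabilities and never cancel them.

```python
import math

S = 1 / math.sqrt(2)

def hadamard(amp: tuple[complex, complex]) -> tuple[complex, complex]:
    """Apply the Hadamard gate to a single-qubit state (alpha, beta)."""
    alpha, beta = amp
    return S * (alpha + beta), S * (alpha - beta)

def probabilities(amp: tuple[complex, complex]) -> tuple[float, float]:
    """Born rule: the probability of measuring 0 or 1 is |amplitude|^2."""
    return tuple(round(abs(a) ** 2, 6) for a in amp)

def random_flip(p: tuple[float, float]) -> tuple[float, float]:
    """Classical analog: a fair coin flip averages probabilities."""
    p0, p1 = p
    return 0.5 * p0 + 0.5 * p1, 0.5 * p0 + 0.5 * p1

zero = (1 + 0j, 0j)
once = hadamard(zero)
twice = hadamard(once)
print(probabilities(once))                 # (0.5, 0.5): equal superposition
print(probabilities(twice))                # (1.0, 0.0): the |1> amplitudes cancel
print(random_flip(random_flip((1.0, 0.0))))  # (0.5, 0.5): classical mixing stays random
```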

Data Storage: Bit vs Qubit

Data storage in classical computing relies on bits, each holding either 0 or 1, so n bits store exactly one of 2^n possible values. Describing the state of n qubits requires 2^n complex amplitudes, which is why quantum states are so expensive to simulate classically. Measurement, however, collapses that state, and Holevo's theorem limits what can be extracted: n qubits cannot deliver more than n bits of retrievable classical information. The advantage of qubits therefore lies in processing, through superposition, entanglement, and coherence, rather than in denser data storage.
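The gap between describing a quantum state and reading it out can be sketched as follows (helper names are hypothetical). The memory a classical simulator needs grows as 2^n amplitudes, assumed here to be 16-byte complex numbers, so 50 qubits already need about 16 PiB; yet measuring an n-qubit register in equal superposition yields a single n-bit string. The sampler relies on every outcome of a uniform superposition being equally likely instead of simulating gates.

```python
import random

BYTES_PER_AMPLITUDE = 16  # assumption: one complex128 value (two 64-bit floats)

def statevector_bytes(n_qubits: int) -> int:
    """Memory a classical simulator needs to store all 2**n amplitudes."""
    return (2 ** n_qubits) * BYTES_PER_AMPLITUDE

def measure_uniform(n_qubits: int, rng: random.Random) -> str:
    """Measuring n qubits in an equal superposition returns ONE n-bit string;
    each of the 2**n outcomes is equally likely."""
    outcome = rng.randrange(2 ** n_qubits)
    return format(outcome, f"0{n_qubits}b")

for n in (10, 30, 50):
    print(n, "qubits ->", statevector_bytes(n), "bytes of amplitudes")
print(measure_uniform(8, random.Random(1)))  # a single 8-character bit string
```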

Processing Power Comparison

Qubits leverage superposition and interference, and the state space a quantum computer works in grows exponentially with the number of qubits (2^n amplitudes for n qubits). This does not translate into a uniform exponential speedup; the gain depends on the problem. Shor's factoring algorithm is superpolynomially faster than the best known classical methods, which matters for cryptography, while Grover's search offers a quadratic speedup for unstructured search and some optimization tasks. For most everyday workloads, classical computers remain faster and cheaper.
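Grover's search algorithm is a concrete case where the speedup is real but modest: it finds a marked item among N = 2^n candidates in about (π/4)√N iterations, whereas a classical search needs about N/2 guesses on average. The sketch below simulates it on a small real-valued statevector in plain Python (the function and parameter names are illustrative). Each iteration flips the sign of the marked amplitude and then reflects every amplitude about the mean, so interference steadily concentrates probability on the answer.

```python
import math

def grover_search(n_qubits: int, marked: int) -> list[float]:
    """Simulate Grover's algorithm on 2**n_qubits real amplitudes and
    return the final measurement probabilities."""
    N = 2 ** n_qubits
    amp = [1 / math.sqrt(N)] * N             # Hadamards on every qubit: uniform superposition
    iterations = math.floor(math.pi / 4 * math.sqrt(N))
    for _ in range(iterations):
        amp[marked] = -amp[marked]           # oracle: phase-flip the marked item
        mean = sum(amp) / N
        amp = [2 * mean - a for a in amp]    # diffusion: reflect every amplitude about the mean
    return [a * a for a in amp]

probs = grover_search(2, marked=3)
print([round(p, 3) for p in probs])  # [0.0, 0.0, 0.0, 1.0]
```

With 3 qubits the same function gives the marked item a probability of about 0.945 after two iterations. Because the simulation stores all 2^n amplitudes, it slows down as n grows; the speedup exists only on real quantum hardware.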

Error Rates and Stability

Qubits exhibit much higher error rates than classical bits because they are susceptible to decoherence and noise, which makes stable quantum computation challenging. Classical bits achieve near-zero error rates thanks to stable physical states in semiconductor devices, backed by well-established error-correcting codes where needed. Longer qubit coherence times and better quantum error correction codes, which spread one logical qubit across many physical qubits, are critical to reducing error rates enough for scalable quantum computing.
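Error correction builds on redundancy. The simplest classical example is the three-bit repetition code: store each bit three times and decode by majority vote, so the logical bit fails only if two or more copies flip, with probability 3p²(1 - p) + p³. The sketch below (hypothetical helper names, independent bit flips assumed) checks that formula against a Monte Carlo run. Quantum codes cannot simply copy a qubit because of the no-cloning theorem, so the quantum bit-flip code spreads the information through entanglement and measures parity checks instead; this classical version only illustrates the underlying majority-vote idea.

```python
import random

def encode(bit: int) -> list[int]:
    """Repetition code: store one logical bit as three physical copies."""
    return [bit] * 3

def noisy_channel(bits: list[int], p: float, rng: random.Random) -> list[int]:
    """Flip each physical bit independently with probability p."""
    return [b ^ (rng.random() < p) for b in bits]

def decode(bits: list[int]) -> int:
    """Majority vote corrects any single flipped copy."""
    return int(sum(bits) >= 2)

def logical_error_rate(p: float) -> float:
    """Exact failure probability: two or three of the copies flip."""
    return 3 * p**2 * (1 - p) + p**3

p = 0.01
rng = random.Random(42)
trials = 200_000
failures = sum(decode(noisy_channel(encode(0), p, rng)) != 0 for _ in range(trials))
print(logical_error_rate(p))  # about 0.000298, versus 0.01 with no protection
print(failures / trials)      # Monte Carlo estimate near the exact value
```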

Real-World Applications

Qubits could enable quantum computers to solve certain problems far faster than classical machines. The best-known example is cryptography: Shor's algorithm could factor the large numbers that RSA encryption depends on, once sufficiently large fault-tolerant quantum computers exist. Unlike bits, which represent data as 0 or 1, qubits exploit superposition and entanglement, and interference lets quantum algorithms steer probability toward good answers. Promising applications include drug discovery simulations, optimization problems in logistics, financial risk modeling, and machine learning, although a practical advantage in many of these areas is still an open research question.
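The cryptography case can be made concrete. RSA relies on factoring being hard, and Shor's algorithm reduces factoring N to finding the period r of f(x) = a^x mod N. The sketch below shows that classical reduction in Python; the period is found by brute force, which takes time exponential in the bit length of N and is precisely the step a quantum computer would perform efficiently using the quantum Fourier transform. Function names are illustrative, and `find_period` assumes gcd(a, N) = 1.

```python
import math

def find_period(a: int, n: int) -> int:
    """Smallest r > 0 with a**r = 1 (mod n), found by brute force.
    Assumes gcd(a, n) == 1. Shor's algorithm performs this step on a quantum computer."""
    value, r = a % n, 1
    while value != 1:
        value = (value * a) % n
        r += 1
    return r

def factor_from_period(a: int, n: int):
    """Classical post-processing used by Shor's algorithm.
    Returns a nontrivial factor pair, or None if this choice of a fails."""
    g = math.gcd(a, n)
    if g != 1:
        return g, n // g              # lucky guess: a already shares a factor with n
    r = find_period(a, n)
    if r % 2 == 1:
        return None                   # odd period: retry with another a
    y = pow(a, r // 2, n)
    if y == n - 1:
        return None                   # a**(r/2) = -1 (mod n): retry with another a
    p = math.gcd(y - 1, n)
    return p, n // p

print(find_period(7, 15))         # 4
print(factor_from_period(7, 15))  # (3, 5)
```

When the function returns None, Shor's algorithm simply repeats with a different randomly chosen `a`.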

Future Potential of Bits and Qubits

Qubits use superposition and entanglement to tackle certain problems far more efficiently than classical bits, which are limited to definite binary states. Traditional bits remain the foundation of current digital technology, although further gains are constrained by physical limits on transistor scaling and power efficiency. Qubits promise transformative advances in cryptography, optimization, and simulation, extending computing beyond classical boundaries.

Conclusion: The Evolving Digital Landscape

Qubits do not outperform classical bits across the board; superposition, entanglement, and interference give quantum computers an advantage on specific classes of problems. This difference enables quantum systems to address problems in cryptography, optimization, and simulation for which no efficient classical algorithm is known. As quantum technology advances, the digital landscape is evolving towards hybrid architectures in which quantum processors work alongside classical ones.

Qubit vs Bit Infographic

techiny.com