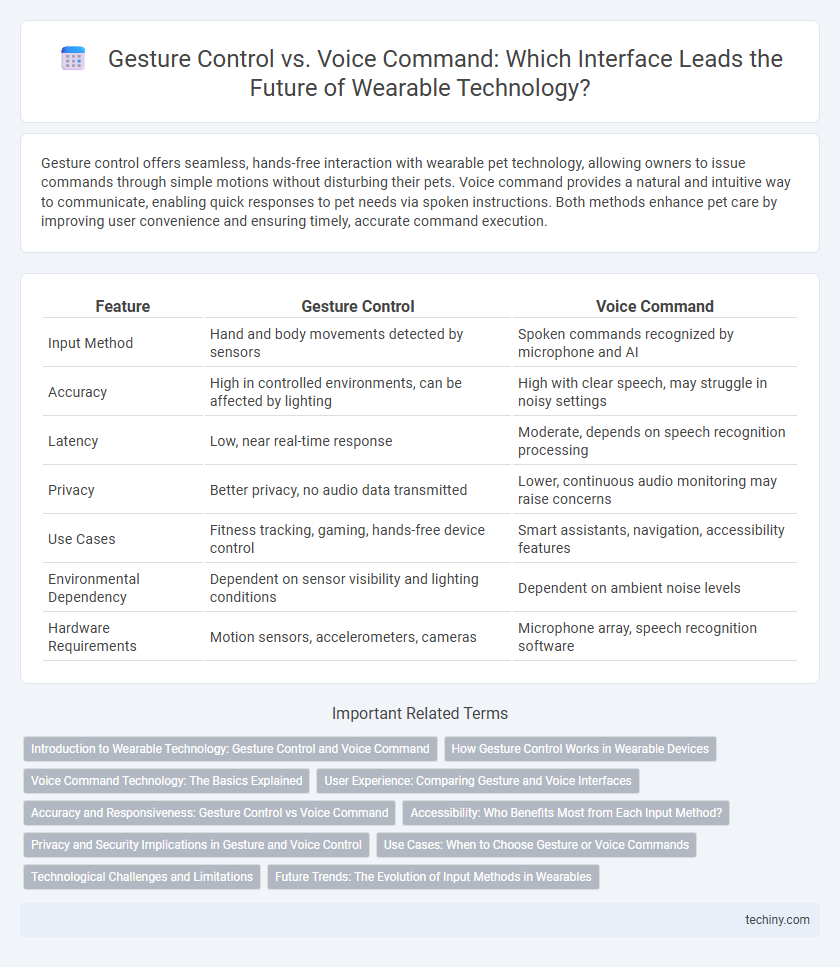

Gesture control offers seamless, touch-free interaction with wearable technology, allowing users to issue commands through simple motions without speaking aloud. Voice command provides a natural and intuitive way to communicate, enabling quick responses through spoken instructions. Both methods improve user convenience, and each delivers timely, accurate command execution under the conditions it suits best.

Table of Comparison

| Feature | Gesture Control | Voice Command |
|---|---|---|
| Input Method | Hand and body movements detected by sensors | Spoken commands captured by a microphone and recognized by AI-based speech recognition |
| Accuracy | High in controlled environments; camera-based systems can be affected by lighting | High with clear speech; may struggle in noisy settings |
| Latency | Low, near real-time response | Moderate; depends on speech recognition processing |
| Privacy | Better; no audio data transmitted | Lower; continuous audio monitoring may raise concerns |
| Use Cases | Fitness tracking, gaming, hands-free device control | Smart assistants, navigation, accessibility features |
| Environmental Dependency | Camera-based systems depend on sensor visibility and lighting conditions | Dependent on ambient noise levels |
| Hardware Requirements | Motion sensors (accelerometers, gyroscopes), cameras | Microphone array, speech recognition software |

Introduction to Wearable Technology: Gesture Control and Voice Command

Gesture control and voice command represent two primary interaction methods in wearable technology, enabling intuitive, hands-free device operation. Gesture control utilizes sensors like accelerometers and gyroscopes to detect hand or body movements, offering precise and discreet input for smartwatches, fitness trackers, and AR glasses. Voice command leverages advanced speech recognition algorithms and natural language processing to execute tasks swiftly, enhancing user convenience and accessibility in devices such as earbuds and smart rings.

How Gesture Control Works in Wearable Devices

Gesture control in wearable devices relies on sensors such as accelerometers, gyroscopes, and infrared cameras that detect and interpret hand or body movements. These sensors capture motion data, which embedded algorithms process to trigger commands without the user touching a screen or button. This technology enables intuitive, seamless control of devices like smartwatches and augmented reality glasses.
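To make the sensor-to-command step concrete, here is a minimal Python sketch of one simple approach: threshold detection of a wrist flick from 3-axis accelerometer readings. The function name `detect_flicks`, the 2.5 g threshold, and the 25-sample refractory period are illustrative assumptions, not values from any particular device; production systems typically use trained classifiers over combined accelerometer and gyroscope data.

```python
import math
from typing import Iterable, List, Tuple

# Illustrative tuning values (assumptions); real devices calibrate per sensor.
FLICK_THRESHOLD_G = 2.5   # magnitude (in g) that counts as a flick
REFRACTORY_SAMPLES = 25   # samples to ignore after a detection (0.25 s at 100 Hz)


def detect_flicks(
    samples: Iterable[Tuple[float, float, float]],
    threshold: float = FLICK_THRESHOLD_G,
    refractory: int = REFRACTORY_SAMPLES,
) -> List[int]:
    """Return the indices of samples where a wrist flick is detected.

    Each sample is an (x, y, z) accelerometer reading in g. A flick is
    registered when the acceleration magnitude exceeds `threshold`; further
    detections are suppressed for `refractory` samples so that one motion
    is not counted twice.
    """
    detections: List[int] = []
    cooldown = 0
    for i, (x, y, z) in enumerate(samples):
        if cooldown > 0:
            cooldown -= 1  # still inside the refractory window
            continue
        magnitude = math.sqrt(x * x + y * y + z * z)
        if magnitude > threshold:
            detections.append(i)
            cooldown = refractory
    return detections


# Usage: a wrist at rest reads about 1 g (gravity); spikes mark flicks.
rest = [(0.0, 0.0, 1.0)] * 10
flick = [(2.0, 2.0, 1.0)]  # magnitude 3.0 g
stream = rest + flick + flick + rest * 3 + flick
print(detect_flicks(stream))  # [10, 42]
```

The refractory period is what keeps one sharp motion from registering as several commands; a shorter value makes the detector more responsive but more prone to double-triggering.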

Voice Command Technology: The Basics Explained

Voice command technology in wearable devices utilizes advanced speech recognition algorithms and natural language processing to interpret user instructions without manual input. This hands-free interface enhances accessibility and convenience by enabling intuitive voice interactions for tasks such as navigation, messaging, and device control. Integration with AI assistants and continuous improvements in accuracy and noise reduction make voice commands a critical feature in modern wearable technology ecosystems.
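Speech recognition itself is too involved for a short example, but the step that follows it, turning a transcript into a device action, can be sketched. The Python below is a hypothetical keyword-based matcher; the `INTENTS` table, its phrases, and the `match_intent` function are illustrative assumptions, and real assistants use trained natural language understanding models rather than fixed phrases.

```python
import re
from typing import Dict, Optional, Tuple

# Hypothetical intent table: intent name -> trigger phrases.
INTENTS: Dict[str, Tuple[str, ...]] = {
    "start_workout": ("start workout", "begin workout"),
    "send_message": ("send message to", "text"),
    "navigate": ("navigate to", "directions to"),
}


def normalize(transcript: str) -> str:
    """Lowercase, replace punctuation with spaces, and collapse whitespace."""
    text = re.sub(r"[^a-z0-9\s]", " ", transcript.lower())
    return " ".join(text.split())


def match_intent(transcript: str) -> Optional[Tuple[str, str]]:
    """Map a recognizer transcript to (intent, argument), or None if no match.

    A phrase matches when the transcript equals it or starts with it
    followed by a space; whatever follows the phrase is the argument.
    """
    text = normalize(transcript)
    for intent, phrases in INTENTS.items():
        for phrase in phrases:
            if text == phrase or text.startswith(phrase + " "):
                return intent, text[len(phrase):].strip()
    return None


print(match_intent("Navigate to Central Park!"))  # ('navigate', 'central park')
print(match_intent("What's the weather?"))        # None
```

Requiring a space after the phrase keeps a trigger like "text" from matching words such as "texture".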

User Experience: Comparing Gesture and Voice Interfaces

Gesture control offers intuitive, touch-free interaction with wearable technology, allowing users to perform complex commands through natural movements; distinct, deliberate gestures can improve precision and reduce accidental inputs. Voice command interfaces excel in convenience, enabling quick interactions while multitasking and with little physical effort, but they can struggle with accuracy in noisy environments or with unfamiliar accents. The two interfaces shape user experience differently: gesture control favors physical engagement, while voice command emphasizes accessibility and speed.

Accuracy and Responsiveness: Gesture Control vs Voice Command

Gesture control in wearable technology offers high accuracy in recognizing predefined motions, enabling swift and intuitive device interactions, and it is unaffected by background noise. Voice command accuracy varies with speech clarity and ambient sound, but advances in natural language processing have significantly improved response times and recognition precision. Responsiveness in gesture control depends on sensor quality and the motion-recognition algorithms, while voice command responsiveness depends on how quickly speech is processed; real-time processing and models that continue to learn from use both help improve the experience.
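The trade-off between accuracy and responsiveness can be illustrated with sensor smoothing. A moving average suppresses jitter in motion data, which can reduce false gesture detections, but a causal window of N samples delays the signal by about (N - 1)/2 samples. The sketch below is illustrative only: the function names and the 100 Hz sample rate in the usage lines are assumptions, not the algorithm any specific wearable uses.

```python
from collections import deque
from typing import Deque, Iterable, List


def moving_average(values: Iterable[float], window: int) -> List[float]:
    """Causal moving average: each output averages the most recent `window`
    inputs (fewer at the start, before the window has filled)."""
    if window < 1:
        raise ValueError("window must be at least 1")
    buf: Deque[float] = deque(maxlen=window)
    total = 0.0
    out: List[float] = []
    for v in values:
        if len(buf) == window:
            total -= buf[0]  # this sample is about to be evicted by append()
        buf.append(v)
        total += v
        out.append(total / len(buf))
    return out


def added_delay_ms(window: int, sample_rate_hz: float) -> float:
    """Delay introduced by a causal moving average: (window - 1) / 2 samples,
    converted to milliseconds."""
    return (window - 1) * 1000.0 / (2.0 * sample_rate_hz)


# Usage: a step from 0 to 3 is spread over the window instead of appearing at once.
print(moving_average([0, 0, 0, 3, 3, 3], window=3))  # [0.0, 0.0, 0.0, 1.0, 2.0, 3.0]
print(added_delay_ms(window=11, sample_rate_hz=100.0))  # 50.0
```

For example, an 11-sample window at 100 Hz adds roughly 50 ms of delay, so a designer balances window length against how quickly a gesture must register.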

Accessibility: Who Benefits Most from Each Input Method?

Gesture control benefits people with speech impairments and anyone in a noisy environment, providing a silent interaction method that does not depend on vocal ability. Voice command enhances accessibility for users with mobility challenges or visual impairments, enabling efficient device operation through speech without requiring hand movement. Because each method serves different needs, offering both makes wearable technology more inclusive and adaptable.

Privacy and Security Implications in Gesture and Voice Control

Gesture control in wearable technology offers a more discreet and secure interaction method because it requires no audio capture, reducing the risk of eavesdropping and unauthorized voice recording. Voice command systems often listen continuously for a wake word, which increases exposure to hacking, data interception, and accidental activation by ambient sounds. Both interfaces require robust encryption and clear user-consent frameworks to safeguard personal data and prevent misuse of sensitive biometric inputs.
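One common way to limit what continuous listening exposes is to keep audio on the device in a short ring buffer and forward it only after a local wake-word detector fires. The Python sketch below models that gating logic under stated assumptions: `gate_audio`, the `is_wake_word` callback, and the buffer sizes are hypothetical, and the callback stands in for a real on-device detection model.

```python
from collections import deque
from typing import Callable, Deque, Iterable, List


def gate_audio(
    frames: Iterable[bytes],
    is_wake_word: Callable[[Deque[bytes]], bool],
    pre_roll: int = 3,
    session_frames: int = 5,
) -> List[List[bytes]]:
    """Return only the audio that would be forwarded off the device.

    Incoming frames go into a small ring buffer of `pre_roll` frames that is
    continuously overwritten. When the (hypothetical) local detector
    `is_wake_word` fires, the buffered frames plus the next `session_frames`
    frames form one session; all other audio is discarded on the device.
    """
    if pre_roll < 1 or session_frames < 1:
        raise ValueError("pre_roll and session_frames must be at least 1")
    ring: Deque[bytes] = deque(maxlen=pre_roll)
    sessions: List[List[bytes]] = []
    current: List[bytes] = []
    remaining = 0
    for frame in frames:
        if remaining > 0:  # inside an active session: forward the frame
            current.append(frame)
            remaining -= 1
            if remaining == 0:
                sessions.append(current)
                current = []
            continue
        ring.append(frame)  # oldest frame is dropped once the buffer is full
        if is_wake_word(ring):
            current = list(ring)
            ring.clear()
            remaining = session_frames
    if current:  # stream ended mid-session
        sessions.append(current)
    return sessions


# Usage with a stand-in detector that fires on a literal marker frame.
frames = [b"a", b"b", b"WAKE", b"c", b"d", b"e"]
print(gate_audio(frames, lambda ring: ring[-1] == b"WAKE", pre_roll=2, session_frames=2))
# [[b'b', b'WAKE', b'c', b'd']]
```

In this model, frames `b"a"` and `b"e"` arrive outside any session, so they are overwritten in the ring buffer and never leave the device.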

Use Cases: When to Choose Gesture or Voice Commands

Gesture control excels in noisy environments and in situations where speaking aloud is impractical, such as fitness tracking or augmented reality interactions. Voice commands are ideal for multitasking, for users with limited mobility, and for smart home device management, where spoken instructions simplify complex tasks. The right choice depends on the user's context, environmental factors, and the interface capabilities of the specific wearable device.
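The context-dependent choice described above can be expressed as a small rule function that checks device capabilities, then environmental factors, then user context. The Python sketch below is hypothetical: the `Context` fields, the 70 dB noise cutoff, the rule order, and the `"gesture"` default are illustrative assumptions rather than established guidelines.

```python
from dataclasses import dataclass

# Illustrative cutoff (assumption): above this, speech recognition is
# treated as unreliable.
NOISE_LIMIT_DB = 70.0


@dataclass
class Context:
    ambient_noise_db: float   # measured ambient sound level
    hands_busy: bool          # e.g. carrying groceries or cycling
    must_stay_silent: bool    # e.g. in a meeting or a library
    has_microphone: bool = True
    has_motion_sensor: bool = True


def choose_modality(ctx: Context, default: str = "gesture",
                    noise_limit_db: float = NOISE_LIMIT_DB) -> str:
    """Return "gesture" or "voice" for the given context (hypothetical rules)."""
    # Device capabilities come first: an input the hardware lacks is unusable.
    if not ctx.has_microphone:
        return "gesture"
    if not ctx.has_motion_sensor:
        return "voice"
    # Environmental factors: speaking is impossible or unreliable.
    if ctx.must_stay_silent or ctx.ambient_noise_db > noise_limit_db:
        return "gesture"
    # User context: hands are occupied, so speech is the easier channel.
    if ctx.hands_busy:
        return "voice"
    return default


print(choose_modality(Context(ambient_noise_db=85.0, hands_busy=False, must_stay_silent=False)))  # gesture
print(choose_modality(Context(ambient_noise_db=45.0, hands_busy=True, must_stay_silent=False)))   # voice
```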

Technological Challenges and Limitations

Gesture control in wearable technology faces challenges such as limited sensor accuracy, difficulty recognizing complex movements, and high power consumption, all of which can degrade the user experience. Voice command systems struggle with background noise, variations in language and dialect, and privacy concerns about continuous audio monitoring. Both interfaces need advances in machine learning algorithms and hardware optimization to become more reliable and responsive across diverse environments.

Future Trends: The Evolution of Input Methods in Wearables

Gesture control and voice command technologies in wearable devices are rapidly evolving, with future trends emphasizing multimodal interaction that combines both input methods for enhanced user experience. Advances in AI and sensor technology are enabling more accurate gesture recognition and natural language processing, improving responsiveness and accessibility in diverse environments. Emerging wearables are expected to integrate adaptive input systems that learn user preferences and contexts to seamlessly switch between gesture and voice commands, driving innovation in hands-free control.
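In its simplest form, an adaptive input system of this kind could track how often each input method succeeds in each context and prefer the better one. The Python class below is a hypothetical sketch using an exponential moving average of success; the class name, the context labels, and the `alpha` and `prior` parameters are assumptions made for illustration, not a description of any shipping product.

```python
from typing import Dict, Tuple

MODALITIES = ("gesture", "voice")


class AdaptiveModalitySelector:
    """Hypothetical selector that learns, per context, which input method
    the user completes commands with successfully."""

    def __init__(self, alpha: float = 0.2, prior: float = 0.5) -> None:
        self.alpha = alpha  # weight given to the newest outcome (0 < alpha <= 1)
        self.prior = prior  # success estimate for pairs not yet observed
        self.scores: Dict[Tuple[str, str], float] = {}

    def score(self, context: str, modality: str) -> float:
        return self.scores.get((context, modality), self.prior)

    def record(self, context: str, modality: str, success: bool) -> None:
        """Blend the new outcome into the running success estimate (EMA)."""
        outcome = 1.0 if success else 0.0
        old = self.score(context, modality)
        self.scores[(context, modality)] = (1 - self.alpha) * old + self.alpha * outcome

    def choose(self, context: str) -> str:
        """Pick the modality with the higher estimate; ties go to the first
        entry in MODALITIES."""
        return max(MODALITIES, key=lambda m: self.score(context, m))


selector = AdaptiveModalitySelector()
for _ in range(3):
    selector.record("gym", "voice", success=False)  # voice keeps failing at the gym
selector.record("car", "voice", success=True)
print(selector.choose("gym"), selector.choose("car"))  # gesture voice
```

A larger `alpha` adapts faster to a change in the user's habits but reacts more strongly to a single failed command.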

Gesture Control vs Voice Command Infographic

techiny.com