Environmental understanding in augmented reality involves interpreting the overall spatial layout and dynamic context of a scene to create immersive, interactive experiences. Object recognition focuses on identifying and classifying specific items within that environment, enabling precise interactions with real-world objects. Combining both capabilities gives AR applications awareness of the space and of the objects in it, which supports seamless integration of virtual content.

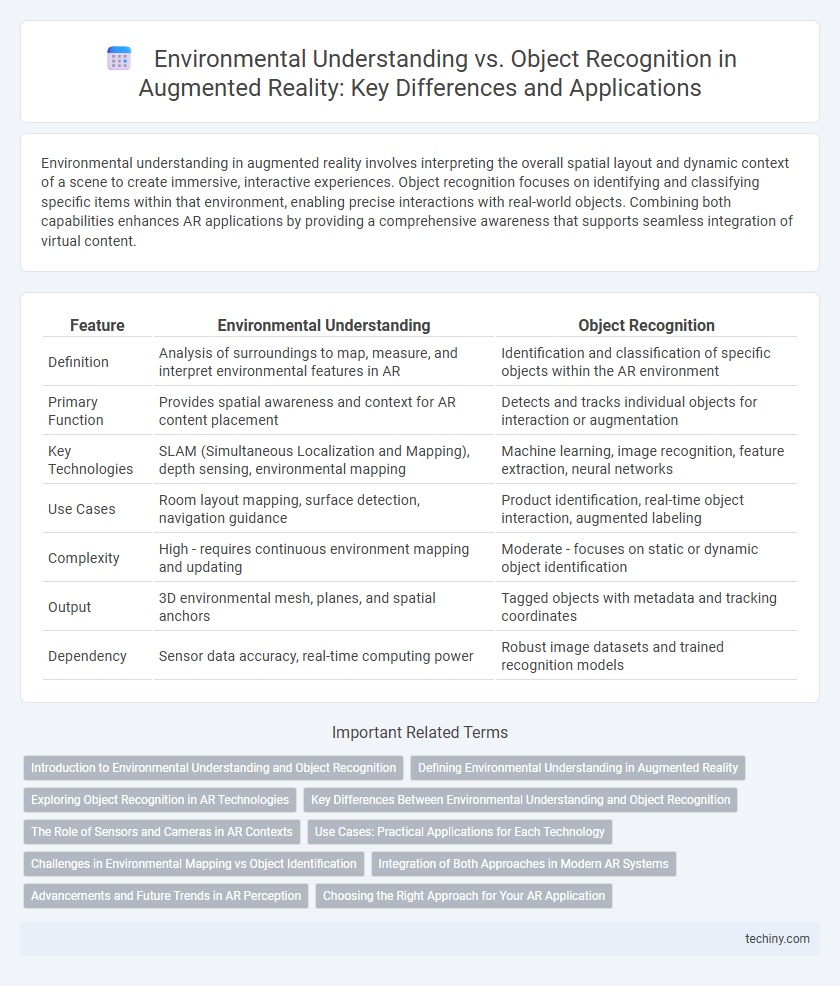

Table of Comparison

| Feature | Environmental Understanding | Object Recognition |
|---|---|---|
| Definition | Analysis of surroundings to map, measure, and interpret environmental features in AR | Identification and classification of specific objects within the AR environment |
| Primary Function | Provides spatial awareness and context for AR content placement | Detects and tracks individual objects for interaction or augmentation |
| Key Technologies | SLAM (Simultaneous Localization and Mapping), depth sensing, environmental mapping | Machine learning, image recognition, feature extraction, neural networks |
| Use Cases | Room layout mapping, surface detection, navigation guidance | Product identification, real-time object interaction, augmented labeling |
| Complexity | High - requires continuous environment mapping and updating | Moderate - focuses on static or dynamic object identification |
| Output | 3D environmental mesh, planes, and spatial anchors | Tagged objects with metadata and tracking coordinates |
| Dependency | Sensor data accuracy, real-time computing power | Robust image datasets and trained recognition models |

Introduction to Environmental Understanding and Object Recognition

Environmental understanding in augmented reality involves analyzing and interpreting the physical surroundings to create accurate 3D spatial maps, enabling seamless integration of virtual elements. Object recognition focuses on identifying and classifying specific items within the environment using advanced machine learning algorithms, enhancing interactivity through contextual awareness. Both technologies leverage computer vision and sensor data to deliver immersive AR experiences that respond dynamically to real-world conditions.

Defining Environmental Understanding in Augmented Reality

Environmental understanding in augmented reality involves the system's ability to perceive and interpret the physical surroundings, including surfaces, spatial layout, and ambient conditions, to anchor virtual content accurately. This process enables AR devices to map 3D environments dynamically, supporting realistic interaction and occlusion between digital and real-world elements. Unlike object recognition, which identifies and classifies specific items, environmental understanding creates a holistic model of the space for seamless integration of augmented experiences.
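
One common way to anchor content on a detected surface is a hit test: cast a ray from the camera through the tapped screen point and intersect it with a plane the system has already found. The following is a minimal sketch of that geometry in plain Python, not the API of any particular AR framework. The names `Plane` and `hit_test` are hypothetical, and the plane is assumed to be infinite, whereas real frameworks also check the plane's detected extent.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


def dot(a: Vec3, b: Vec3) -> float:
    """Dot product of two 3D vectors."""
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


@dataclass(frozen=True)
class Plane:
    """Hypothetical detected surface: a point on the plane plus its normal."""
    point: Vec3
    normal: Vec3


def hit_test(origin: Vec3, direction: Vec3, plane: Plane,
             eps: float = 1e-9) -> Optional[Vec3]:
    """Return where the ray origin + t * direction (t >= 0) meets the plane.

    Returns None if the ray is parallel to the plane or the plane lies
    behind the ray origin.
    """
    denom = dot(direction, plane.normal)
    if abs(denom) < eps:
        return None  # ray runs parallel to the surface
    to_plane = (plane.point[0] - origin[0],
                plane.point[1] - origin[1],
                plane.point[2] - origin[2])
    t = dot(to_plane, plane.normal) / denom
    if t < 0:
        return None  # intersection is behind the camera
    return (origin[0] + t * direction[0],
            origin[1] + t * direction[1],
            origin[2] + t * direction[2])


# Assumed setup: a floor at y = 0 and a camera held 1.5 m above it,
# looking forward and down.
floor = Plane(point=(0.0, 0.0, 0.0), normal=(0.0, 1.0, 0.0))
anchor = hit_test((0.0, 1.5, 0.0), (0.0, -1.0, -1.0), floor)
print(anchor)
```

The returned point is where a spatial anchor would be created, so the virtual object stays fixed to the floor as the camera moves.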

Exploring Object Recognition in AR Technologies

Object recognition in augmented reality (AR) technologies enables systems to identify and categorize real-world items with high precision, facilitating interactive and context-aware experiences. This capability enhances applications in fields like retail, education, and manufacturing by allowing digital content to seamlessly integrate with identified objects. Advances in machine learning algorithms and 3D mapping have significantly improved the accuracy and speed of object recognition, driving the evolution of immersive AR solutions.

Key Differences Between Environmental Understanding and Object Recognition

Environmental understanding in augmented reality involves accurately mapping and interpreting the physical surroundings to enable seamless interaction with virtual content, while object recognition focuses specifically on identifying and classifying individual objects within that environment. Environmental understanding relies heavily on spatial mapping, depth sensing, and surface detection to create a comprehensive model of the environment, whereas object recognition uses visual features, machine learning algorithms, and pattern matching to detect and label specific items. In practice, environmental understanding governs how virtual objects sit within and interact with the real-world setting, while object recognition governs which specific items trigger interactive experiences.

The Role of Sensors and Cameras in AR Contexts

Sensors and cameras in augmented reality devices capture spatial data essential for environmental understanding, enabling the system to map and interpret the physical world. Environmental understanding relies on depth sensors, LiDAR, and stereo cameras to construct 3D models of surroundings, while object recognition primarily uses RGB cameras and machine learning algorithms to identify and classify specific items. The integration of multiple sensor types enhances AR experiences by providing accurate scene reconstruction alongside precise object detection and tracking.
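
To illustrate how a depth reading becomes part of a 3D model, the sketch below back-projects a single depth pixel into a 3D point using the standard pinhole camera model. It assumes the usual computer-vision camera frame (x right, y down, z forward) and depth in metres. `Intrinsics` and `backproject` are hypothetical names, and the intrinsic values in the example are made up for illustration.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class Intrinsics:
    """Pinhole camera parameters, in pixels (illustrative values only)."""
    fx: float  # focal length along x
    fy: float  # focal length along y
    cx: float  # principal point x
    cy: float  # principal point y


def backproject(u: float, v: float, depth_m: float,
                k: Intrinsics) -> Tuple[float, float, float]:
    """Convert pixel (u, v) with a depth reading into a camera-space point."""
    if depth_m <= 0:
        raise ValueError("depth must be positive; zero often marks a missing reading")
    x = (u - k.cx) * depth_m / k.fx
    y = (v - k.cy) * depth_m / k.fy
    return (x, y, depth_m)


# Assumed 640x480 sensor with the principal point at the image centre.
k = Intrinsics(fx=500.0, fy=500.0, cx=320.0, cy=240.0)
print(backproject(320, 240, 2.0, k))  # a pixel at the image centre
print(backproject(570, 240, 2.0, k))  # a pixel 250 px to the right of centre
```

Applying this to every valid pixel of a depth image yields a point cloud, which is the raw material for the meshes and planes that environmental understanding produces.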

Use Cases: Practical Applications for Each Technology

Environmental understanding enables augmented reality systems to map and interpret entire surroundings, facilitating applications such as indoor navigation, spatial mapping for architecture, and immersive gaming experiences. Object recognition focuses on identifying specific items within a scene, empowering use cases like retail product identification, industrial equipment maintenance, and real-time translation of text on surfaces. Combining both technologies enhances AR applications in scenarios like interactive museum exhibits and automated quality control in manufacturing.

Challenges in Environmental Mapping vs Object Identification

Environmental mapping in augmented reality faces challenges such as real-time processing of large-scale, dynamic environments and accurately capturing spatial relationships under varying lighting conditions. Object recognition struggles with identifying partially occluded or visually similar items due to limitations in training data diversity and sensor resolution. Both tasks demand advanced machine learning algorithms and robust sensor fusion to enhance accuracy and reliability in diverse real-world scenarios.

Integration of Both Approaches in Modern AR Systems

Modern augmented reality systems achieve enhanced spatial awareness by integrating environmental understanding and object recognition, enabling real-time mapping of surroundings alongside identification of specific objects. This fusion leverages simultaneous localization and mapping (SLAM) techniques with deep learning-based recognition models to create immersive and contextually relevant AR experiences. The combined data allows for precise interaction between digital content and physical environments, improving accuracy and user engagement in applications such as navigation, gaming, and industrial design.
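
As a rough sketch of what this fusion can look like in code, the example below takes the outputs of both subsystems (detected planes from mapping, labeled objects from a recognition model) and attaches each confidently recognized object to the surface it rests on. All class names, function names, and thresholds here are hypothetical and chosen for illustration. Planes are treated as infinite and their normals are assumed to be unit length.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

Vec3 = Tuple[float, float, float]


@dataclass(frozen=True)
class DetectedPlane:
    """Output of environmental understanding (hypothetical shape)."""
    plane_id: str
    point: Vec3   # any point on the surface
    normal: Vec3  # assumed unit length


@dataclass(frozen=True)
class RecognizedObject:
    """Output of object recognition (hypothetical shape)."""
    label: str
    confidence: float
    position: Vec3  # estimated 3D position in the same world frame


def distance_to_plane(p: Vec3, plane: DetectedPlane) -> float:
    """Perpendicular distance from point p to the plane."""
    offset = [p[i] - plane.point[i] for i in range(3)]
    return abs(sum(offset[i] * plane.normal[i] for i in range(3)))


def attach_to_plane(obj: RecognizedObject, planes: List[DetectedPlane],
                    max_distance: float = 0.10) -> Optional[str]:
    """Return the id of the nearest plane within max_distance metres, if any."""
    if not planes:
        return None
    best = min(planes, key=lambda pl: distance_to_plane(obj.position, pl))
    if distance_to_plane(obj.position, best) > max_distance:
        return None
    return best.plane_id


def build_scene(objects: List[RecognizedObject], planes: List[DetectedPlane],
                min_confidence: float = 0.5) -> List[Tuple[str, Optional[str]]]:
    """Pair each confidently recognized object with its supporting surface."""
    return [(o.label, attach_to_plane(o, planes))
            for o in objects if o.confidence >= min_confidence]


planes = [
    DetectedPlane("floor", (0.0, 0.0, 0.0), (0.0, 1.0, 0.0)),
    DetectedPlane("table", (0.0, 0.75, -1.0), (0.0, 1.0, 0.0)),
]
objects = [
    RecognizedObject("mug", 0.90, (0.2, 0.78, -1.0)),
    RecognizedObject("chair", 0.80, (1.0, 0.05, -2.0)),
    RecognizedObject("poster", 0.30, (0.0, 1.50, -3.0)),  # dropped: low confidence
]
print(build_scene(objects, planes))
```

Knowing that the mug sits on the table rather than merely appearing in the frame is what lets an application place a label beside it on the tabletop or occlude virtual content behind it.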

Advancements and Future Trends in AR Perception

Advancements in augmented reality (AR) perception have enhanced environmental understanding by integrating spatial mapping, light estimation, and scene geometry analysis, enabling more immersive and context-aware experiences. Object recognition technology is evolving through deep learning algorithms and 3D model integration, improving accuracy and real-time interaction with complex objects. Future trends emphasize combining these capabilities to achieve seamless AR environments that adapt dynamically to both the physical surroundings and recognized objects for enhanced user engagement.

Choosing the Right Approach for Your AR Application

Environmental understanding lets an AR application map physical spaces precisely by recognizing surfaces and spatial relationships. Object recognition identifies and tracks specific items within that space, which is essential for context-aware interactions and real-time data display. The right approach depends on your application's goals: prioritize environmental understanding when virtual content must sit convincingly in the space, prioritize object recognition when specific items must trigger content, and combine the two when both matter.

Environmental Understanding vs Object Recognition Infographic

techiny.com
