On-device rendering in augmented reality offers low latency and enhanced privacy by processing data directly on the user's device, but it may be limited by hardware capabilities and battery life. Edge rendering offloads complex computations to nearby servers, enabling higher-quality graphics and real-time interactions without taxing the device, though it depends on reliable and fast network connections. Balancing these approaches is crucial for delivering seamless and immersive AR experiences across various use cases.

Comparison Table

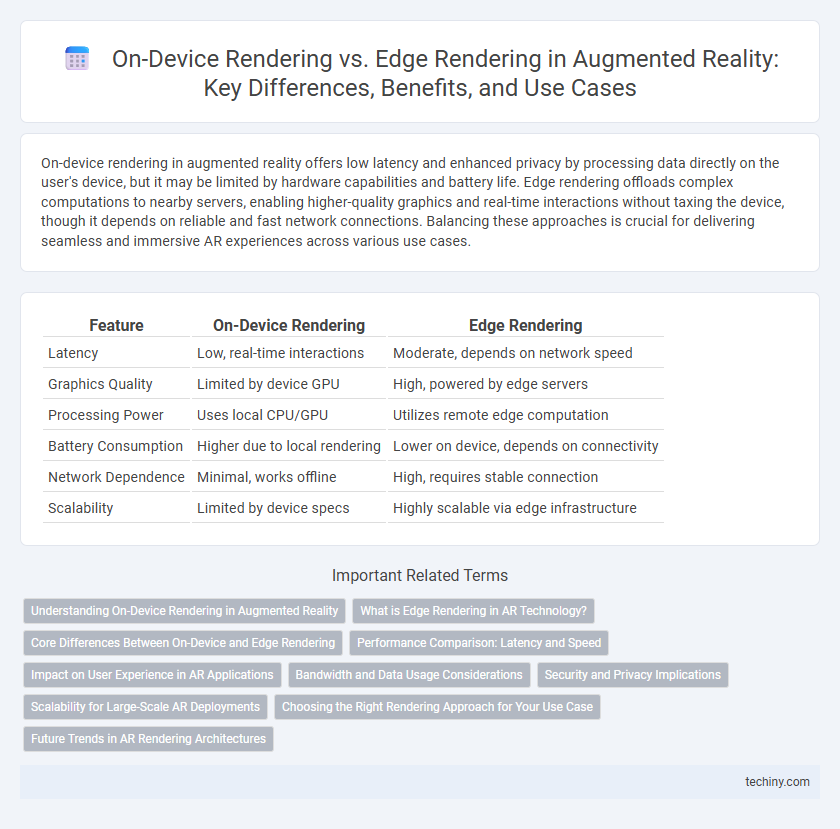

| Feature | On-Device Rendering | Edge Rendering |
|---|---|---|
| Latency | Low, real-time interactions | Moderate, depends on network speed |
| Graphics Quality | Limited by device GPU | High, powered by edge servers |
| Processing Power | Uses local CPU/GPU | Utilizes remote edge computation |
| Battery Consumption | Higher due to local rendering | Lower on device, depends on connectivity |
| Network Dependence | Minimal, works offline | High, requires stable connection |
| Scalability | Limited by device specs | Highly scalable via edge infrastructure |

Understanding On-Device Rendering in Augmented Reality

On-device rendering in augmented reality processes and generates graphics directly on the user's device, such as AR glasses or a smartphone, which minimizes latency and keeps interaction real-time. The device's own GPU and CPU handle 3D models, animations, and environmental mapping without relying on network connectivity. This makes on-device rendering well suited to AR experiences that prioritize responsiveness, privacy, and offline use.

What is Edge Rendering in AR Technology?

Edge rendering in augmented reality (AR) offloads computationally heavy tasks to edge servers located near the user instead of relying solely on the AR device's local hardware. These servers handle graphics rendering, data processing, and AI workloads and send the results back to the device. Because edge nodes sit much closer to the user than a distant cloud data center, round-trip latency stays low enough for many interactive uses, although it is still higher than purely local rendering. Edge rendering improves visual fidelity and can keep complex AR scenes responsive without overburdening the device's processor and battery.

Core Differences Between On-Device and Edge Rendering

On-device rendering processes augmented reality visuals directly on the user's device, offering low latency and offline functionality, but it is limited by hardware performance and battery life. Edge rendering offloads computationally intensive tasks to nearby edge servers, enabling higher-quality graphics and more complex interactions, but it depends on fast, stable network connections to keep latency low. The core difference is a trade-off: local autonomy under tight resource constraints versus greater remote processing power at the cost of dependence on network infrastructure.

Performance Comparison: Latency and Speed

On-device rendering in augmented reality minimizes latency by processing data locally, giving the fast, predictable response times that real-time interaction and immersion depend on. Edge rendering offloads computation to nearby servers, which reduces the device's workload but adds network transit time that varies with link speed, congestion, and distance to the edge node. In general, on-device rendering delivers more consistent low latency and instant feedback, while edge rendering scales to complex AR applications with higher computational demands at the cost of occasional latency fluctuations.
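To make the latency comparison concrete, the sketch below adds up the per-frame delay of each path and checks it against a latency budget. It is a minimal illustrative model, assuming an edge-rendered frame passes through five stages (pose uplink, server render, encode, downlink, decode) plus optional network jitter. The class name `EdgeLatency`, the 20 ms default budget, and all numbers are hypothetical, not measurements.

```python
from dataclasses import dataclass


@dataclass
class EdgeLatency:
    """Per-frame latency components (ms) for edge rendering. Illustrative model only."""
    uplink_ms: float         # pose/sensor data sent to the edge server
    server_render_ms: float  # rendering on the edge GPU
    encode_ms: float         # video encoding of the rendered frame
    downlink_ms: float       # encoded frame sent back to the device
    decode_ms: float         # decoding on the device before display
    jitter_ms: float = 0.0   # extra worst-case network delay

    def total_ms(self) -> float:
        return (self.uplink_ms + self.server_render_ms + self.encode_ms
                + self.downlink_ms + self.decode_ms + self.jitter_ms)


def within_budget(total_ms: float, budget_ms: float = 20.0) -> bool:
    """True if a frame's end-to-end latency fits the (assumed) budget."""
    return total_ms <= budget_ms


if __name__ == "__main__":
    on_device_ms = 11.0  # hypothetical local render time
    edge = EdgeLatency(2.0, 4.0, 3.0, 5.0, 3.0)
    congested = EdgeLatency(2.0, 4.0, 3.0, 5.0, 3.0, jitter_ms=8.0)
    for label, ms in [("on-device", on_device_ms),
                      ("edge", edge.total_ms()),
                      ("edge + jitter", congested.total_ms())]:
        print(f"{label:14s} {ms:5.1f} ms  within budget: {within_budget(ms)}")
```

With these illustrative figures the edge path totals 17 ms and fits the assumed budget, but 8 ms of jitter pushes it to 25 ms and over the budget, while the 11 ms local path is unaffected by the network. That variability, rather than the average, is often what separates the two approaches.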

Impact on User Experience in AR Applications

On-device rendering in augmented reality (AR) applications processes graphics locally, and the resulting low latency supports real-time interaction and reduces the risk of motion sickness. Edge rendering uses nearby, more powerful servers for complex computations, enabling high-fidelity visuals while reducing the device's rendering load, but network latency can reduce responsiveness. Balancing on-device and edge rendering is key to a seamless user experience with minimal visual lag in AR environments.

Bandwidth and Data Usage Considerations

On-device rendering keeps bandwidth use low because AR visuals are generated on the user's device, so no rendered frames need to cross the network. Edge rendering offloads rendering to nearby edge servers, which can lower device power usage but requires a continuous stream of rendered frames to the device, plus pose and sensor data sent upstream. That stream raises data transfer demands, and on a congested or limited link it can increase latency and strain available bandwidth. Choosing between the two approaches means balancing data usage constraints against performance needs, especially in bandwidth-limited environments.
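A rough way to size the downstream video that edge rendering adds is to compute the uncompressed bitrate of the rendered frames and divide by a codec compression ratio. The sketch below assumes a single 1080p view at 60 fps, 24 bits per pixel, and a 200:1 compression ratio; all of these are illustrative assumptions (a stereo headset would roughly double the pixel count), and real encoders vary widely with content and settings.

```python
def raw_bitrate_mbps(width: int, height: int, fps: float, bits_per_pixel: int = 24) -> float:
    """Uncompressed video bitrate in megabits per second (decimal units)."""
    return width * height * bits_per_pixel * fps / 1_000_000


def streamed_bitrate_mbps(raw_mbps: float, compression_ratio: float) -> float:
    """Bitrate after video encoding, given an assumed compression ratio."""
    return raw_mbps / compression_ratio


def data_per_hour_gb(mbps: float) -> float:
    """Data transferred in one hour at a constant bitrate, in gigabytes."""
    # Mbit/s * 3600 s -> Mbit; / 8 -> MB; / 1000 -> GB
    return mbps * 3600 / 8 / 1000


if __name__ == "__main__":
    # Hypothetical single 1080p view at 60 fps with an assumed 200:1 codec ratio.
    raw = raw_bitrate_mbps(1920, 1080, 60)
    streamed = streamed_bitrate_mbps(raw, compression_ratio=200)
    print(f"raw: {raw:.0f} Mbit/s, streamed: {streamed:.1f} Mbit/s, "
          f"per hour: {data_per_hour_gb(streamed):.1f} GB")
```

Under these assumptions the raw stream is about 2986 Mbit/s, the encoded stream about 14.9 Mbit/s, and one hour of use moves roughly 6.7 GB, traffic that a purely on-device renderer does not generate.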

Security and Privacy Implications

On-device rendering in augmented reality strengthens security by processing data locally, which limits exposure to external networks and reduces the risk of data interception or breaches. Edge rendering provides more computational power, but it transmits sensitive user data, such as sensor and tracking data, to nearby servers, which widens the attack surface for network attacks and unauthorized access. On privacy, on-device rendering has the advantage because it limits the sharing of personal information, whereas edge rendering requires robust encryption in transit and strict access controls on the servers to protect user data.

Scalability for Large-Scale AR Deployments

On-device rendering lets each AR device work independently and keeps latency low, but every device is limited to the scene complexity its own hardware can handle, which becomes a constraint in large-scale deployments with rich content. Edge rendering offloads intensive computation to nearby edge servers, improving scalability by distributing workloads across shared infrastructure that can serve many simultaneous AR users. The choice depends on balancing real-time performance needs against the infrastructure capacity that expansive AR environments require.
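Edge capacity planning can start from a back-of-the-envelope estimate: how much GPU time each user consumes per second, and therefore how many users one GPU can serve. The sketch below is a simplified model, assuming GPU time per frame is fixed per user, scales linearly with the number of users, and is kept under a hypothetical 80% utilization target; it ignores encoding, memory, and network limits, and the function names are illustrative.

```python
import math


def users_per_gpu(fps: float, gpu_ms_per_frame: float, target_utilization: float = 0.8) -> int:
    """Concurrent users one GPU can render for, under a linear-cost assumption."""
    gpu_ms_per_user_per_second = fps * gpu_ms_per_frame
    usable_ms_per_second = 1000.0 * target_utilization
    return math.floor(usable_ms_per_second / gpu_ms_per_user_per_second)


def servers_needed(concurrent_users: int, per_gpu: int, gpus_per_server: int) -> int:
    """Edge servers required to host all users (rounded up)."""
    if per_gpu < 1:
        raise ValueError("a single user exceeds one GPU's budget")
    return math.ceil(concurrent_users / (per_gpu * gpus_per_server))


if __name__ == "__main__":
    # Hypothetical workload: 60 fps, 4 ms of edge GPU time per frame, 4 GPUs per server.
    per_gpu = users_per_gpu(fps=60, gpu_ms_per_frame=4.0)
    print(f"users per GPU: {per_gpu}")
    print(f"servers for 500 users: {servers_needed(500, per_gpu, gpus_per_server=4)}")
```

With these placeholder numbers each user costs 240 ms of GPU time per second, so one GPU serves 3 users and 500 concurrent users need 42 four-GPU servers. On-device rendering avoids this server cost entirely, but caps scene complexity at what each device can render.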

Choosing the Right Rendering Approach for Your Use Case

On-device rendering offers low latency and offline functionality, making it a good fit for AR applications that need real-time interaction and strong privacy. Edge rendering uses powerful external servers to handle complex graphics, reducing the device's processing load and enabling high-quality visuals for resource-intensive AR experiences. The right approach depends on the use case's latency tolerance, network reliability, graphical complexity, and target device capabilities.
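The selection factors above can be encoded as a simple rule-based helper. This is a hypothetical sketch, not an established method: the `UseCase` fields, the relative "cost" and "capacity" scores, and the 99% uptime threshold are all assumptions chosen for illustration. "Hybrid" here means rendering latency-critical elements locally while streaming heavier content from the edge.

```python
from dataclasses import dataclass
from enum import Enum


class Strategy(Enum):
    ON_DEVICE = "on-device"
    EDGE = "edge"
    HYBRID = "hybrid"


@dataclass
class UseCase:
    latency_tolerance_ms: float   # max acceptable end-to-end latency
    network_rtt_ms: float         # typical round trip to the nearest edge node
    network_uptime: float         # fraction of session time with a usable link (0-1)
    scene_cost: float             # relative rendering cost of the content
    device_capacity: float        # relative rendering capacity of the target device
    must_work_offline: bool = False


def choose_strategy(uc: UseCase, min_uptime: float = 0.99) -> Strategy:
    fits_on_device = uc.scene_cost <= uc.device_capacity
    if uc.must_work_offline or uc.network_uptime < min_uptime:
        # Edge rendering is unusable without a dependable link; content that
        # does not fit must be simplified to run locally.
        return Strategy.ON_DEVICE
    if fits_on_device:
        # Local rendering is fast enough: keep the latency and privacy benefits.
        return Strategy.ON_DEVICE
    if uc.network_rtt_ms < uc.latency_tolerance_ms:
        # Content is too heavy for the device, and the network is fast enough.
        return Strategy.EDGE
    # Too heavy for the device and too slow for full edge rendering:
    # split the work between device and edge.
    return Strategy.HYBRID


if __name__ == "__main__":
    print(choose_strategy(UseCase(20.0, 10.0, 0.999, scene_cost=3.0, device_capacity=1.0)))
    print(choose_strategy(UseCase(20.0, 10.0, 0.9, scene_cost=3.0, device_capacity=1.0)))
```

The rules are ordered so that hard constraints (offline use, unreliable networks) are checked first, and edge rendering is chosen only when the device genuinely cannot handle the content.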

Future Trends in AR Rendering Architectures

On-device rendering in augmented reality prioritizes low latency and user privacy by processing data locally on AR hardware, while edge rendering uses powerful servers located at the network edge, close to the user, to raise graphical fidelity and ease device constraints. A likely direction is hybrid AR rendering architectures that dynamically allocate workloads between on-device GPUs and edge servers based on network conditions, energy efficiency, and application complexity. Advances in 5G connectivity, AI-driven optimization, and decentralized computing infrastructure are expected to make this integration smoother, supporting more realistic AR experiences with minimal latency.
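Dynamic workload allocation in a hybrid architecture can be illustrated with a small runtime scheduler that decides, frame by frame, whether to render locally or on the edge. This is a hypothetical sketch: it assumes the client can measure round-trip time (RTT) and battery level, smooths RTT with an exponentially weighted moving average, and uses a hysteresis margin so the renderer does not flip back and forth on small fluctuations. The class name and all thresholds are illustrative.

```python
from typing import Optional


class HybridScheduler:
    """Chooses where to render from smoothed network RTT and battery level.
    All thresholds are hypothetical."""

    def __init__(self, rtt_budget_ms: float = 20.0, hysteresis_ms: float = 5.0,
                 alpha: float = 0.2, low_battery: float = 0.2):
        self.rtt_budget_ms = rtt_budget_ms
        self.hysteresis_ms = hysteresis_ms
        self.alpha = alpha              # weight of the newest RTT sample
        self.low_battery = low_battery  # battery fraction treated as "low"
        self.smoothed_rtt_ms: Optional[float] = None
        self.use_edge = False

    def observe_rtt(self, rtt_ms: float) -> None:
        # Exponentially weighted moving average damps single-sample spikes.
        if self.smoothed_rtt_ms is None:
            self.smoothed_rtt_ms = rtt_ms
        else:
            self.smoothed_rtt_ms = (self.alpha * rtt_ms
                                    + (1 - self.alpha) * self.smoothed_rtt_ms)

    def decide(self, battery_level: float) -> str:
        rtt = self.smoothed_rtt_ms
        if rtt is None:
            self.use_edge = False  # no measurements yet: stay local
        elif self.use_edge:
            # Leave the edge only once RTT exceeds the budget by the margin.
            if rtt > self.rtt_budget_ms + self.hysteresis_ms:
                self.use_edge = False
        elif (rtt < self.rtt_budget_ms - self.hysteresis_ms
              or (battery_level < self.low_battery and rtt <= self.rtt_budget_ms)):
            # Move to the edge when RTT is comfortably low, or when the battery
            # is low and RTT is at least within budget.
            self.use_edge = True
        return "edge" if self.use_edge else "on-device"


if __name__ == "__main__":
    sched = HybridScheduler()
    for rtt in [10.0, 22.0, 40.0, 40.0, 40.0]:
        sched.observe_rtt(rtt)
        print(f"rtt sample {rtt:4.1f} ms -> {sched.decide(battery_level=0.8)}")
```

In this simulated run the scheduler moves to the edge after a fast first sample, rides out a single slower sample, and falls back to on-device rendering only after sustained high RTT. A production system would likely weigh more signals (thermal state, scene complexity, server load), but the smoothing and hysteresis pattern stays the same.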

On-device rendering vs Edge rendering Infographic

techiny.com