Plane detection in augmented reality identifies flat surfaces such as floors and tables, enabling virtual objects to be anchored realistically within a physical environment. Image recognition, on the other hand, detects and tracks specific images or patterns, allowing digital content to be overlaid precisely on printed materials or objects. Both technologies enhance user interaction by providing spatial awareness and contextual information in AR applications.

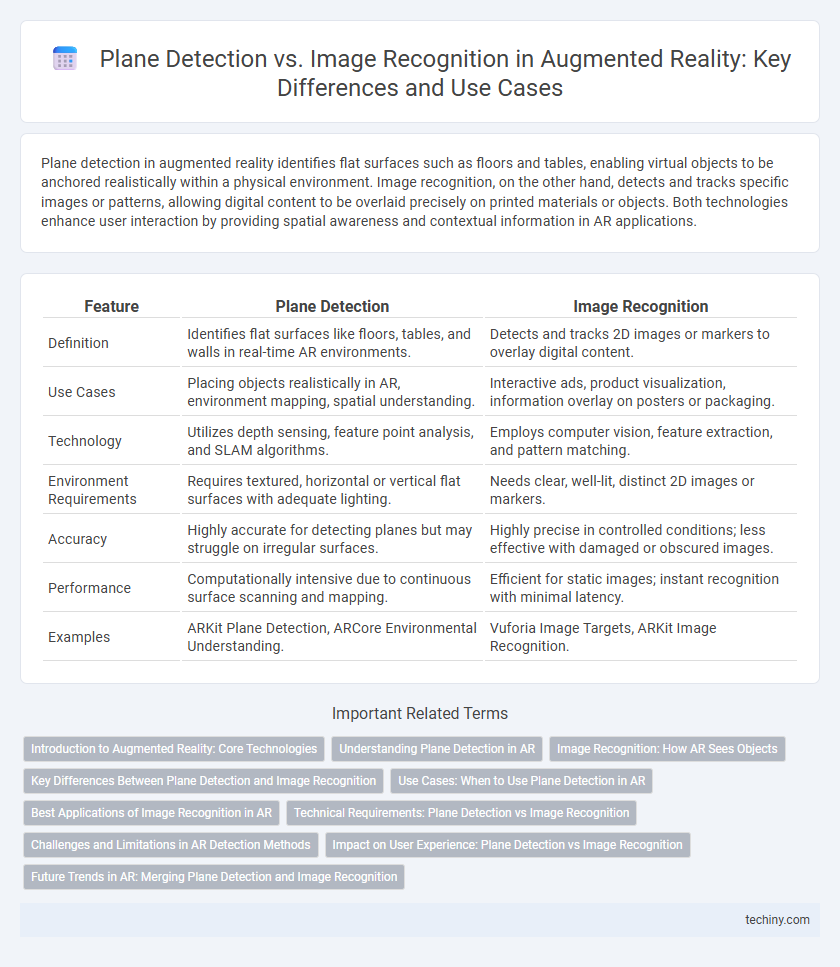

Table of Comparison

| Feature | Plane Detection | Image Recognition |
|---|---|---|
| Definition | Identifies flat surfaces such as floors, tables, and walls in the live AR environment. | Detects and tracks known 2D images or markers so that digital content can be overlaid on them. |
| Use Cases | Realistic object placement, environment mapping, spatial understanding. | Interactive ads, product visualization, information overlays on posters or packaging. |
| Technology | Feature point analysis combined with SLAM (simultaneous localization and mapping); depth sensors improve results where available. | Computer vision: feature extraction and matching against stored reference images. |
| Environment Requirements | Textured horizontal or vertical flat surfaces with adequate lighting. | Clear, well-lit, visually distinctive 2D images or markers. |
| Accuracy | Reliable on well-textured flat surfaces; struggles with irregular or textureless surfaces. | Precise under controlled conditions; less reliable when images are damaged, obscured, or viewed from steep angles. |
| Performance | Computationally intensive, because the scene is scanned and mapped continuously. | Comparatively lightweight for a small set of static reference images; recognition is typically fast once targets are registered. |
| Examples | ARKit plane detection, ARCore environmental understanding. | Vuforia Image Targets, ARKit image detection and tracking, ARCore Augmented Images. |

Introduction to Augmented Reality: Core Technologies

Augmented reality relies on a few core technologies to understand the physical world. Plane detection identifies flat surfaces such as floors and tables so that virtual objects can be placed precisely in 3D space. Image recognition detects and tracks specific images or patterns so that digital content stays aligned with real-world visuals. Together, they are core components of AR systems, giving applications spatial awareness and enabling interactive experiences.

Understanding Plane Detection in AR

Plane detection in augmented reality (AR) identifies flat surfaces such as floors, tables, or walls in the user's environment so that virtual objects can be anchored to them. On most mobile devices, this works by tracking visual feature points across camera frames, combining them with motion-sensor data to estimate their 3D positions, and fitting planes to clusters of points that lie on a common surface; depth sensors, where present, make this faster and more robust. Unlike image recognition, which detects and responds to specific visual targets, plane detection provides the foundational spatial understanding that immersive AR experiences depend on.
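
The plane-fitting step can be illustrated with RANSAC (random sample consensus), a standard robust-fitting technique. This is a minimal sketch of one common approach, not the internals of ARKit, ARCore, or any other framework. It assumes the feature points have already been triangulated into 3D world coordinates in meters; the function name `fit_plane_ransac`, the 1 cm inlier threshold, and the iteration count are illustrative assumptions.

```python
import random
from typing import List, Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]
Plane = Tuple[Vec3, float]  # (unit normal n, offset d) for the plane n·p + d = 0


def _sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def _dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def fit_plane_ransac(points: Sequence[Vec3], iterations: int = 200,
                     threshold: float = 0.01,
                     seed: int = 0) -> Optional[Tuple[Plane, List[int]]]:
    """Fit one dominant plane to 3D feature points with RANSAC.

    Returns ((normal, d), inlier_indices), or None if no valid plane was found.
    `threshold` is the maximum point-to-plane distance (meters) for an inlier.
    """
    pts = list(points)
    if len(pts) < 3:
        return None
    rng = random.Random(seed)
    best_plane: Optional[Plane] = None
    best_inliers: List[int] = []
    for _ in range(iterations):
        # 1. Hypothesize a plane through three randomly chosen points.
        p1, p2, p3 = rng.sample(pts, 3)
        n = _cross(_sub(p2, p1), _sub(p3, p1))
        length = _dot(n, n) ** 0.5
        if length < 1e-9:  # the three points are (nearly) collinear
            continue
        n = (n[0] / length, n[1] / length, n[2] / length)
        d = -_dot(n, p1)
        # 2. Count the points lying within `threshold` of the hypothesis.
        inliers = [i for i, p in enumerate(pts) if abs(_dot(n, p) + d) < threshold]
        # 3. Keep the hypothesis with the most support.
        if len(inliers) > len(best_inliers):
            best_plane, best_inliers = (n, d), inliers
    if best_plane is None:
        return None
    return best_plane, best_inliers
```

A fuller pipeline would typically refine the winning plane with a least-squares fit over its inliers, remove those points, and repeat to find additional surfaces such as a tabletop above the floor.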

Image Recognition: How AR Sees Objects

Image recognition in augmented reality (AR) uses computer vision algorithms to identify known images or objects by analyzing their visual features, such as distinctive shapes, edges, and textures. Once a target is recognized, the AR system can overlay relevant digital content precisely on it, giving the user context-aware information. Unlike plane detection, which finds flat surfaces for placing content, image recognition focuses on identifying and responding to specific targets within the environment, enabling more dynamic and immersive AR experiences.
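
The feature-matching idea behind image recognition can be sketched as follows. Frameworks such as Vuforia, ARKit, and ARCore handle this internally, so this is only an illustration under stated assumptions: the camera frame and the reference image are assumed to have already been reduced to binary feature descriptors (such as the 256-bit descriptors produced by ORB), stored here as Python ints. Nearest-neighbour search with a ratio test is a common matching strategy; the 0.8 ratio, the `min_matches` decision rule, and all function names are hypothetical.

```python
from typing import List, Sequence, Tuple


def hamming(a: int, b: int) -> int:
    """Number of differing bits between two binary descriptors stored as ints."""
    return bin(a ^ b).count("1")


def match_descriptors(query: Sequence[int], reference: Sequence[int],
                      ratio: float = 0.8) -> List[Tuple[int, int]]:
    """Match camera-frame descriptors against a reference image's descriptors.

    Returns (query_index, reference_index) pairs whose nearest neighbour is
    clearly closer than the second-nearest (ratio test); ambiguous matches
    are discarded.
    """
    matches: List[Tuple[int, int]] = []
    if len(reference) < 2:
        return matches
    for qi, q in enumerate(query):
        # Rank every reference descriptor by Hamming distance to this one.
        ranked = sorted((hamming(q, r), ri) for ri, r in enumerate(reference))
        (best_dist, best_idx), (second_dist, _) = ranked[0], ranked[1]
        if best_dist < ratio * second_dist:
            matches.append((qi, best_idx))
    return matches


def target_detected(query: Sequence[int], reference: Sequence[int],
                    min_matches: int = 10) -> bool:
    """Hypothetical decision rule: report the target once enough matches agree."""
    return len(match_descriptors(query, reference)) >= min_matches
```

Real pipelines usually also verify the surviving matches geometrically, for example by fitting a homography with RANSAC, before reporting a detection; that step is omitted here.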

Key Differences Between Plane Detection and Image Recognition

Plane detection identifies flat surfaces in the real world so that virtual objects can be placed in context, relying on spatial mapping and, where available, depth sensing. Image recognition analyzes visual features in the environment to detect and track specific images or markers for interaction and content overlay. The key difference is focus: plane detection is concerned with spatial geometry and understanding the environment, while image recognition centers on identifying patterns and supporting marker-based experiences.
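
One way to see this difference is in what each technique hands back to the application. The types below are hypothetical and are not any framework's API; they are only loosely modeled on the kind of information plane and image results typically carry. A plane result describes geometry, while an image result mainly says which known target was matched and where.

```python
from dataclasses import dataclass
from typing import Tuple

Vec3 = Tuple[float, float, float]


@dataclass(frozen=True)
class DetectedPlane:
    """Geometry-centric result: where a flat surface is and how large it is."""
    center: Vec3       # world-space center of the surface estimate (meters)
    extent_x: float    # estimated width of the surface (meters)
    extent_z: float    # estimated depth of the surface (meters)
    alignment: str     # "horizontal" or "vertical"

    def area(self) -> float:
        return self.extent_x * self.extent_z

    def can_fit(self, width: float, depth: float) -> bool:
        """Would an object with this footprint fit inside the detected extent?"""
        return width <= self.extent_x and depth <= self.extent_z


@dataclass(frozen=True)
class DetectedImage:
    """Identity-centric result: which known reference image was found, and where."""
    reference_name: str      # name of the matched reference image
    physical_width_m: float  # real-world printed width of the reference image
    position: Vec3           # world-space position of the image center
```

For example, `DetectedPlane((0.0, 0.75, -1.0), 2.0, 1.5, "horizontal").can_fit(1.0, 1.0)` asks whether a 1 m by 1 m object fits on a detected tabletop, a question an image result cannot answer on its own.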

Use Cases: When to Use Plane Detection in AR

Plane detection excels when an application needs to find and interact with flat surfaces, for example placing virtual furniture in an interior design app or displaying AR navigation cues on floors and tables. By mapping horizontal and vertical planes, it gives the app the spatial awareness needed for realistic object placement and immersive user experiences. Choose plane detection when stable, consistent interaction with surfaces is crucial; image recognition is the better fit for target-specific or marker-based AR applications.
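
Placing virtual furniture usually comes down to a hit test: casting a ray from the camera through the point the user tapped and finding where it meets a detected plane. ARKit and ARCore provide their own raycasting APIs for this; the sketch below shows only the underlying ray-plane intersection math. The function name `raycast_plane` and the coordinate convention (y axis up, units in meters) are assumptions.

```python
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


def _dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def raycast_plane(origin: Vec3, direction: Vec3, plane_point: Vec3,
                  plane_normal: Vec3, eps: float = 1e-9) -> Optional[Vec3]:
    """Intersect a ray from the camera with an (infinite) detected plane.

    Solves origin + t * direction for the point p satisfying
    plane_normal · (p - plane_point) = 0. Returns None if the ray is
    parallel to the plane or the plane lies behind the camera.
    """
    denom = _dot(plane_normal, direction)
    if abs(denom) < eps:  # ray runs parallel to the surface
        return None
    offset = (plane_point[0] - origin[0],
              plane_point[1] - origin[1],
              plane_point[2] - origin[2])
    t = _dot(plane_normal, offset) / denom
    if t < 0:  # intersection is behind the camera
        return None
    return (origin[0] + t * direction[0],
            origin[1] + t * direction[1],
            origin[2] + t * direction[2])
```

With the camera held 1.5 m above the floor and aimed down and forward, `raycast_plane((0.0, 1.5, 0.0), (0.0, -1.0, -1.0), (0.0, 0.0, 0.0), (0.0, 1.0, 0.0))` returns `(0.0, 0.0, -1.5)`, the floor point where a model would be anchored. This sketch treats the plane as infinite; a real app would also check that the hit falls within the plane's detected extent.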

Best Applications of Image Recognition in AR

Image recognition excels in applications that require precise interaction with specific objects. In retail, it enables virtual try-ons and product experiences by identifying clothing, accessories, or packaging. In education, it overlays detailed information on textbooks or historical artifacts, creating immersive learning environments. In maintenance and repair, it recognizes machinery parts and displays step-by-step instructions in real time.

Technical Requirements: Plane Detection vs Image Recognition

Plane detection relies on camera frames combined with motion data from accelerometers and gyroscopes, plus depth sensors where available, to map flat surfaces accurately and support object placement in 3D space. Image recognition depends mainly on clear camera images and robust computer vision algorithms to identify, track, and overlay digital content on 2D images in real time. Both benefit from tight hardware and software integration, but plane detection prioritizes spatial mapping accuracy, while image recognition emphasizes feature extraction and pattern matching.
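
The accelerometer's contribution can be made concrete: gravity supplies a reference "down" direction, which is what allows a detected plane to be labeled horizontal or vertical. Frameworks typically build this into a gravity-aligned world coordinate frame; the sketch below performs the comparison explicitly, assuming the plane normal and the gravity vector are expressed in the same coordinate frame. The function name `classify_plane` and the 10-degree tolerance are illustrative assumptions.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def classify_plane(normal: Vec3, gravity: Vec3, tolerance_deg: float = 10.0) -> str:
    """Label a plane 'horizontal', 'vertical', or 'other' relative to gravity.

    `gravity` is the accelerometer-derived gravity vector; only its direction
    matters. `tolerance_deg` is an assumed tolerance around the ideal angles.
    """
    dot = sum(n * g for n, g in zip(normal, gravity))
    n_len = math.sqrt(sum(n * n for n in normal))
    g_len = math.sqrt(sum(g * g for g in gravity))
    if n_len == 0.0 or g_len == 0.0:
        raise ValueError("normal and gravity must be non-zero vectors")
    # Angle between the plane normal and the vertical axis, folded into [0, 90].
    cos_angle = min(1.0, abs(dot) / (n_len * g_len))
    angle = math.degrees(math.acos(cos_angle))
    if angle <= tolerance_deg:
        return "horizontal"  # normal points up or down: floor, table, ceiling
    if angle >= 90.0 - tolerance_deg:
        return "vertical"  # normal is perpendicular to gravity: wall
    return "other"
```

Folding the angle with `abs` means a floor (normal pointing up) and a ceiling (normal pointing down) are both reported as horizontal; an app that needs to tell them apart would check the sign of the dot product instead.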

Challenges and Limitations in AR Detection Methods

Plane detection in augmented reality often struggles with accurately identifying flat surfaces in complex environments due to varied lighting conditions and textureless planes, leading to unreliable spatial mapping. Image recognition faces limitations in AR when dealing with dynamic scenes, occlusions, and changes in perspective, which reduce the robustness of marker-based tracking. Both methods are challenged by computational constraints on mobile devices, impacting real-time performance and power efficiency in AR applications.

Impact on User Experience: Plane Detection vs Image Recognition

Plane detection enables realistic object placement by identifying flat surfaces, so virtual content appears to sit naturally in the user's environment. Image recognition triggers AR content precisely when a specific visual marker is recognized, offering tailored, context-aware experiences. Combining both technologies improves immersion and usability by pairing spatial understanding with detailed, marker-based interactions.

Future Trends in AR: Merging Plane Detection and Image Recognition

Future trends in augmented reality emphasize the integration of plane detection and image recognition to create more immersive and interactive experiences. Merging these technologies enables AR systems to accurately map physical environments while recognizing objects and images within them, enhancing spatial awareness and contextual understanding. This fusion drives advancements in sectors like gaming, education, and industrial applications by enabling precise interaction between virtual content and real-world elements.

Plane Detection vs Image Recognition Infographic

techiny.com
