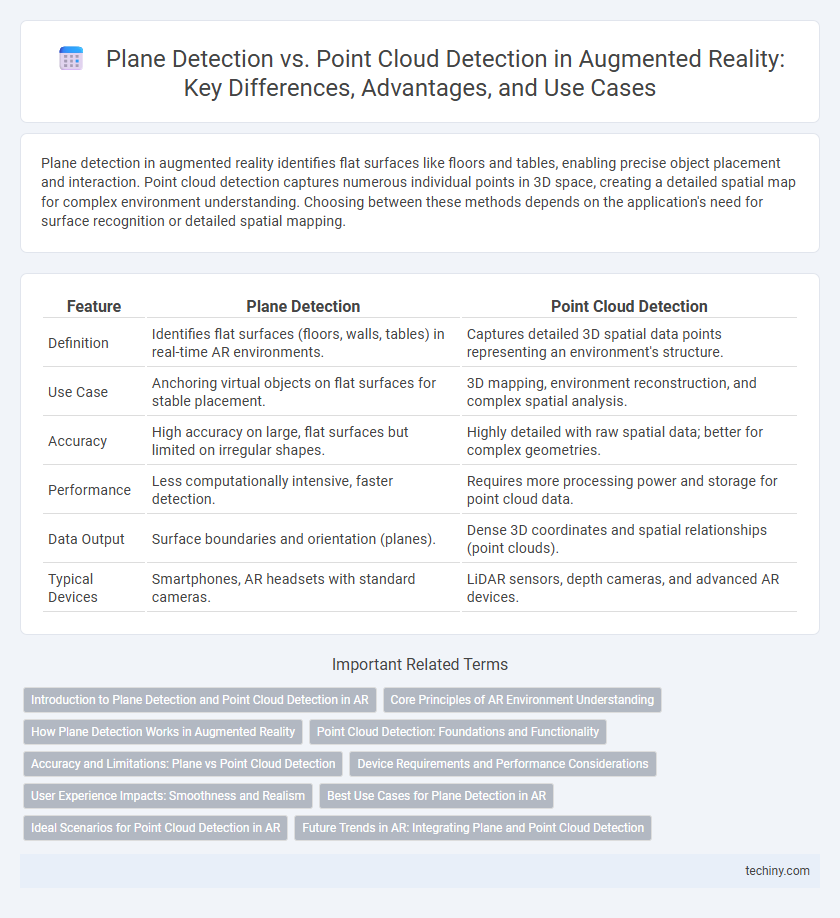

Plane detection in augmented reality identifies flat surfaces like floors and tables, enabling precise object placement and interaction. Point cloud detection captures numerous individual points in 3D space, creating a detailed spatial map for understanding complex environments. The right choice depends on whether an application needs surface recognition or detailed spatial mapping.

Comparison Table

| Feature | Plane Detection | Point Cloud Detection |
|---|---|---|
| Definition | Identifies flat surfaces (floors, walls, tables) in real-time AR environments. | Captures detailed 3D spatial data points representing an environment's structure. |
| Use Case | Anchoring virtual objects on flat surfaces for stable placement. | 3D mapping, environment reconstruction, and complex spatial analysis. |
| Accuracy | High on large, flat surfaces but limited on irregular shapes. | Highly detailed raw spatial data, though noisier; better suited to complex geometries. |
| Performance | Less computationally intensive; faster detection. | Requires more processing power and storage for point cloud data. |
| Data Output | Surface boundaries and orientation (planes). | Dense 3D coordinates and spatial relationships (point clouds). |
| Typical Devices | Smartphones and AR headsets with standard cameras. | LiDAR sensors, depth cameras, and advanced AR devices (for dense point clouds). |

Introduction to Plane Detection and Point Cloud Detection in AR

Plane detection in augmented reality (AR) identifies flat surfaces such as floors, walls, and tables by analyzing spatial data captured through device sensors, so that virtual objects can be anchored realistically within the environment. Point cloud detection captures a dense collection of individual 3D points representing the scene's geometry, offering a detailed spatial map for more complex interaction and object placement. Both techniques rely on computer vision and, where available, depth sensing to understand the environment: plane detection simplifies surface recognition, while point cloud detection provides greater spatial resolution for diverse AR applications.

Core Principles of AR Environment Understanding

Plane detection identifies flat surfaces such as floors, walls, and tables by analyzing clusters of points and fitting geometric planes to them, enabling realistic virtual object placement in augmented reality environments. Point cloud detection captures a dense set of spatial data points representing the physical environment's geometry without assuming predefined shapes, allowing detailed 3D mapping and object recognition. Both techniques rely on simultaneous localization and mapping (SLAM). They also use sensor fusion of camera data, inertial (IMU) data, and, where available, depth-sensor data to interpret the AR environment accurately.
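To make the plane-fitting step concrete, here is a minimal Python sketch that fits a plane to one cluster of points by ordinary least squares. It assumes a gravity-aligned world frame with y pointing up, so a floor or tabletop can be written as height y = a*x + b*z + c. The name `fit_plane_least_squares` is hypothetical and not part of any AR SDK. Vertical surfaces such as walls cannot be expressed in this form and would need a different parametrization.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def _det3(m: List[List[float]]) -> float:
    """Determinant of a 3x3 matrix by cofactor expansion along the first row."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def fit_plane_least_squares(points: Sequence[Point]) -> Tuple[float, float, float]:
    """Hypothetical helper: fit height y = a*x + b*z + c (y-up frame assumed).

    Returns (a, b, c). Raises ValueError if the cluster does not define a plane.
    """
    if len(points) < 3:
        raise ValueError("need at least three points")
    sxx = sxz = szz = sx = sz = sxy = szy = sy = 0.0
    for x, y, z in points:
        sxx += x * x
        sxz += x * z
        szz += z * z
        sx += x
        sz += z
        sxy += x * y
        szy += z * y
        sy += y
    n = float(len(points))
    # Normal equations of min sum (a*x + b*z + c - y)^2, written as m @ [a, b, c] = r.
    m = [[sxx, sxz, sx],
         [sxz, szz, sz],
         [sx, sz, n]]
    r = [sxy, szy, sy]
    det = _det3(m)
    if abs(det) < 1e-12:
        raise ValueError("degenerate cluster: points are collinear in the x-z plane")
    coeffs = []
    for col in range(3):
        # Cramer's rule: replace column `col` with the right-hand side.
        mc = [row[:] for row in m]
        for i in range(3):
            mc[i][col] = r[i]
        coeffs.append(_det3(mc) / det)
    return coeffs[0], coeffs[1], coeffs[2]
```

Least squares weights every point equally, so a few stray points can tilt the result. A fit like this is therefore usually applied only after outliers have been rejected.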

How Plane Detection Works in Augmented Reality

Plane detection in augmented reality identifies flat surfaces by tracking visual feature points across video frames and combining them with motion-sensor data. The system then looks for groups of feature points that lie on a common plane in the 3D environment and estimates that plane's orientation and boundaries. This process lets virtual objects be anchored accurately to real-world floors, tables, or walls, enhancing spatial awareness and interaction.
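A common general-purpose way to find feature points that share a plane is RANSAC. The method repeatedly picks three points, builds the plane through them, and keeps the plane that the most points agree with. The text above does not name a specific algorithm, so treat this Python sketch as one plausible technique rather than what any particular AR SDK does. The function `ransac_plane`, the 1 cm `threshold`, and the iteration count are hypothetical, illustrative choices.

```python
import math
import random
from typing import List, Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def _sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def _dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def ransac_plane(points: Sequence[Vec3], threshold: float = 0.01,
                 iterations: int = 200, seed: int = 0
                 ) -> Optional[Tuple[Vec3, float, List[int]]]:
    """Hypothetical helper: return (unit normal n, offset d, inlier indices) for the
    plane n.p + d = 0 that the most points lie within `threshold` of, or None."""
    pts = list(points)
    if len(pts) < 3:
        return None
    rng = random.Random(seed)  # seeded so results are reproducible
    best: Optional[Tuple[Vec3, float, List[int]]] = None
    for _ in range(iterations):
        p1, p2, p3 = rng.sample(pts, 3)
        n = _cross(_sub(p2, p1), _sub(p3, p1))
        length = math.sqrt(_dot(n, n))
        if length < 1e-9:
            continue  # the three samples are (nearly) collinear
        n = (n[0] / length, n[1] / length, n[2] / length)
        d = -_dot(n, p1)
        # Count points whose distance to the candidate plane is within the threshold.
        inliers = [i for i, p in enumerate(pts) if abs(_dot(n, p) + d) <= threshold]
        if best is None or len(inliers) > len(best[2]):
            best = (n, d, inliers)
    return best
```

A production pipeline would typically refine the winning plane with a fit over its inliers and then compute its boundary, for example as a convex hull of the inliers. Both steps are omitted here.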

Point Cloud Detection: Foundations and Functionality

Point cloud detection in augmented reality uses 3D spatial data, typically captured by depth sensors, to create highly detailed and accurate representations of the environment, enabling precise object recognition and interaction. Unlike plane detection, which identifies flat surfaces such as floors and tables, point cloud detection represents complex geometries by analyzing thousands to millions of data points, allowing for more flexible and dynamic AR experiences. This approach enhances scene understanding by mapping intricate spatial relationships, supporting advanced applications such as object occlusion, real-time environment scanning, and robust spatial anchoring.
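Dense point clouds from depth sensors are commonly built by back-projecting each depth pixel through the camera's pinhole intrinsics. This sketch makes several assumptions:

- the depth map is in metres and indexed `[row][column]`;
- the intrinsics `fx, fy, cx, cy` are given in pixels;
- the camera frame is OpenCV-style (x right, y down, z forward).

AR SDKs use their own axis conventions, so treat the frame as an assumption. The function name `depth_to_point_cloud` is hypothetical.

```python
import math
from typing import List, Sequence, Tuple


def depth_to_point_cloud(depth: Sequence[Sequence[float]],
                         fx: float, fy: float, cx: float, cy: float
                         ) -> List[Tuple[float, float, float]]:
    """Hypothetical helper: back-project a depth map into camera-space points.

    Pinhole model: x = (u - cx) * z / fx, y = (v - cy) * z / fy.
    Pixels without a valid (finite, positive) depth are skipped.
    """
    points = []
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            if not math.isfinite(z) or z <= 0.0:
                continue  # no usable depth measured at this pixel
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append((x, y, z))
    return points
```

Each valid pixel yields one point, so even a modest depth map produces tens of thousands of points per frame. This is why the density of point clouds adds up quickly.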

Accuracy and Limitations: Plane vs Point Cloud Detection

Plane detection in augmented reality offers precise identification of flat surfaces by analyzing geometric features, making it highly accurate for applications like furniture placement and navigation. In contrast, point cloud detection captures detailed spatial information through dense sets of 3D points, providing richer environmental context but often suffering from increased noise and computational complexity. Limitations of plane detection include difficulty handling irregular surfaces, while point cloud detection may struggle with real-time processing and requires advanced algorithms to filter out irrelevant data.
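One standard way to filter noise out of a point cloud is statistical outlier removal. The filter computes each point's mean distance to its k nearest neighbours and drops points whose mean distance is unusually large. Open3D exposes this idea as `PointCloud.remove_statistical_outlier(nb_neighbors, std_ratio)`. The brute-force Python version below is a hypothetical, O(n²) illustration for small clouds, not a substitute for a spatial index such as a k-d tree.

```python
import math
import statistics
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def remove_statistical_outliers(points: Sequence[Point], k: int = 8,
                                std_ratio: float = 2.0) -> List[Point]:
    """Hypothetical helper: drop points whose mean k-nearest-neighbour distance
    exceeds (global mean + std_ratio * standard deviation) of that statistic."""
    pts = list(points)
    if len(pts) <= k:
        return pts  # too few points to estimate neighbourhood statistics
    mean_knn = []
    for i, p in enumerate(pts):
        # Brute force O(n^2): fine for a sketch, too slow for real-time clouds.
        dists = sorted(math.dist(p, q) for j, q in enumerate(pts) if j != i)
        mean_knn.append(sum(dists[:k]) / k)
    mu = statistics.fmean(mean_knn)
    sigma = statistics.pstdev(mean_knn)
    limit = mu + std_ratio * sigma
    return [p for p, m in zip(pts, mean_knn) if m <= limit]
```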

Device Requirements and Performance Considerations

Plane detection in augmented reality works with the standard camera and motion sensors found on most modern smartphones. LiDAR or depth cameras, where present, make surface detection faster and more reliable, especially on surfaces with little visual texture. Point cloud detection processes numerous spatial points from depth sensors or stereo cameras and demands more processing power, often GPU acceleration, to handle dense data sets and real-time environment modeling. Devices with dedicated AR hardware, such as the iPad Pro with LiDAR, generally outperform standard smartphones in both detection accuracy and responsiveness. Sensor quality and processing speed are therefore critical factors for AR performance.
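A common way to keep dense point data manageable on constrained hardware is voxel-grid downsampling. It replaces all points inside each cube of side `voxel_size` with their centroid. The text does not prescribe a reduction method, so this is an illustrative assumption. Open3D offers the same operation as `voxel_down_sample`. The Python function `voxel_downsample` below is hypothetical.

```python
import math
from collections import defaultdict
from typing import DefaultDict, List, Sequence, Tuple

Point = Tuple[float, float, float]


def voxel_downsample(points: Sequence[Point], voxel_size: float) -> List[Point]:
    """Hypothetical helper: replace the points in each cubic voxel with their centroid."""
    if voxel_size <= 0:
        raise ValueError("voxel_size must be positive")
    # voxel index -> [sum_x, sum_y, sum_z, count]
    acc: DefaultDict[Tuple[int, int, int], List[float]] = defaultdict(
        lambda: [0.0, 0.0, 0.0, 0.0])
    for x, y, z in points:
        key = (math.floor(x / voxel_size),
               math.floor(y / voxel_size),
               math.floor(z / voxel_size))
        cell = acc[key]
        cell[0] += x
        cell[1] += y
        cell[2] += z
        cell[3] += 1
    return [(sx / n, sy / n, sz / n) for sx, sy, sz, n in acc.values()]
```

The voxel size trades detail for speed. A larger voxel yields fewer points and faster processing but blurs fine geometry.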

User Experience Impacts: Smoothness and Realism

Plane detection improves the user experience by providing stable, flat surfaces for virtual object placement, which keeps interactions smooth and realism consistent in augmented reality environments. Point cloud detection captures detailed spatial data of complex environments, enabling more precise object interactions, but its heavier processing demands can make smoothness less consistent. Combining plane detection's stability with point cloud detection's spatial detail helps balance realism and interaction fluidity.

Best Use Cases for Plane Detection in AR

Plane detection in augmented reality excels at identifying flat surfaces such as floors, tables, and walls, enabling precise placement of virtual objects for interior design, navigation, and gaming. It provides stable anchors for AR content, enhancing user interaction and spatial understanding in real-world environments. Compared to point cloud detection, plane detection is more efficient for applications requiring surface-based object alignment and environmental awareness.
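Placing a virtual object on a detected plane usually comes down to intersecting a ray with that plane, for example a ray from the camera through a tapped screen point. This Python sketch treats the plane as infinite and assumes a y-up frame. Real SDK planes also have finite extents, and platform APIs such as ARKit raycast queries (`ARRaycastQuery`) or ARCore's `Frame.hitTest` provide production hit testing. The name `hit_test_plane` is hypothetical.

```python
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


def _dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def hit_test_plane(ray_origin: Vec3, ray_dir: Vec3,
                   plane_point: Vec3, plane_normal: Vec3,
                   eps: float = 1e-9) -> Optional[Vec3]:
    """Hypothetical helper: intersect a ray with an infinite plane.

    Solves n . (o + t*d - p0) = 0 for t and returns the hit point, or None.
    """
    denom = _dot(plane_normal, ray_dir)
    if abs(denom) < eps:
        return None  # ray runs parallel to the plane
    to_plane = (plane_point[0] - ray_origin[0],
                plane_point[1] - ray_origin[1],
                plane_point[2] - ray_origin[2])
    t = _dot(plane_normal, to_plane) / denom
    if t < 0:
        return None  # intersection lies behind the ray origin
    return (ray_origin[0] + t * ray_dir[0],
            ray_origin[1] + t * ray_dir[1],
            ray_origin[2] + t * ray_dir[2])
```

For example, consider a camera 1.5 m above a floor plane through the origin with normal (0, 1, 0), looking along (0, -1, -1). Its ray meets the floor at (0, 0, -1.5), which is where the object would be anchored.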

Ideal Scenarios for Point Cloud Detection in AR

Point cloud detection in augmented reality excels in complex environments requiring precise spatial understanding, such as intricate architectural visualizations or industrial design applications. It captures detailed 3D data points, enabling accurate modeling of irregular surfaces and objects that plane detection cannot reliably interpret. This makes point cloud detection ideal for AR scenarios involving dynamic or cluttered scenes where depth accuracy is critical for user interaction and object placement.
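On irregular surfaces there is no plane to intersect. Placement can instead pick the nearest cloud point that lies close to the ray. This Python sketch assumes a hit tolerance `radius` in metres (2 cm by default, an arbitrary choice) and a brute-force scan over all points. The name `hit_test_point_cloud` is hypothetical, and a real system would use a spatial index instead.

```python
import math
from typing import Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def hit_test_point_cloud(points: Sequence[Vec3], ray_origin: Vec3, ray_dir: Vec3,
                         radius: float = 0.02) -> Optional[Vec3]:
    """Hypothetical helper: return the point closest along the ray among points
    within `radius` of the ray, or None if no point qualifies."""
    norm = math.sqrt(sum(c * c for c in ray_dir))
    if norm == 0:
        raise ValueError("ray direction must be non-zero")
    d = tuple(c / norm for c in ray_dir)
    best: Optional[Vec3] = None
    best_t = math.inf
    for p in points:
        v = (p[0] - ray_origin[0], p[1] - ray_origin[1], p[2] - ray_origin[2])
        t = v[0] * d[0] + v[1] * d[1] + v[2] * d[2]  # distance along the ray
        if t < 0:
            continue  # point is behind the ray origin
        perp_sq = (v[0] ** 2 + v[1] ** 2 + v[2] ** 2) - t * t  # squared distance to ray
        if perp_sq <= radius * radius and t < best_t:
            best_t, best = t, p
    return best
```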

Future Trends in AR: Integrating Plane and Point Cloud Detection

Future trends in augmented reality emphasize the integration of plane detection and point cloud detection to enhance spatial understanding and interaction accuracy. Combining these technologies enables seamless environment mapping, allowing AR applications to recognize flat surfaces and complex 3D structures simultaneously. This fusion drives advancements in immersive experiences, real-time object placement, and improved user engagement across diverse AR platforms.
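One simple way to combine the two representations is to use a detected plane to split a point cloud into two groups. One group holds on-plane points, such as the floor. The other holds residual points that describe other 3D structure. This Python sketch assumes the plane is given as a normal `normal` and `offset` d, so that n·p + d = 0 on the plane. The normal need not be unit length. The name `split_by_plane` and its 1 cm threshold are hypothetical, illustrative choices.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def split_by_plane(points: Sequence[Point], normal: Point, offset: float,
                   threshold: float = 0.01) -> Tuple[List[Point], List[Point]]:
    """Hypothetical helper: partition points into (on_plane, off_plane)
    for the plane n.p + d = 0."""
    length = math.sqrt(normal[0] ** 2 + normal[1] ** 2 + normal[2] ** 2)
    if length == 0:
        raise ValueError("normal must be non-zero")
    on_plane: List[Point] = []
    off_plane: List[Point] = []
    for p in points:
        # Point-to-plane distance; dividing by |n| lets non-unit normals work.
        dist = abs(normal[0] * p[0] + normal[1] * p[1] + normal[2] * p[2] + offset) / length
        (on_plane if dist <= threshold else off_plane).append(p)
    return on_plane, off_plane
```

The on-plane points can back a simple, stable surface anchor. The residual points keep the detail needed for irregular objects, occlusion, or further processing.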

Plane Detection vs Point Cloud Detection Infographic

techiny.com
