Environmental occlusion in augmented reality enhances realism by hiding virtual objects that sit behind real-world elements, so digital and physical content appear to share one space. Depth masking uses depth data, typically from depth sensors, to measure how far scene surfaces are from the camera, so virtual content can be layered by distance. Together, these techniques improve spatial awareness and immersion by ensuring virtual objects integrate properly with the surrounding environment.

Table of Comparison

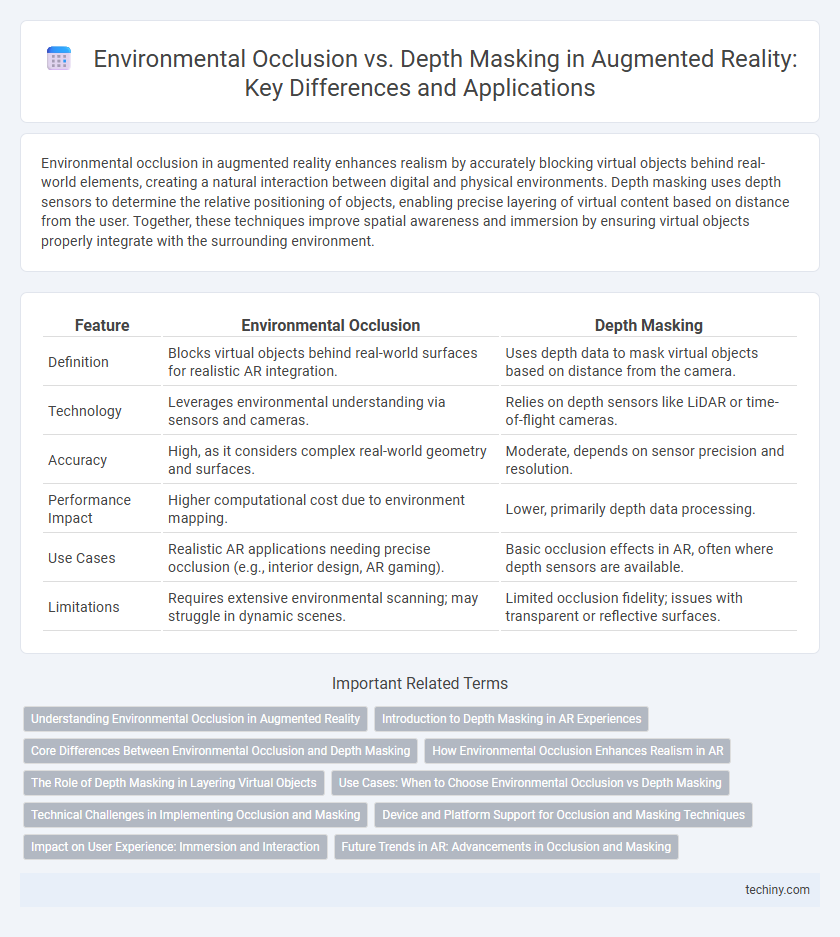

| Feature | Environmental Occlusion | Depth Masking |
|---|---|---|
| Definition | Blocks virtual objects behind real-world surfaces for realistic AR integration. | Uses depth data to mask virtual objects based on distance from the camera. |
| Technology | Leverages environmental understanding via sensors and cameras. | Relies on depth sensors like LiDAR or time-of-flight cameras. |
| Accuracy | High, as it considers complex real-world geometry and surfaces. | Moderate, depends on sensor precision and resolution. |
| Performance Impact | Higher computational cost due to environment mapping. | Lower, primarily depth data processing. |
| Use Cases | Realistic AR applications needing precise occlusion (e.g., interior design, AR gaming). | Basic occlusion effects in AR, often where depth sensors are available. |
| Limitations | Requires extensive environmental scanning; may struggle in dynamic scenes. | Limited occlusion fidelity; issues with transparent or reflective surfaces. |

Understanding Environmental Occlusion in Augmented Reality

Environmental occlusion in augmented reality hides virtual objects, fully or partially, when they are behind real-world elements, which strengthens immersion and depth perception. The technique relies on spatial mapping and a 3D understanding of the physical environment to detect the surfaces and objects that should obscure digital content. Compared to depth masking, environmental occlusion works from a mapped model of the environment rather than from raw depth readings alone, which allows more precise and convincing interactions between virtual and real objects once the scene has been scanned.
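One way to picture occlusion against mapped geometry is a visibility test between the camera and a virtual point. The sketch below assumes the spatial map is available as a list of triangles (a hypothetical `mesh` argument, not any framework's data type) and uses the standard Möller-Trumbore ray-triangle intersection. It illustrates the idea only; production engines usually render the mesh into the depth buffer instead of testing points one by one.

```python
from typing import Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]
Triangle = Tuple[Vec3, Vec3, Vec3]


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def ray_triangle_t(origin: Vec3, direction: Vec3, tri: Triangle,
                   eps: float = 1e-9) -> Optional[float]:
    """Moller-Trumbore: return t where origin + t * direction hits tri, else None."""
    v0, v1, v2 = tri
    edge1, edge2 = sub(v1, v0), sub(v2, v0)
    h = cross(direction, edge2)
    det = dot(edge1, h)
    if abs(det) < eps:          # ray is parallel to the triangle plane
        return None
    inv = 1.0 / det
    s = sub(origin, v0)
    u = inv * dot(s, h)
    if u < 0.0 or u > 1.0:
        return None
    q = cross(s, edge1)
    v = inv * dot(direction, q)
    if v < 0.0 or u + v > 1.0:
        return None
    t = inv * dot(edge2, q)
    return t if t > eps else None


def is_occluded(camera: Vec3, point: Vec3, mesh: Sequence[Triangle]) -> bool:
    """True if any mapped triangle lies between the camera and the virtual point."""
    direction = sub(point, camera)   # unnormalised, so t == 1.0 is the point itself
    for tri in mesh:
        t = ray_triangle_t(camera, direction, tri)
        if t is not None and t < 1.0:
            return True
    return False


# Illustrative scene: one wall triangle one metre in front of the camera.
wall = [((-1.0, -1.0, 1.0), (1.0, -1.0, 1.0), (0.0, 1.0, 1.0))]
camera = (0.0, 0.0, 0.0)
behind_wall = is_occluded(camera, (0.0, 0.0, 2.0), wall)
in_front_of_wall = is_occluded(camera, (0.0, 0.0, 0.5), wall)
beside_wall = is_occluded(camera, (5.0, 0.0, 2.0), wall)
```

Because the mesh persists between frames, the same test keeps working for surfaces the depth sensor cannot currently see, at the cost of building and updating the map.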

Introduction to Depth Masking in AR Experiences

Depth masking in augmented reality (AR) enhances realism by hiding the parts of virtual objects that lie behind real-world elements, simulating natural occlusion. The technique uses depth sensors or camera data to create depth maps, then compares the depth of each virtual pixel with the measured depth of the real scene so the AR system can selectively hide virtual content that a physical object should obscure. Integrating depth masking improves user immersion by making virtual objects behave believably within the real environment.
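The per-pixel comparison behind depth masking can be sketched in a few lines. The example below is a minimal illustration, assuming both depth maps are plain nested lists in metres with `None` for missing values; the function name and the policy for missing readings are assumptions made for this sketch, not part of any AR framework.

```python
from typing import List, Optional

DepthMap = List[List[Optional[float]]]  # metres; None marks a pixel with no value


def occlusion_mask(real_depth: DepthMap, virtual_depth: DepthMap) -> List[List[bool]]:
    """Return True for every pixel where the virtual content should be hidden."""
    mask = []
    for real_row, virtual_row in zip(real_depth, virtual_depth):
        mask_row = []
        for real, virtual in zip(real_row, virtual_row):
            if virtual is None:
                # Nothing virtual is drawn at this pixel, so nothing to hide.
                mask_row.append(False)
            elif real is None:
                # No sensor reading: this sketch chooses to keep the pixel visible.
                mask_row.append(False)
            else:
                # Hide the virtual pixel when a real surface is closer to the camera.
                mask_row.append(real < virtual)
        mask.append(mask_row)
    return mask


# Illustrative 2x3 frame: a real object at 1 m on the left, a far wall at 3 m.
real = [[1.0, 1.0, 3.0],
        [1.0, None, 3.0]]
virtual = [[2.0, None, 2.0],
           [0.5, 2.0, 2.0]]
mask = occlusion_mask(real, virtual)
```

On a device this comparison normally runs in a fragment shader, but the logic per pixel is the same.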

Core Differences Between Environmental Occlusion and Depth Masking

Environmental occlusion decides what to hide from a model of the surroundings: it uses spatial context, such as mapped surfaces and objects, to work out which real-world geometry sits in front of a virtual object. Depth masking works directly on depth data, comparing the distance of virtual content with the measured distance of real objects so that each is drawn in front of or behind the other without unnatural overlap. The core difference is the source of the occluding geometry: environmental occlusion depends on an understanding of the scene built through spatial mapping, whereas depth masking depends on the accuracy of the depth readings themselves. Neither technique simulates lighting or shadows on its own; those effects are handled elsewhere in the rendering pipeline.

How Environmental Occlusion Enhances Realism in AR

Environmental occlusion enhances realism in AR by detecting the physical objects that partially block virtual elements and hiding the covered portions, so digital content appears to sit inside the real world. Unlike basic depth masking, which hides virtual pixels wherever the depth data reports a closer real surface, environmental occlusion accounts for complex real-world geometry and surfaces captured through environment mapping. The technique combines sensor data and spatial mapping to reproduce real-world spatial relationships, which improves how users perceive and interact with augmented environments.

The Role of Depth Masking in Layering Virtual Objects

Depth masking plays a critical role in augmented reality by layering virtual objects behind real-world elements, which improves visual realism and immersion. Using depth data from sensors or cameras, depth masking occludes virtual objects wherever they intersect or pass behind physical surfaces and obstacles. Because it works from depth data rather than a scanned model of the environment, it needs no extensive scanning step, although its fidelity is bounded by the precision and resolution of the sensor.
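A hard in-front/behind decision tends to produce jagged edges where the depth data is coarse, so a common refinement is to fade the virtual pixel over a small depth band. The sketch below illustrates that idea; `visibility`, `composite`, and the default `softness_m` of 5 cm are illustrative assumptions rather than values taken from any framework.

```python
from typing import Tuple

Color = Tuple[float, float, float]  # linear RGB components in the range 0.0-1.0


def visibility(real_depth_m: float, virtual_depth_m: float,
               softness_m: float = 0.05) -> float:
    """Return 1.0 when the virtual pixel is fully visible and 0.0 when fully hidden.

    Within +/- softness_m / 2 of the real surface the result ramps linearly,
    which softens the occlusion edge.
    """
    if softness_m <= 0.0:
        # Degenerate case: fall back to a hard depth test.
        return 1.0 if virtual_depth_m <= real_depth_m else 0.0
    gap = real_depth_m - virtual_depth_m   # positive: virtual pixel is in front
    return max(0.0, min(1.0, gap / softness_m + 0.5))


def composite(camera: Color, virtual: Color, alpha: float) -> Color:
    """Blend the virtual colour over the camera colour using the visibility."""
    r, g, b = (alpha * v + (1.0 - alpha) * c for v, c in zip(virtual, camera))
    return (r, g, b)


in_front = visibility(real_depth_m=2.0, virtual_depth_m=1.0)
behind = visibility(real_depth_m=1.0, virtual_depth_m=2.0)
at_surface = visibility(real_depth_m=1.0, virtual_depth_m=1.0)
pixel = composite(camera=(0.0, 0.0, 0.0), virtual=(1.0, 1.0, 1.0), alpha=at_surface)
```

A wider band hides more sensor noise but lets virtual objects bleed slightly through real edges, so the value is a trade-off to tune per device.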

Use Cases: When to Choose Environmental Occlusion vs Depth Masking

Environmental occlusion suits applications where virtual objects must sit convincingly against real-world surfaces, such as interior design and architectural visualization. Depth masking is preferred in interactive scenarios such as gaming or industrial training, where object overlap and user interaction depend on real-time depth sensing. Choose environmental occlusion for static or semi-static environments that can be scanned in advance, and depth masking for dynamic contexts that demand responsive, depth-based rendering.

Technical Challenges in Implementing Occlusion and Masking

Implementing environmental occlusion requires real-time depth sensing and scene understanding precise enough to hide virtual objects behind physical ones, and it is often limited by sensor capabilities and changing lighting conditions. Depth masking depends on accurate depth maps; inconsistent depth data and latency can cause visible artifacts and misalignment between the virtual and real environments. Both techniques add computational load, environmental occlusion more so because of environment mapping, and both need robust algorithms to stay convincing in complex, changing environments.
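One simple way to reduce flicker from inconsistent depth readings is to smooth each pixel over time while letting genuine scene changes through immediately. The sketch below assumes a depth frame flattened into a list of metres, with `None` for missing readings; `alpha` and `max_jump_m` are illustrative tuning parameters, not recommended values.

```python
from typing import List, Optional

DepthFrame = List[Optional[float]]  # flattened depth map in metres; None = no reading


def smooth_depth(previous: DepthFrame, current: DepthFrame,
                 alpha: float = 0.5, max_jump_m: float = 0.3) -> DepthFrame:
    """Blend the current depth frame into the previous one, pixel by pixel."""
    smoothed: DepthFrame = []
    for prev, cur in zip(previous, current):
        if cur is None:
            # Hole in the new frame: reuse the last known value (may also be None).
            smoothed.append(prev)
        elif prev is None or abs(cur - prev) > max_jump_m:
            # No history, or a large change that is probably real motion:
            # take the new reading directly to avoid lagging behind the scene.
            smoothed.append(cur)
        else:
            # Small change, likely noise: exponential moving average.
            smoothed.append(alpha * cur + (1.0 - alpha) * prev)
    return smoothed


previous_frame = [1.0, 1.0, None, 2.0]
current_frame = [1.25, None, 3.0, 4.0]
result = smooth_depth(previous_frame, current_frame)
```

Smoothing trades noise for latency: a lower `alpha` gives steadier occlusion edges but reacts more slowly to small movements, which is why the jump threshold bypasses the filter for large changes.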

Device and Platform Support for Occlusion and Masking Techniques

Both techniques depend on what the device can sense. Environmental occlusion benefits from dedicated depth hardware such as LiDAR or time-of-flight sensors, which AR frameworks like ARKit on iOS and ARCore on Android can use when present. Depth masking requires accurate per-pixel depth data; on flagship devices with advanced hardware, frameworks can generate masks that closely follow the outlines of physical objects. Platform support varies: ARKit provides robust occlusion APIs on LiDAR-enabled devices, while ARCore's occlusion capabilities depend on depth estimated from the camera or on dedicated depth sensors where available, which affects the precision and application scope of these techniques.
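Since support differs across devices, applications often pick an occlusion approach at startup and degrade gracefully. The sketch below shows one possible fallback order. `DeviceCapabilities` and its flags are hypothetical stand-ins for whatever the AR framework actually reports, and the strategy names are labels invented for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DeviceCapabilities:
    """Hypothetical capability flags; a real app would query its AR framework."""
    scene_mesh: bool       # can reconstruct environment geometry
    hardware_depth: bool   # has a depth sensor such as LiDAR or time-of-flight
    estimated_depth: bool  # can estimate depth from camera images


def choose_occlusion_strategy(caps: DeviceCapabilities) -> str:
    """Pick the most capable occlusion approach the device can support."""
    if caps.scene_mesh:
        return "environmental_occlusion"
    if caps.hardware_depth:
        return "depth_masking_hardware"
    if caps.estimated_depth:
        return "depth_masking_estimated"
    # No depth information at all: draw virtual content on top of the camera feed.
    return "no_occlusion"


lidar_device = DeviceCapabilities(scene_mesh=True, hardware_depth=True, estimated_depth=True)
camera_only_device = DeviceCapabilities(scene_mesh=False, hardware_depth=False, estimated_depth=True)
legacy_device = DeviceCapabilities(scene_mesh=False, hardware_depth=False, estimated_depth=False)

strategies = [choose_occlusion_strategy(d)
              for d in (lidar_device, camera_only_device, legacy_device)]
```

The order here favors fidelity over cost; an application with a tight performance budget might reasonably prefer depth masking even when mesh reconstruction is available.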

Impact on User Experience: Immersion and Interaction

Environmental occlusion enhances user immersion by accurately blending virtual objects with real-world environments, creating a seamless visual experience that reinforces spatial awareness. Depth masking improves interaction precision by correctly layering virtual content according to real-world depth information, preventing visual conflicts and enabling more natural object manipulation. Together, these techniques significantly elevate the realism and responsiveness of augmented reality applications, fostering deeper engagement and intuitive user interactions.

Future Trends in AR: Advancements in Occlusion and Masking

Advancements in augmented reality are driving more sophisticated environmental occlusion techniques that enhance the realism of virtual objects by accurately blocking or revealing components based on real-world depth data. Emerging depth masking algorithms leverage machine learning to dynamically interpret complex environments, improving seamless integration and interaction between digital content and physical spaces. Future trends emphasize real-time processing and sensor fusion to refine occlusion accuracy, enabling immersive AR experiences across diverse applications such as gaming, navigation, and industrial maintenance.

Environmental Occlusion vs Depth Masking Infographic

techiny.com
