Dense data in Big Data pet projects refers to datasets with a high volume of valuable information packed closely together, enabling more comprehensive analysis and accurate predictions. Sparse data, by contrast, contains many missing or zero values, posing challenges for pattern recognition and requiring specialized techniques like matrix factorization or imputation. Choosing between dense and sparse data impacts the efficiency of data processing algorithms and the overall insights derived from Big Data pet analytics.

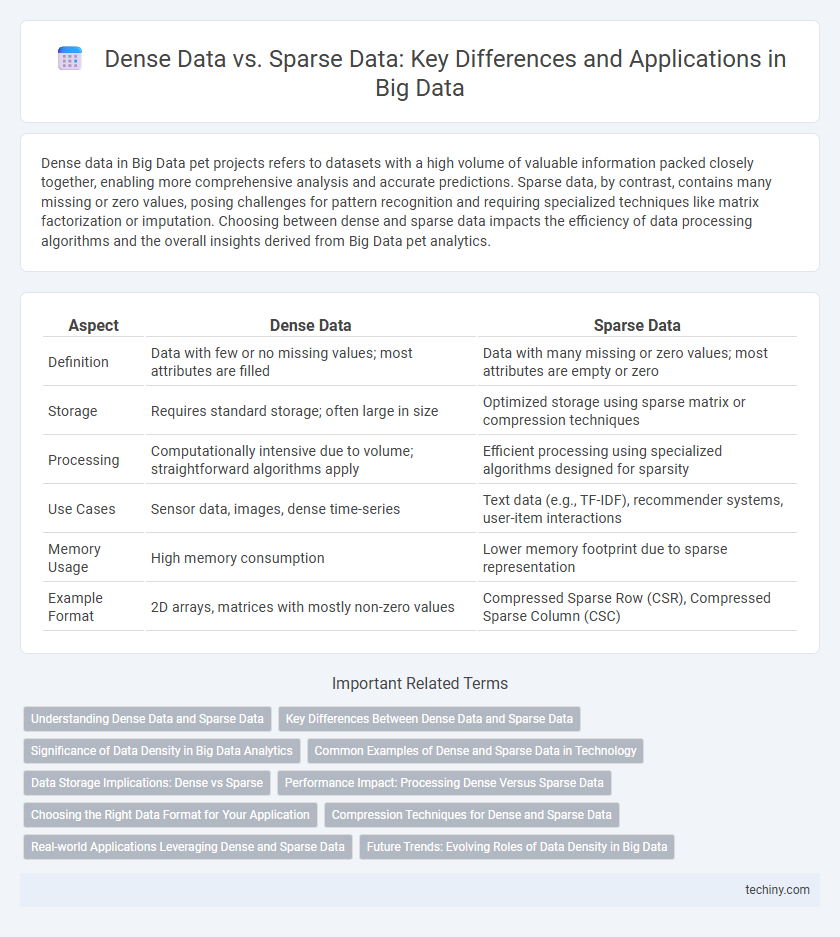

Table of Comparison

| Aspect | Dense Data | Sparse Data |

|---|---|---|

| Definition | Data with few or no missing values; most attributes are filled | Data with many missing or zero values; most attributes are empty or zero |

| Storage | Requires standard storage; often large in size | Optimized storage using sparse matrix or compression techniques |

| Processing | Computationally intensive due to volume; straightforward algorithms apply | Efficient processing using specialized algorithms designed for sparsity |

| Use Cases | Sensor data, images, dense time-series | Text data (e.g., TF-IDF), recommender systems, user-item interactions |

| Memory Usage | High memory consumption | Lower memory footprint due to sparse representation |

| Example Format | 2D arrays, matrices with mostly non-zero values | Compressed Sparse Row (CSR), Compressed Sparse Column (CSC) |

Understanding Dense Data and Sparse Data

Dense data contains a high volume of non-zero or meaningful values, making it suitable for algorithms that rely on continuous or closely packed datasets. Sparse data, characterized by numerous zero or missing values, requires specialized techniques such as sparse matrix representations to efficiently store and process. Understanding the differences between dense and sparse data is crucial for optimizing big data analytics and improving machine learning model performance.

Key Differences Between Dense Data and Sparse Data

Dense data contains a high volume of non-zero or meaningful values distributed throughout the dataset, enabling more direct and comprehensive analysis. Sparse data, characterized by a large number of zero or missing values, often requires specialized algorithms and storage techniques to handle its inefficiencies. Key differences include data storage formats, computational complexity, and analysis methods tailored to either dense matrices or sparse representations in big data environments.

Significance of Data Density in Big Data Analytics

Data density plays a crucial role in big data analytics by influencing the accuracy and efficiency of machine learning models and statistical analyses. Dense data, characterized by a high volume of meaningful information with minimal missing values, enhances pattern recognition and predictive capabilities. In contrast, sparse data, containing many zero or null entries, often requires advanced preprocessing techniques such as dimensionality reduction and imputation to extract valuable insights.

Common Examples of Dense and Sparse Data in Technology

Dense data in technology often appears in image and video files where pixel information is continuously stored, such as in high-resolution medical imaging or surveillance footage. Sparse data is typical in recommendation systems and natural language processing, where user-item interaction matrices or word occurrence vectors mostly consist of zeros. Machine learning algorithms optimize differently for dense datasets, like sensor readings, versus sparse datasets, such as social network graphs.

Data Storage Implications: Dense vs Sparse

Dense data requires more storage capacity as it contains a higher proportion of non-zero values, leading to increased memory consumption and potentially slower processing speeds. Sparse data, characterized by many zero or null values, can be stored efficiently using specialized formats such as Compressed Sparse Row (CSR) or Coordinate List (COO), significantly reducing storage requirements. Choosing the appropriate data storage method depends on the data's density, impacting both storage cost and computational performance in big data applications.

Performance Impact: Processing Dense Versus Sparse Data

Dense data, characterized by a high volume of non-zero elements, typically demands more computational resources due to extensive memory usage and processing requirements, impacting system throughput. Sparse data, with predominantly zero or null values, enables optimized storage techniques and faster processing through sparse matrix algorithms and indexing strategies, enhancing performance efficiency. The selection between dense and sparse data representations directly influences big data analytics platforms, affecting computational speed, memory allocation, and overall scalability.

Choosing the Right Data Format for Your Application

Dense data formats store information where most entries have values, optimizing for high storage efficiency and faster processing in applications with continuous data streams. Sparse data formats, ideal for datasets with many zero or null values, reduce memory usage by recording only non-zero elements, improving performance in machine learning models and recommendation systems. Selecting the right data format depends on the application's need for storage optimization, query speed, and the inherent data distribution to achieve effective big data management.

Compression Techniques for Dense and Sparse Data

Dense data, characterized by a high volume of non-zero values, benefits from compression techniques like run-length encoding and dictionary coding that efficiently reduce storage by exploiting data redundancy. Sparse data, containing predominantly zero or null values, relies on specialized methods such as coordinate list (COO), compressed sparse row (CSR), and compressed sparse column (CSC) formats to store only non-zero elements, significantly decreasing memory usage. Advanced algorithms combining bitmaps and hashing further enhance compression efficiency for large-scale sparse datasets in big data applications.

Real-world Applications Leveraging Dense and Sparse Data

Dense data enables real-time fraud detection in financial transactions by providing rich, continuous data points for machine learning models to analyze patterns and anomalies. Sparse data is essential in recommendation systems, such as in e-commerce and streaming platforms, where user-item interactions are limited but critical for personalized suggestions. Healthcare analytics leverages dense imaging data for precise diagnostics, while sparse patient record data assists in large-scale population health studies and predictive modeling.

Future Trends: Evolving Roles of Data Density in Big Data

Dense data in big data environments enables more accurate machine learning models through enriched feature sets, while sparse data challenges algorithms with gaps and noise that drive innovation in data imputation and dimensionality reduction techniques. Future trends indicate growing integration of hybrid approaches that dynamically adjust analysis methods based on data density, enhancing real-time decision-making and predictive analytics. Advances in edge computing and AI-powered data preprocessing will increasingly optimize the handling of both dense and sparse datasets, fueling more robust insights across industries.

Dense Data vs Sparse Data Infographic

techiny.com

techiny.com