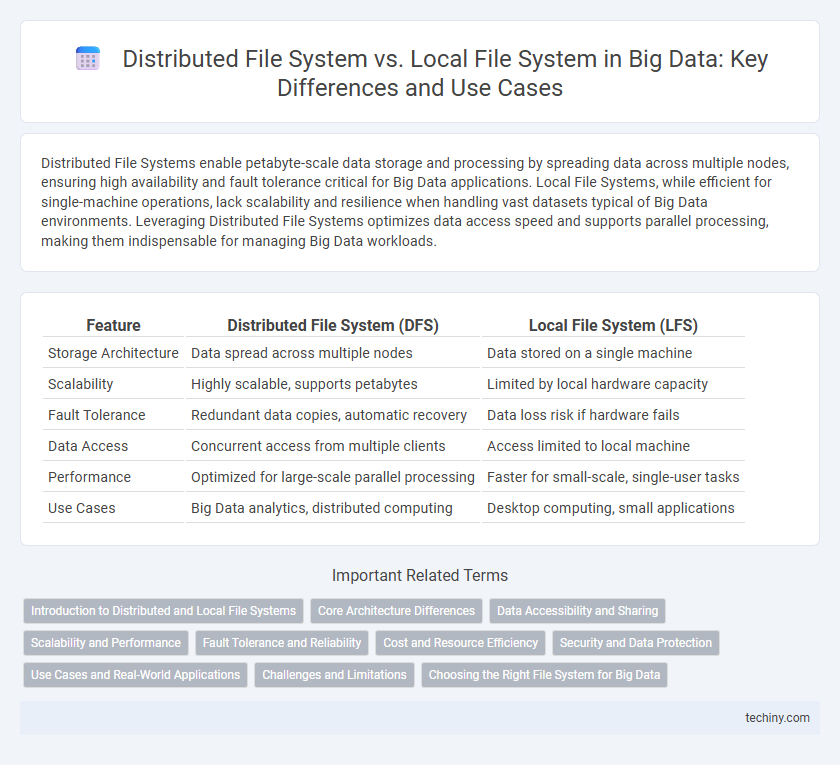

Distributed File Systems enable petabyte-scale data storage and processing by spreading data across multiple nodes, ensuring high availability and fault tolerance critical for Big Data applications. Local File Systems, while efficient for single-machine operations, lack scalability and resilience when handling vast datasets typical of Big Data environments. Leveraging Distributed File Systems optimizes data access speed and supports parallel processing, making them indispensable for managing Big Data workloads.

Table of Comparison

| Feature | Distributed File System (DFS) | Local File System (LFS) |

|---|---|---|

| Storage Architecture | Data spread across multiple nodes | Data stored on a single machine |

| Scalability | Highly scalable, supports petabytes | Limited by local hardware capacity |

| Fault Tolerance | Redundant data copies, automatic recovery | Data loss risk if hardware fails |

| Data Access | Concurrent access from multiple clients | Access limited to local machine |

| Performance | Optimized for large-scale parallel processing | Faster for small-scale, single-user tasks |

| Use Cases | Big Data analytics, distributed computing | Desktop computing, small applications |

Introduction to Distributed and Local File Systems

Distributed File Systems (DFS) enable data storage and access across multiple networked machines, enhancing scalability and fault tolerance compared to Local File Systems, which manage data on a single device with limited capacity. DFS supports parallel processing and large-scale data analytics essential for Big Data applications, while local file systems are typically constrained by hardware and are less suitable for handling vast datasets. Key technologies in distributed file systems include Hadoop Distributed File System (HDFS) and Google File System (GFS), designed to optimize data distribution and replication for reliability.

Core Architecture Differences

Distributed File Systems (DFS) leverage multiple networked nodes to store and manage data with replication and fault tolerance, enabling scalable data access across clusters. In contrast, Local File Systems operate on a single machine's storage, lacking inherent mechanisms for data distribution or high availability. Core architecture of DFS includes metadata servers and data nodes, whereas Local File Systems rely primarily on direct disk access and local I/O management.

Data Accessibility and Sharing

Distributed File Systems enable seamless data accessibility and sharing across multiple nodes, facilitating real-time collaboration and efficient management of large datasets typical in Big Data environments. Local File Systems restrict data access to a single machine, limiting scalability and hindering concurrent use by multiple users or applications. Leveraging a Distributed File System enhances fault tolerance and data redundancy, ensuring high availability and reliability critical for Big Data analytics.

Scalability and Performance

Distributed File Systems (DFS) excel in scalability by enabling data storage and access across multiple nodes, supporting vast datasets and parallel processing, which significantly boosts performance in big data environments. Local File Systems (LFS), limited to single-machine storage, often struggle with scalability and face bottlenecks when handling large-scale data, resulting in reduced performance. DFS architectures like HDFS provide fault tolerance and high throughput essential for big data analytics, whereas LFS is best suited for smaller, less complex workloads.

Fault Tolerance and Reliability

Distributed File Systems like HDFS provide high fault tolerance by replicating data across multiple nodes, ensuring data availability even if some nodes fail. Local File Systems lack built-in redundancy, making them less reliable in handling hardware failures or data corruption. The replication and automatic recovery features in distributed systems significantly enhance data reliability and minimize downtime compared to local storage solutions.

Cost and Resource Efficiency

Distributed File Systems minimize costs by enabling data storage across multiple low-cost commodity servers, reducing reliance on expensive, high-performance hardware inherent in Local File Systems. Resource efficiency improves through parallel data access and fault tolerance mechanisms, maximizing utilization of cluster-wide storage and processing power. In contrast, Local File Systems incur higher costs due to limited scalability and underutilized resources confined to single machines.

Security and Data Protection

Distributed File Systems (DFS) enhance security and data protection by enabling replication across multiple nodes, ensuring data availability and fault tolerance even during hardware failures. Local File Systems (LFS) rely on single-node storage, making them more susceptible to data loss and unauthorized access if the device is compromised. Advanced encryption, access controls, and auditing features embedded in DFS architectures provide superior protection compared to traditional LFS setups, especially in large-scale Big Data environments.

Use Cases and Real-World Applications

Distributed File Systems (DFS) are essential in Big Data environments for managing vast datasets across multiple nodes, enabling scalable storage and parallel processing in use cases like Hadoop's HDFS for analytics, machine learning, and large-scale web indexing. Local File Systems excel in scenarios requiring fast, low-latency access for single-machine applications, such as local development, small-scale data processing, and real-time streaming on edge devices. Enterprises leverage DFS for cloud storage and distributed computing clusters, while Local File Systems remain critical for on-premises servers and embedded systems needing immediate, direct file access.

Challenges and Limitations

Distributed File Systems face challenges such as network latency, limited bandwidth, and complex data consistency management across multiple nodes, which can impact performance and reliability. Local File Systems, while faster for single-node access, suffer from limited scalability and lack fault-tolerance inherent in distributed environments. Ensuring data integrity and synchronization in Distributed File Systems requires sophisticated algorithms, making them more complex to implement and maintain compared to Local File Systems.

Choosing the Right File System for Big Data

Distributed file systems like HDFS offer scalable storage and high fault tolerance essential for big data environments, whereas local file systems are limited by single-node capacity and lack redundancy. Selecting the right file system depends on data volume, access speed, and processing needs; distributed systems excel in handling vast, fault-tolerant datasets across multiple nodes. Local file systems may suffice for smaller, less complex datasets but fail to support the parallel processing and high availability required in big data analytics.

Distributed File System vs Local File System Infographic

techiny.com

techiny.com