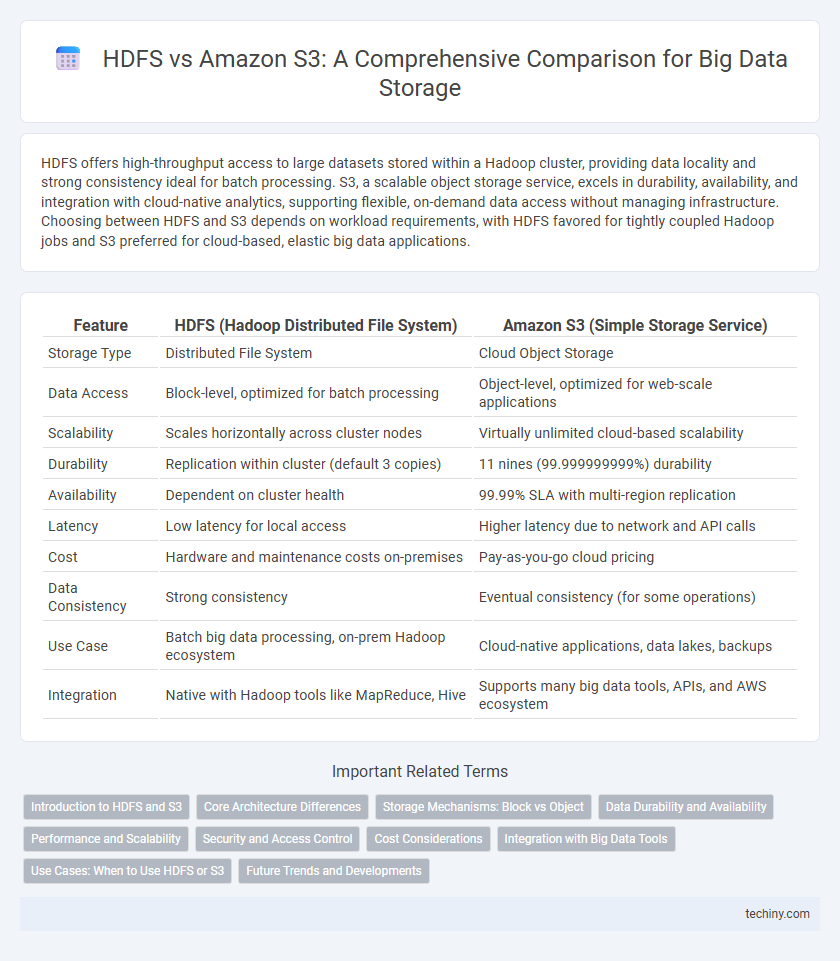

HDFS offers high-throughput access to large datasets stored within a Hadoop cluster, providing data locality and strong consistency ideal for batch processing. S3, a scalable object storage service, excels in durability, availability, and integration with cloud-native analytics, supporting flexible, on-demand data access without managing infrastructure. Choosing between HDFS and S3 depends on workload requirements, with HDFS favored for tightly coupled Hadoop jobs and S3 preferred for cloud-based, elastic big data applications.

Table of Comparison

| Feature | HDFS (Hadoop Distributed File System) | Amazon S3 (Simple Storage Service) |

|---|---|---|

| Storage Type | Distributed File System | Cloud Object Storage |

| Data Access | Block-level, optimized for batch processing | Object-level, optimized for web-scale applications |

| Scalability | Scales horizontally across cluster nodes | Virtually unlimited cloud-based scalability |

| Durability | Replication within cluster (default 3 copies) | 11 nines (99.999999999%) durability |

| Availability | Dependent on cluster health | 99.99% SLA with multi-region replication |

| Latency | Low latency for local access | Higher latency due to network and API calls |

| Cost | Hardware and maintenance costs on-premises | Pay-as-you-go cloud pricing |

| Data Consistency | Strong consistency | Eventual consistency (for some operations) |

| Use Case | Batch big data processing, on-prem Hadoop ecosystem | Cloud-native applications, data lakes, backups |

| Integration | Native with Hadoop tools like MapReduce, Hive | Supports many big data tools, APIs, and AWS ecosystem |

Introduction to HDFS and S3

HDFS (Hadoop Distributed File System) is a scalable and fault-tolerant storage system designed for big data applications, enabling high-throughput access to large datasets spread across clusters. Amazon S3 (Simple Storage Service) offers a highly durable, scalable, and object-based cloud storage platform, optimized for storing and retrieving any amount of data from anywhere. Both HDFS and S3 support big data workflows, but HDFS is tightly integrated with Hadoop ecosystems, while S3 provides a flexible, managed cloud storage solution with seamless integration across AWS services.

Core Architecture Differences

HDFS (Hadoop Distributed File System) features a master-slave architecture with a NameNode managing metadata and DataNodes storing actual data, optimized for high-throughput access within a Hadoop cluster. Amazon S3 (Simple Storage Service) operates as an object storage service with a flat namespace, providing scalability and durability via distributed, replicated storage across multiple availability zones without a centralized metadata server. Unlike HDFS's block storage and write-once-read-many model, S3 supports object storage with flexible read-write capabilities, emphasizing ease of access and integration with cloud-native applications.

Storage Mechanisms: Block vs Object

HDFS utilizes a block storage mechanism that splits large files into fixed-size blocks, typically 128 MB or 256 MB, distributed across multiple nodes to enable parallel processing and fault tolerance. In contrast, Amazon S3 employs an object storage architecture where data is stored as discrete objects within buckets, each containing the file data, metadata, and a unique identifier, optimizing scalability and retrieval performance. Block storage in HDFS is ideal for high-throughput data processing workloads, while S3's object storage excels in durability, availability, and seamless integration with cloud-native applications.

Data Durability and Availability

HDFS offers high data durability through replication across multiple nodes within a cluster, ensuring fault tolerance but limited by the physical infrastructure. Amazon S3 provides superior data durability with 99.999999999% (11 nines) durability by automatically distributing data across multiple geographically separated data centers. S3 also guarantees high availability with seamless scalability, while HDFS availability depends on cluster health and manual intervention during node failures.

Performance and Scalability

HDFS delivers high-throughput data access by distributing storage and computation across cluster nodes, optimizing performance for large-scale batch processing. S3 offers virtually unlimited scalability with durable, object-based storage, enabling seamless data expansion without cluster size constraints. Performance in cloud environments often favors S3 for elasticity and global accessibility, while HDFS excels in low-latency, tightly coupled data processing scenarios.

Security and Access Control

HDFS offers robust security features including Kerberos authentication, fine-grained access control via POSIX-compliant permissions, and encryption at rest and in transit, making it ideal for on-premises big data environments requiring stringent security. Amazon S3 provides scalable, object-based storage with flexible security controls such as AWS Identity and Access Management (IAM) policies, bucket policies, Access Control Lists (ACLs), and server-side encryption, enabling secure multi-tenant cloud access. While HDFS excels in tightly controlled cluster environments, S3's security model is designed for diverse, distributed access with comprehensive audit logging and compliance certifications.

Cost Considerations

HDFS offers lower storage costs for on-premises deployments by leveraging existing hardware resources, while Amazon S3 provides a pay-as-you-go model that eliminates upfront infrastructure expenses. S3's cost structure includes charges for data storage, retrieval, and transfer, which can add up with high-volume or frequent access patterns. For large-scale, variable workloads, S3's scalability and managed service reduce operational overhead, but total cost depends on usage patterns compared to the fixed costs of maintaining HDFS clusters.

Integration with Big Data Tools

HDFS offers native integration with Hadoop ecosystems, enabling seamless data processing with MapReduce, Apache Spark, and Hive. Amazon S3, as a scalable object storage service, supports numerous big data tools through connectors, facilitating cloud-native analytics and distributed processing. S3's compatibility with AWS services enhances flexibility, while HDFS excels in on-premises data locality and throughput for big data workloads.

Use Cases: When to Use HDFS or S3

HDFS excels in scenarios requiring high-throughput access to large datasets within a single cluster, making it ideal for on-premises big data analytics and real-time processing frameworks like Apache Spark or Hadoop MapReduce. S3 offers scalable, durable, and cost-effective object storage perfect for cloud-native applications, data archiving, and multi-region data sharing with flexible access patterns. Enterprises leverage HDFS for low-latency big data workloads and S3 for long-term storage and seamless integration with AWS analytics services such as Amazon Redshift and Athena.

Future Trends and Developments

HDFS is evolving with enhanced scalability and integration of machine learning frameworks, while Amazon S3 focuses on improving data lake architectures and serverless analytics capabilities. Continued advancements in hybrid cloud deployments and AI-driven data management are poised to blur the lines between HDFS and S3 functionalities. Emerging trends highlight stronger support for multi-cloud strategies and edge computing, driving innovation in real-time big data processing.

HDFS vs S3 Infographic

techiny.com

techiny.com