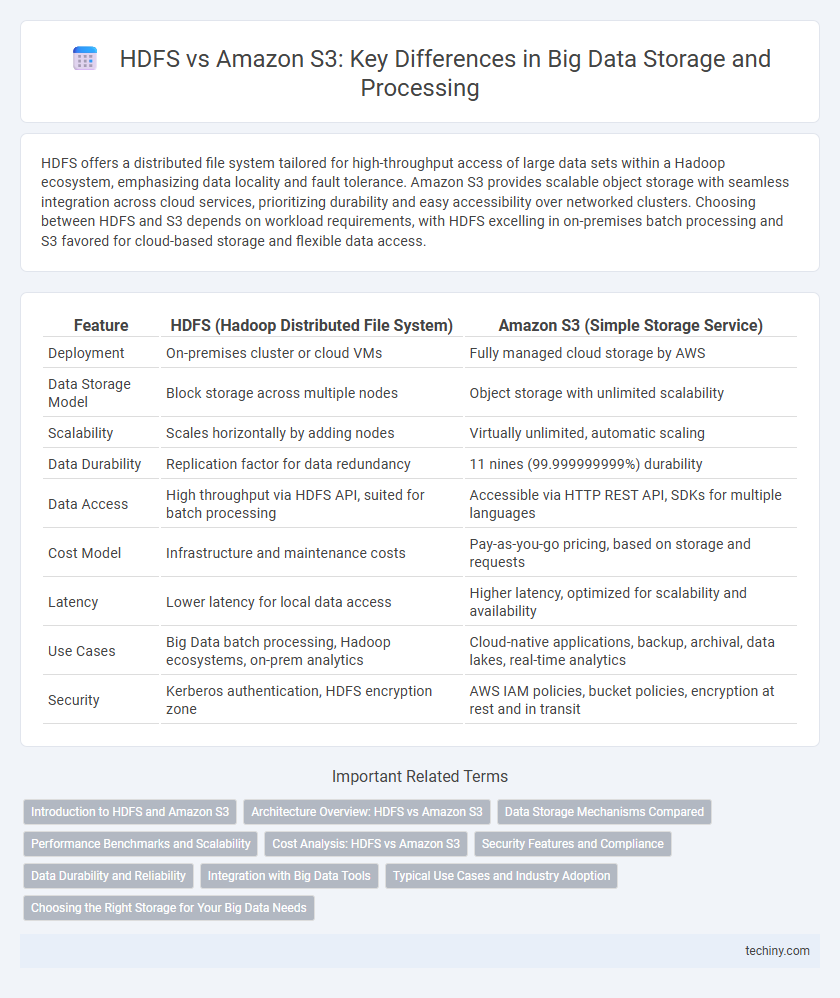

HDFS offers a distributed file system tailored for high-throughput access of large data sets within a Hadoop ecosystem, emphasizing data locality and fault tolerance. Amazon S3 provides scalable object storage with seamless integration across cloud services, prioritizing durability and easy accessibility over networked clusters. Choosing between HDFS and S3 depends on workload requirements, with HDFS excelling in on-premises batch processing and S3 favored for cloud-based storage and flexible data access.

Table of Comparison

| Feature | HDFS (Hadoop Distributed File System) | Amazon S3 (Simple Storage Service) |

|---|---|---|

| Deployment | On-premises cluster or cloud VMs | Fully managed cloud storage by AWS |

| Data Storage Model | Block storage across multiple nodes | Object storage with unlimited scalability |

| Scalability | Scales horizontally by adding nodes | Virtually unlimited, automatic scaling |

| Data Durability | Replication factor for data redundancy | 11 nines (99.999999999%) durability |

| Data Access | High throughput via HDFS API, suited for batch processing | Accessible via HTTP REST API, SDKs for multiple languages |

| Cost Model | Infrastructure and maintenance costs | Pay-as-you-go pricing, based on storage and requests |

| Latency | Lower latency for local data access | Higher latency, optimized for scalability and availability |

| Use Cases | Big Data batch processing, Hadoop ecosystems, on-prem analytics | Cloud-native applications, backup, archival, data lakes, real-time analytics |

| Security | Kerberos authentication, HDFS encryption zone | AWS IAM policies, bucket policies, encryption at rest and in transit |

Introduction to HDFS and Amazon S3

HDFS (Hadoop Distributed File System) is a scalable, fault-tolerant storage system designed for big data processing within Hadoop ecosystems, providing high-throughput access to large datasets across distributed clusters. Amazon S3 (Simple Storage Service) is a highly durable, object-based cloud storage service offering virtually unlimited scalability, data availability, and seamless integration with various AWS analytics and machine learning services. Both HDFS and Amazon S3 play critical roles in big data storage architectures, with HDFS optimized for on-premises cluster environments and Amazon S3 enabling flexible, cloud-native data lake solutions.

Architecture Overview: HDFS vs Amazon S3

HDFS (Hadoop Distributed File System) operates as a distributed file system with a master-slave architecture, utilizing a NameNode for metadata management and DataNodes for data storage, enabling high-performance batch processing. Amazon S3 (Simple Storage Service) is a fully managed object storage service with a flat architecture, designed for high durability and availability, leveraging a web-scale infrastructure and RESTful API access. While HDFS is tightly integrated with Hadoop ecosystems for on-premises deployments, Amazon S3 offers scalable, cloud-native storage optimized for diverse data workloads and global access.

Data Storage Mechanisms Compared

HDFS stores data by splitting files into large blocks, typically 128MB or 256MB, and distributing them across a cluster for parallel processing and fault tolerance. Amazon S3 uses an object storage model, storing data as discrete objects in buckets with metadata, enabling scalable, durable, and globally accessible storage. The block-based approach of HDFS optimizes for high-throughput data processing in Hadoop ecosystems, while Amazon S3's object storage supports flexible access patterns and integration with diverse cloud services.

Performance Benchmarks and Scalability

HDFS offers higher throughput for large-scale batch processing due to its data locality and distributed file system architecture, making it ideal for tight integration with Hadoop ecosystems. Amazon S3 provides virtually unlimited scalability and high availability with its object storage model, but exhibits higher latency and slightly lower throughput in benchmark tests compared to HDFS. Performance benchmarks show HDFS excels in I/O-intensive workloads, while S3's scalability supports massive data growth without complex infrastructure management.

Cost Analysis: HDFS vs Amazon S3

HDFS presents lower storage costs for on-premises setups due to its reliance on commodity hardware, while Amazon S3 adopts a pay-as-you-go model with charges based on data storage, requests, and data transfer. Amazon S3 eliminates infrastructure maintenance expenses, offering scalability and availability that may offset higher per-GB costs compared to the capital-intensive initial investment of HDFS. Cost efficiency hinges on workload patterns, data access frequency, and long-term storage requirements, with S3 favoring dynamic, scalable cloud environments and HDFS benefiting predictable high-throughput, local processing.

Security Features and Compliance

HDFS offers strong data encryption at rest and in transit, along with Kerberos-based authentication for secure access management within Hadoop clusters. Amazon S3 provides comprehensive security features including server-side encryption options (SSE-S3, SSE-KMS, SSE-C), IAM policies, and bucket policies for fine-grained access control, plus built-in compliance certifications such as HIPAA, GDPR, and FedRAMP. While HDFS requires additional configuration for compliance adherence, Amazon S3 delivers out-of-the-box compliance support, making it preferable for regulated industries.

Data Durability and Reliability

HDFS provides high data durability through replication across multiple nodes within a cluster, ensuring fault tolerance in on-premises environments. Amazon S3 offers superior durability with 99.999999999% (11 nines) and automatic multi-region replication, minimizing data loss risks in cloud storage. Both solutions support reliable data storage, but S3's scalability and global redundancy provide enhanced protection for distributed big data applications.

Integration with Big Data Tools

HDFS offers seamless integration with Apache Hadoop and related big data frameworks such as Apache Spark, providing native support for distributed storage and processing. Amazon S3 integrates easily with a wide range of big data tools including AWS Glue, AWS EMR, and Apache Spark through connectors and APIs, enabling scalable, cloud-based data lakes. Both storage solutions support ecosystems for big data analytics, but S3's cloud-native architecture facilitates easier integration with modern data pipelines and serverless compute services.

Typical Use Cases and Industry Adoption

HDFS is widely adopted in big data analytics environments where high-throughput access to large datasets is critical, particularly in industries like telecommunications, finance, and healthcare for batch processing and machine learning workloads. Amazon S3 excels in cloud-native applications, disaster recovery, and data lakes, favored by e-commerce, media, and technology enterprises for scalable storage and seamless integration with AWS analytics and AI services. Organizations often choose HDFS for on-premise, high-performance compute clusters while leveraging Amazon S3 for flexible, cost-effective cloud storage with global accessibility.

Choosing the Right Storage for Your Big Data Needs

HDFS offers distributed storage optimized for high-throughput access and seamless integration with Hadoop ecosystems, making it ideal for on-premises big data deployments requiring low-latency performance. Amazon S3 provides scalable, durable object storage with global accessibility, supporting elasticity and pay-as-you-go models suited for cloud-native big data applications and backup solutions. Evaluating workload patterns, data access frequency, cost considerations, and integration requirements ensures the optimal choice between on-premises HDFS and cloud-based Amazon S3 for big data storage.

HDFS vs Amazon S3 Infographic

techiny.com

techiny.com