Data replication involves creating and maintaining copies of data across multiple nodes to ensure consistency and availability in big data environments. Data duplication refers to redundant storage of identical data, often leading to inefficiencies and increased storage costs. Effective data replication optimizes performance and fault tolerance, whereas unchecked data duplication can degrade system scalability and resource utilization.

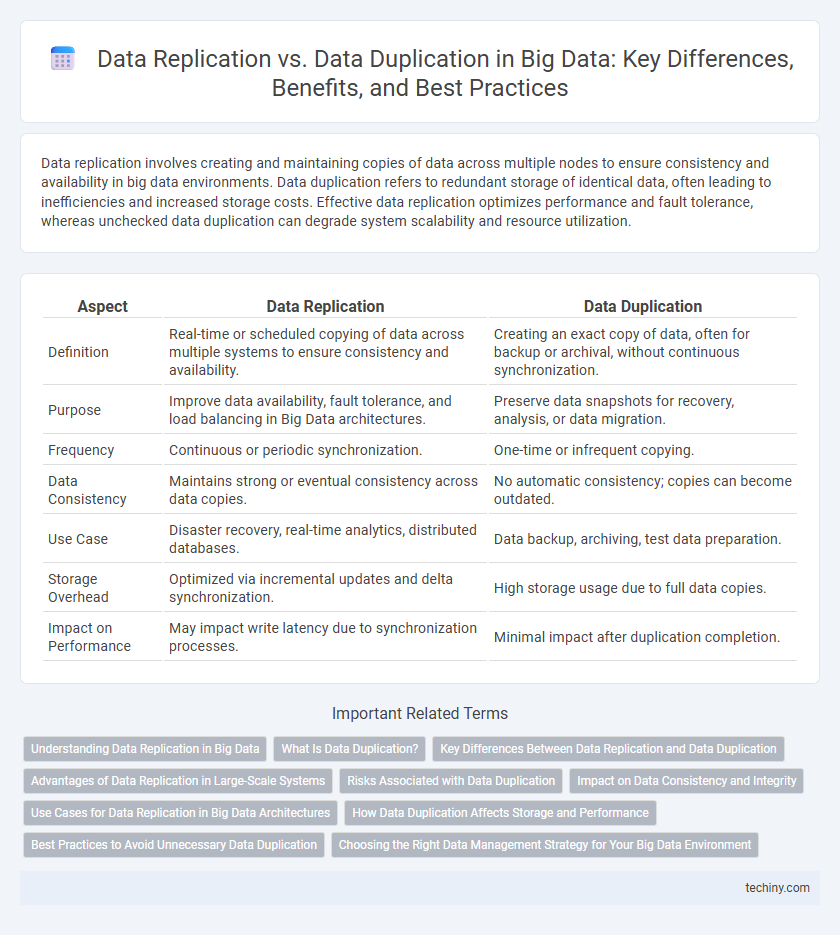

Table of Comparison

| Aspect | Data Replication | Data Duplication |

|---|---|---|

| Definition | Real-time or scheduled copying of data across multiple systems to ensure consistency and availability. | Creating an exact copy of data, often for backup or archival, without continuous synchronization. |

| Purpose | Improve data availability, fault tolerance, and load balancing in Big Data architectures. | Preserve data snapshots for recovery, analysis, or data migration. |

| Frequency | Continuous or periodic synchronization. | One-time or infrequent copying. |

| Data Consistency | Maintains strong or eventual consistency across data copies. | No automatic consistency; copies can become outdated. |

| Use Case | Disaster recovery, real-time analytics, distributed databases. | Data backup, archiving, test data preparation. |

| Storage Overhead | Optimized via incremental updates and delta synchronization. | High storage usage due to full data copies. |

| Impact on Performance | May impact write latency due to synchronization processes. | Minimal impact after duplication completion. |

Understanding Data Replication in Big Data

Data replication in Big Data involves creating and maintaining synchronized copies of datasets across multiple storage nodes to ensure high availability, fault tolerance, and improved data accessibility. Unlike data duplication, which often results in redundant and unmanaged data copies, replication uses efficient mechanisms like distributed file systems and consensus algorithms to maintain data consistency and integrity in real-time. This process is critical for scalable analytics, disaster recovery, and load balancing in large-scale data environments.

What Is Data Duplication?

Data duplication in big data refers to the process of creating exact copies of datasets across different storage locations, which can lead to increased storage costs and potential data inconsistencies. Unlike data replication, which ensures synchronized copies for high availability and disaster recovery, duplication often happens unintentionally during data ingestion or integration processes. Proper management of data duplication is essential to maintaining data quality and optimizing storage efficiency in big data environments.

Key Differences Between Data Replication and Data Duplication

Data replication involves copying data across multiple systems or locations to ensure consistency and fault tolerance, often in real-time or near real-time. Data duplication, however, refers to creating redundant copies of data usually within the same system or storage to avoid data loss but without synchronization. The key differences include replication's focus on data synchronization and availability, whereas duplication primarily addresses data backup and recovery needs.

Advantages of Data Replication in Large-Scale Systems

Data replication enhances data availability and fault tolerance by synchronizing copies across multiple nodes, ensuring continuous access even during failures. It optimizes load balancing and improves query performance in large-scale systems by distributing workloads efficiently. Unlike data duplication, replication maintains data consistency and reduces storage overhead by selectively synchronizing changes rather than creating full copies.

Risks Associated with Data Duplication

Data duplication in big data environments increases the risk of data inconsistency, leading to conflicting versions of the same information across systems. It also amplifies storage costs and complicates data management by creating redundant datasets that require additional maintenance and synchronization efforts. These risks undermine data integrity and can result in inaccurate analytics and decision-making processes.

Impact on Data Consistency and Integrity

Data replication ensures high data consistency and integrity by synchronizing multiple copies of data across distributed systems in real-time, maintaining uniformity and reliability. Data duplication, on the other hand, often involves creating static copies without continuous updates, increasing the risk of inconsistencies and data integrity issues over time. Effective data replication strategies optimize large-scale Big Data environments by minimizing data loss and ensuring transactional accuracy across nodes.

Use Cases for Data Replication in Big Data Architectures

Data replication in big data architectures is essential for ensuring high availability and disaster recovery by synchronizing data across multiple nodes or data centers in real-time. It supports distributed analytics and fast query performance by maintaining consistent datasets closer to users in geographically dispersed locations. Common use cases include fault tolerance in distributed storage systems, load balancing across data clusters, and enabling seamless data access in hybrid cloud environments.

How Data Duplication Affects Storage and Performance

Data duplication in Big Data systems leads to significant increases in storage requirements, as multiple copies of the same data consume additional disk space unnecessarily. This redundancy can degrade system performance by causing longer data retrieval times and increased resource usage during data management operations. Unlike controlled data replication strategies that optimize for fault tolerance and availability, unregulated duplication often results in inefficient storage utilization and slower query processing.

Best Practices to Avoid Unnecessary Data Duplication

Data replication involves creating and maintaining synchronized copies of data across multiple systems to ensure availability and fault tolerance, whereas data duplication refers to redundant copies that serve no distinct purpose and can lead to inefficiencies. Best practices to avoid unnecessary data duplication include implementing centralized data governance policies, utilizing deduplication algorithms during data ingestion, and choosing replication strategies that balance consistency with storage optimization. Leveraging metadata management and monitoring tools helps identify duplicate datasets early, enabling streamlined storage costs and improved data quality in big data environments.

Choosing the Right Data Management Strategy for Your Big Data Environment

Data replication ensures high availability and disaster recovery by maintaining synchronized copies of data across multiple nodes, optimizing for consistency and fault tolerance in big data environments. Data duplication involves creating independent data copies, which can lead to increased storage costs and potential data inconsistency but may be useful for backup or archiving purposes. Selecting the right data management strategy depends on factors such as scalability requirements, latency tolerance, and cost constraints within your big data architecture.

Data Replication vs Data Duplication Infographic

techiny.com

techiny.com