Fault tolerance in Big Data systems ensures continuous operation despite hardware or software failures by automatically detecting and recovering from errors, minimizing data loss and downtime. High availability focuses on maintaining system accessibility and reliability through redundant components and failover mechanisms, reducing the impact of outages on user experience. Balancing fault tolerance and high availability is crucial for delivering resilient Big Data platforms that support uninterrupted data processing and analytics.

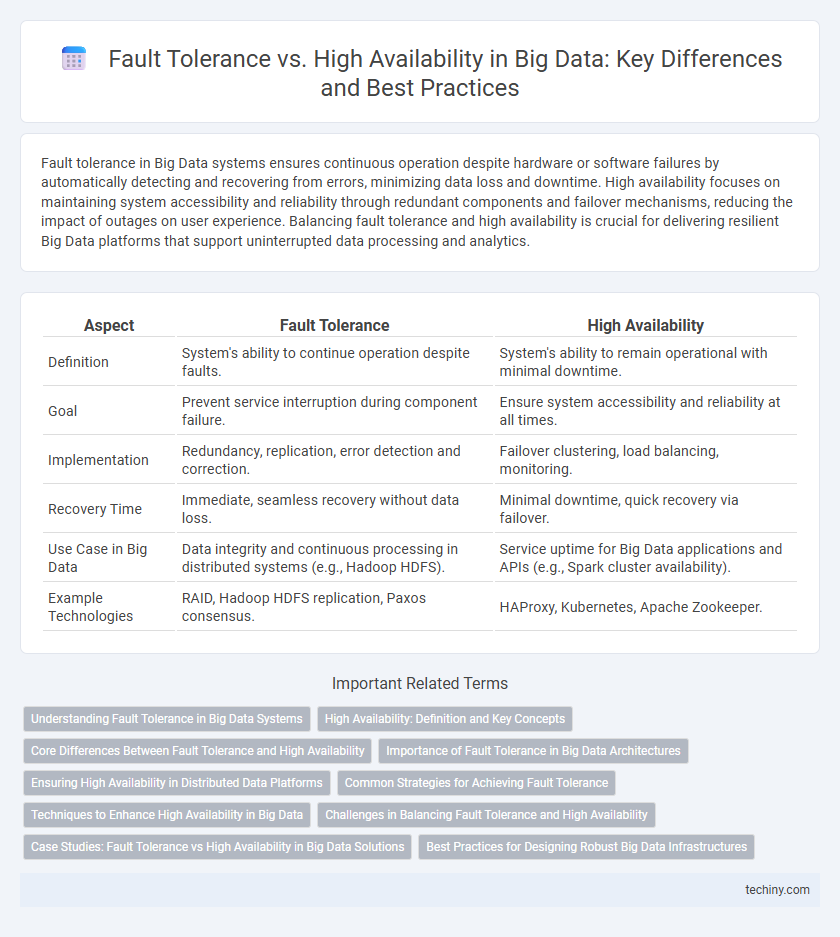

Table of Comparison

| Aspect | Fault Tolerance | High Availability |

|---|---|---|

| Definition | System's ability to continue operation despite faults. | System's ability to remain operational with minimal downtime. |

| Goal | Prevent service interruption during component failure. | Ensure system accessibility and reliability at all times. |

| Implementation | Redundancy, replication, error detection and correction. | Failover clustering, load balancing, monitoring. |

| Recovery Time | Immediate, seamless recovery without data loss. | Minimal downtime, quick recovery via failover. |

| Use Case in Big Data | Data integrity and continuous processing in distributed systems (e.g., Hadoop HDFS). | Service uptime for Big Data applications and APIs (e.g., Spark cluster availability). |

| Example Technologies | RAID, Hadoop HDFS replication, Paxos consensus. | HAProxy, Kubernetes, Apache Zookeeper. |

Understanding Fault Tolerance in Big Data Systems

Fault tolerance in big data systems ensures continuous data processing despite hardware failures or software crashes by automatically detecting and recovering from faults without data loss. It relies on techniques like data replication, checkpointing, and distributed storage to maintain system reliability and integrity. Understanding fault tolerance is crucial for designing resilient big data architectures that minimize downtime and support seamless data analytics.

High Availability: Definition and Key Concepts

High Availability (HA) in Big Data systems ensures continuous operation with minimal downtime by using redundant components and failover mechanisms. It focuses on system reliability through clustering, load balancing, and real-time monitoring to maintain seamless data processing and access. HA architectures prioritize rapid recovery and uninterrupted service to support critical data workflows and business continuity.

Core Differences Between Fault Tolerance and High Availability

Fault tolerance ensures system operations continue seamlessly despite hardware or software failures by using redundant components and error detection mechanisms, while high availability focuses on minimizing downtime and quickly restoring service through failover processes and clustering. Fault-tolerant systems aim for zero interruption with continuous operation, whereas high availability accepts brief service interruptions but targets rapid recovery to meet uptime goals, such as 99.999% (five nines). The core difference lies in fault tolerance's proactive prevention of disruptions versus high availability's emphasis on swift fault response and service restoration.

Importance of Fault Tolerance in Big Data Architectures

Fault tolerance is critical in Big Data architectures to ensure continuous data processing despite hardware failures or software errors, minimizing system downtime and preventing data loss. Implementing fault-tolerant mechanisms such as data replication, checkpointing, and automatic failure recovery enhances system reliability and maintains data integrity in distributed environments. These capabilities enable Big Data platforms like Hadoop and Apache Spark to deliver consistent performance and support real-time analytics even under adverse conditions.

Ensuring High Availability in Distributed Data Platforms

Ensuring high availability in distributed data platforms requires robust fault tolerance mechanisms such as data replication, automatic failover, and consistent state synchronization across nodes. Techniques like quorum-based consensus algorithms and partition tolerance help maintain continuous service despite hardware failures or network partitions. Optimizing resource allocation and monitoring system health in real-time further minimizes downtime and guarantees uninterrupted access to big data applications.

Common Strategies for Achieving Fault Tolerance

Common strategies for achieving fault tolerance in Big Data systems include data replication, checkpointing, and failover mechanisms, which ensure continuous operation despite hardware or software failures. Replication involves maintaining multiple copies of data across distributed nodes to prevent loss and enable quick recovery. Checkpointing periodically saves system states, allowing seamless restoration, while automated failover switches operations to standby components without downtime, collectively enhancing system reliability and data integrity.

Techniques to Enhance High Availability in Big Data

Techniques to enhance high availability in Big Data systems include data replication, load balancing, and failover strategies that ensure continuous system operation despite hardware or software failures. Distributed file systems like HDFS use replication across multiple nodes to prevent data loss and minimize downtime. Leveraging container orchestration platforms such as Kubernetes enables automated recovery and scaling, further improving availability in large-scale Big Data environments.

Challenges in Balancing Fault Tolerance and High Availability

Balancing fault tolerance and high availability in Big Data systems presents significant challenges due to the need for continuous data processing without interruptions while managing hardware failures and network issues. Ensuring fault tolerance requires redundancy and data replication, which can introduce latency and resource overhead, potentially impacting system responsiveness and availability. Achieving an optimal balance demands advanced orchestration techniques and architecture designs that minimize downtime yet maintain consistent data integrity under variable loads and failure scenarios.

Case Studies: Fault Tolerance vs High Availability in Big Data Solutions

Case studies in Big Data solutions reveal fault tolerance ensures systems continue processing despite hardware failures by replicating data and using error detection algorithms, minimizing data loss and downtime. High availability architectures prioritize system uptime through redundant components and failover mechanisms, achieving near-zero service interruptions for critical data applications. Real-world implementations like Hadoop's HDFS leverage fault tolerance by replicating data blocks, while Apache Cassandra emphasizes high availability with distributed, peer-to-peer nodes that automatically handle failover.

Best Practices for Designing Robust Big Data Infrastructures

Effective Big Data infrastructures balance fault tolerance and high availability by integrating redundant components, automated failover mechanisms, and real-time data replication. Implementing distributed storage systems like Hadoop HDFS or Apache Cassandra enhances fault tolerance by enabling data recovery in case of node failures. Prioritizing proactive monitoring, regular backup strategies, and load balancing ensures continuous service uptime and minimal data loss in large-scale Big Data environments.

Fault Tolerance vs High Availability Infographic

techiny.com

techiny.com