Big Data pets generate massive volumes of data through constant monitoring of health, activity, and environment, requiring scalable storage solutions to manage this influx efficiently. Velocity plays a crucial role as real-time data streams from sensors and wearables demand rapid processing to provide timely insights and alerts. Balancing volume and velocity ensures that pet owners and veterinarians receive actionable information without delays or data overload.

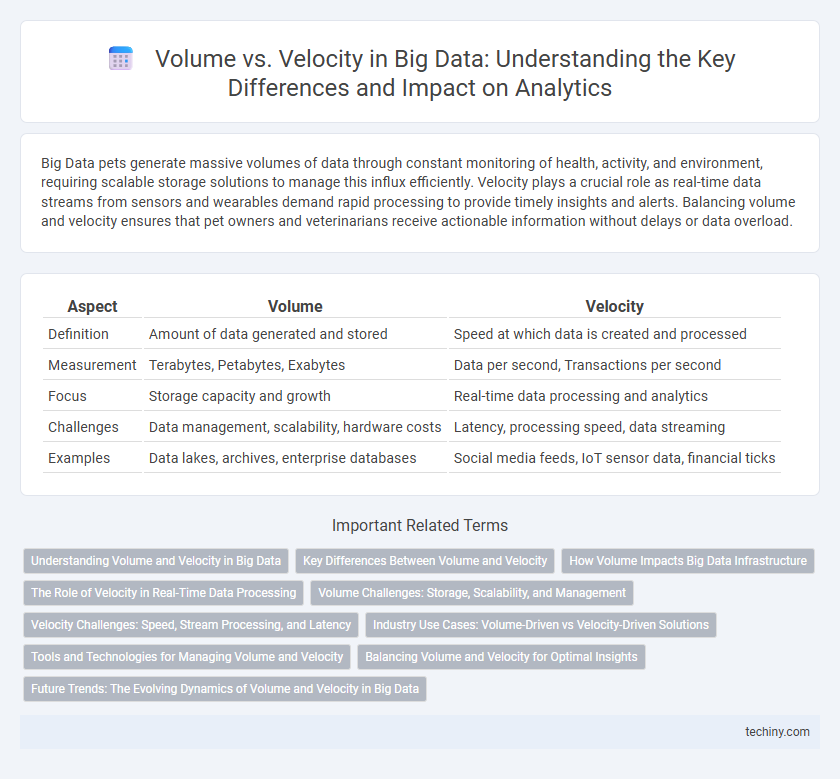

Table of Comparison

| Aspect | Volume | Velocity |

|---|---|---|

| Definition | Amount of data generated and stored | Speed at which data is created and processed |

| Measurement | Terabytes, Petabytes, Exabytes | Data per second, Transactions per second |

| Focus | Storage capacity and growth | Real-time data processing and analytics |

| Challenges | Data management, scalability, hardware costs | Latency, processing speed, data streaming |

| Examples | Data lakes, archives, enterprise databases | Social media feeds, IoT sensor data, financial ticks |

Understanding Volume and Velocity in Big Data

Volume in Big Data refers to the massive amounts of data generated from various sources such as social media, sensors, and transactions, often reaching terabytes or petabytes daily. Velocity describes the speed at which this data is produced, processed, and analyzed, highlighting the need for real-time or near-real-time data handling capabilities. Effective Big Data strategies must balance managing extensive data volume with the rapid ingestion and processing velocity to derive timely and relevant insights.

Key Differences Between Volume and Velocity

Volume in Big Data refers to the massive amounts of data generated and stored, often measured in terabytes or petabytes, highlighting the scale of information handled. Velocity describes the speed at which data is created, processed, and analyzed in real-time or near-real-time environments, emphasizing the urgency and timeliness of data flow. Key differences include volume's focus on data quantity while velocity centers on the rapidity of data generation and response times critical for time-sensitive analytics.

How Volume Impacts Big Data Infrastructure

The massive volume of data generated daily demands scalable storage solutions and robust processing capabilities within Big Data infrastructure. High data volume influences the architectural design by necessitating distributed systems such as Hadoop or cloud-based platforms to efficiently manage and analyze information. Infrastructure must also address challenges related to data transfer speeds, storage costs, and real-time accessibility to handle the growing scale of datasets.

The Role of Velocity in Real-Time Data Processing

Velocity in Big Data refers to the speed at which data is generated, collected, and processed, making it crucial for real-time analytics. High-velocity data streams enable immediate decision-making by continuously feeding systems with up-to-date information from sources like social media, sensors, and transactional systems. Real-time data processing leverages velocity to detect trends, anomalies, and insights instantly, enhancing responsiveness in dynamic environments such as finance, healthcare, and IoT.

Volume Challenges: Storage, Scalability, and Management

Volume challenges in Big Data primarily revolve around storing vast amounts of information efficiently, requiring scalable infrastructure to handle exponential data growth. Traditional databases often struggle with scalability, prompting the adoption of distributed storage systems like Hadoop Distributed File System (HDFS) and cloud-based solutions to manage petabytes of data. Effective data management techniques, including compression, partitioning, and indexing, are essential to optimize retrieval speed and maintain system performance under high volume conditions.

Velocity Challenges: Speed, Stream Processing, and Latency

Velocity in Big Data refers to the rapid speed at which data is generated and processed, presenting significant challenges in stream processing and maintaining low latency. Handling high-velocity data streams requires robust real-time analytics frameworks capable of ingesting and analyzing continuous data flows without delay. Minimizing latency is critical for applications such as financial trading, telecommunications, and IoT sensor networks where immediate insights drive decision-making.

Industry Use Cases: Volume-Driven vs Velocity-Driven Solutions

Volume-driven Big Data solutions excel in industries like retail and banking, where massive datasets such as transaction histories and customer records require deep analysis for trends and risk assessment. Velocity-driven solutions are critical in sectors like telecommunications and real-time fraud detection, processing rapid data streams to enable instantaneous decision-making and responsiveness. Understanding the distinction allows businesses to tailor their Big Data strategies to either prioritize extensive data accumulation or high-speed data processing based on their operational demands.

Tools and Technologies for Managing Volume and Velocity

Apache Hadoop and Apache Spark are essential tools for managing big data volume, providing distributed storage and parallel processing capabilities. For handling velocity, technologies like Apache Kafka and Apache Flink enable real-time data streaming and low-latency processing. Scalable cloud platforms such as AWS EMR and Google BigQuery integrate these tools to optimize both data volume storage and high-speed data ingestion.

Balancing Volume and Velocity for Optimal Insights

Balancing volume and velocity in big data processing ensures efficient handling of massive datasets while maintaining real-time analytics capabilities. High data volume requires scalable storage solutions such as distributed file systems, whereas velocity demands stream processing frameworks like Apache Kafka or Apache Flink. Optimizing the interplay between data volume and velocity enables businesses to extract timely, actionable insights without compromising system performance.

Future Trends: The Evolving Dynamics of Volume and Velocity in Big Data

Future trends in Big Data highlight a shifting balance where velocity increasingly rivals volume in importance, driven by real-time analytics and the surge of IoT devices generating continuous data streams. Advanced technologies like edge computing and AI-powered data processing are enhancing the capability to handle high-velocity data while ensuring scalability for ever-growing data volumes. Organizations adopting these innovations can achieve faster insights, improved decision-making, and greater agility in managing complex data environments.

Volume vs Velocity Infographic

techiny.com

techiny.com