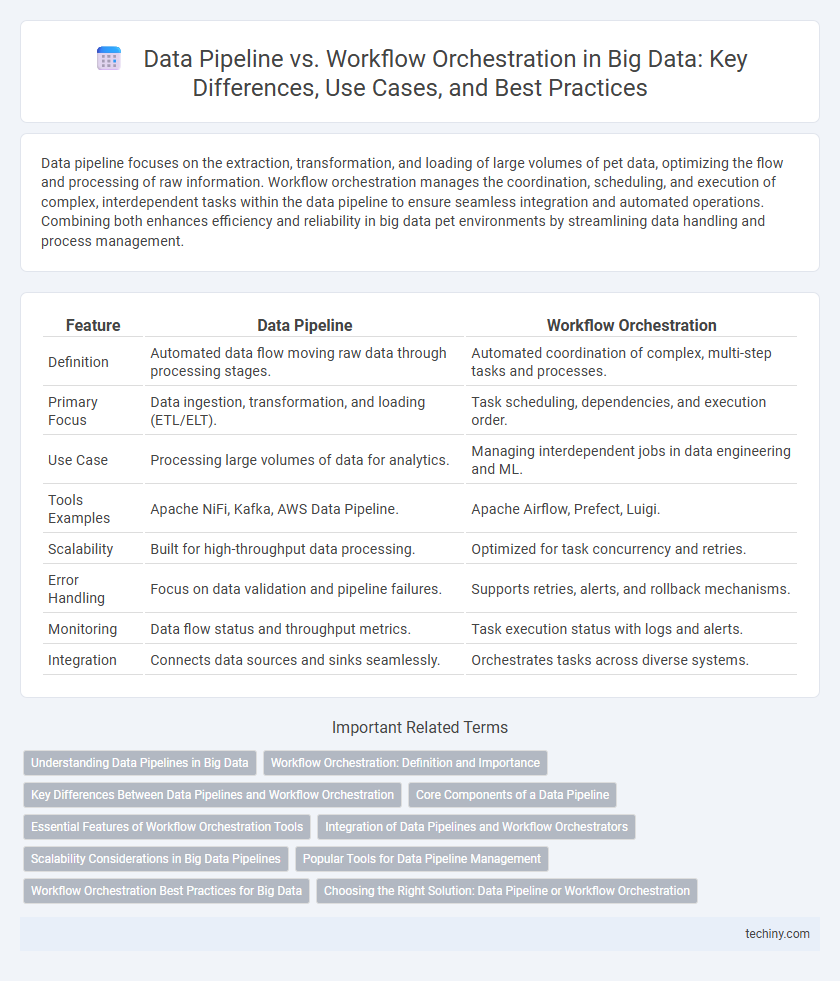

Data pipeline focuses on the extraction, transformation, and loading of large volumes of pet data, optimizing the flow and processing of raw information. Workflow orchestration manages the coordination, scheduling, and execution of complex, interdependent tasks within the data pipeline to ensure seamless integration and automated operations. Combining both enhances efficiency and reliability in big data pet environments by streamlining data handling and process management.

Table of Comparison

| Feature | Data Pipeline | Workflow Orchestration |

|---|---|---|

| Definition | Automated data flow moving raw data through processing stages. | Automated coordination of complex, multi-step tasks and processes. |

| Primary Focus | Data ingestion, transformation, and loading (ETL/ELT). | Task scheduling, dependencies, and execution order. |

| Use Case | Processing large volumes of data for analytics. | Managing interdependent jobs in data engineering and ML. |

| Tools Examples | Apache NiFi, Kafka, AWS Data Pipeline. | Apache Airflow, Prefect, Luigi. |

| Scalability | Built for high-throughput data processing. | Optimized for task concurrency and retries. |

| Error Handling | Focus on data validation and pipeline failures. | Supports retries, alerts, and rollback mechanisms. |

| Monitoring | Data flow status and throughput metrics. | Task execution status with logs and alerts. |

| Integration | Connects data sources and sinks seamlessly. | Orchestrates tasks across diverse systems. |

Understanding Data Pipelines in Big Data

Data pipelines in Big Data systems are designed to automate the movement and transformation of large-scale data from source to destination, ensuring continuous and reliable data flow. Unlike workflow orchestration, which manages and schedules complex dependent tasks across various systems, data pipelines specifically focus on processing and integrating raw data into usable formats for analytics. Efficient data pipeline architecture enhances data quality, reduces latency, and supports scalable real-time or batch processing environments critical for Big Data applications.

Workflow Orchestration: Definition and Importance

Workflow orchestration in Big Data refers to the automated coordination and management of complex data processes and tasks to ensure seamless execution and resource optimization. It plays a crucial role in integrating various data sources, tools, and systems, enabling real-time data processing and improving operational efficiency. Effective workflow orchestration reduces data latency, enhances scalability, and supports robust data governance across distributed environments.

Key Differences Between Data Pipelines and Workflow Orchestration

Data pipelines primarily focus on the automated movement and transformation of large datasets across various systems, ensuring seamless data ingestion, processing, and storage. Workflow orchestration manages and sequences multiple interdependent tasks, integrating diverse processes and systems to optimize resource allocation, scheduling, and error handling. Key differences include pipelines emphasizing data flow and transformation, while orchestration centers on coordinating workflows and task dependencies across complex environments.

Core Components of a Data Pipeline

Core components of a data pipeline include data ingestion, data processing, and data storage, enabling seamless flow and transformation of raw data into actionable insights. Data ingestion collects data from diverse sources using batch or real-time methods, while processing involves data cleaning, transformation, and enrichment. The final component, data storage, ensures organized, scalable repositories such as data lakes or warehouses for efficient querying and analytics.

Essential Features of Workflow Orchestration Tools

Workflow orchestration tools provide essential features such as automated task scheduling, real-time monitoring, and error handling that streamline complex data pipeline processes. They enable seamless integration of heterogeneous data sources, ensuring data consistency and efficient resource management throughout the pipeline lifecycle. Robust orchestration frameworks support scalability and fault tolerance, crucial for maintaining high-performance big data workflows.

Integration of Data Pipelines and Workflow Orchestrators

Data pipeline integration involves seamless data extraction, transformation, and loading (ETL) processes that feed into various systems, ensuring continuous data flow and consistency. Workflow orchestration platforms like Apache Airflow or Prefect manage and automate complex sequences of data tasks, coordinating dependencies and error handling across pipelines. Combining data pipelines with workflow orchestration enables scalable, automated, and reliable big data processing environments essential for real-time analytics and data-driven decision-making.

Scalability Considerations in Big Data Pipelines

Scalability considerations in big data pipelines emphasize the efficient handling of increasing data volumes and processing demands through robust data pipeline architectures. Data pipelines must support scalable data ingestion, transformation, and storage mechanisms, leveraging distributed systems like Apache Kafka and Apache Hadoop to manage high-throughput data streams. Workflow orchestration tools such as Apache Airflow or Luigi enable dynamic scaling of complex task dependencies and resource allocation, ensuring seamless execution and fault tolerance as data workloads grow exponentially.

Popular Tools for Data Pipeline Management

Popular tools for data pipeline management include Apache Airflow, Luigi, and Apache NiFi, each offering robust capabilities for designing, scheduling, and monitoring complex data workflows. Apache Airflow is widely favored for its dynamic workflow generation and scalable architecture, while Luigi excels in handling batch data processing tasks with its dependency resolution features. Apache NiFi provides a user-friendly interface for real-time data ingestion, transformation, and routing, making it ideal for data flow automation across varied sources.

Workflow Orchestration Best Practices for Big Data

Workflow orchestration in big data environments ensures seamless integration and automation of complex data pipelines, optimizing resource allocation and reducing processing latency. Best practices include modular pipeline design, implementing fault tolerance with automatic retries, and using scalable orchestration tools like Apache Airflow or Kubeflow to manage dependencies and monitor execution. Prioritizing clear logging and alerting mechanisms enables swift troubleshooting and maintains data pipeline reliability at scale.

Choosing the Right Solution: Data Pipeline or Workflow Orchestration

Choosing the right solution between data pipeline and workflow orchestration involves evaluating the complexity and scale of data processing tasks, where data pipelines streamline the movement and transformation of large datasets in real-time or batch modes, while workflow orchestration manages the automated execution of interdependent tasks across diverse systems. Data pipeline tools like Apache Kafka, Apache NiFi, and AWS Glue emphasize efficient data ingestion and transformation, whereas workflow orchestration platforms such as Apache Airflow and Prefect excel in scheduling, monitoring, and error handling of multi-step workflows. Organizations should consider data volume, integration requirements, latency needs, and operational visibility to select between robust data pipelines for high-throughput data flows or comprehensive workflow orchestrators for managing complex, conditional task dependencies.

Data Pipeline vs Workflow Orchestration Infographic

techiny.com

techiny.com