In Big Data systems, the CAP theorem highlights the trade-off between consistency and availability, where ensuring data consistency across distributed nodes can reduce system availability during network partitions. Prioritizing consistency guarantees that all users see the same data simultaneously, critical for applications requiring accurate real-time updates. Conversely, emphasizing availability allows the system to respond quickly despite partitions, accepting temporary inconsistencies to maintain user accessibility.

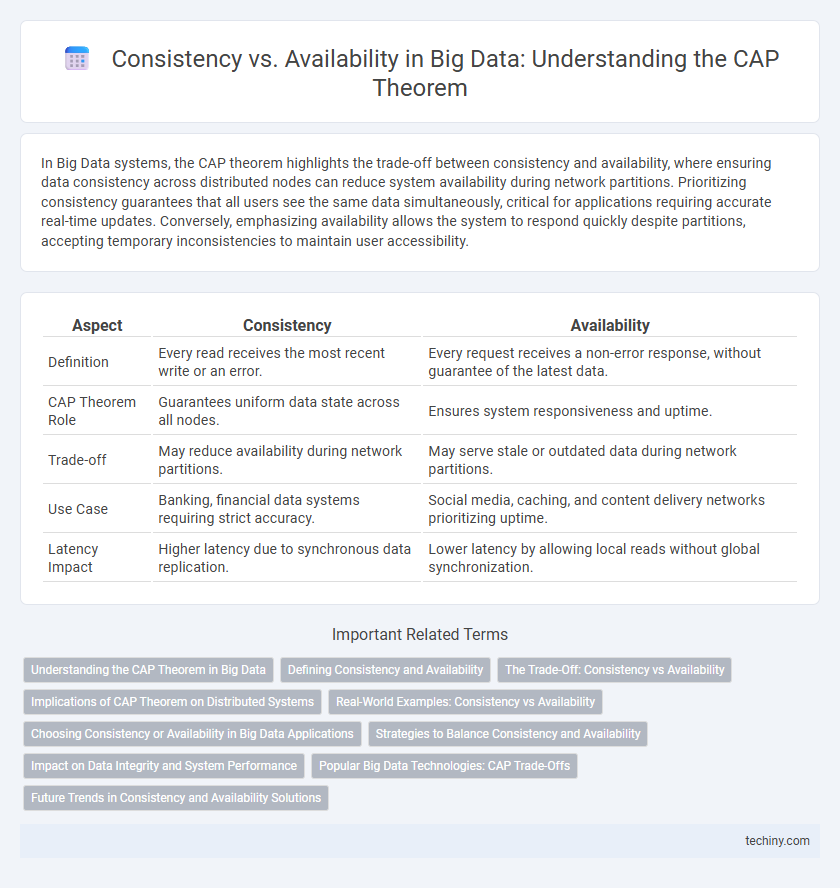

Table of Comparison

| Aspect | Consistency | Availability |

|---|---|---|

| Definition | Every read receives the most recent write or an error. | Every request receives a non-error response, without guarantee of the latest data. |

| CAP Theorem Role | Guarantees uniform data state across all nodes. | Ensures system responsiveness and uptime. |

| Trade-off | May reduce availability during network partitions. | May serve stale or outdated data during network partitions. |

| Use Case | Banking, financial data systems requiring strict accuracy. | Social media, caching, and content delivery networks prioritizing uptime. |

| Latency Impact | Higher latency due to synchronous data replication. | Lower latency by allowing local reads without global synchronization. |

Understanding the CAP Theorem in Big Data

The CAP Theorem in Big Data highlights the trade-offs between Consistency, Availability, and Partition Tolerance in distributed systems, asserting that only two of these three properties can be achieved simultaneously. Consistency ensures that every read receives the most recent write, while Availability guarantees that every request receives a response without a guarantee of the most recent data. Partition Tolerance allows systems to continue operating despite network failures, making it a critical consideration in designing scalable and reliable Big Data architectures.

Defining Consistency and Availability

Consistency ensures that every read receives the most recent write or an error, guaranteeing a uniform view of the data across all nodes. Availability guarantees that every request receives a response, regardless of the state of any individual node, ensuring system responsiveness. The CAP Theorem highlights the trade-off between achieving full consistency and high availability in distributed big data systems.

The Trade-Off: Consistency vs Availability

The CAP Theorem highlights an inherent trade-off between consistency and availability in distributed big data systems, where ensuring strict consistency may reduce system availability during network partitions. Achieving high availability often requires compromising on consistency guarantees, allowing for eventual consistency rather than immediate data synchronization. System architects must carefully balance these factors based on application requirements such as fault tolerance, latency sensitivity, and data integrity to optimize big data performance.

Implications of CAP Theorem on Distributed Systems

The CAP Theorem highlights the trade-offs between consistency and availability in distributed systems, stating that only two of the three properties--consistency, availability, and partition tolerance--can be fully achieved simultaneously. In large-scale big data environments, maintaining strong consistency often reduces system availability during network partitions, impacting real-time data processing and response times. Designing distributed databases involves strategic decisions on prioritizing consistency or availability based on application requirements, influencing scalability and fault tolerance in data architectures.

Real-World Examples: Consistency vs Availability

In real-world distributed databases like Cassandra and MongoDB, consistency is often sacrificed to achieve higher availability, enabling continuous operation during network partitions. Systems such as HBase prioritize consistency over availability, ensuring data accuracy but risking downtime if nodes become unreachable. These trade-offs illustrate the CAP theorem's principle that distributed systems cannot simultaneously guarantee consistency, availability, and partition tolerance.

Choosing Consistency or Availability in Big Data Applications

Choosing consistency in Big Data applications ensures data accuracy and reliability by guaranteeing all nodes reflect the same data state, crucial for financial or healthcare systems. Prioritizing availability allows continuous data access and system responsiveness despite network partitions, essential for user-facing services like social media platforms. Balancing these trade-offs requires analyzing application requirements, latency tolerance, and fault tolerance to align with business objectives and user expectations.

Strategies to Balance Consistency and Availability

Strategies to balance consistency and availability in Big Data systems often involve tuning replication methods, such as using quorum-based reads and writes to maintain data integrity while improving uptime. Techniques like eventual consistency allow systems to maximize availability by accepting temporary data conflicts that reconcile over time. Implementing dynamic partition tolerance and adaptive consistency models ensures that distributed databases can respond effectively to network failures without sacrificing critical access or data accuracy.

Impact on Data Integrity and System Performance

In Big Data systems, the CAP Theorem highlights the trade-off between consistency and availability, directly impacting data integrity and system performance. Prioritizing consistency ensures accurate and reliable data across distributed nodes but may introduce latency and reduce system availability during network partitions. Conversely, emphasizing availability enhances system responsiveness and uptime but can result in temporary data inconsistencies, challenging data integrity management.

Popular Big Data Technologies: CAP Trade-Offs

Popular big data technologies like Apache Cassandra and MongoDB often prioritize availability over consistency to ensure continuous operation during network partitions, reflecting the AP choice in the CAP theorem. Apache HBase and Google's Bigtable lean towards consistency and partition tolerance, ensuring data accuracy at the expense of availability during failures. Understanding these CAP trade-offs is crucial for architects when designing distributed systems that balance performance and reliability according to specific application needs.

Future Trends in Consistency and Availability Solutions

Future trends in consistency and availability solutions emphasize adaptive mechanisms that dynamically balance the CAP theorem trade-offs based on real-time application needs and network conditions. Emerging approaches leverage machine learning algorithms to predict partition likelihood and adjust data replication strategies, optimizing both consistency and availability. Innovations in edge computing and decentralized architectures also promise enhanced availability without sacrificing strong consistency guarantees in distributed big data systems.

Consistency vs Availability (CAP Theorem) Infographic

techiny.com

techiny.com