In Big Data architectures, the Master Node manages the overall cluster, coordinating tasks, resource allocation, and job scheduling to ensure efficient processing. Worker Nodes execute the data processing tasks assigned by the Master Node, handling storage and computation across distributed systems. The separation of roles between Master and Worker Nodes enables scalable, fault-tolerant data processing in large-scale environments.

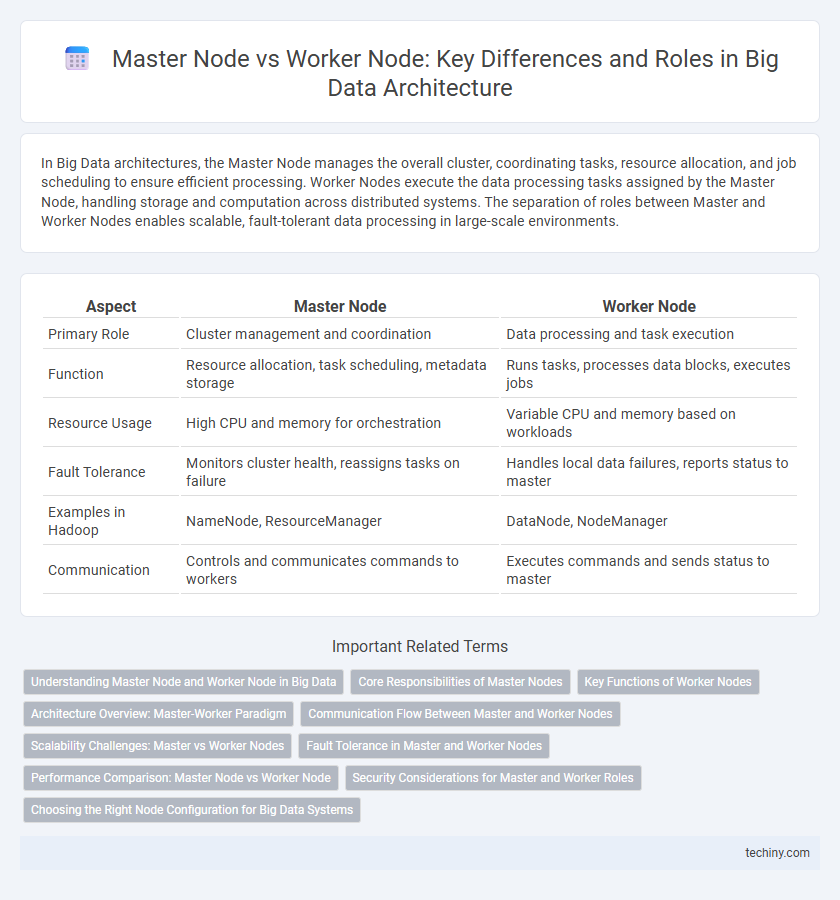

Table of Comparison

| Aspect | Master Node | Worker Node |

|---|---|---|

| Primary Role | Cluster management and coordination | Data processing and task execution |

| Function | Resource allocation, task scheduling, metadata storage | Runs tasks, processes data blocks, executes jobs |

| Resource Usage | High CPU and memory for orchestration | Variable CPU and memory based on workloads |

| Fault Tolerance | Monitors cluster health, reassigns tasks on failure | Handles local data failures, reports status to master |

| Examples in Hadoop | NameNode, ResourceManager | DataNode, NodeManager |

| Communication | Controls and communicates commands to workers | Executes commands and sends status to master |

Understanding Master Node and Worker Node in Big Data

Master nodes coordinate cluster operations, managing resource allocation, job scheduling, and maintaining system metadata in Big Data environments. Worker nodes perform the actual data processing tasks by executing computations, storing data locally, and reporting status to the master node. The efficient interaction between master and worker nodes ensures scalable and fault-tolerant processing of large datasets across distributed systems.

Core Responsibilities of Master Nodes

Master Nodes in Big Data architecture coordinate cluster management, oversee resource allocation, and handle task scheduling to ensure efficient processing. They maintain metadata, monitor system health, and manage communication between Worker Nodes for optimized data distribution. Master Nodes also orchestrate fault tolerance mechanisms, crucial for maintaining cluster reliability during node failures.

Key Functions of Worker Nodes

Worker Nodes handle the execution of tasks assigned by the Master Node, managing data processing and computation in distributed Big Data frameworks like Hadoop and Spark. They perform key functions such as data storage, task execution, intermediate data computation, and result reporting back to the Master Node. Worker Nodes also manage resource allocation locally, ensuring efficient processing and scalability within the cluster.

Architecture Overview: Master-Worker Paradigm

The Master-Worker paradigm in Big Data architecture involves a Master Node that orchestrates the overall process by assigning tasks, managing resources, and monitoring the cluster's health, while multiple Worker Nodes execute the assigned tasks in parallel, ensuring scalability and fault tolerance. Master Nodes handle metadata management and job scheduling, maintaining system coherence and data consistency across the distributed environment. Worker Nodes perform data processing and computation, reporting progress and results back to the Master Node, enabling efficient large-scale data processing workflows.

Communication Flow Between Master and Worker Nodes

The communication flow between Master and Worker Nodes in Big Data architectures is essential for efficient task coordination and data processing. Master Nodes assign tasks, distribute data chunks, and monitor the health and status of Worker Nodes, while Worker Nodes execute computations, process data locally, and return results or status updates to the Master Node. This continuous bidirectional communication ensures fault tolerance, load balancing, and optimized resource utilization across distributed systems.

Scalability Challenges: Master vs Worker Nodes

Master nodes coordinate cluster management and allocate tasks, often becoming bottlenecks when scaling due to limited processing capacity and memory constraints. Worker nodes handle data processing and computation, scaling more effectively by adding nodes to distribute workload across the cluster. Scalability challenges arise as master nodes struggle to manage metadata and cluster state at large scale, while worker nodes can be elastically expanded to improve parallel processing performance.

Fault Tolerance in Master and Worker Nodes

Master nodes coordinate cluster activities and maintain metadata, implementing fault tolerance through leader election and state replication to ensure continuous availability. Worker nodes execute tasks and store data, leveraging data replication and task re-execution to recover from failures and maintain processing integrity. Fault tolerance in big data clusters depends on the master node's ability to manage cluster state reliably and the worker nodes' capacity to handle hardware or software faults without data loss.

Performance Comparison: Master Node vs Worker Node

Master Nodes coordinate cluster operations and manage resource allocation, which limits their performance in data processing tasks compared to Worker Nodes that execute parallel computations directly on large datasets. Worker Nodes maximize throughput and minimize latency by handling distributed processing workloads, making them essential for high-performance big data environments. Performance metrics such as task execution time, CPU utilization, and network I/O significantly favor Worker Nodes over Master Nodes in operational efficiency.

Security Considerations for Master and Worker Roles

Master nodes in Big Data architectures require stringent security measures such as role-based access control (RBAC) and encrypted communication channels to protect cluster management and configuration data. Worker nodes must implement robust authentication protocols and data encryption at rest and in transit to safeguard processing tasks and intermediate data. Securing both nodes with continuous monitoring and regular patching mitigates risks of unauthorized access and potential data breaches within the distributed environment.

Choosing the Right Node Configuration for Big Data Systems

Selecting the right node configuration in Big Data systems is crucial for optimizing cluster performance, where the Master Node manages cluster resources and coordinates tasks, while Worker Nodes execute data processing jobs. Balancing the number of Master Nodes ensures high availability and fault tolerance, whereas scaling Worker Nodes enhances parallel processing capacity and reduces job completion time. Optimizing CPU, memory, and storage parameters on both node types ensures efficient resource utilization and meets specific workload requirements.

Master Node vs Worker Node Infographic

techiny.com

techiny.com