MapReduce processes massive datasets by distributing tasks across clusters, optimizing for fault tolerance and scalability but often resulting in higher latency. In-memory processing stores data in RAM, enabling faster analytical computations and real-time insights, though it requires substantial memory resources. Choosing between these depends on workload size, speed requirements, and resource availability in big data environments.

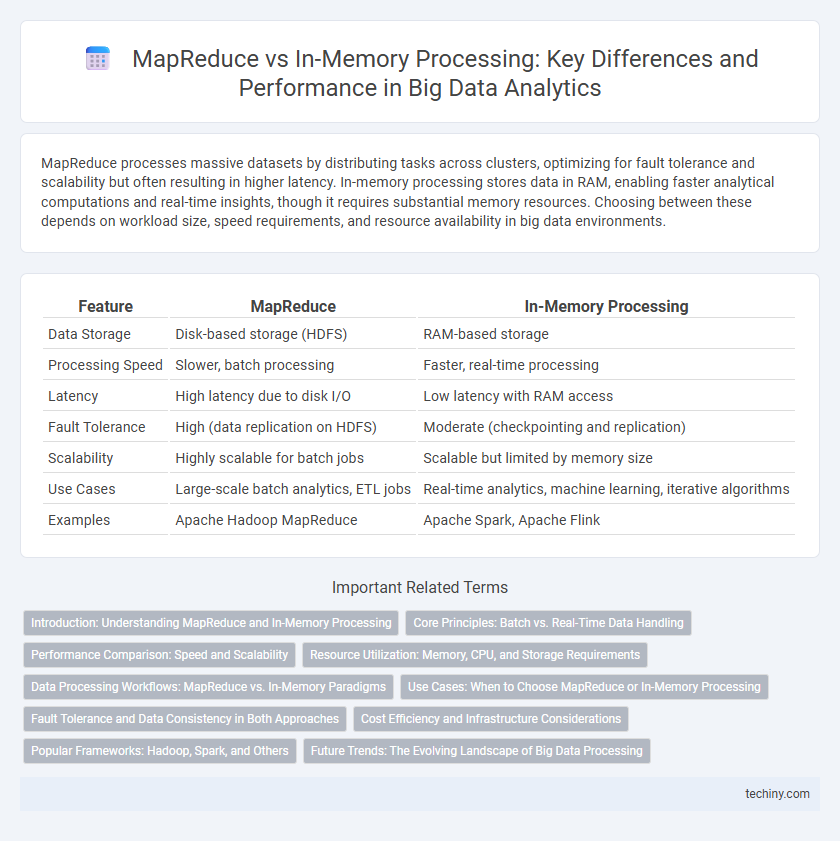

Table of Comparison

| Feature | MapReduce | In-Memory Processing |

|---|---|---|

| Data Storage | Disk-based storage (HDFS) | RAM-based storage |

| Processing Speed | Slower, batch processing | Faster, real-time processing |

| Latency | High latency due to disk I/O | Low latency with RAM access |

| Fault Tolerance | High (data replication on HDFS) | Moderate (checkpointing and replication) |

| Scalability | Highly scalable for batch jobs | Scalable but limited by memory size |

| Use Cases | Large-scale batch analytics, ETL jobs | Real-time analytics, machine learning, iterative algorithms |

| Examples | Apache Hadoop MapReduce | Apache Spark, Apache Flink |

Introduction: Understanding MapReduce and In-Memory Processing

MapReduce is a programming model designed for processing large data sets with a distributed algorithm on a cluster, leveraging disk-based storage to handle vast amounts of data across nodes. In-memory processing, by contrast, stores data in RAM, enabling faster data retrieval and real-time analytics by reducing the latency associated with disk I/O operations. Understanding the fundamental differences between MapReduce's batch-oriented, disk-centric approach and in-memory processing's speed-driven, memory-resident techniques is critical for optimizing big data workflows.

Core Principles: Batch vs. Real-Time Data Handling

MapReduce operates on a core principle of batch data processing, dividing large datasets into chunks and executing parallel computations across distributed nodes to handle massive volumes efficiently. In-memory processing, exemplified by frameworks like Apache Spark, prioritizes real-time data handling by storing data in RAM, enabling faster iterative computations and low-latency analytics. The fundamental distinction lies in MapReduce's disk-based sequential processing versus in-memory's ability to process streaming or dynamic data with minimal delays.

Performance Comparison: Speed and Scalability

MapReduce processes large datasets by distributing tasks across clusters, offering scalability but often slower execution due to disk I/O operations. In-memory processing frameworks like Apache Spark accelerate computation by storing data in RAM, drastically improving speed and enabling low-latency analytics. Scalability is enhanced in-memory through optimized resource management, but MapReduce retains advantages in handling massive, disk-based datasets where memory constraints exist.

Resource Utilization: Memory, CPU, and Storage Requirements

MapReduce relies heavily on disk storage for intermediate data, resulting in higher latency and increased I/O overhead, while its CPU utilization is moderate due to batch processing. In-memory processing optimizes resource utilization by storing data directly in RAM, significantly reducing read/write cycles and accelerating CPU performance for real-time analytics. Memory-intensive in-memory frameworks demand substantial RAM capacity, but decrease reliance on storage, contrasting with MapReduce's disk-centric approach that can handle larger datasets with less memory.

Data Processing Workflows: MapReduce vs. In-Memory Paradigms

MapReduce processes large datasets through distributed batch jobs that read and write to disk at each stage, optimizing for fault tolerance and scalability but incurring higher latency. In-memory processing frameworks such as Apache Spark leverage RAM to perform iterative algorithms and interactive analytics with significantly faster data retrieval and reduced I/O overhead. Choosing between MapReduce and in-memory paradigms depends on workload characteristics, with MapReduce suited for complex, disk-intensive batch jobs and in-memory processing excelling in real-time, iterative data workflows.

Use Cases: When to Choose MapReduce or In-Memory Processing

MapReduce excels in processing massive datasets across distributed systems, making it ideal for batch processing tasks such as log analysis, data warehousing, and large-scale ETL operations. In-memory processing platforms like Apache Spark are better suited for real-time analytics, iterative algorithms, and machine learning tasks that require low latency and rapid data access. Choosing between MapReduce and in-memory processing depends on factors like data size, processing speed requirements, and the nature of the workload.

Fault Tolerance and Data Consistency in Both Approaches

MapReduce ensures fault tolerance through its distributed processing model by re-executing failed tasks on different nodes, maintaining data consistency via intermediate data checkpoints stored on disk. In-memory processing frameworks like Apache Spark offer faster execution by keeping data in RAM but achieve fault tolerance using lineage-based recomputation, where lost data partitions are reconstructed from original input data. Both approaches prioritize data consistency; MapReduce leverages disk-based persistence, while in-memory processing relies on transformational lineage and data replication techniques to recover from faults.

Cost Efficiency and Infrastructure Considerations

MapReduce leverages distributed disk-based storage, reducing upfront hardware costs but potentially increasing latency and operational expenses due to slower data processing. In-memory processing demands significant RAM investment, driving higher initial infrastructure costs but enabling real-time analytics and faster query execution that can reduce long-term computational expenses. Organizations must balance MapReduce's cost-effective scalability against in-memory processing's higher performance for time-sensitive big data workloads.

Popular Frameworks: Hadoop, Spark, and Others

Hadoop's MapReduce framework processes large datasets by distributing tasks across clusters, optimizing batch processing but often suffering from higher latency. Apache Spark leverages in-memory processing to accelerate data analytics, significantly reducing execution time for iterative algorithms and real-time applications. Other emerging frameworks like Flink and Dask also emphasize in-memory computation, enhancing stream processing capabilities and scalability in big data environments.

Future Trends: The Evolving Landscape of Big Data Processing

MapReduce, traditionally dominant in batch processing, is gradually supplemented by in-memory processing frameworks like Apache Spark that offer faster data analytics through real-time computation. Future trends in big data processing emphasize hybrid architectures combining disk-based scalability of MapReduce with the low-latency capabilities of in-memory systems to handle growing volumes and velocity of data. Emerging technologies leverage machine learning integration and hardware advancements such as NVMe and persistent memory to optimize both batch and streaming workloads in scalable, high-performance big data ecosystems.

MapReduce vs In-Memory Processing Infographic

techiny.com

techiny.com