Data sampling involves selecting a representative subset of a large dataset to analyze patterns while preserving computational efficiency. Data aggregation combines multiple data points, summarizing them into comprehensive metrics or insights that highlight overall trends. Both techniques optimize big data processing but serve different purposes: sampling reduces volume for analysis, whereas aggregation simplifies data interpretation.

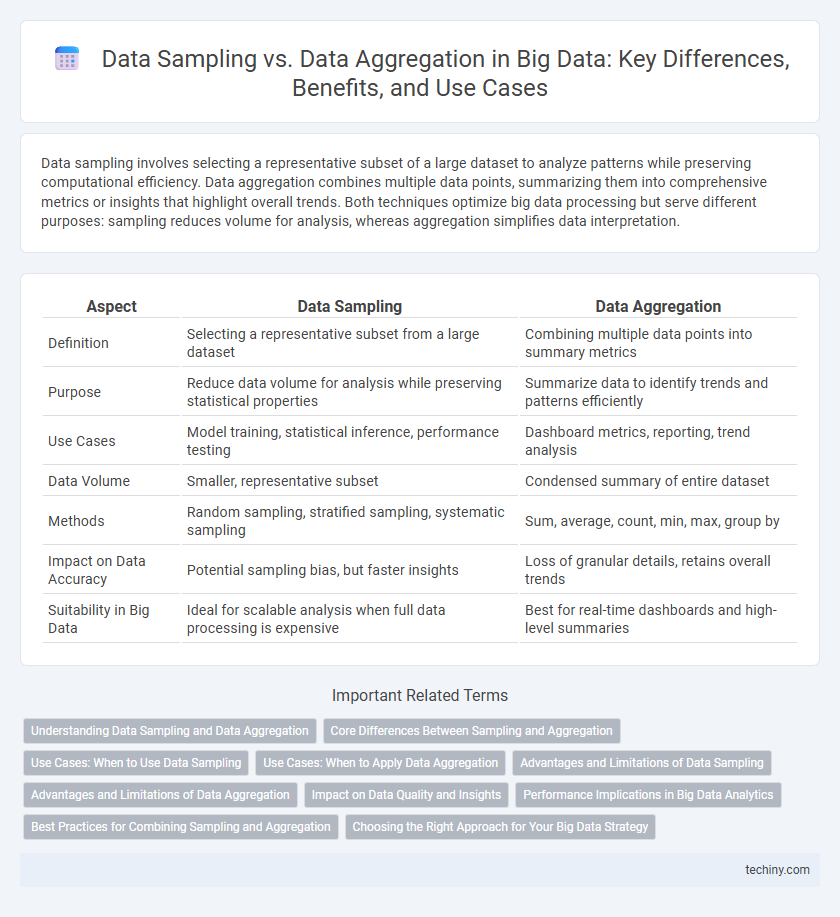

Table of Comparison

| Aspect | Data Sampling | Data Aggregation |

|---|---|---|

| Definition | Selecting a representative subset from a large dataset | Combining multiple data points into summary metrics |

| Purpose | Reduce data volume for analysis while preserving statistical properties | Summarize data to identify trends and patterns efficiently |

| Use Cases | Model training, statistical inference, performance testing | Dashboard metrics, reporting, trend analysis |

| Data Volume | Smaller, representative subset | Condensed summary of entire dataset |

| Methods | Random sampling, stratified sampling, systematic sampling | Sum, average, count, min, max, group by |

| Impact on Data Accuracy | Potential sampling bias, but faster insights | Loss of granular details, retains overall trends |

| Suitability in Big Data | Ideal for scalable analysis when full data processing is expensive | Best for real-time dashboards and high-level summaries |

Understanding Data Sampling and Data Aggregation

Data sampling involves selecting a representative subset of a large dataset to enable faster analysis while preserving the overall data characteristics. Data aggregation combines multiple data points into summarized metrics such as averages or totals, facilitating trend identification and decision-making. These techniques optimize big data processing by reducing complexity and improving computational efficiency.

Core Differences Between Sampling and Aggregation

Data sampling involves selecting a representative subset of a large dataset to analyze patterns or make inferences, prioritizing accuracy and efficiency by reducing volume without altering original data points. Data aggregation combines multiple data records into summarized metrics, such as averages or totals, to provide a high-level overview that supports decision-making and reporting. The core difference lies in sampling preserving raw data characteristics for detailed analysis, while aggregation transforms data into consolidated forms for trend identification and simplified insights.

Use Cases: When to Use Data Sampling

Data sampling is ideal for exploratory data analysis and model training when working with massive datasets that exceed computational capacity, enabling efficient insights without full data processing. It proves valuable in scenarios requiring quick decision-making, such as real-time analytics or preliminary hypothesis testing. Data aggregation suits summary reporting and trend analysis where detailed individual data points are unnecessary, contrasting with sampling's focus on representative subset selection for accuracy in predictive modeling.

Use Cases: When to Apply Data Aggregation

Data aggregation is ideal for scenarios where summarizing large datasets is essential for generating high-level insights, such as in sales trend analysis, customer behavior monitoring, or real-time dashboard reporting. It simplifies complex data by consolidating measurements into meaningful metrics like totals, averages, or counts, enabling faster decision-making and resource optimization. This method is particularly effective when the objective is to identify overarching patterns rather than analyze individual data points in detail.

Advantages and Limitations of Data Sampling

Data sampling in big data offers advantages such as reduced processing time and lower storage requirements by analyzing a representative subset of data instead of the entire dataset. It enables quicker insights and efficient model training but may lead to biased results or inaccurate conclusions if the sample is not truly representative. Limitations include potential loss of valuable information and decreased accuracy compared to full data aggregation, which compiles all data points for comprehensive analysis.

Advantages and Limitations of Data Aggregation

Data aggregation consolidates large datasets into summary statistics, enhancing computational efficiency and enabling faster insights across extensive big data environments. Its advantages include reduced data volume, streamlined analysis, and improved data visualization, but limitations arise from potential loss of detailed information and possible obscuring of outliers or anomalies critical for granular analysis. Effective use of data aggregation requires balancing data reduction with the need for preserving essential data characteristics to maintain analytical accuracy.

Impact on Data Quality and Insights

Data sampling can improve processing speed and reduce costs but risks omitting critical information, potentially leading to biased or incomplete insights. Data aggregation consolidates information to provide a comprehensive view, enhancing data quality by minimizing noise and highlighting trends. Balancing sampling and aggregation techniques is essential for accurate, high-quality insights in big data analytics.

Performance Implications in Big Data Analytics

Data sampling reduces dataset size by selecting representative subsets, significantly improving query speed and lowering resource consumption in big data analytics. Data aggregation combines multiple data points into summarized metrics, which accelerates analytical tasks but may obscure granular insights. Choosing between sampling and aggregation depends on the performance requirements and the need for detailed versus summarized information in large-scale data processing.

Best Practices for Combining Sampling and Aggregation

Combining data sampling and data aggregation enhances Big Data analytics by balancing accuracy and performance. Best practices involve selecting representative samples that maintain key statistical properties before applying aggregation functions to reduce dimensionality and highlight trends. Leveraging stratified sampling ensures diverse data coverage, while aggregation techniques like grouping and summarization optimize query efficiency and resource utilization.

Choosing the Right Approach for Your Big Data Strategy

Data sampling involves selecting a representative subset of data to analyze patterns efficiently, while data aggregation combines data from multiple sources or records to provide summarized insights. Choosing the right approach for your big data strategy depends on the specific use case, with sampling ideal for reducing computational costs and speeding up analysis, and aggregation best suited for deriving high-level trends and metrics across large datasets. Balancing these methods ensures optimized performance and accurate decision-making in big data analytics.

Data Sampling vs Data Aggregation Infographic

techiny.com

techiny.com