Distributed File Systems enable scalable storage across multiple machines, enhancing fault tolerance and data availability compared to Traditional File Systems that store data on a single device. By distributing data, they support parallel processing and higher throughput essential for Big Data applications. Traditional File Systems are limited by hardware constraints and lack the ability to efficiently handle massive datasets common in Big Data environments.

Table of Comparison

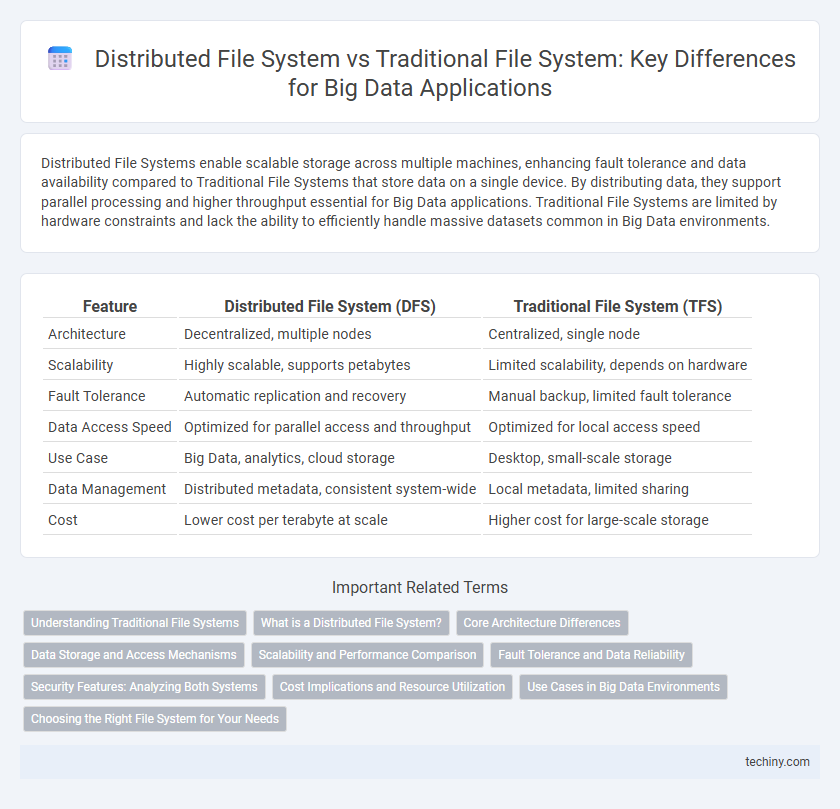

| Feature | Distributed File System (DFS) | Traditional File System (TFS) |

|---|---|---|

| Architecture | Decentralized, multiple nodes | Centralized, single node |

| Scalability | Highly scalable, supports petabytes | Limited scalability, depends on hardware |

| Fault Tolerance | Automatic replication and recovery | Manual backup, limited fault tolerance |

| Data Access Speed | Optimized for parallel access and throughput | Optimized for local access speed |

| Use Case | Big Data, analytics, cloud storage | Desktop, small-scale storage |

| Data Management | Distributed metadata, consistent system-wide | Local metadata, limited sharing |

| Cost | Lower cost per terabyte at scale | Higher cost for large-scale storage |

Understanding Traditional File Systems

Traditional file systems organize data in a hierarchical structure on a single storage device, making them suitable for managing files on individual computers. These systems emphasize metadata management, file security, and efficient access but encounter limitations in scalability and fault tolerance when handling large datasets. Unlike distributed file systems, traditional file systems lack the ability to distribute data across multiple nodes, which restricts their use in big data environments requiring high availability and parallel processing.

What is a Distributed File System?

A Distributed File System (DFS) enables storage and access of data across multiple networked servers, providing scalability and fault tolerance for Big Data applications. It allows users to access and process large datasets in parallel, improving performance compared to traditional file systems limited to a single machine's storage. DFS architectures like HDFS (Hadoop Distributed File System) support high-throughput data access essential for analytics and large-scale data processing tasks.

Core Architecture Differences

Distributed File Systems (DFS) utilize multiple networked nodes to store and manage data, enabling horizontal scalability and fault tolerance, whereas Traditional File Systems rely on a single centralized storage device, limiting scalability and increasing vulnerability to hardware failures. DFS architecture employs data replication and partitioning across nodes to ensure high availability and load balancing, while Traditional File Systems manage data through hierarchical directory structures confined to local disk storage. The core architectural difference lies in DFS's decentralized metadata management and parallel data access capabilities, contrasting with the centralized metadata and sequential access methods of Traditional File Systems.

Data Storage and Access Mechanisms

Distributed File Systems (DFS) store data across multiple nodes, enabling parallel access and fault tolerance through data replication, which enhances scalability and availability in Big Data environments. Traditional File Systems (TFS) rely on centralized storage with hierarchical data organization, limiting scalability and often facing bottlenecks during high-demand access scenarios. DFS uses metadata servers to manage distributed data locations, optimizing data retrieval by accessing nodes closest to the request, while TFS depends on direct file access within a single storage device.

Scalability and Performance Comparison

Distributed File Systems (DFS) like HDFS offer superior scalability by enabling storage and processing across numerous nodes, handling petabytes of data efficiently, unlike Traditional File Systems (TFS) that struggle with scale due to single-node limitations. Performance in DFS improves with parallel data access and fault tolerance features, reducing bottlenecks common in TFS, which rely on centralized storage and have slower I/O operations under heavy workloads. Large-scale data environments benefit from DFS's horizontal scaling and distributed architecture, providing faster data retrieval and high throughput compared to the vertical scaling constraints of TFS.

Fault Tolerance and Data Reliability

Distributed File Systems (DFS) achieve higher fault tolerance and data reliability compared to Traditional File Systems by replicating data across multiple nodes, ensuring continuous availability even if some nodes fail. DFS employs mechanisms like automatic data replication and error detection, enabling seamless recovery without data loss, while traditional systems rely on single storage points that pose risks of data corruption and downtime. This redundancy in DFS significantly reduces the risk of data unavailability and enhances resilience in large-scale Big Data environments.

Security Features: Analyzing Both Systems

Distributed File Systems employ robust security protocols such as encryption, access control lists (ACLs), and authentication mechanisms tailored for large-scale data environments, ensuring data integrity and confidentiality across multiple nodes. Traditional File Systems rely primarily on local permissions and simpler security models, which may not effectively address vulnerabilities in distributed or cloud-based architectures. The scalability and dynamic nature of Distributed File Systems necessitate advanced security features like fault tolerance and data replication with secure authentication, enhancing protection against unauthorized access and data breaches.

Cost Implications and Resource Utilization

Distributed file systems significantly reduce costs by leveraging commodity hardware and enabling scalable storage solutions, whereas traditional file systems often require expensive, high-performance servers. Resource utilization in distributed systems is optimized through parallel data processing and load balancing across multiple nodes, leading to improved efficiency and reduced downtime. Traditional file systems typically face bottlenecks due to centralized storage, resulting in underutilized resources and higher maintenance expenses.

Use Cases in Big Data Environments

Distributed File Systems like HDFS are essential in Big Data environments due to their ability to store and process massive datasets across multiple nodes, ensuring fault tolerance and high throughput. Traditional File Systems, while suitable for smaller-scale or single-machine scenarios, lack scalability and redundancy needed for big data analytics and real-time processing. Use cases such as large-scale data warehousing, machine learning model training, and real-time log analysis heavily rely on distributed file systems to manage vast volumes of unstructured data efficiently.

Choosing the Right File System for Your Needs

Distributed File Systems (DFS) excel in handling massive, unstructured Big Data by distributing storage across multiple nodes, enhancing scalability and fault tolerance compared to Traditional File Systems (TFS), which are limited to single-machine storage and lower data volumes. When choosing the right file system, consider your data size, speed, and access requirements; DFS like Hadoop Distributed File System (HDFS) is optimal for large-scale analytics and parallel processing, while TFS suits smaller, localized storage tasks with simpler data management. Evaluate workload complexity, budget constraints, and integration capabilities to align your infrastructure with business objectives and ensure data accessibility and reliability.

Distributed File System vs Traditional File System Infographic

techiny.com

techiny.com