Deduplication eliminates redundant copies of data to optimize storage efficiency, while compression reduces the size of data by encoding information more compactly. In Big Data environments, deduplication is ideal for datasets with many repeated elements, significantly cutting storage needs by storing only unique instances. Compression complements this by shrinking data volume, enabling faster transmission and reduced storage costs without losing original information.

Table of Comparison

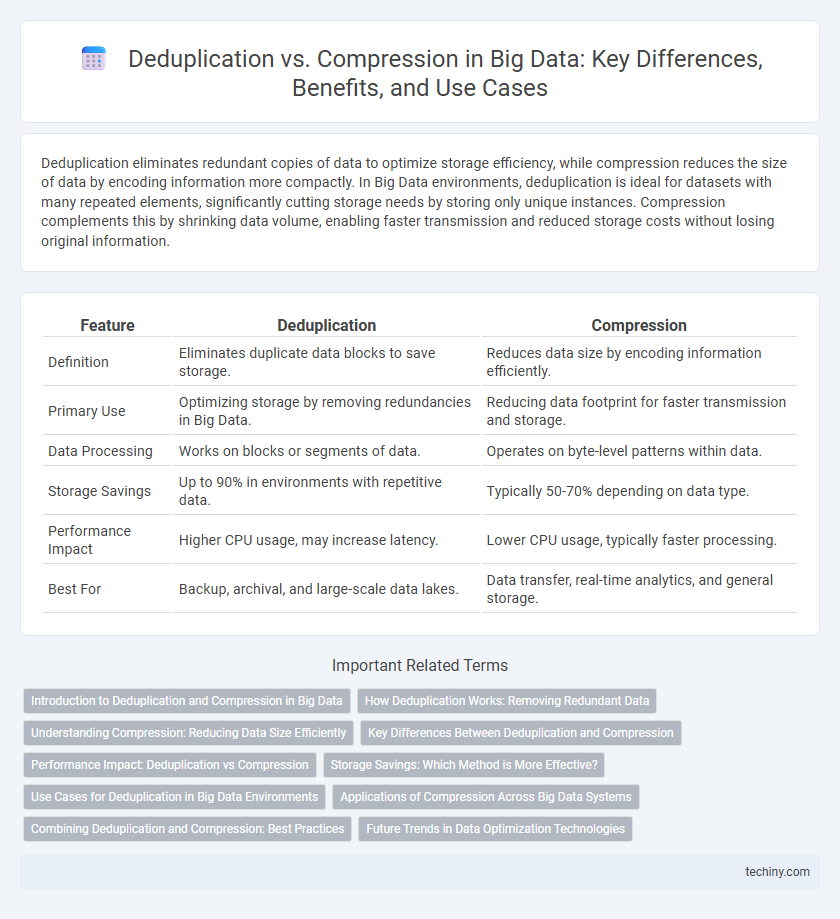

| Feature | Deduplication | Compression |

|---|---|---|

| Definition | Eliminates duplicate data blocks to save storage. | Reduces data size by encoding information efficiently. |

| Primary Use | Optimizing storage by removing redundancies in Big Data. | Reducing data footprint for faster transmission and storage. |

| Data Processing | Works on blocks or segments of data. | Operates on byte-level patterns within data. |

| Storage Savings | Up to 90% in environments with repetitive data. | Typically 50-70% depending on data type. |

| Performance Impact | Higher CPU usage, may increase latency. | Lower CPU usage, typically faster processing. |

| Best For | Backup, archival, and large-scale data lakes. | Data transfer, real-time analytics, and general storage. |

Introduction to Deduplication and Compression in Big Data

Deduplication and compression are critical techniques for optimizing storage efficiency in big data environments by minimizing data redundancy and reducing overall data size. Deduplication eliminates duplicate copies of repeating data segments, significantly decreasing storage needs in massive datasets commonly generated by big data applications. Compression algorithms encode data more compactly, enhancing storage utilization and accelerating data transmission without compromising data integrity.

How Deduplication Works: Removing Redundant Data

Deduplication works by identifying and eliminating duplicate data segments within large datasets, replacing redundant copies with references to a single instance. This process significantly reduces storage requirements by storing only unique data chunks, improving efficiency in big data environments. Unlike compression, which encodes data to reduce size, deduplication targets redundancy at the byte or block level to optimize storage capacity.

Understanding Compression: Reducing Data Size Efficiently

Compression reduces data size by encoding information more efficiently, leveraging algorithms like Huffman coding, Lempel-Ziv-Welch (LZW), and Run-Length Encoding (RLE) to minimize storage requirements. It transforms data into compact representations without losing critical information, enhancing storage capacity and accelerating data transfer across networks. Effective compression techniques significantly improve Big Data management by optimizing resource utilization and reducing latency in processing massive datasets.

Key Differences Between Deduplication and Compression

Deduplication eliminates redundant copies of data by identifying and storing unique data segments only once, significantly reducing storage needs in Big Data environments. Compression encodes data to reduce file size without losing information, optimizing storage efficiency but processing data in smaller units than deduplication. Deduplication is more effective for large datasets with repetitive data patterns, while compression offers broader application across diverse data types for space savings.

Performance Impact: Deduplication vs Compression

Deduplication uniquely identifies and eliminates redundant data segments, significantly reducing storage requirements while demanding substantial indexing overhead that can impact throughput. Compression encodes data more efficiently by reducing file size through algorithms, generally offering faster processing speeds but potentially less storage savings compared to deduplication in highly redundant datasets. Evaluating performance impact depends on workload characteristics, with deduplication favoring backup and archival systems and compression better suited for real-time data processing.

Storage Savings: Which Method is More Effective?

Deduplication typically achieves greater storage savings by identifying and eliminating redundant data blocks across a dataset, resulting in more efficient use of storage space compared to compression, which reduces the size of individual data blocks without addressing redundancy. In large-scale Big Data environments, deduplication can reduce storage requirements by up to 90%, especially in backup and archival systems with high levels of duplicate information. Compression algorithms offer space savings ranging from 2:1 to 5:1, making deduplication the more effective method for maximizing storage efficiency in most Big Data applications.

Use Cases for Deduplication in Big Data Environments

Deduplication in big data environments is essential for optimizing storage by eliminating redundant data copies, particularly in backup and archival systems where vast amounts of duplicated information accumulate. It enables efficient data management in cloud storage platforms, disaster recovery solutions, and distributed file systems, significantly reducing storage costs and improving retrieval times. High-performance computing and analytics workloads benefit from deduplication by minimizing data transfer overhead and accelerating processing speeds.

Applications of Compression Across Big Data Systems

Compression in big data systems optimizes storage and accelerates data processing by reducing file sizes across distributed databases, data lakes, and cloud storage platforms. Techniques like columnar compression in Apache Parquet or ORC formats enhance query performance in frameworks such as Apache Spark and Hadoop by minimizing I/O operations. Effective compression algorithms enable efficient bandwidth usage during data transfer and facilitate real-time analytics on large-scale datasets.

Combining Deduplication and Compression: Best Practices

Combining deduplication and compression effectively maximizes storage efficiency by removing redundant data and then shrinking the remaining dataset, significantly reducing storage costs and improving data transfer speeds. Best practices include applying deduplication first to eliminate duplicate blocks or files, followed by compression algorithms optimized for the unique, deduplicated data to enhance compression ratios. Ensuring compatibility between deduplication and compression technologies and monitoring performance impact are critical for achieving optimal results in big data environments.

Future Trends in Data Optimization Technologies

Future trends in data optimization technologies emphasize advanced deduplication algorithms integrated with AI to identify redundant data patterns more accurately and reduce storage costs. Compression techniques are evolving with machine learning models that adapt to diverse data types, enhancing efficiency beyond traditional methods. Combined innovations in deduplication and compression are expected to enable real-time data optimization, critical for managing exponential growth in Big Data environments.

Deduplication vs Compression Infographic

techiny.com

techiny.com