Load balancing distributes incoming data traffic evenly across multiple servers, enhancing the performance and reliability of Big Data applications. Failover ensures system continuity by automatically redirecting traffic to a standby server when the primary one fails, minimizing downtime. Together, these mechanisms optimize resource utilization and maintain high availability in Big Data environments.

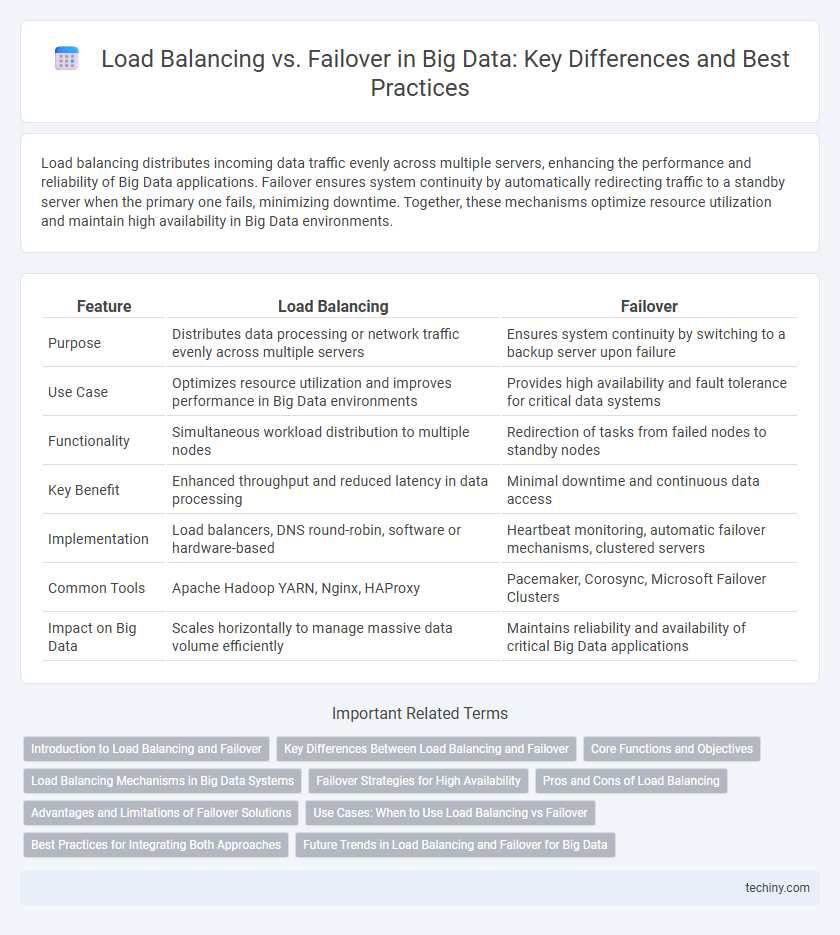

Table of Comparison

| Feature | Load Balancing | Failover |

|---|---|---|

| Purpose | Distributes data processing or network traffic evenly across multiple servers | Ensures system continuity by switching to a backup server upon failure |

| Use Case | Optimizes resource utilization and improves performance in Big Data environments | Provides high availability and fault tolerance for critical data systems |

| Functionality | Simultaneous workload distribution to multiple nodes | Redirection of tasks from failed nodes to standby nodes |

| Key Benefit | Enhanced throughput and reduced latency in data processing | Minimal downtime and continuous data access |

| Implementation | Load balancers, DNS round-robin, software or hardware-based | Heartbeat monitoring, automatic failover mechanisms, clustered servers |

| Common Tools | Apache Hadoop YARN, Nginx, HAProxy | Pacemaker, Corosync, Microsoft Failover Clusters |

| Impact on Big Data | Scales horizontally to manage massive data volume efficiently | Maintains reliability and availability of critical Big Data applications |

Introduction to Load Balancing and Failover

Load balancing distributes incoming data traffic across multiple servers to optimize resource use, maximize throughput, and minimize response time in Big Data environments. Failover ensures system reliability by automatically switching to a backup server or network if the primary one fails, preventing data loss and downtime. Both strategies are critical for maintaining high availability and performance in large-scale data processing systems.

Key Differences Between Load Balancing and Failover

Load balancing distributes incoming data traffic across multiple servers to optimize resource use, maximize throughput, and minimize response time, enhancing overall system performance in big data environments. Failover ensures system reliability by automatically switching to a standby server or system component when the primary one fails, minimizing downtime and preventing data loss. Key differences lie in the objectives: load balancing focuses on efficiency and scalability, while failover prioritizes fault tolerance and continuous availability.

Core Functions and Objectives

Load balancing in Big Data systems distributes incoming data traffic evenly across multiple servers to optimize resource use, maximize throughput, and minimize response time. Failover focuses on system reliability by automatically switching to a standby server or cluster when a primary node fails, ensuring continuous data processing and high availability. Both mechanisms aim to maintain seamless operations, but load balancing prioritizes performance efficiency while failover emphasizes fault tolerance.

Load Balancing Mechanisms in Big Data Systems

Load balancing mechanisms in big data systems distribute incoming data processing tasks evenly across multiple nodes to optimize resource utilization and minimize latency. Techniques such as round-robin, least connections, and consistent hashing enable scalable and efficient handling of large datasets by preventing bottlenecks and ensuring fault tolerance. Effective load balancing improves system throughput, reliability, and responsiveness in distributed big data architectures.

Failover Strategies for High Availability

Failover strategies in big data environments are critical for ensuring high availability by automatically switching to backup systems when primary components fail, minimizing downtime and data loss. Techniques such as active-passive failover, where standby nodes remain ready to take over, and active-active configurations, which allow continuous load distribution even during failures, are widely implemented. Integrating robust failover mechanisms with real-time monitoring and automated recovery processes enhances resilience and maintains seamless data processing in large-scale distributed systems.

Pros and Cons of Load Balancing

Load balancing in big data environments optimizes resource utilization by distributing workloads across multiple servers, enhancing system performance and reducing latency. However, it can introduce complexity in managing stateful applications and may require sophisticated algorithms to prevent uneven traffic distribution. Unlike failover, which primarily ensures high availability during system failures, load balancing focuses on maximizing efficiency but may not provide immediate redundancy in case of server outages.

Advantages and Limitations of Failover Solutions

Failover solutions in Big Data environments ensure high availability by automatically switching to backup systems during failures, minimizing downtime and data loss. These solutions provide robust fault tolerance but can introduce complexity and cost due to the need for redundant infrastructure and synchronization mechanisms. Limitations include potential delays in failover detection and recovery, which may impact real-time data processing performance and consistency.

Use Cases: When to Use Load Balancing vs Failover

Load balancing is ideal for distributing incoming big data workloads evenly across multiple servers to optimize resource utilization and improve processing speed during high-traffic scenarios. Failover is crucial in mission-critical big data systems where continuous availability is required, automatically switching to backup nodes when primary ones fail to minimize downtime. Choosing load balancing suits environments with variable load demands, while failover is essential for maintaining system reliability and disaster recovery.

Best Practices for Integrating Both Approaches

Load balancing in Big Data systems distributes workloads evenly across multiple servers to optimize resource utilization and improve processing speed, while failover ensures system reliability by automatically switching to backup servers during failures. Best practices for integrating both approaches include configuring health checks for real-time server monitoring and implementing dynamic load redistribution to prevent bottlenecks during failover scenarios. Employing resource-aware algorithms and maintaining data consistency through synchronized replication further enhances system resilience and performance.

Future Trends in Load Balancing and Failover for Big Data

Future trends in load balancing and failover for big data emphasize dynamic resource allocation powered by AI-driven algorithms to optimize performance under varying workloads. Advances in predictive analytics enable proactive failover mechanisms, minimizing downtime by anticipating component failures before they occur. Integration of container orchestration tools like Kubernetes enhances scalability and resilience, ensuring seamless data processing in distributed big data environments.

Load Balancing vs Failover Infographic

techiny.com

techiny.com