Real-time analytics processes data as it is generated, enabling immediate insights and quicker decision-making for dynamic environments. Historical analytics examines past data to identify trends and patterns, supporting strategic planning and long-term forecasting. Combining both approaches empowers businesses to respond promptly while leveraging comprehensive data context for informed decisions.

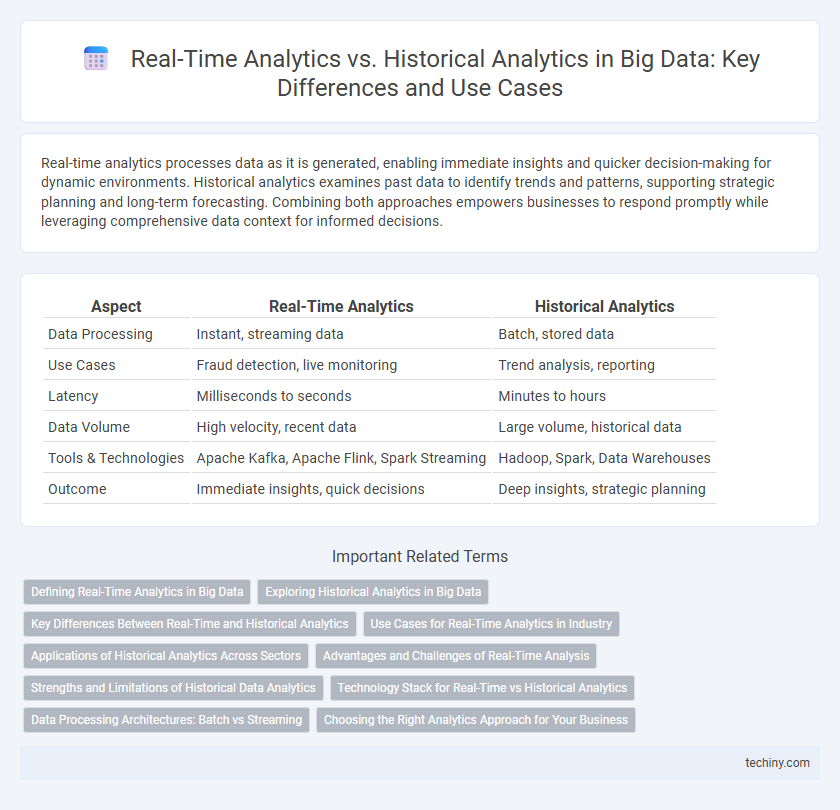

Comparison Table

| Aspect | Real-Time Analytics | Historical Analytics |
|---|---|---|
| Data Processing | Instant, streaming data | Batch, stored data |
| Use Cases | Fraud detection, live monitoring | Trend analysis, reporting |
| Latency | Milliseconds to seconds | Minutes to hours |
| Data Volume | High velocity, recent data | Large volume, historical data |
| Tools & Technologies | Apache Kafka, Apache Flink, Spark Streaming | Hadoop, Spark, data warehouses |
| Outcome | Immediate insights, quick decisions | Deep insights, strategic planning |

Defining Real-Time Analytics in Big Data

Real-time analytics in big data involves processing and analyzing data streams as they are generated, typically within milliseconds to seconds, enabling immediate insights and decision-making. This approach relies on complex event processing, in-memory computing, and optimized data pipelines to handle high-velocity data from sources like IoT devices, social media feeds, and transactional systems. Real-time analytics contrasts with historical analytics by prioritizing analysis of current data over aggregation of past data, which supports dynamic, time-sensitive business operations.
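To make the idea of analyzing a stream as it arrives more concrete, here is a minimal sketch of a tumbling-window average in plain Python. The function name `tumbling_window_averages`, the 5-second window, and the sensor readings are all illustrative assumptions, and the sketch assumes events arrive ordered by timestamp. A production system would normally delegate this to a stream processing engine.

```python
from typing import Iterable, Iterator, Tuple


def tumbling_window_averages(
    events: Iterable[Tuple[float, float]], window_seconds: float
) -> Iterator[Tuple[float, float]]:
    """Yield (window_start, average_value) each time a window closes.

    Assumes events are (timestamp, value) pairs ordered by timestamp.
    Windows that received no events are skipped.
    """
    window_start = None
    total = 0.0
    count = 0
    for timestamp, value in events:
        if window_start is None:
            # Align the first window to a multiple of the window size.
            window_start = timestamp - (timestamp % window_seconds)
        # An event at or past the window end closes the current window.
        while timestamp >= window_start + window_seconds:
            if count:
                yield window_start, total / count
            window_start += window_seconds
            total, count = 0.0, 0
        total += value
        count += 1
    if count:
        # Flush the final, possibly partial, window.
        yield window_start, total / count


# Hypothetical sensor readings: (seconds since start, temperature).
readings = [(0, 20.0), (2, 22.0), (4, 21.0), (5, 30.0), (9, 32.0), (16, 25.0)]
results = list(tumbling_window_averages(readings, window_seconds=5))
print(results)
```

Each result is emitted as soon as its window closes, so a consumer sees the first average without waiting for the rest of the data.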

Exploring Historical Analytics in Big Data

Historical analytics in big data involves analyzing large volumes of past data to identify trends, patterns, and correlations that inform strategic decision-making. Techniques such as batch processing and data warehousing enable in-depth examination of datasets accumulated over time, providing insights for forecasting and long-term planning. Training machine learning models on historical data can improve predictive accuracy and uncover relationships that are useful for business intelligence.
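As a rough illustration of batch-style analysis over stored data, the sketch below aggregates a small set of order records by month and then computes month-over-month change. The `orders` records and both function names are hypothetical; in practice this kind of query would usually run in a data warehouse or a batch framework.

```python
from collections import defaultdict

# Hypothetical stored records: (ISO date, order amount).
orders = [
    ("2023-01-05", 120.0),
    ("2023-01-20", 80.0),
    ("2023-02-11", 150.0),
    ("2023-02-25", 170.0),
    ("2023-03-03", 200.0),
]


def monthly_totals(records):
    """Sum order amounts per calendar month (keyed by 'YYYY-MM')."""
    totals = defaultdict(float)
    for date, amount in records:
        totals[date[:7]] += amount
    return dict(sorted(totals.items()))


def month_over_month_change(totals):
    """Return the relative change of each month versus the previous one."""
    months = list(totals)
    return {
        current: (totals[current] - totals[previous]) / totals[previous]
        for previous, current in zip(months, months[1:])
    }


totals = monthly_totals(orders)
changes = month_over_month_change(totals)
print(totals)
print(changes)
```

The whole dataset is read before any result is produced, which is the defining trait of the batch approach.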

Key Differences Between Real-Time and Historical Analytics

Real-time analytics processes data as it arrives to provide immediate insights and support time-sensitive decision-making, while historical analytics examines past data to identify long-term trends and patterns. The main difference is latency: real-time analytics needs results within milliseconds to seconds, whereas historical analytics operates on batches of data collected over extended periods and tolerates delays of minutes to hours. Real-time analytics relies heavily on streaming technologies such as Apache Kafka and Apache Flink, whereas historical analytics often uses data warehouses and batch processing frameworks such as Hadoop.

Use Cases for Real-Time Analytics in Industry

Real-time analytics enables industries to process and analyze streaming data instantly, supporting applications like fraud detection in finance, predictive maintenance in manufacturing, and dynamic pricing in retail. These use cases rely on low-latency data processing to drive rapid decision-making and enhance operational efficiency. By leveraging technologies such as Apache Kafka and Apache Flink, organizations achieve immediate insights that improve customer experience and reduce downtime.
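The fraud detection use case can be sketched with a simple per-card velocity rule: flag a card that makes too many transactions within a short window. The class `VelocityRule`, the threshold of 3 transactions per 60 seconds, and the sample stream are illustrative assumptions rather than a recommended fraud model. Real systems combine many signals.

```python
from collections import defaultdict, deque


class VelocityRule:
    """Flags a card with more than `max_txns` transactions in `window_seconds`."""

    def __init__(self, max_txns: int, window_seconds: float):
        self.max_txns = max_txns
        self.window_seconds = window_seconds
        self._recent = defaultdict(deque)  # card_id -> recent timestamps

    def is_suspicious(self, card_id: str, timestamp: float) -> bool:
        """Record one transaction and report whether the card exceeds the limit.

        Assumes timestamps for a given card arrive in non-decreasing order.
        """
        recent = self._recent[card_id]
        recent.append(timestamp)
        # Drop timestamps that have fallen out of the window.
        cutoff = timestamp - self.window_seconds
        while recent and recent[0] <= cutoff:
            recent.popleft()
        return len(recent) > self.max_txns


rule = VelocityRule(max_txns=3, window_seconds=60)
# Hypothetical transaction stream: (card id, seconds since start).
stream = [
    ("card-A", 0), ("card-B", 5), ("card-A", 10),
    ("card-A", 20), ("card-A", 30), ("card-A", 100),
]
flags = [rule.is_suspicious(card, ts) for card, ts in stream]
print(flags)
```

Because the decision is made per event with only a small amount of state per card, the check can run inline with the transaction flow.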

Applications of Historical Analytics Across Sectors

Historical analytics leverages past data to uncover trends, patterns, and insights essential for strategic decision-making across sectors such as finance, healthcare, and retail. In finance, historical analytics enables risk assessment and fraud detection by analyzing transaction records over time. Healthcare uses historical patient data to improve treatment protocols and predict disease outbreaks, while retail relies on sales history to optimize inventory management and personalize marketing campaigns.
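For the retail example, one simple way to turn sales history into an inventory decision is a moving-average forecast. The sketch below is a deliberately naive illustration: the weekly figures, the 3-week window, the `on_hand` stock level, and the function name are all assumptions, and real demand forecasting would account for seasonality and trends.

```python
from statistics import mean


def moving_average_forecast(history, window):
    """Forecast the next period as the mean of the last `window` periods."""
    if len(history) < window:
        raise ValueError("not enough history for the requested window")
    return mean(history[-window:])


# Hypothetical weekly unit sales for one product.
weekly_sales = [120, 135, 128, 150, 160, 155]
forecast = moving_average_forecast(weekly_sales, window=3)

# Order enough to cover the forecast, given hypothetical stock on hand.
on_hand = 100
reorder_quantity = max(0, round(forecast) - on_hand)
print(forecast, reorder_quantity)
```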

Advantages and Challenges of Real-Time Analysis

Real-time analytics offers the advantage of immediate data processing, enabling businesses to make decisions within seconds and respond quickly to emerging trends or anomalies. It also brings challenges, including high computational demands, the need for continuous data streaming infrastructure, and the complexity of handling large volumes of rapidly changing information. Despite these hurdles, real-time analysis improves operational efficiency and customer experience by providing up-to-the-second insights that historical analytics cannot deliver.
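Responding to anomalies while keeping resource use bounded can be sketched with a rolling z-score check that stores only the most recent values. The class `RollingAnomalyDetector`, the window of 5 values, the threshold of 3 standard deviations, and the latency figures are hypothetical choices for illustration. Note one simplification: flagged values are still added to the window, so a spike temporarily inflates the baseline.

```python
from collections import deque
from statistics import mean, pstdev


class RollingAnomalyDetector:
    """Flags values far from the mean of the last `window_size` values."""

    def __init__(self, window_size: int, threshold: float):
        self.threshold = threshold
        self._window = deque(maxlen=window_size)  # bounded memory

    def observe(self, value: float) -> bool:
        """Return True if `value` deviates strongly from the recent window."""
        is_anomaly = False
        # Only score once the window is full, to avoid noisy early alerts.
        if len(self._window) == self._window.maxlen:
            mu = mean(self._window)
            sigma = pstdev(self._window)
            if sigma > 0 and abs(value - mu) / sigma > self.threshold:
                is_anomaly = True
        self._window.append(value)
        return is_anomaly


detector = RollingAnomalyDetector(window_size=5, threshold=3.0)
# Hypothetical request latencies in milliseconds.
latencies_ms = [100, 102, 98, 101, 99, 100, 250, 101]
alerts = [detector.observe(v) for v in latencies_ms]
print(alerts)
```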

Strengths and Limitations of Historical Data Analytics

Historical data analytics excels at uncovering long-term trends and patterns by analyzing extensive datasets collected over time, which supports forecasting and strategic decision-making. Its strength lies in providing comprehensive insights that support risk assessment and reveal cyclical behaviors. Its main limitations are processing latency and reliance on data that may already be outdated, which reduce its relevance in fast-paced environments that require immediate action or adaptation to sudden changes.

Technology Stack for Real-Time vs Historical Analytics

Real-time analytics technology stacks typically involve streaming platforms like Apache Kafka or AWS Kinesis, in-memory databases such as Redis, and fast processing engines like Apache Flink or Apache Spark Streaming to handle continuous data flow with minimal latency. Historical analytics stacks rely on data warehouses like Amazon Redshift, Google BigQuery, or Snowflake, combined with ETL tools such as Apache NiFi or Talend, enabling batch processing of large datasets and complex queries over stored data. Both stacks include a storage layer, but they differ fundamentally in architecture and processing speed: one is built for handling live events, the other for aggregate trend analysis.

Data Processing Architectures: Batch vs Streaming

Real-time analytics relies on streaming data processing architectures that handle continuous data flow with low latency, enabling immediate insights and timely decision-making. Historical analytics uses batch processing architectures that aggregate large volumes of data over time, optimizing for throughput and complex queries on past data sets. The choice between batch and streaming architectures impacts data storage, processing speed, and the ability to react to changing business conditions dynamically.
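The architectural contrast can be shown on a single metric. In the sketch below, the batch version reads the complete stored dataset and computes the mean once, while the streaming version updates the mean per event and keeps only constant-size state. The names `batch_mean` and `StreamingMean` and the sample data are illustrative assumptions.

```python
from statistics import mean


def batch_mean(stored_values):
    """Batch: read the complete stored dataset, then compute once."""
    return mean(stored_values)


class StreamingMean:
    """Streaming: update the result per event using O(1) state."""

    def __init__(self):
        self.count = 0
        self.value = 0.0

    def update(self, x: float) -> float:
        """Fold one new observation into the running mean and return it."""
        self.count += 1
        self.value += (x - self.value) / self.count
        return self.value


data = [4.0, 8.0, 6.0, 10.0]

streaming = StreamingMean()
running = [streaming.update(x) for x in data]

print(batch_mean(data))  # one answer, available after all data is stored
print(running)           # an up-to-date answer after every event
```

Both approaches converge on the same final value. The difference is when an answer becomes available and how much data must be held to produce it.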

Choosing the Right Analytics Approach for Your Business

Real-time analytics enables businesses to process and analyze data instantly, providing actionable insights that drive immediate decision-making and operational efficiency. Historical analytics leverages stored data to identify trends, patterns, and long-term insights, essential for strategic planning and predictive modeling. Selecting the right analytics approach depends on the business objectives, data volume, velocity, and the need for instant versus in-depth analysis to optimize decision outcomes.

Real-Time Analytics vs Historical Analytics Infographic

techiny.com
