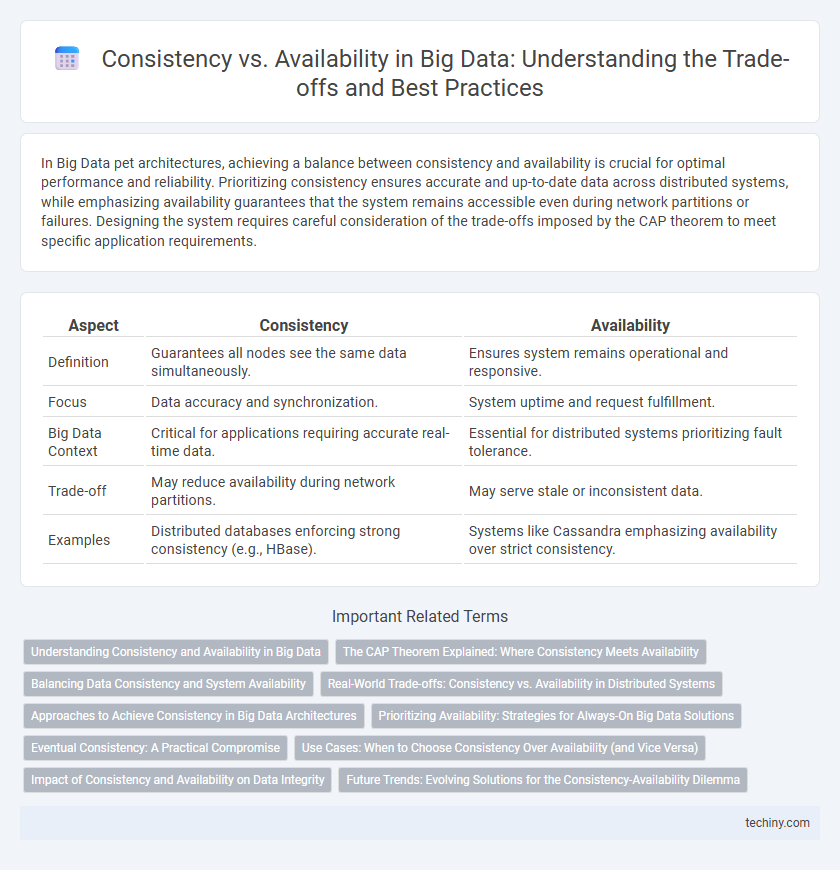

In Big Data pet architectures, achieving a balance between consistency and availability is crucial for optimal performance and reliability. Prioritizing consistency ensures accurate and up-to-date data across distributed systems, while emphasizing availability guarantees that the system remains accessible even during network partitions or failures. Designing the system requires careful consideration of the trade-offs imposed by the CAP theorem to meet specific application requirements.

Table of Comparison

| Aspect | Consistency | Availability |

|---|---|---|

| Definition | Guarantees all nodes see the same data simultaneously. | Ensures system remains operational and responsive. |

| Focus | Data accuracy and synchronization. | System uptime and request fulfillment. |

| Big Data Context | Critical for applications requiring accurate real-time data. | Essential for distributed systems prioritizing fault tolerance. |

| Trade-off | May reduce availability during network partitions. | May serve stale or inconsistent data. |

| Examples | Distributed databases enforcing strong consistency (e.g., HBase). | Systems like Cassandra emphasizing availability over strict consistency. |

Understanding Consistency and Availability in Big Data

Consistency in big data ensures that all nodes reflect the same data at any given time, which is crucial for accurate analytics and decision-making processes. Availability guarantees that the system remains operational and accessible despite node failures, enabling uninterrupted access to critical data. Balancing consistency and availability involves trade-offs influenced by the CAP theorem, where achieving strong consistency often reduces availability and vice versa.

The CAP Theorem Explained: Where Consistency Meets Availability

The CAP Theorem illustrates the trade-offs between consistency, availability, and partition tolerance in distributed big data systems. Ensuring consistency means all nodes reflect the same data state, while availability guarantees uninterrupted system responsiveness despite failures. Balancing these elements is crucial for designing scalable and reliable big data architectures that meet specific application requirements.

Balancing Data Consistency and System Availability

Balancing data consistency and system availability is crucial in Big Data architectures to ensure reliable and timely data processing. Techniques such as eventual consistency models and quorum-based replication optimize system availability while maintaining acceptable levels of data accuracy. Implementing adaptive consistency protocols enables systems to dynamically adjust based on workload demands and failure tolerance requirements.

Real-World Trade-offs: Consistency vs. Availability in Distributed Systems

Real-world distributed systems often face trade-offs between consistency and availability due to the CAP theorem, which states a system cannot simultaneously guarantee consistency, availability, and partition tolerance. Systems like Cassandra prioritize availability and partition tolerance, allowing eventual consistency, while systems like HBase emphasize strong consistency at the cost of availability during network partitions. Understanding these trade-offs guides database designers in selecting appropriate architectures based on application requirements for reliability and real-time data accuracy.

Approaches to Achieve Consistency in Big Data Architectures

Approaches to achieve consistency in Big Data architectures include strong consistency models, which ensure immediate data synchronization across distributed nodes using protocols like Paxos or Raft. Eventual consistency models rely on asynchronous replication to improve availability but require conflict resolution mechanisms such as vector clocks or versioning to maintain data integrity. Hybrid approaches combine both strategies by offering tunable consistency settings, enabling systems like Cassandra and DynamoDB to balance latency, availability, and consistency based on application requirements.

Prioritizing Availability: Strategies for Always-On Big Data Solutions

Prioritizing availability in Big Data solutions involves designing systems that ensure continuous data access and real-time processing despite network partitions or node failures. Techniques such as data replication, eventual consistency models, and distributed caching optimize system uptime and responsiveness for mission-critical applications. These strategies enable scalable, fault-tolerant architectures that maintain high availability without significantly compromising data consistency.

Eventual Consistency: A Practical Compromise

Eventual consistency offers a practical compromise in distributed big data systems, ensuring that all replicas converge to the same state over time despite temporary inconsistencies. This model enhances system availability by allowing reads and writes to proceed without immediate synchronization, crucial for handling massive data volumes across distributed nodes. By prioritizing availability and partition tolerance, eventual consistency supports scalable architectures like NoSQL databases and stream processing platforms.

Use Cases: When to Choose Consistency Over Availability (and Vice Versa)

In Big Data systems, choose consistency over availability when applications demand accurate, up-to-date data, such as financial transactions or inventory management, where stale data can cause critical errors. Availability is prioritized in use cases like social media feeds or content delivery networks, where system uptime and responsiveness matter more than immediate consistency. Understanding the CAP theorem helps architects balance these trade-offs to meet specific business requirements effectively.

Impact of Consistency and Availability on Data Integrity

Consistency ensures that all nodes reflect the same data state simultaneously, directly safeguarding data integrity by preventing discrepancies. High availability prioritizes system uptime, which can lead to temporary inconsistencies during network partitions, potentially compromising data accuracy. Balancing consistency and availability is crucial for maintaining reliable data integrity in distributed Big Data systems.

Future Trends: Evolving Solutions for the Consistency-Availability Dilemma

Future trends in Big Data emphasize evolving solutions that balance consistency and availability, leveraging advancements such as distributed ledger technologies and edge computing architectures. Innovations in consensus algorithms and adaptive replication methods aim to minimize latency while ensuring data integrity across globally distributed systems. Emerging AI-driven optimization models predict workload patterns to dynamically adjust consistency levels, addressing the ongoing trade-offs in large-scale data management.

Consistency vs Availability Infographic

techiny.com

techiny.com