MapReduce processes big data using a two-stage model that involves mapping and reducing tasks, which is effective for batch processing but can be inefficient for complex workflows. DAG (Directed Acyclic Graph) execution frameworks, such as Apache Spark, optimize task execution by representing computations as a graph of stages, enabling better resource utilization and faster processing. DAG execution supports iterative algorithms and in-memory processing, making it more suitable for big data applications requiring real-time insights and complex transformations.

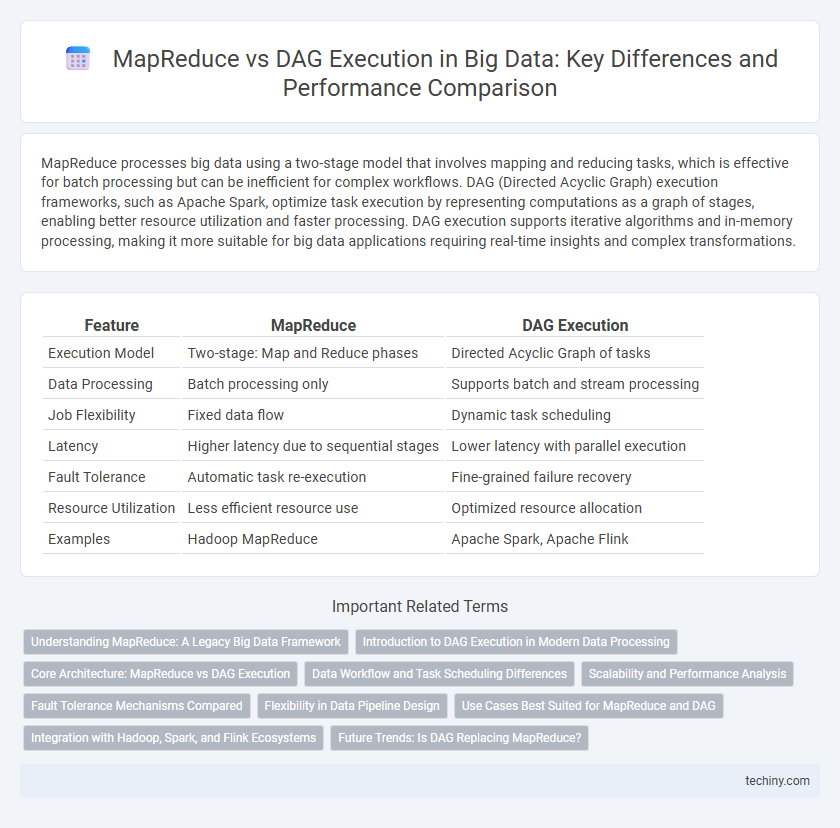

Table of Comparison

| Feature | MapReduce | DAG Execution |

|---|---|---|

| Execution Model | Two-stage: Map and Reduce phases | Directed Acyclic Graph of tasks |

| Data Processing | Batch processing only | Supports batch and stream processing |

| Job Flexibility | Fixed data flow | Dynamic task scheduling |

| Latency | Higher latency due to sequential stages | Lower latency with parallel execution |

| Fault Tolerance | Automatic task re-execution | Fine-grained failure recovery |

| Resource Utilization | Less efficient resource use | Optimized resource allocation |

| Examples | Hadoop MapReduce | Apache Spark, Apache Flink |

Understanding MapReduce: A Legacy Big Data Framework

MapReduce is a foundational big data processing framework that simplifies large-scale data analysis by dividing tasks into map and reduce phases, enabling parallel computation across distributed systems. Its rigid two-stage workflow limits optimization opportunities compared to DAG (Directed Acyclic Graph) execution engines, which allow more flexible and efficient task scheduling. Despite its legacy status, MapReduce remains significant for batch processing in Hadoop ecosystems, though modern big data applications often prefer DAG-based frameworks like Apache Spark for enhanced performance.

Introduction to DAG Execution in Modern Data Processing

DAG execution enables efficient data processing by representing tasks as a Directed Acyclic Graph, allowing parallel execution of dependent operations. Unlike MapReduce's rigid two-phase model, DAG frameworks such as Apache Spark optimize task scheduling and fault tolerance, reducing overhead and latency. This approach supports complex workflows with iterative and interactive computations, enhancing scalability in big data environments.

Core Architecture: MapReduce vs DAG Execution

MapReduce architecture processes data through two distinct phases--Map and Reduce--facilitating batch processing with rigid data flow and limited task parallelism. In contrast, DAG (Directed Acyclic Graph) execution employs a flexible graph-based model allowing complex workflows with multiple stages and optimized task dependencies. DAG engines enhance resource utilization and fault tolerance by dynamically scheduling tasks based on data lineage and execution status.

Data Workflow and Task Scheduling Differences

MapReduce processes data through distinct map and reduce phases with rigid synchronization points, limiting flexibility in complex data workflows, while DAG (Directed Acyclic Graph) execution models enable dynamic task scheduling by representing tasks as nodes with dependencies, allowing parallelism and optimized resource utilization. In MapReduce, intermediate data is written to disk between phases, causing higher I/O overhead, whereas DAG-based systems like Apache Spark keep data in-memory, significantly improving iterative and streaming task performance. The DAG execution's fine-grained scheduling adapts to varying workloads efficiently, contrasting with the batch-oriented synchronization in MapReduce that can lead to latency in data processing pipelines.

Scalability and Performance Analysis

MapReduce offers robust scalability through its parallel processing of large-scale data across distributed clusters but often suffers from high latency due to its rigid two-stage execution model. DAG execution frameworks, such as Apache Spark, optimize performance by enabling complex, multi-stage task graphs that reduce disk I/O and improve resource utilization, leading to faster data processing speeds. Scalability in DAG execution is enhanced by in-memory computation and dynamic scheduling, providing superior performance for iterative and real-time big data workloads compared to traditional MapReduce.

Fault Tolerance Mechanisms Compared

MapReduce employs a straightforward fault tolerance mechanism by re-executing failed map or reduce tasks, ensuring data consistency through deterministic outputs and checkpointing intermediate results on disk. Directed Acyclic Graph (DAG) execution frameworks like Apache Spark use lineage graphs to recompute only the lost partitions of data, enabling more efficient fault recovery without writing all intermediate data to disk. This fine-grained recovery combined with in-memory data persistence significantly reduces recovery time and improves overall fault tolerance in big data processing.

Flexibility in Data Pipeline Design

MapReduce enforces a rigid, two-stage processing model that limits pipeline flexibility by requiring data to pass sequentially through map and reduce tasks, often resulting in higher latency for complex workflows. Directed Acyclic Graph (DAG) execution frameworks, such as Apache Spark, enable more flexible data pipeline design by allowing multiple stages of transformations and actions to be expressed as a series of directed nodes, optimizing task scheduling and reducing unnecessary data shuffling. This flexibility in DAG-based systems leads to more efficient processing of iterative algorithms and real-time data streams, enhancing overall big data workflow performance.

Use Cases Best Suited for MapReduce and DAG

MapReduce excels in batch processing large-scale data sets, making it ideal for tasks like log analysis, indexing, and ETL (Extract, Transform, Load) pipelines where data transformation follows a linear flow. DAG execution frameworks such as Apache Spark are better suited for complex iterative algorithms and real-time data processing workflows, including machine learning, graph processing, and interactive analytics, where the data passes through multiple interconnected stages. Choosing MapReduce or DAG execution optimizes performance based on workload characteristics like task dependencies, iterative computation needs, and latency requirements.

Integration with Hadoop, Spark, and Flink Ecosystems

MapReduce integrates natively with the Hadoop ecosystem, leveraging HDFS for distributed storage and batch processing through its two-phase map and reduce tasks, optimized for fault tolerance and scalability. Apache Spark utilizes a Directed Acyclic Graph (DAG) execution engine that allows in-memory cluster computing, providing faster iterative processing and seamless integration with Hadoop's HDFS and YARN resource manager. Apache Flink's DAG execution model supports both batch and real-time stream processing with native connectors to Hadoop, HDFS, and YARN, enabling low-latency analytics and stateful computations across distributed data streams.

Future Trends: Is DAG Replacing MapReduce?

DAG (Directed Acyclic Graph) execution frameworks like Apache Spark and Apache Flink offer significant performance improvements over traditional MapReduce by enabling in-memory processing and optimized task scheduling. The shift towards DAG-based systems reflects the growing demand for low-latency, iterative, and complex data processing workflows in big data analytics. Future trends indicate that DAG execution is gradually replacing MapReduce due to its scalability, flexibility, and support for real-time streaming data applications.

MapReduce vs DAG Execution Infographic

techiny.com

techiny.com