MapReduce processes large data sets by dividing tasks into map and reduce functions, optimizing batch processing for scalability and fault tolerance. Dataflow offers a more flexible, unified programming model supporting both batch and stream processing with real-time data analysis capabilities. The choice between MapReduce and Dataflow depends on latency requirements, workload types, and the need for event-driven processing.

Table of Comparison

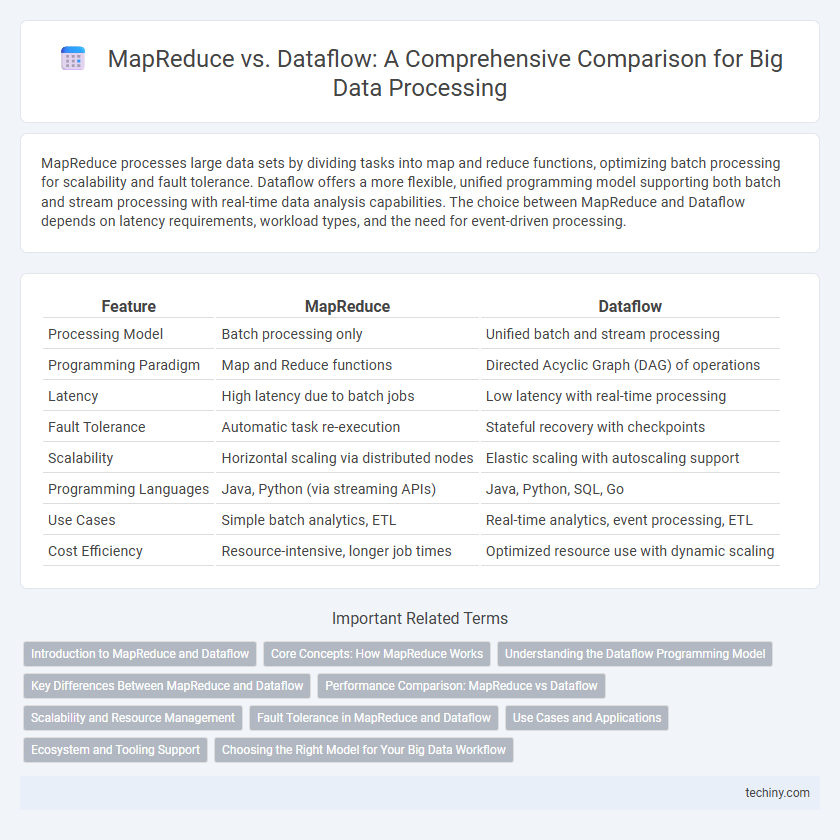

| Feature | MapReduce | Dataflow |

|---|---|---|

| Processing Model | Batch processing only | Unified batch and stream processing |

| Programming Paradigm | Map and Reduce functions | Directed Acyclic Graph (DAG) of operations |

| Latency | High latency due to batch jobs | Low latency with real-time processing |

| Fault Tolerance | Automatic task re-execution | Stateful recovery with checkpoints |

| Scalability | Horizontal scaling via distributed nodes | Elastic scaling with autoscaling support |

| Programming Languages | Java, Python (via streaming APIs) | Java, Python, SQL, Go |

| Use Cases | Simple batch analytics, ETL | Real-time analytics, event processing, ETL |

| Cost Efficiency | Resource-intensive, longer job times | Optimized resource use with dynamic scaling |

Introduction to MapReduce and Dataflow

MapReduce is a programming model designed for processing large-scale datasets with a distributed algorithm, emphasizing parallel computation through 'map' and 'reduce' functions that transform and aggregate data efficiently across clusters. Dataflow represents an advanced, flexible programming model that supports both batch and stream processing by defining data pipelines as directed graphs, allowing dynamic optimization and fault tolerance. Both frameworks are foundational for big data analytics but differ in execution paradigms, with MapReduce suited for batch processing and Dataflow enabling unified stream and batch applications.

Core Concepts: How MapReduce Works

MapReduce operates by dividing large data sets into smaller chunks processed in parallel through a map function that extracts key-value pairs. These pairs undergo a shuffle and sort phase, grouping data by keys before the reduce function aggregates or summarizes the results. This core concept enables efficient distributed processing of massive volumes of data across clusters, optimizing performance and fault tolerance in big data environments.

Understanding the Dataflow Programming Model

The Dataflow programming model enables parallel processing by representing computations as directed acyclic graphs where nodes denote operations and edges represent data dependencies, offering finer-grained control over execution compared to MapReduce's rigid map and reduce phases. Unlike MapReduce's batch-oriented processing, Dataflow supports both batch and stream processing, allowing it to handle real-time analytics and dynamic workloads more efficiently. Dataflow frameworks optimize resource utilization and fault tolerance through pipeline parallelism and incremental processing, making them ideal for complex Big Data workflows in distributed environments.

Key Differences Between MapReduce and Dataflow

MapReduce processes data using fixed stages of map and reduce tasks that operate on key-value pairs, emphasizing batch processing and fault tolerance through data replication. Dataflow supports a more flexible, unified programming model that handles both batch and stream processing with advanced windowing, triggering, and event-time processing capabilities. Unlike MapReduce's rigid data flow, Dataflow enables dynamic data pipelines with seamless integration of parallelism optimization and stateful processing for real-time analytics.

Performance Comparison: MapReduce vs Dataflow

MapReduce processes large datasets through rigid batch-oriented stages, resulting in higher latency and less efficient resource utilization compared to Dataflow's optimized, dynamic execution model. Dataflow leverages parallelism and incremental processing, significantly enhancing processing speed and scalability for real-time analytics. Performance benchmarks consistently show Dataflow reducing job completion times by up to 70% over traditional MapReduce implementations, especially in streaming and iterative tasks.

Scalability and Resource Management

MapReduce offers high scalability by distributing tasks across numerous nodes but often faces resource inefficiencies due to its rigid batching process. Dataflow enhances resource management through dynamic work rebalancing and real-time processing, enabling more elastic scaling in cloud environments. This flexibility allows Dataflow to optimize resource allocation and handle varying workloads more efficiently than traditional MapReduce frameworks.

Fault Tolerance in MapReduce and Dataflow

MapReduce achieves fault tolerance by dividing tasks into small chunks called map and reduce tasks, with automatic re-execution of failed tasks on other nodes to ensure data processing completion. Dataflow employs checkpointing and state management with automatic retries, enabling consistent state restoration after failures and minimizing data loss. Both frameworks emphasize fault tolerance but Dataflow's sophisticated state handling supports more complex stream processing scenarios.

Use Cases and Applications

MapReduce excels in batch processing scenarios such as large-scale log analysis, indexing, and offline data transformations, where fault tolerance and straightforward parallelism are crucial. Dataflow supports both batch and streaming pipelines, making it ideal for real-time analytics, event detection, and complex ETL workflows with dynamic windowing and stateful processing. Enterprises leverage MapReduce for heavy, resource-intensive tasks on historical data, while Dataflow is preferred for continuous data ingestion and low-latency processing across various cloud-native environments.

Ecosystem and Tooling Support

MapReduce, pioneered by Google, has a mature ecosystem with robust tooling support including Hadoop's distributed file system and YARN resource management, widely adopted in batch processing scenarios. Dataflow, Google's unified programming model, offers advanced streaming and batch processing with seamless integration into the Apache Beam ecosystem, enabling portability across various runners and cloud platforms. The Dataflow ecosystem emphasizes real-time data processing and sophisticated windowing, while MapReduce's strength lies in its simplicity and extensive developer community tools.

Choosing the Right Model for Your Big Data Workflow

Choosing the right model for your Big Data workflow depends on the specific processing needs and scalability requirements. MapReduce excels in batch processing with its straightforward key-value pair paradigm, making it ideal for large-scale, disk-based computations. Dataflow offers a unified programming model for both stream and batch processing, providing greater flexibility and real-time analytics capabilities suited for complex data pipelines.

MapReduce vs Dataflow Infographic

techiny.com

techiny.com