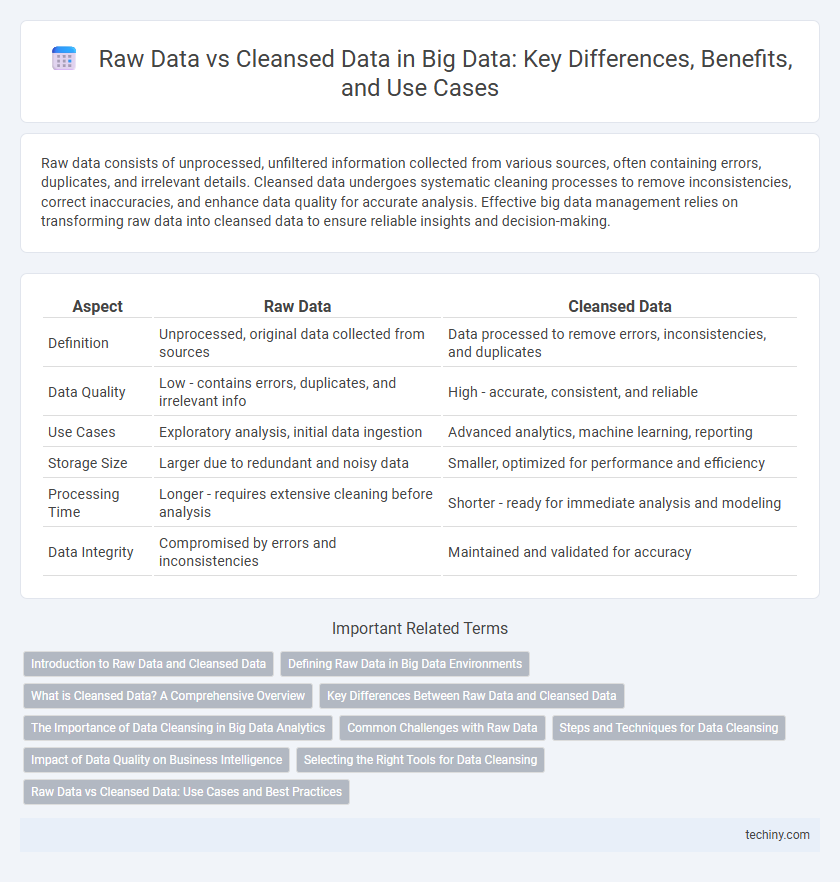

Raw data consists of unprocessed, unfiltered information collected from various sources, often containing errors, duplicates, and irrelevant details. Cleansed data undergoes systematic cleaning processes to remove inconsistencies, correct inaccuracies, and enhance data quality for accurate analysis. Effective big data management relies on transforming raw data into cleansed data to ensure reliable insights and decision-making.

Table of Comparison

| Aspect | Raw Data | Cleansed Data |

|---|---|---|

| Definition | Unprocessed, original data collected from sources | Data processed to remove errors, inconsistencies, and duplicates |

| Data Quality | Low - contains errors, duplicates, and irrelevant info | High - accurate, consistent, and reliable |

| Use Cases | Exploratory analysis, initial data ingestion | Advanced analytics, machine learning, reporting |

| Storage Size | Larger due to redundant and noisy data | Smaller, optimized for performance and efficiency |

| Processing Time | Longer - requires extensive cleaning before analysis | Shorter - ready for immediate analysis and modeling |

| Data Integrity | Compromised by errors and inconsistencies | Maintained and validated for accuracy |

Introduction to Raw Data and Cleansed Data

Raw data consists of unprocessed, original datasets collected from various sources, often containing errors, duplicates, or inconsistencies. Cleansed data is the refined version of raw data after undergoing processes such as error correction, normalization, and removal of irrelevant information to ensure accuracy and reliability. Proper distinction between raw and cleansed data is crucial for effective big data analytics and decision-making.

Defining Raw Data in Big Data Environments

Raw data in big data environments refers to unprocessed, original datasets collected from various sources like sensors, social media, and transactional systems, often containing errors, inconsistencies, and irrelevant information. This raw data is characterized by its volume, velocity, and variety, posing challenges for storage and analysis due to its unstructured or semi-structured formats. Defining raw data accurately is essential for implementing effective data cleansing processes that enhance data quality and enable reliable analytics.

What is Cleansed Data? A Comprehensive Overview

Cleansed data refers to information that has been processed to remove errors, inconsistencies, duplicates, and inaccuracies, ensuring higher quality and reliability for analysis. This process involves validation, standardization, and enrichment techniques that transform raw data into a structured, accurate dataset. Optimizing data quality through cleansing enhances decision-making, predictive analytics, and operational efficiency within big data ecosystems.

Key Differences Between Raw Data and Cleansed Data

Raw data consists of unprocessed, unfiltered information collected from various sources, often containing errors, duplicates, and inconsistencies. Cleansed data has undergone transformation to remove inaccuracies, standardize formats, and fill in missing values, enhancing its quality for accurate analysis. Key differences include the level of noise, data integrity, and usability, with cleansed data being more reliable and suitable for decision-making processes in big data analytics.

The Importance of Data Cleansing in Big Data Analytics

Data cleansing is crucial in Big Data analytics as it transforms raw data, which often contains errors, duplicates, or inconsistencies, into accurate and reliable datasets. Cleaned data enhances the quality of insights, improving decision-making and predictive analytics accuracy. Effective data cleansing processes optimize storage, reduce processing time, and increase overall efficiency in Big Data environments.

Common Challenges with Raw Data

Raw data often contains inconsistencies, duplicates, and errors that complicate analysis and reduce accuracy in Big Data processing. Incomplete or missing values in raw datasets lead to biased models and unreliable insights, requiring extensive data cleansing. Handling diverse data formats and noise from multiple sources further challenges the reliability and usability of raw data.

Steps and Techniques for Data Cleansing

Data cleansing in big data involves steps such as data profiling to identify inaccuracies, data normalization to standardize formats, and deduplication to remove redundant records. Techniques include outlier detection using statistical methods, imputation to handle missing values, and validation against predefined rules or reference datasets. Effective cleansing improves data quality, ensuring reliable analytics and decision-making processes.

Impact of Data Quality on Business Intelligence

Raw data often contains errors, duplicates, and inconsistencies that can significantly reduce the accuracy of business intelligence insights. Cleansed data enhances data quality by removing inaccuracies and standardizing formats, leading to more reliable analytics and better decision-making. High-quality data directly improves predictive modeling, customer segmentation, and operational efficiency across business intelligence platforms.

Selecting the Right Tools for Data Cleansing

Selecting the right tools for data cleansing is crucial to transforming raw data into high-quality, cleansed data suitable for Big Data analytics. Effective tools like Apache NiFi, Talend, and Trifacta automate error detection, data normalization, and duplication removal, ensuring accuracy and consistency. Leveraging machine learning algorithms within these tools enhances the identification of anomalies and missing values, optimizing the data preparation process for more reliable insights.

Raw Data vs Cleansed Data: Use Cases and Best Practices

Raw data serves as the foundational input in big data analytics, capturing unprocessed information directly from sources such as sensors, logs, and transactions, enabling comprehensive analysis and discovery of hidden patterns. Cleansed data, refined through processes like deduplication, normalization, and error correction, enhances accuracy and reliability, supporting critical applications such as predictive modeling, decision-making, and real-time reporting. Best practices emphasize maintaining data lineage, implementing automated cleaning pipelines, and balancing data completeness with quality to optimize use case outcomes across industries like healthcare, finance, and retail.

Raw Data vs Cleansed Data Infographic

techiny.com

techiny.com