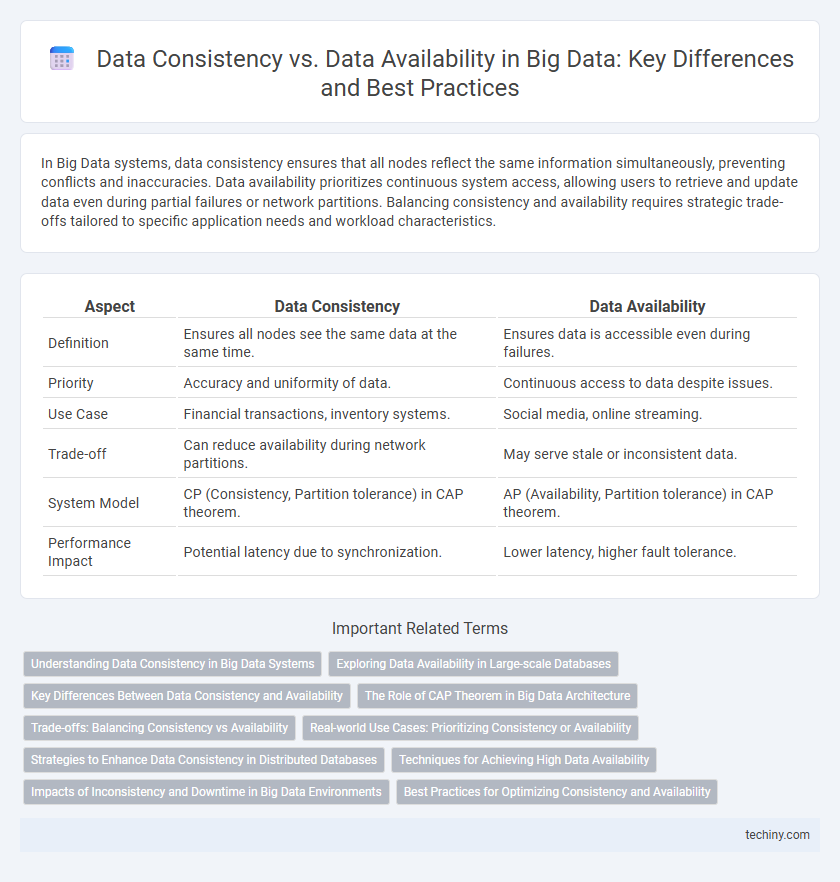

In Big Data systems, data consistency ensures that all nodes reflect the same information simultaneously, preventing conflicts and inaccuracies. Data availability prioritizes continuous system access, allowing users to retrieve and update data even during partial failures or network partitions. Balancing consistency and availability requires strategic trade-offs tailored to specific application needs and workload characteristics.

Table of Comparison

| Aspect | Data Consistency | Data Availability |

|---|---|---|

| Definition | Ensures all nodes see the same data at the same time. | Ensures data is accessible even during failures. |

| Priority | Accuracy and uniformity of data. | Continuous access to data despite issues. |

| Use Case | Financial transactions, inventory systems. | Social media, online streaming. |

| Trade-off | Can reduce availability during network partitions. | May serve stale or inconsistent data. |

| System Model | CP (Consistency, Partition tolerance) in CAP theorem. | AP (Availability, Partition tolerance) in CAP theorem. |

| Performance Impact | Potential latency due to synchronization. | Lower latency, higher fault tolerance. |

Understanding Data Consistency in Big Data Systems

Data consistency in Big Data systems ensures that all nodes reflect the same data values at any given time, preventing anomalies during simultaneous updates. Achieving strong consistency often requires coordination and synchronization mechanisms like consensus protocols, which can introduce latency and reduce system availability. Understanding the trade-offs between strict consistency models, such as linearizability, and eventual consistency helps in designing scalable Big Data architectures tailored to specific application requirements.

Exploring Data Availability in Large-scale Databases

Data availability in large-scale databases ensures that information remains accessible even during system failures or network partitions, critical for real-time analytics and continuous operations. Techniques such as data replication and distributed consensus algorithms enhance availability by minimizing downtime and avoiding single points of failure. Balancing high availability with eventual consistency models supports scalable querying and fault tolerance in big data environments.

Key Differences Between Data Consistency and Availability

Data consistency ensures that all users see the same data at the same time, maintaining accuracy and reliability across distributed systems. Data availability prioritizes system uptime and access to data, even if some data segments are temporarily outdated or inconsistent. The key difference lies in choosing between immediate uniformity of data (consistency) and uninterrupted access to data (availability) within distributed databases.

The Role of CAP Theorem in Big Data Architecture

Data consistency and data availability are critical dimensions addressed by the CAP Theorem, which states that in distributed Big Data systems, it is impossible to simultaneously guarantee consistency, availability, and partition tolerance. Big Data architectures often prioritize partition tolerance due to network failures, forcing a trade-off between consistency and availability based on application requirements. Understanding the CAP Theorem guides architects in designing systems that balance these factors to optimize performance and reliability in large-scale data processing environments.

Trade-offs: Balancing Consistency vs Availability

Balancing data consistency and availability in big data systems involves critical trade-offs governed by the CAP theorem, which states that a distributed database can only guarantee two out of three: Consistency, Availability, and Partition tolerance. Prioritizing consistency ensures that all nodes reflect the same data at any given time, crucial for applications requiring reliable transactional integrity like financial services, while favoring availability supports uninterrupted access and fault tolerance, essential for real-time analytics and high-traffic web services. Effective big data architecture often employs configurable consistency models, such as eventual consistency, to optimize performance, scalability, and user experience based on specific application requirements and workload characteristics.

Real-world Use Cases: Prioritizing Consistency or Availability

In financial services, data consistency is prioritized to ensure accurate transaction records and maintain regulatory compliance, where even minor discrepancies can lead to serious errors or fraud. In contrast, social media platforms often emphasize data availability to guarantee seamless user experiences and real-time updates, accepting eventual consistency to handle massive volumes of user-generated content. E-commerce systems balance both by ensuring consistent inventory data during checkout while maintaining high availability to support continuous user interactions and prevent lost sales.

Strategies to Enhance Data Consistency in Distributed Databases

Ensuring data consistency in distributed databases involves implementing techniques such as strong consistency models, quorum-based replication, and consensus algorithms like Paxos or Raft to synchronize updates across nodes. Leveraging distributed transaction protocols such as two-phase commit (2PC) or three-phase commit (3PC) can help maintain atomicity and consistency during concurrent operations. Data partitioning strategies combined with conflict-free replicated data types (CRDTs) optimize consistency without compromising availability in large-scale distributed systems.

Techniques for Achieving High Data Availability

Techniques for achieving high data availability in big data systems include replication, where data is copied across multiple nodes to prevent data loss and ensure continuous access. Employing distributed consensus algorithms like Paxos or Raft helps maintain consistency while enabling fault tolerance among replicated data stores. Utilizing partitioning strategies combined with failure detection mechanisms further enhances system resilience by isolating faults and rerouting requests to operational nodes swiftly.

Impacts of Inconsistency and Downtime in Big Data Environments

Data inconsistency in Big Data environments leads to unreliable analytics and decision-making errors, significantly impacting business outcomes. Downtime reduces system availability, causing delays in real-time data processing and loss of critical insights. Balancing data consistency and availability is crucial to maintain operational efficiency and trust in Big Data applications.

Best Practices for Optimizing Consistency and Availability

Implementing strong consistency models such as distributed consensus algorithms like Paxos or Raft ensures data integrity across nodes but may impact availability during network partitions. Employing eventual consistency with conflict resolution strategies like vector clocks or CRDTs can enhance availability while maintaining acceptable consistency levels in distributed systems. Balancing trade-offs through hybrid approaches and monitoring latency metrics optimizes both data consistency and system availability in big data architectures.

Data Consistency vs Data Availability Infographic

techiny.com

techiny.com