In-memory computing offers significantly faster data processing speeds by storing data directly in RAM, enabling real-time analytics and immediate insights for Big Data applications. Disk-based computing relies on slower read-write operations from hard drives or SSDs, resulting in higher latency and less efficient handling of massive datasets. Choosing in-memory solutions enhances performance and scalability, making it ideal for time-sensitive Big Data pet projects requiring rapid data access and processing.

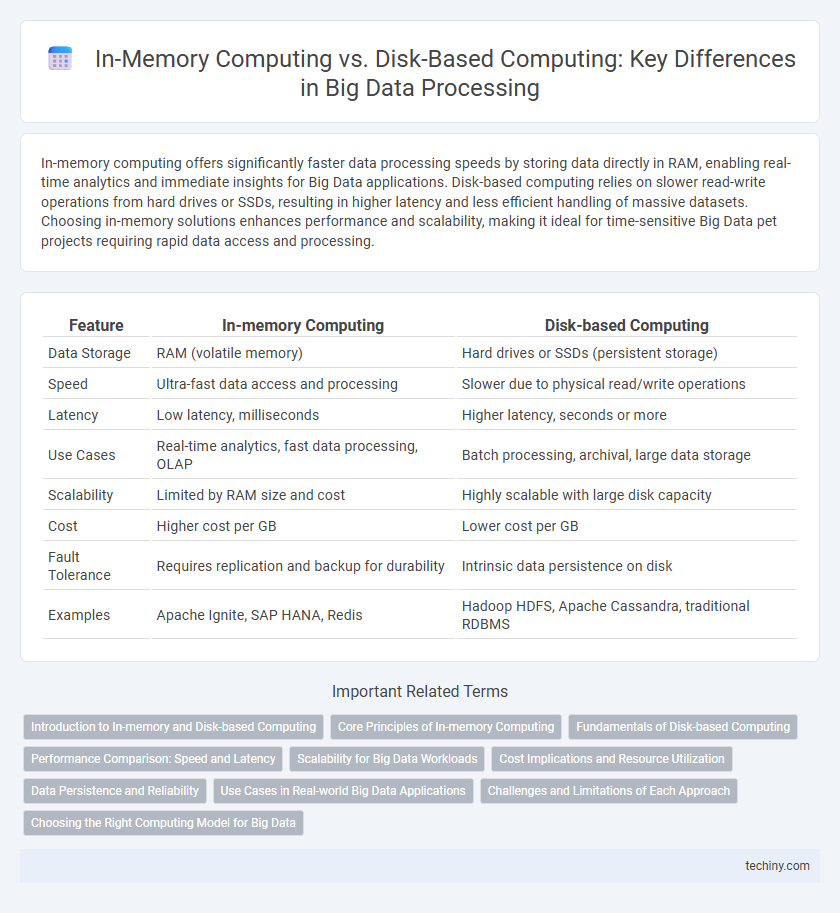

Table of Comparison

| Feature | In-memory Computing | Disk-based Computing |

|---|---|---|

| Data Storage | RAM (volatile memory) | Hard drives or SSDs (persistent storage) |

| Speed | Ultra-fast data access and processing | Slower due to physical read/write operations |

| Latency | Low latency, milliseconds | Higher latency, seconds or more |

| Use Cases | Real-time analytics, fast data processing, OLAP | Batch processing, archival, large data storage |

| Scalability | Limited by RAM size and cost | Highly scalable with large disk capacity |

| Cost | Higher cost per GB | Lower cost per GB |

| Fault Tolerance | Requires replication and backup for durability | Intrinsic data persistence on disk |

| Examples | Apache Ignite, SAP HANA, Redis | Hadoop HDFS, Apache Cassandra, traditional RDBMS |

Introduction to In-memory and Disk-based Computing

In-memory computing stores data directly in the RAM, enabling ultra-fast data processing and real-time analytics, which is critical for Big Data applications requiring low latency. Disk-based computing relies on traditional hard drives or SSDs to store data, often resulting in slower access times due to physical read/write constraints. The choice between in-memory and disk-based computing significantly impacts the performance, scalability, and cost efficiency of Big Data processing systems.

Core Principles of In-memory Computing

In-memory computing stores data directly in the system's RAM, enabling rapid data processing and real-time analytics by minimizing latency associated with disk I/O operations. This approach leverages distributed memory architecture to ensure scalability and fault tolerance, while utilizing parallel processing for simultaneous data access and computation. Core principles include data locality, computational speed, and efficient memory management to handle large datasets effectively compared to traditional disk-based systems.

Fundamentals of Disk-based Computing

Disk-based computing relies on persistent storage devices such as hard disk drives (HDDs) or solid-state drives (SSDs) to read and write data, enabling large-scale data management at lower costs compared to in-memory systems. It handles extensive datasets by sequentially accessing data from slower storage tiers, leveraging techniques like caching and indexing to optimize performance despite higher latency. This fundamental approach supports big data applications requiring durability and fault tolerance but faces constraints in processing speed due to physical read/write limitations of disk storage.

Performance Comparison: Speed and Latency

In-memory computing significantly outperforms disk-based computing in Big Data processing by reducing latency to microseconds and enabling real-time analytics through data storage within RAM. Disk-based computing relies on slower I/O operations with latencies often in milliseconds, which limits throughput and delays data retrieval and processing. This speed advantage makes in-memory systems ideal for applications requiring instantaneous data access and high transaction volumes.

Scalability for Big Data Workloads

In-memory computing provides superior scalability for big data workloads by enabling rapid data access and processing directly within RAM, significantly reducing latency compared to disk-based computing. Disk-based systems face bottlenecks due to slower read/write speeds and I/O constraints, limiting their ability to efficiently scale with increasing data volumes. Technologies such as Apache Spark leverage in-memory computing to optimize parallel processing and resource utilization, facilitating enhanced scalability in big data environments.

Cost Implications and Resource Utilization

In-memory computing significantly reduces data access latency by storing data in RAM, resulting in faster processing speeds compared to traditional disk-based computing, which relies on slower storage devices like HDDs or SSDs. Although in-memory systems require higher upfront investment in RAM and specialized hardware, they optimize resource utilization by minimizing I/O bottlenecks and reducing CPU idle times, potentially lowering operational costs over time. Disk-based computing incurs lower initial hardware costs but demands more maintenance and energy usage due to higher data retrieval times and increased wear on physical drives.

Data Persistence and Reliability

In-memory computing stores data primarily in RAM, offering faster processing speeds but requiring strategies like snapshotting or replication to ensure data persistence and reliability. Disk-based computing writes data directly to persistent storage, providing inherent durability but at the cost of slower access times compared to memory. Balancing speed and reliability, hybrid approaches often combine in-memory speed with disk-based persistence to optimize big data workflows.

Use Cases in Real-world Big Data Applications

In-memory computing accelerates real-time analytics and decision-making by storing and processing Big Data directly in RAM, making it ideal for use cases like fraud detection, dynamic pricing, and streaming analytics. Disk-based computing, while slower due to I/O latency, remains essential for batch processing, historical data analysis, and large-scale data storage in applications such as data warehousing and ETL pipelines. Enterprises leverage in-memory systems like Apache Ignite for speed-critical tasks and Hadoop HDFS for cost-effective, scalable long-term data management.

Challenges and Limitations of Each Approach

In-memory computing accelerates data processing by storing data in RAM, but faces challenges such as high cost, limited memory capacity, and data volatility issues during power failures. Disk-based computing offers greater storage capacity and data persistence, yet struggles with slower data retrieval speeds and latency caused by mechanical read/write operations. Both approaches require careful consideration of scalability, cost-efficiency, and data integrity to optimize performance in big data environments.

Choosing the Right Computing Model for Big Data

In-memory computing processes Big Data by storing and analyzing data directly in RAM, offering faster data retrieval and real-time analytics compared to disk-based computing, which relies on slower hard drive storage. Disk-based computing can handle larger volumes of data economically but often incurs higher latency, making it suitable for batch processing and archival purposes. Selecting the right computing model depends on specific Big Data use cases, prioritizing speed and immediate insights favors in-memory systems, whereas cost-efficiency and massive data capacity lean toward disk-based solutions.

In-memory Computing vs Disk-based Computing Infographic

techiny.com

techiny.com