In-memory processing enables real-time analytics by storing data directly in RAM, significantly reducing latency compared to disk-based processing, which relies on slower read/write speeds of physical storage. This approach enhances performance for big data applications requiring rapid data access and iterative computations. Disk-based processing, while more cost-effective for massive datasets, often struggles with speed and efficiency, making it less ideal for time-sensitive analytics.

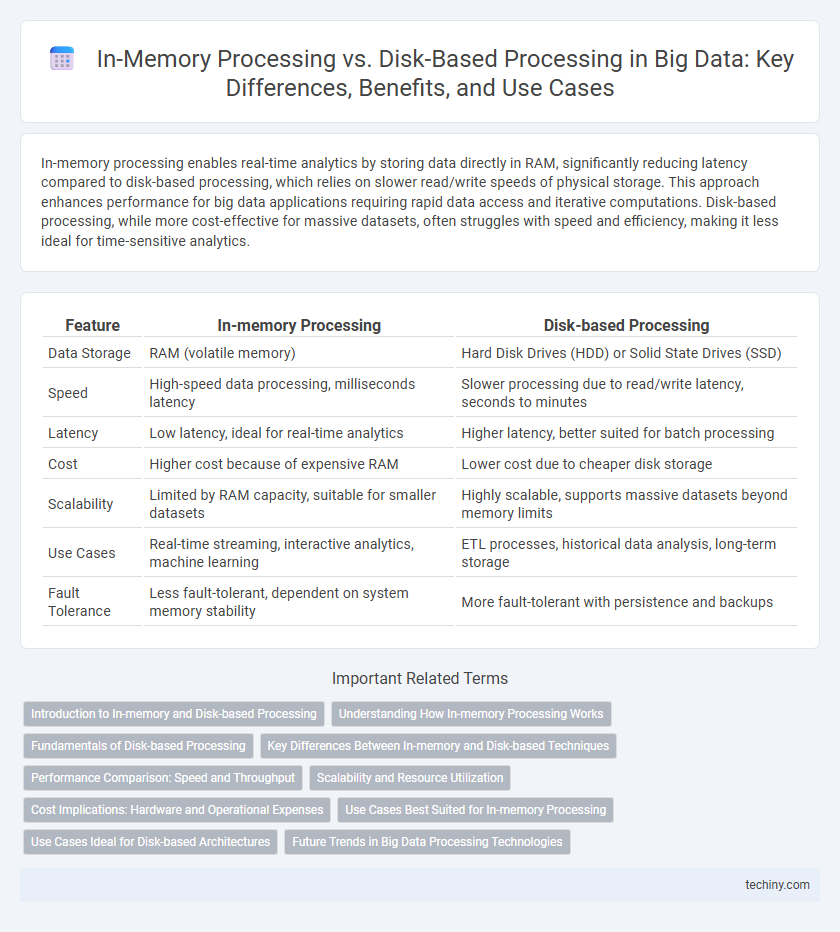

Table of Comparison

| Feature | In-memory Processing | Disk-based Processing |

|---|---|---|

| Data Storage | RAM (volatile memory) | Hard Disk Drives (HDD) or Solid State Drives (SSD) |

| Speed | High-speed data processing, milliseconds latency | Slower processing due to read/write latency, seconds to minutes |

| Latency | Low latency, ideal for real-time analytics | Higher latency, better suited for batch processing |

| Cost | Higher cost because of expensive RAM | Lower cost due to cheaper disk storage |

| Scalability | Limited by RAM capacity, suitable for smaller datasets | Highly scalable, supports massive datasets beyond memory limits |

| Use Cases | Real-time streaming, interactive analytics, machine learning | ETL processes, historical data analysis, long-term storage |

| Fault Tolerance | Less fault-tolerant, dependent on system memory stability | More fault-tolerant with persistence and backups |

Introduction to In-memory and Disk-based Processing

In-memory processing stores data directly in the system's RAM, enabling ultra-fast access and real-time analytics by minimizing latency compared to disk-based systems. Disk-based processing relies on persistent storage like HDDs or SSDs, which offers greater capacity but incurs slower data retrieval times due to physical read/write constraints. This fundamental difference impacts throughput, query speed, and resource utilization in big data environments.

Understanding How In-memory Processing Works

In-memory processing leverages RAM to store and analyze data, enabling significantly faster data retrieval and computation compared to disk-based processing, which relies on slower hard drive access. This approach reduces input/output latency by keeping data directly accessible in memory, facilitating real-time analytics and accelerated decision-making. Technologies like Apache Spark utilize in-memory processing to optimize big data workloads, enhancing performance in complex data scenarios.

Fundamentals of Disk-based Processing

Disk-based processing relies on reading and writing data to persistent storage devices such as HDDs or SSDs, prioritizing capacity over speed compared to in-memory solutions. It uses strategies like indexing, caching, and batch processing to optimize data retrieval and manage large-scale datasets that exceed available RAM. Despite higher latency, disk-based processing is essential for handling massive volumes of historical data where cost-effective storage is critical.

Key Differences Between In-memory and Disk-based Techniques

In-memory processing leverages RAM to store and analyze data, resulting in significantly faster data retrieval and real-time analytics compared to disk-based processing, which relies on slower persistent storage like HDDs or SSDs. Disk-based techniques, while offering larger storage capacity at a lower cost, suffer from higher latency due to frequent read/write operations, making them less suitable for time-sensitive big data applications. The key difference lies in speed and efficiency: in-memory processing excels in rapid data access and iterative computations, whereas disk-based processing is preferable for handling massive datasets that exceed memory limits.

Performance Comparison: Speed and Throughput

In-memory processing significantly outperforms disk-based processing in big data applications by offering faster data access speeds and higher throughput due to data being stored in RAM rather than slower disk storage. This reduces latency and accelerates real-time analytics, enabling quicker decision-making and more efficient handling of large data volumes. Disk-based processing, while cost-effective for massive datasets, encounters bottlenecks from slower I/O operations, limiting its performance in speed-sensitive tasks.

Scalability and Resource Utilization

In-memory processing enables faster data analysis by storing data directly in RAM, significantly improving scalability for real-time big data applications. Disk-based processing relies on slower rotational storage or SSDs, leading to increased latency and less efficient resource utilization under heavy workloads. Effective scalability in big data environments depends on balancing high-speed memory access with storage capacity to optimize overall system performance.

Cost Implications: Hardware and Operational Expenses

In-memory processing requires high-capacity RAM, which significantly increases upfront hardware costs compared to cheaper disk-based storage solutions. Operational expenses for in-memory systems tend to be higher due to increased power consumption and cooling requirements associated with maintaining large volatile memory arrays. Disk-based processing, while slower, lowers total cost of ownership with less expensive hardware and reduced energy demands.

Use Cases Best Suited for In-memory Processing

In-memory processing excels in real-time analytics, where rapid data retrieval and low latency are critical for decision-making in industries such as finance, healthcare, and e-commerce. It is ideal for complex event processing, fraud detection, and interactive data visualization that require instant access to large datasets. Use cases involving iterative machine learning algorithms and streaming data also benefit significantly from the high-speed performance of in-memory processing compared to disk-based alternatives.

Use Cases Ideal for Disk-based Architectures

Disk-based processing is ideal for big data use cases involving extremely large datasets that exceed available memory capacity, such as historical data analysis, batch processing, and archive querying. Workloads requiring fault tolerance and data persistence, like ETL pipelines and large-scale data warehousing, benefit from disk-based storage due to its durability and lower cost per terabyte. Applications with less frequent data access patterns or complex data transformations, including log analytics and regulatory compliance reporting, also leverage disk-based architectures effectively.

Future Trends in Big Data Processing Technologies

In-memory processing is rapidly advancing with the integration of high-capacity RAM and non-volatile memory technologies, enabling faster analytics and real-time data insights compared to traditional disk-based processing. Future trends point to hybrid architectures combining in-memory and disk storage to balance speed, cost, and scalability, while leveraging AI-driven optimizations for workload management and resource allocation. Emerging big data platforms will increasingly adopt edge computing and distributed in-memory frameworks to enhance latency-sensitive applications and support massive-scale data environments.

In-memory Processing vs Disk-based Processing Infographic

techiny.com

techiny.com