Hadoop MapReduce processes large datasets by dividing tasks into map and reduce phases, offering reliability and scalability but with slower performance due to disk-based operations. Apache Spark improves upon this by using in-memory processing, significantly accelerating data analytics and supporting complex workflows like machine learning. Spark's versatility and speed make it the preferred choice for real-time big data pet analytics compared to the batch-centric approach of Hadoop MapReduce.

Table of Comparison

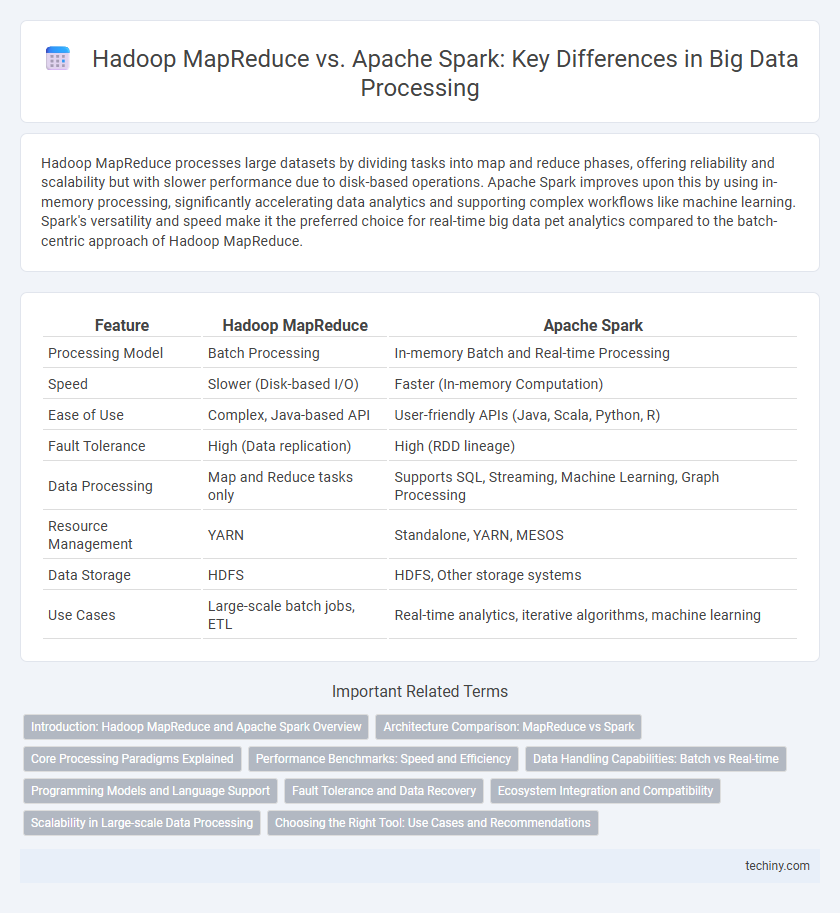

| Feature | Hadoop MapReduce | Apache Spark |

|---|---|---|

| Processing Model | Batch Processing | In-memory Batch and Real-time Processing |

| Speed | Slower (Disk-based I/O) | Faster (In-memory Computation) |

| Ease of Use | Complex, Java-based API | User-friendly APIs (Java, Scala, Python, R) |

| Fault Tolerance | High (Data replication) | High (RDD lineage) |

| Data Processing | Map and Reduce tasks only | Supports SQL, Streaming, Machine Learning, Graph Processing |

| Resource Management | YARN | Standalone, YARN, MESOS |

| Data Storage | HDFS | HDFS, Other storage systems |

| Use Cases | Large-scale batch jobs, ETL | Real-time analytics, iterative algorithms, machine learning |

Introduction: Hadoop MapReduce and Apache Spark Overview

Hadoop MapReduce is a distributed computing framework designed for processing large datasets across clusters using a batch processing model, emphasizing fault tolerance and scalability. Apache Spark offers an in-memory processing engine that accelerates data analytics, supporting batch and real-time streaming workloads with higher speed and versatility. Both frameworks are integral to big data ecosystems, with Hadoop MapReduce suited for extensive, high-latency tasks and Spark optimized for iterative algorithms and low-latency applications.

Architecture Comparison: MapReduce vs Spark

Hadoop MapReduce relies on a two-stage disk-based data processing model, where the Map phase outputs intermediate data to disk before the Reduce phase processes it, resulting in higher latency and I/O overhead. Apache Spark employs an in-memory computation framework using Resilient Distributed Datasets (RDDs) to enable faster data processing by minimizing disk I/O and enabling iterative operations. Spark's DAG (Directed Acyclic Graph) execution engine offers optimized task scheduling and fault tolerance, outperforming Hadoop MapReduce's linear data flow in complex, multi-stage workflows.

Core Processing Paradigms Explained

Hadoop MapReduce processes data through a batch-oriented, disk-based model where tasks are divided into map and reduce phases, enabling fault tolerance and scalability for large datasets. Apache Spark utilizes an in-memory processing engine with Resilient Distributed Datasets (RDDs), offering faster computation and iterative processing by minimizing disk I/O. The core paradigm shift from Hadoop's disk-bound map and reduce steps to Spark's memory-first, DAG-based execution graph significantly enhances performance for complex analytics and real-time applications.

Performance Benchmarks: Speed and Efficiency

Hadoop MapReduce processes large datasets by breaking tasks into map and reduce phases, but its disk-based storage causes higher latency compared to Apache Spark's in-memory computation, resulting in slower speed for iterative algorithms. Apache Spark demonstrates superior performance benchmarks with up to 100x faster processing speed in memory and 10x faster on disk, significantly enhancing efficiency for complex big data analytics. Spark's optimized DAG execution engine and fault-tolerant resilience further improve processing throughput and reduce job latency compared to traditional MapReduce frameworks.

Data Handling Capabilities: Batch vs Real-time

Hadoop MapReduce excels in processing large-scale batch data by dividing tasks into independent batch jobs, enabling efficient disk-based storage and fault tolerance for extensive datasets. Apache Spark offers superior real-time data handling with in-memory processing, significantly speeding up iterative and interactive workloads while supporting stream processing frameworks like Spark Streaming. Spark's architecture optimizes latency-sensitive applications, making it ideal for scenarios requiring both batch and real-time analytics.

Programming Models and Language Support

Hadoop MapReduce relies on a rigid batch processing model primarily supporting Java, which limits flexibility and real-time analytics capabilities. Apache Spark offers a versatile in-memory processing framework with rich APIs in Java, Scala, Python, and R, enabling faster iterative computations and interactive data analysis. The advanced programming model of Spark supports complex workflows and seamless integration with machine learning libraries, significantly outperforming Hadoop MapReduce in developer productivity and performance.

Fault Tolerance and Data Recovery

Hadoop MapReduce ensures fault tolerance by breaking jobs into smaller tasks and rerunning failed tasks to recover lost data. Apache Spark employs resilient distributed datasets (RDDs) that automatically track data lineage, enabling efficient recomputation of lost partitions during node failures. Spark's in-memory processing combined with lineage-based recovery allows faster data recovery compared to MapReduce's disk-based approach.

Ecosystem Integration and Compatibility

Hadoop MapReduce integrates seamlessly with the Hadoop ecosystem, leveraging HDFS for distributed storage and YARN for resource management, making it highly compatible with existing Hadoop tools like Hive and HBase. Apache Spark, while also compatible with HDFS and YARN, extends ecosystem integration by supporting a broader range of data sources and formats such as Apache Cassandra, Apache Kafka, and Amazon S3, enabling versatile data processing workflows. Spark's in-memory computing capability further enhances performance within hybrid ecosystems, optimizing compatibility for real-time analytics and iterative algorithms.

Scalability in Large-scale Data Processing

Hadoop MapReduce excels in scalability by distributing large-scale data processing tasks across extensive clusters, efficiently handling massive datasets through its batch processing model. Apache Spark offers superior scalability with in-memory computing, enabling faster iterative processing and real-time analytics on large data volumes in distributed environments. Both frameworks support horizontal scaling, but Spark's architecture provides more flexibility and performance benefits for scalable big data workloads.

Choosing the Right Tool: Use Cases and Recommendations

Hadoop MapReduce excels in batch processing large volumes of data with its robust fault tolerance and scalability, making it suitable for legacy systems and long-running analytics jobs. Apache Spark offers superior performance for iterative algorithms, real-time data processing, and machine learning tasks due to its in-memory computing capabilities and ease of use with APIs in Scala, Python, and Java. Organizations should evaluate data latency requirements, computational complexity, and existing infrastructure when choosing between MapReduce for heavy-duty batch operations and Spark for interactive analytics and streaming applications.

Hadoop MapReduce vs Apache Spark Infographic

techiny.com

techiny.com