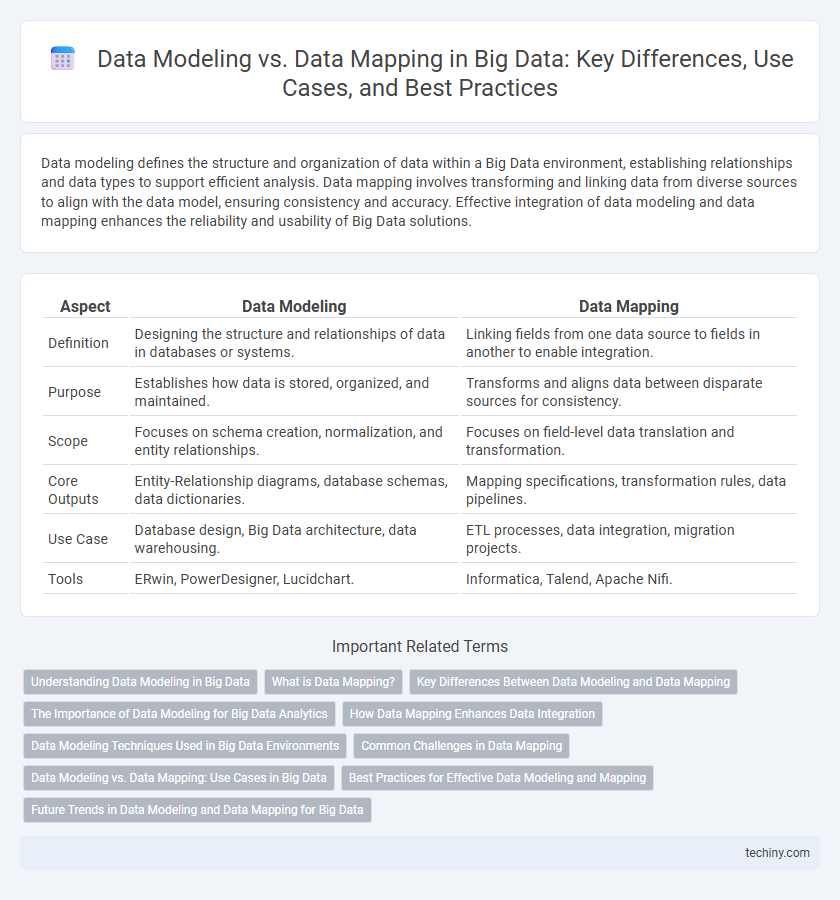

Data modeling defines the structure and organization of data within a Big Data environment, establishing relationships and data types to support efficient analysis. Data mapping involves transforming and linking data from diverse sources to align with the data model, ensuring consistency and accuracy. Effective integration of data modeling and data mapping enhances the reliability and usability of Big Data solutions.

Table of Comparison

| Aspect | Data Modeling | Data Mapping |

|---|---|---|

| Definition | Designing the structure and relationships of data in databases or systems. | Linking fields from one data source to fields in another to enable integration. |

| Purpose | Establishes how data is stored, organized, and maintained. | Transforms and aligns data between disparate sources for consistency. |

| Scope | Focuses on schema creation, normalization, and entity relationships. | Focuses on field-level data translation and transformation. |

| Core Outputs | Entity-Relationship diagrams, database schemas, data dictionaries. | Mapping specifications, transformation rules, data pipelines. |

| Use Case | Database design, Big Data architecture, data warehousing. | ETL processes, data integration, migration projects. |

| Tools | ERwin, PowerDesigner, Lucidchart. | Informatica, Talend, Apache Nifi. |

Understanding Data Modeling in Big Data

Data modeling in Big Data involves creating abstract representations of complex data structures to facilitate efficient storage, retrieval, and analysis across distributed systems. It defines the schema, relationships, and constraints that guide how data is organized, ensuring scalability and consistency in massive datasets such as those processed by Hadoop or Spark. Understanding data modeling is crucial for designing data architectures that optimize query performance, data integration, and machine learning applications within big data ecosystems.

What is Data Mapping?

Data mapping in big data involves creating connections between data elements from different sources to enable seamless integration and analysis. It translates data fields from one database schema to another, ensuring accurate data transformation and consistency across systems. Effective data mapping supports data migration, synchronization, and interoperability in complex big data environments.

Key Differences Between Data Modeling and Data Mapping

Data modeling defines the overall structure and organization of data by creating abstract representations like entities, attributes, and relationships, while data mapping focuses on the exact transformation and translation of data elements between different systems or formats. Data modeling serves as a blueprint for database design and integration, establishing the semantic framework and constraints, whereas data mapping ensures accurate data migration, synchronization, and interoperability by aligning source and target data fields. Key differences include purpose--design versus transformation--and scope--conceptual schema versus field-level correspondence.

The Importance of Data Modeling for Big Data Analytics

Data modeling structures vast datasets by defining entities, relationships, and data flows, enabling efficient organization crucial for Big Data analytics. By creating accurate data models, organizations ensure data quality and consistency, which directly impact the performance of analytics algorithms and reporting tools. Effective data modeling reduces data redundancy and improves integration across diverse Big Data sources, facilitating faster insights and decision-making.

How Data Mapping Enhances Data Integration

Data mapping plays a crucial role in enhancing data integration by establishing precise relationships between source and target datasets, allowing seamless data consolidation from diverse Big Data platforms. It ensures consistency and accuracy during the transformation process by aligning differing data formats, schemas, and structures, which improves overall data quality and usability. Effective data mapping accelerates integration workflows and supports real-time analytics by enabling smooth interoperability across complex data ecosystems.

Data Modeling Techniques Used in Big Data Environments

Data modeling techniques in big data environments emphasize schema-on-read approaches, enabling flexible and dynamic data interpretation without predefined schemas. Techniques like entity-relationship modeling, dimensional modeling, and graph modeling are adapted to handle the volume, velocity, and variety characteristic of big data systems. Utilizing tools such as Apache Hadoop Hive and Apache Spark SQL facilitates effective structuring and querying of large-scale datasets, optimizing analytical capabilities in big data contexts.

Common Challenges in Data Mapping

Data mapping in big data environments often faces common challenges such as handling heterogeneous data sources, ensuring data quality, and maintaining schema consistency across evolving datasets. Complex transformations and the lack of standardized metadata can lead to mapping errors and data misinterpretation. Addressing these issues requires robust tools that support automated metadata management and flexible schema evolution to streamline data integration and enhance accuracy.

Data Modeling vs. Data Mapping: Use Cases in Big Data

Data modeling in big data involves creating abstract representations of complex datasets to ensure efficient storage, retrieval, and analysis, primarily used in designing data warehouses and data lakes. Data mapping focuses on establishing relationships and transformations between data sources and target systems, essential for data integration and ETL processes in big data pipelines. Use cases for data modeling include structuring unstructured data, while data mapping supports data migration and real-time data synchronization across distributed big data environments.

Best Practices for Effective Data Modeling and Mapping

Effective data modeling involves creating a clear and scalable schema that accurately represents business entities and their relationships, leveraging normalization and entity-relationship diagrams (ERDs) to maintain data integrity. Data mapping best practices include thorough documentation of source-to-target data flows, use of automated tools for consistency checks, and alignment with data governance standards to ensure accuracy during integration processes. Combining precise modeling with rigorous data mapping enhances data quality and supports robust analytics in big data environments.

Future Trends in Data Modeling and Data Mapping for Big Data

Future trends in data modeling for big data emphasize adaptive, schema-on-read techniques that enable dynamic integration of diverse datasets, enhancing real-time analytics capabilities. Data mapping is evolving through AI-driven automation, improving accuracy and reducing manual intervention in complex data environments. Integration of machine learning algorithms within both data modeling and mapping processes is poised to optimize data quality and accelerate decision-making in big data ecosystems.

Data Modeling vs Data Mapping Infographic

techiny.com

techiny.com