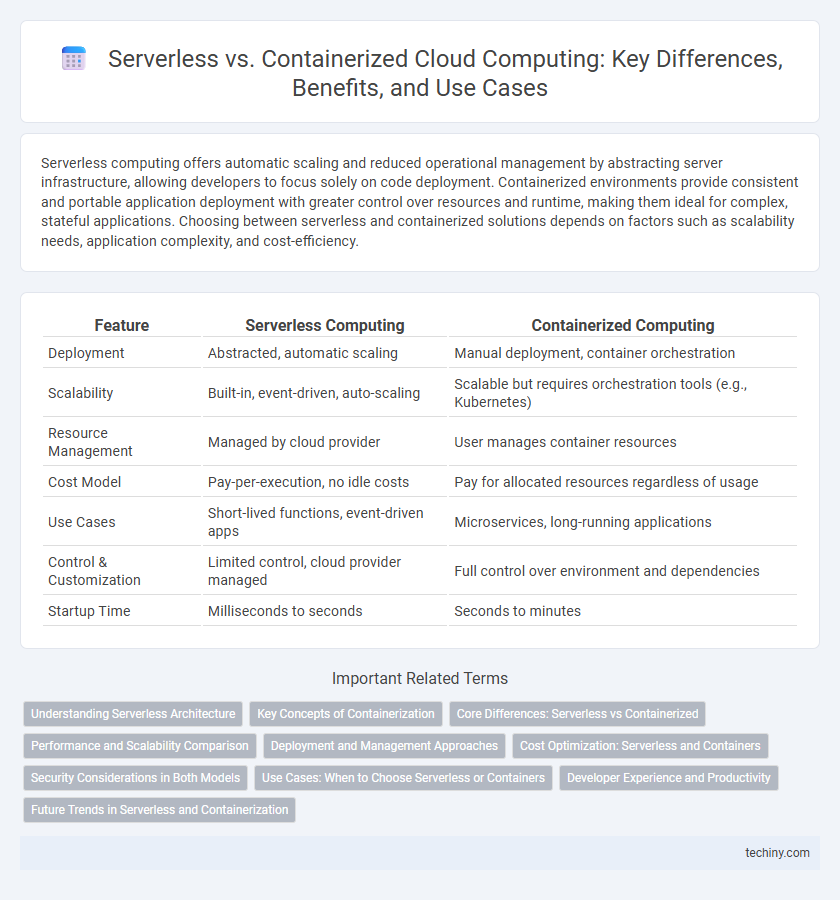

Serverless computing offers automatic scaling and reduced operational overhead by abstracting away server infrastructure, so developers can focus on application code. Containerized environments provide consistent, portable deployments with greater control over resources and the runtime, which suits complex, long-running, or stateful applications. The choice between serverless and containerized solutions depends on factors such as scalability needs, application complexity, and cost efficiency.

Comparison Table

| Feature | Serverless Computing | Containerized Computing |
|---|---|---|
| Deployment | Abstracted; provider handles provisioning and scaling | Self-managed deployment with container orchestration |
| Scalability | Built-in, event-driven auto-scaling | Scalable, but requires orchestration tools (e.g., Kubernetes) |
| Resource Management | Managed by the cloud provider | User manages container resources |
| Cost Model | Pay per execution; no idle costs | Pay for allocated resources regardless of usage |
| Use Cases | Short-lived functions, event-driven apps | Microservices, long-running applications |
| Control & Customization | Limited; runtime managed by the cloud provider | Full control over environment and dependencies |
| Startup Time | Milliseconds to seconds (cold starts) | Seconds to minutes |

Understanding Serverless Architecture

Serverless architecture removes the need to manage server infrastructure: the provider provisions and scales resources automatically based on demand, so developers can focus on writing and deploying code. It relies on Function as a Service (FaaS) platforms such as AWS Lambda or Azure Functions, which run individual functions in response to events (for example HTTP requests, queue messages, or file uploads) without a persistent server for the developer to manage. Containerized environments, by contrast, package applications and their dependencies into containers for consistent deployment but still require orchestration and infrastructure management.
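To make the FaaS model concrete, here is a minimal sketch in Python: the platform, not the developer, calls a handler once per event. The `handler(event, context)` signature follows the AWS Lambda Python convention (`lambda_handler` is a common but configurable name); the event shape, a JSON string in `body` as in an API Gateway proxy event, and the greeting logic are illustrative assumptions.

```python
import json


def lambda_handler(event, context):
    """Hypothetical HTTP-style handler invoked once per event by the platform.

    Assumes an API Gateway proxy-style event where `body` holds a JSON string.
    `context` carries runtime metadata and is unused in this sketch.
    """
    payload = json.loads(event.get("body") or "{}")
    name = payload.get("name", "world")
    # Return a proxy-style response: status code, headers, and a JSON string body.
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }


if __name__ == "__main__":
    # Local smoke test: on a real platform the event and context are supplied for you.
    fake_event = {"body": json.dumps({"name": "Lambda"})}
    print(lambda_handler(fake_event, None))
```

Note that the function holds no server loop and no port: scaling out simply means the platform runs more copies of the handler in parallel.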

Key Concepts of Containerization

Containerization isolates applications and their dependencies in lightweight, portable containers that run consistently across computing environments. Key concepts include container images, which package application code and libraries, and container runtimes (such as containerd or runc), which execute those images; tools like Docker build and run containers, while orchestrators like Kubernetes schedule them across clusters. Because containers share the host kernel instead of each booting a full guest operating system, they improve resource efficiency and scalability and simplify deployment compared with traditional virtual machines.

Core Differences: Serverless vs Containerized

Serverless computing abstracts infrastructure management by automatically allocating resources and scaling functions in response to demand, eliminating the need for server provisioning. Containerized environments, built with tools such as Docker and Kubernetes, give developers consistent, portable runtime environments by encapsulating applications and dependencies in isolated containers. The core difference lies in operational control: serverless offers event-driven execution with minimal overhead, while containerized solutions require managing the container lifecycle and orchestration but allow greater customization and support persistent, long-running services.

Performance and Scalability Comparison

Serverless computing scales automatically with workload demand, without manual intervention, and reduces operational overhead. Containerized environments provide consistent performance with fine-grained resource control, which suits applications that need predictable latency and custom scaling configurations. Serverless functions can incur cold-start latency when a new instance must be initialized before it handles a request, whereas already-running containers serve requests without that startup delay and deliver stable throughput. The right scaling strategy therefore depends on each application's latency and throughput requirements.
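Cold starts can be illustrated with a toy simulation. This is a sketch under hypothetical assumptions, not any provider's documented behavior: a single function instance is reclaimed after `idle_timeout_s` without traffic, requests never overlap, and the cold-start and execution times are made-up parameters. Providers generally do not publish a fixed idle timeout.

```python
from dataclasses import dataclass


@dataclass
class Invocation:
    arrival_s: float   # when the request arrived (seconds)
    cold: bool         # True if this request paid the cold-start penalty
    latency_ms: float  # total latency seen by the caller


def simulate(arrivals_s, idle_timeout_s, cold_start_ms, exec_ms):
    """Toy model of one function instance serving sequential requests.

    Hypothetical assumptions: the platform reclaims the instance after
    idle_timeout_s without traffic, and requests never overlap, so no
    additional instances are ever started.
    """
    results = []
    idle_since = None  # None means no warm instance exists yet
    for t in sorted(arrivals_s):
        cold = idle_since is None or (t - idle_since) > idle_timeout_s
        latency = exec_ms + (cold_start_ms if cold else 0.0)
        results.append(Invocation(t, cold, latency))
        idle_since = t + latency / 1000.0  # instance is idle again once it finishes
    return results


runs = simulate([0, 1, 2, 700], idle_timeout_s=600, cold_start_ms=800, exec_ms=50)
for r in runs:
    print(f"t={r.arrival_s:>4}s cold={r.cold!s:<5} latency={r.latency_ms:.0f} ms")
```

With these illustrative numbers, the first request and the one arriving after a long idle gap pay the cold-start penalty (850 ms), while the two requests in between are served warm (50 ms). A long-running container in the same scenario would have paid its startup cost once, before any traffic arrived.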

Deployment and Management Approaches

Serverless architecture eliminates the need for infrastructure management by abstracting server provisioning and scaling, allowing developers to deploy functions that automatically respond to events. Containerized deployment uses container orchestration platforms like Kubernetes to manage application packaging, scaling, and lifecycle, offering more control over environment configuration and dependencies. Serverless prioritizes rapid deployment with minimal management overhead, whereas containerization provides granular infrastructure control and portability for complex, stateful applications.
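As an example of how an orchestrator handles scaling, the Kubernetes Horizontal Pod Autoscaler documents its core rule as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). The sketch below applies that rule with a tolerance band (0.1 is the HPA default) and min/max replica bounds. It is a simplified illustration, not a reimplementation: the real controller also applies stabilization windows, pod readiness handling, and multi-metric selection, and the default bounds here are assumptions.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10, tolerance=0.1):
    """Sketch of the Kubernetes HPA scaling rule:
    desired = ceil(current_replicas * current_metric / target_metric).

    Simplified: ignores stabilization windows, pod readiness, missing
    metrics, and multiple metrics. Default bounds are illustrative.
    """
    ratio = current_metric / target_metric
    # Close enough to the target: skip scaling to avoid flapping.
    if abs(1.0 - ratio) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # Respect the configured replica bounds.
    return max(min_replicas, min(max_replicas, desired))


print(desired_replicas(4, current_metric=90, target_metric=60))  # scale out
print(desired_replicas(4, current_metric=15, target_metric=60))  # scale in
```

For example, 4 replicas averaging 90% CPU against a 60% target give ceil(4 × 1.5) = 6 replicas, while 15% utilization scales in to ceil(4 × 0.25) = 1. In a serverless platform, the equivalent decision is made by the provider and is not exposed for tuning in this way.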

Cost Optimization: Serverless and Containers

Serverless computing reduces costs by charging only for actual use (on platforms such as AWS Lambda, a per-invocation fee plus execution time scaled by allocated memory), eliminating spending on idle infrastructure. Containerized solutions require provisioning and scaling the underlying resources, which can increase fixed costs. Containers offer predictable pricing through reserved resource allocation, enabling cost control for steady workloads, whereas serverless excels under variable or unpredictable traffic thanks to automatic scaling and pay-per-use billing. Combining both approaches can optimize costs: use serverless for sporadic, event-driven tasks and containers for continuous, resource-intensive applications.
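The trade-off between pay-per-use and always-on billing comes down to simple arithmetic. The sketch below compares the two models and computes a break-even request volume. All prices are illustrative placeholders, not any provider's current rates, and the comparison assumes a single always-on container instance can absorb the whole load.

```python
# Illustrative placeholder prices; check your provider's current pricing.
PRICE_PER_MILLION_REQUESTS = 0.20    # serverless: per 1M invocations
PRICE_PER_GB_SECOND = 0.0000167      # serverless: per GB of memory per second
CONTAINER_PRICE_PER_HOUR = 0.05      # one always-on container instance
HOURS_PER_MONTH = 730


def serverless_monthly_cost(requests, avg_duration_s, memory_gb):
    """Pay-per-use: a per-request fee plus compute billed in GB-seconds."""
    request_fee = requests / 1_000_000 * PRICE_PER_MILLION_REQUESTS
    gb_seconds = requests * avg_duration_s * memory_gb
    return request_fee + gb_seconds * PRICE_PER_GB_SECOND


def container_monthly_cost(instances):
    """Allocated capacity is billed every hour, whether or not it is busy."""
    return instances * CONTAINER_PRICE_PER_HOUR * HOURS_PER_MONTH


def break_even_requests(avg_duration_s, memory_gb, instances=1):
    """Monthly request volume at which both models cost the same."""
    per_request = (PRICE_PER_MILLION_REQUESTS / 1_000_000
                   + avg_duration_s * memory_gb * PRICE_PER_GB_SECOND)
    return container_monthly_cost(instances) / per_request


for monthly_requests in (1_000_000, 100_000_000):
    print(monthly_requests,
          round(serverless_monthly_cost(monthly_requests, 0.2, 0.5), 2),
          round(container_monthly_cost(1), 2))
print(round(break_even_requests(0.2, 0.5)))
```

With these made-up numbers (200 ms per request, 0.5 GB of memory), serverless costs about 1.87 per month at one million requests against 36.50 for the container, but the comparison flips at roughly 19.5 million requests per month. The exact crossover depends entirely on real prices, request duration, and how many container instances the load actually needs.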

Security Considerations in Both Models

Serverless architectures reduce attack surface by abstracting server management, minimizing exposure to OS vulnerabilities, though they require strict function-level permission controls and robust API security. Containerized environments offer granular network segmentation and isolation via container orchestrators like Kubernetes but demand continuous vulnerability scanning and patching of container images. Both models necessitate comprehensive identity and access management (IAM) policies, encryption at rest and in transit, and proactive monitoring to mitigate potential security risks effectively.
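Function-level permission control usually means giving each function its own least-privilege role. The sketch below checks an IAM-style policy document (the `Version`/`Statement`/`Effect`/`Action`/`Resource` structure used by AWS IAM) for `Allow` statements that grant wildcard actions or resources. The checker is a hypothetical helper, far simpler than real policy analyzers such as IAM Access Analyzer; the `Orders` table and account ID are placeholders.

```python
def _as_list(value):
    """IAM allows Action/Resource as a single string or a list of strings."""
    return [value] if isinstance(value, str) else list(value)


def find_overly_broad_statements(policy):
    """Return (statement_index, reason) pairs for Allow statements that use
    '*' or a service-wide wildcard such as 'dynamodb:*'.

    Partial wildcards (e.g. 's3:Get*') and conditions are not analyzed.
    """
    findings = []
    statements = policy.get("Statement", [])
    if isinstance(statements, dict):  # a single statement may be given as an object
        statements = [statements]
    for i, stmt in enumerate(statements):
        if stmt.get("Effect") != "Allow":
            continue
        actions = _as_list(stmt.get("Action", []))
        resources = _as_list(stmt.get("Resource", []))
        if any(a == "*" or a.endswith(":*") for a in actions):
            findings.append((i, "wildcard action"))
        if "*" in resources:
            findings.append((i, "wildcard resource"))
    return findings


# Least-privilege role for a function that only reads one table (placeholder ARN).
narrow = {
    "Version": "2012-10-17",
    "Statement": [{
        "Effect": "Allow",
        "Action": ["dynamodb:GetItem"],
        "Resource": "arn:aws:dynamodb:us-east-1:123456789012:table/Orders",
    }],
}
broad = {
    "Version": "2012-10-17",
    "Statement": [{"Effect": "Allow", "Action": "dynamodb:*", "Resource": "*"}],
}
print(find_overly_broad_statements(narrow))
print(find_overly_broad_statements(broad))
```

Running a check like this in a deployment pipeline catches the common mistake of sharing one broad role across many functions, which would undo much of the attack-surface benefit described above.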

Use Cases: When to Choose Serverless or Containers

Serverless computing excels in event-driven applications and unpredictable workloads where automatic scaling and minimal operational management are crucial, such as APIs, microservices, and real-time data processing. Containerized solutions are ideal for complex, long-running applications that require consistent environments, customization, and easy migration across hybrid cloud infrastructure, including legacy application modernization and multi-container orchestration with Kubernetes. Serverless suits rapid development and cost efficiency under variable demand, while containers provide greater control and portability for scalable, stateful applications.
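The criteria above can be condensed into a rule-of-thumb helper. This is a hypothetical sketch: the `Workload` fields and the order of the checks are illustrative choices rather than an established decision procedure, and the 900-second limit is AWS Lambda's maximum function timeout (other FaaS platforms use different limits).

```python
from dataclasses import dataclass

# AWS Lambda's maximum function timeout (15 minutes); other platforms differ.
MAX_FUNCTION_RUNTIME_S = 900


@dataclass
class Workload:
    max_runtime_s: float         # longest single unit of work, in seconds
    holds_state_in_memory: bool  # e.g. sessions, caches, long-lived connections
    event_driven: bool           # triggered by requests, queue messages, uploads
    steady_high_load: bool       # traffic is high and flat most of the time


def suggest_platform(w: Workload) -> str:
    """Hypothetical rule of thumb; not a substitute for profiling and cost modeling."""
    # Work that outlives a function timeout or depends on in-memory state
    # fits a long-running container better.
    if w.max_runtime_s > MAX_FUNCTION_RUNTIME_S or w.holds_state_in_memory:
        return "containers"
    # Flat, high traffic tends to favor always-on, reserved capacity.
    if w.steady_high_load:
        return "containers"
    # Short, event-triggered work with variable demand favors pay-per-use.
    if w.event_driven:
        return "serverless"
    return "either"  # no strong signal either way


print(suggest_platform(Workload(2, False, True, False)))     # image-thumbnail style job
print(suggest_platform(Workload(3600, False, False, False)))  # hour-long batch job
```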

Developer Experience and Productivity

Serverless computing eliminates the need for infrastructure management, enabling developers to focus on writing code and accelerating deployment cycles with automatic scaling and event-driven execution. Containerized environments offer more control and customization, fostering consistent development workflows and streamlined collaboration through isolated, reproducible units. Developer productivity benefits from serverless by reducing operational overhead, while containerization enhances flexibility and integration with existing DevOps pipelines.

Future Trends in Serverless and Containerization

Future trends in serverless architecture emphasize enhanced scalability, reduced cold start latency, and deeper integration with AI-driven automation for optimized resource management. Containerization continues to evolve with Kubernetes advancements, stronger security protocols, and improved multi-cloud orchestration capabilities to support hybrid environments. Innovations in edge computing and microservices deployment accelerate the convergence of serverless and containerized paradigms, driving next-generation cloud-native applications.

Serverless vs Containerized Infographic

techiny.com
