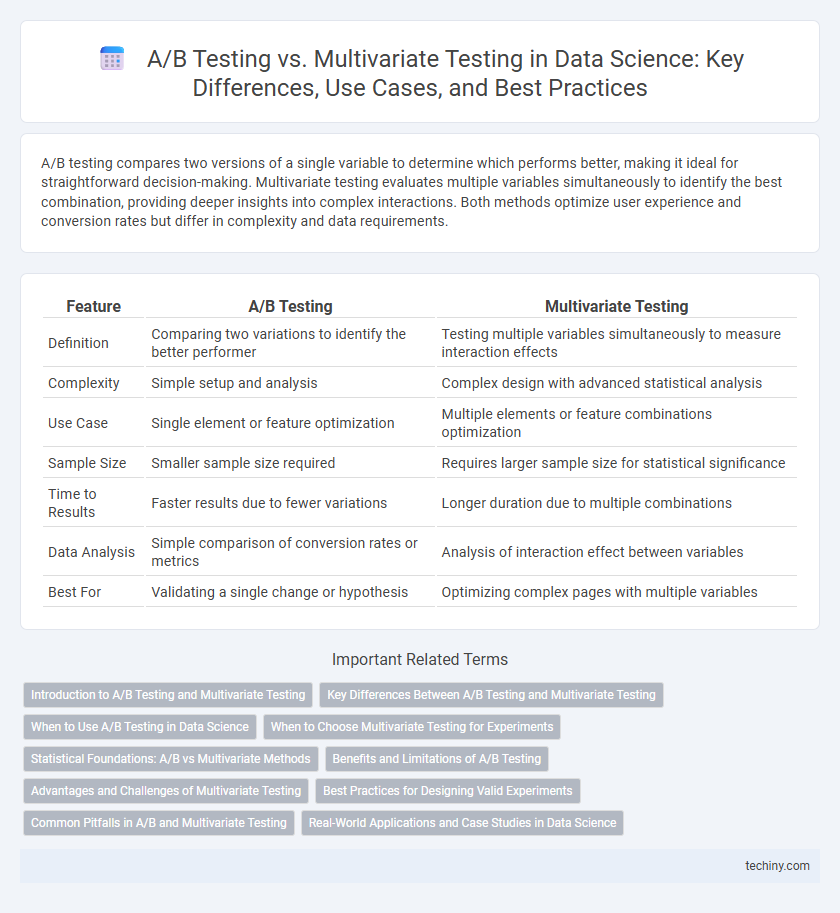

A/B testing compares two versions of a single variable to determine which performs better, making it ideal for straightforward decision-making. Multivariate testing evaluates multiple variables simultaneously to identify the best combination, providing deeper insights into complex interactions. Both methods optimize user experience and conversion rates but differ in complexity and data requirements.

Table of Comparison

| Feature | A/B Testing | Multivariate Testing |
|---|---|---|
| Definition | Comparing two variations to identify the better performer | Testing multiple variables simultaneously to measure interaction effects |
| Complexity | Simple setup and analysis | Complex design with advanced statistical analysis |
| Use Case | Single element or feature optimization | Multiple elements or feature combinations optimization |
| Sample Size | Smaller sample size required | Requires larger sample size for statistical significance |
| Time to Results | Faster results due to fewer variations | Longer duration due to multiple combinations |
| Data Analysis | Simple comparison of conversion rates or metrics | Analysis of interaction effect between variables |
| Best For | Validating a single change or hypothesis | Optimizing complex pages with multiple variables |

Introduction to A/B Testing and Multivariate Testing

A/B testing compares two versions of a single variable to determine which performs better by analyzing user responses, making it ideal for straightforward changes. Multivariate testing evaluates multiple variables and their combinations simultaneously, providing deeper insights into the interaction effects on user behavior but requiring larger sample sizes for statistical significance. Both methods employ statistical analysis to optimize digital experiences and enhance decision-making in data-driven marketing and product development.

Key Differences Between A/B Testing and Multivariate Testing

A/B testing compares two versions of a single variable to identify which performs better, making it ideal for straightforward decisions with limited variations. Multivariate testing evaluates multiple variables and their combinations simultaneously to understand how different elements interact and impact overall performance. While A/B testing offers simplicity and faster results, multivariate testing provides deeper insights into complex variable interactions but requires larger sample sizes and more time for accurate conclusions.

When to Use A/B Testing in Data Science

A/B testing is ideal for comparing two distinct variations to identify which one performs better on a specific metric, such as click-through rate or conversion rate, making it highly effective for testing simple, binary changes. This method excels when the goal is to isolate the impact of a single variable or feature, enabling clear, actionable insights without the complexity of multiple variable interactions. Data scientists should use A/B testing when there is a hypothesis about a particular element's effect, and the test requires a straightforward comparison to optimize user experience or business outcomes.

When to Choose Multivariate Testing for Experiments

Multivariate testing is ideal for experiments requiring the simultaneous assessment of multiple variables and their interactions, enabling a deeper understanding of how combined elements influence user behavior. This method is most effective when the goal is to optimize complex web pages or product features with numerous components, as it provides granular insights into which combination performs best. Choose multivariate testing when sufficient traffic exists to achieve statistical significance across all variable combinations within a reasonable timeframe.
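The traffic requirement can be made concrete by counting combinations. The sketch below is illustrative only: the page elements, their variations, and the traffic figures are hypothetical, and it assumes a full factorial design with visitors split evenly across combinations.

```python
import math
from itertools import product

# Hypothetical page elements and their variations (not from any real test).
factors = {
    "headline": ["control", "benefit-led"],
    "button_color": ["blue", "green", "orange"],
    "hero_image": ["product", "lifestyle"],
}

def full_factorial(factors):
    """Return every combination of factor levels as a list of dicts."""
    names = list(factors)
    return [dict(zip(names, levels)) for levels in product(*factors.values())]

def days_to_fill(n_combinations, visitors_per_day, visitors_per_combination):
    """Days needed to reach the target per combination with an even traffic split."""
    return math.ceil(n_combinations * visitors_per_combination / visitors_per_day)

combos = full_factorial(factors)
print(len(combos), "combinations")
print(days_to_fill(len(combos), visitors_per_day=6000, visitors_per_combination=5000), "days")
```

Adding one more level to any factor multiplies the number of combinations, so the required duration grows quickly as the design widens.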

Statistical Foundations: A/B vs Multivariate Methods

A/B testing relies on comparing two variants to determine statistically significant differences using hypothesis testing and p-values, typically based on binomial or normal distributions. Multivariate testing extends this concept by analyzing multiple variables simultaneously, employing factorial designs and interaction effects to assess combined influence on outcomes. Both methods use confidence intervals and statistical power considerations, but multivariate testing requires larger sample sizes to keep adequate power, because traffic is divided among more factor-level combinations and interaction effects must be estimated in addition to main effects.
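As one possible illustration of the hypothesis test behind a simple A/B comparison, the sketch below runs a two-sided two-proportion z-test using the normal approximation. The function name and the conversion counts are made up for the example; a production analysis would more likely use a statistics library.

```python
import math

def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Two-sided z-test for a difference between two conversion rates."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the null hypothesis that both variants convert equally.
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value

# Hypothetical counts: 200 of 5000 visitors convert on A, 250 of 5000 on B.
z, p = two_proportion_z_test(conv_a=200, n_a=5000, conv_b=250, n_b=5000)
print(f"z = {z:.3f}, p = {p:.4f}")
```

The normal approximation is reasonable only when each variant has a reasonably large number of conversions and non-conversions; for small counts an exact test is a safer choice.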

Benefits and Limitations of A/B Testing

A/B testing offers clear advantages such as simplicity, ease of implementation, and precise measurement of the impact of a single variable change on user behavior, making it ideal for optimizing conversion rates and user experience. However, its limitations include the inability to test multiple variables simultaneously, longer overall timelines when several changes must be tested one after another, and no insight into interaction effects between variables. Despite these constraints, A/B testing remains a fundamental method in data science for making data-driven decisions with statistically significant results.

Advantages and Challenges of Multivariate Testing

Multivariate testing enables data scientists to analyze the impact of multiple variables simultaneously, offering deeper insights into complex user interactions; it can also be more efficient than running a series of separate A/B tests on the same elements. It uncovers the best combination of elements by testing different variations across multiple factors, and it shows whether the effect of one element depends on another. Challenges include requiring larger sample sizes for statistical significance, higher complexity in experimental design, and increased computational resources for data analysis.
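To make the idea of an interaction concrete, the sketch below computes main effects and a difference-of-differences interaction for a 2x2 test. The conversion rates and element names are invented for illustration, and the numbers are point estimates only, with no significance test attached.

```python
# Hypothetical conversion rates per cell of a 2x2 test: (headline, button) -> rate.
rates = {
    ("old", "old"): 0.040,
    ("new", "old"): 0.046,
    ("old", "new"): 0.044,
    ("new", "new"): 0.058,
}

def effects_2x2(rates, levels_a=("old", "new"), levels_b=("old", "new")):
    """Return (main effect of A, main effect of B, interaction) as rate differences."""
    a0, a1 = levels_a
    b0, b1 = levels_b
    # Lift from changing factor A, measured separately at each level of B.
    lift_a_at_b0 = rates[(a1, b0)] - rates[(a0, b0)]
    lift_a_at_b1 = rates[(a1, b1)] - rates[(a0, b1)]
    # Lift from changing factor B, measured separately at each level of A.
    lift_b_at_a0 = rates[(a0, b1)] - rates[(a0, b0)]
    lift_b_at_a1 = rates[(a1, b1)] - rates[(a1, b0)]
    main_a = (lift_a_at_b0 + lift_a_at_b1) / 2
    main_b = (lift_b_at_a0 + lift_b_at_a1) / 2
    # Interaction defined here as the difference of differences.
    interaction = lift_a_at_b1 - lift_a_at_b0
    return main_a, main_b, interaction

main_a, main_b, interaction = effects_2x2(rates)
print(f"headline: {main_a:.3f}, button: {main_b:.3f}, interaction: {interaction:.3f}")
```

A nonzero interaction means the new headline helps more (or less) when paired with the new button, which is exactly what two separate A/B tests would not reveal.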

Best Practices for Designing Valid Experiments

Designing valid experiments in A/B testing requires clear hypothesis formulation, random assignment of participants, and sufficient sample size to ensure statistical power. In multivariate testing, best practices include limiting the number of variables to avoid combinatorial explosion, applying factorial designs strategically, and using robust analytics to interpret interaction effects accurately. Both methods demand thorough pre-experiment planning, consistent data collection protocols, and appropriate significance level settings to minimize Type I and Type II errors.
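A rough sample size estimate can be worked out before the experiment starts. The sketch below uses a common normal-approximation formula for comparing two proportions; the function name, baseline rate, and target rate are assumptions chosen for the example, and dedicated power-analysis tools may give slightly different numbers.

```python
import math
from statistics import NormalDist

def sample_size_per_variant(baseline_rate, expected_rate, alpha=0.05, power=0.80):
    """Approximate visitors needed per variant for a two-sided test of two proportions."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for the Type I error rate
    z_beta = NormalDist().inv_cdf(power)           # quantile for the desired power
    variance = (baseline_rate * (1 - baseline_rate)
                + expected_rate * (1 - expected_rate))
    effect = expected_rate - baseline_rate
    return math.ceil((z_alpha + z_beta) ** 2 * variance / effect ** 2)

# Hypothetical scenario: baseline conversion of 4%, hoping to detect a lift to 5%.
n = sample_size_per_variant(baseline_rate=0.04, expected_rate=0.05)
print(n, "visitors per variant")
```

Halving the detectable effect roughly quadruples the required sample, which is why small expected lifts call for long-running tests.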

Common Pitfalls in A/B and Multivariate Testing

Common pitfalls in A/B and multivariate testing include insufficient sample size leading to underpowered results, improper randomization causing biased outcomes, and failure to account for multiple comparisons, which increases the risk of false positives. Overlooking interaction effects in multivariate testing can result in misinterpreted data, while premature stopping of tests often yields unreliable conclusions. Adequate test duration, robust experimental design, and appropriate statistical corrections are essential to avoid these common issues and ensure valid insights.
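One widely used correction for multiple comparisons is the Holm-Bonferroni procedure. The sketch below is a minimal version of it, chosen here as an example rather than as the only valid correction; the p-values are hypothetical.

```python
def holm_bonferroni(p_values, alpha=0.05):
    """Return a list of booleans, True where the null hypothesis is rejected."""
    m = len(p_values)
    # Visit hypotheses from the smallest p-value to the largest.
    order = sorted(range(m), key=lambda i: p_values[i])
    rejected = [False] * m
    for rank, idx in enumerate(order):
        # The threshold loosens as more hypotheses are rejected.
        if p_values[idx] <= alpha / (m - rank):
            rejected[idx] = True
        else:
            break  # once one test fails, all larger p-values fail too
    return rejected

# Hypothetical p-values from four variant-versus-control comparisons.
p_values = [0.001, 0.04, 0.03, 0.20]
print(holm_bonferroni(p_values))
```

Without a correction, three of these four comparisons would pass a 0.05 threshold; after the correction only the strongest result survives.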

Real-World Applications and Case Studies in Data Science

A/B testing is well suited to optimizing single-variable changes and is commonly used in marketing campaigns, where engagement lifts of 20-30% are sometimes cited, although results depend heavily on the product, audience, and baseline. Multivariate testing excels in complex environments like e-commerce platforms, enabling simultaneous evaluation of multiple page elements, with conversion rate improvements of over 15% reported in some cases. Companies like Amazon and Netflix are frequently mentioned as examples of how integrating both testing methods supports data-driven decisions that improve user experience and revenue.

A/B testing vs multivariate testing Infographic

techiny.com
