MapReduce processes large-scale data by dividing tasks into map and reduce functions, enabling parallel batch processing but often suffering from high latency. Spark offers in-memory computing, dramatically speeding up data processing and supporting iterative algorithms, making it ideal for real-time analytics and machine learning applications. Its rich API and ease of integration provide greater flexibility and efficiency compared to the traditional MapReduce paradigm.

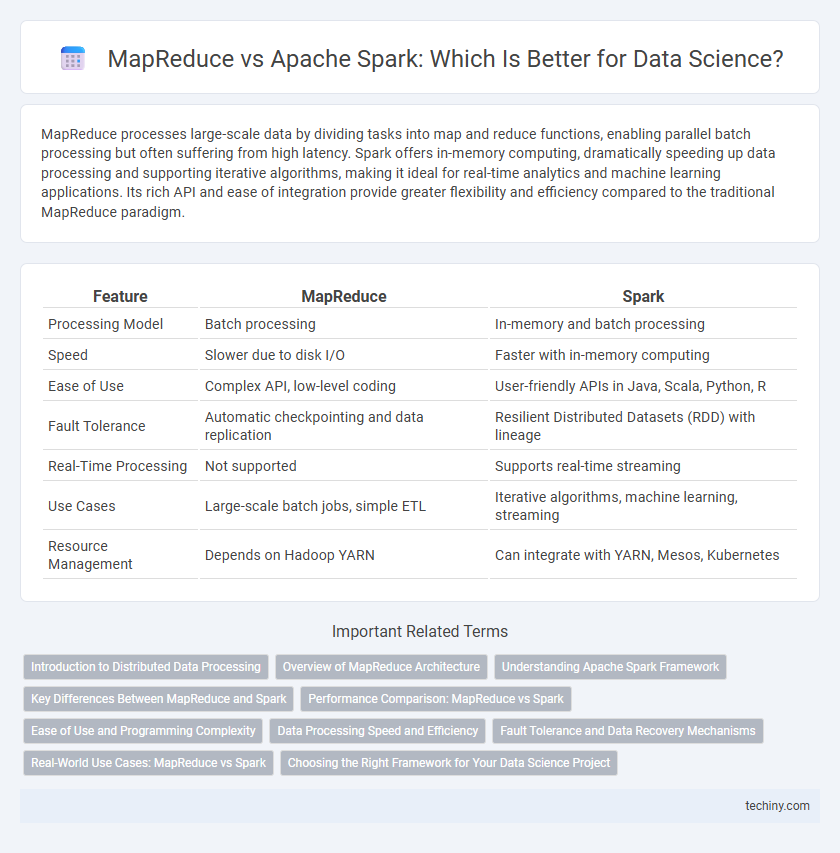

Table of Comparison

| Feature | MapReduce | Spark |

|---|---|---|

| Processing Model | Batch processing | In-memory and batch processing |

| Speed | Slower due to disk I/O | Faster with in-memory computing |

| Ease of Use | Complex API, low-level coding | User-friendly APIs in Java, Scala, Python, R |

| Fault Tolerance | Automatic checkpointing and data replication | Resilient Distributed Datasets (RDD) with lineage |

| Real-Time Processing | Not supported | Supports real-time streaming |

| Use Cases | Large-scale batch jobs, simple ETL | Iterative algorithms, machine learning, streaming |

| Resource Management | Depends on Hadoop YARN | Can integrate with YARN, Mesos, Kubernetes |

Introduction to Distributed Data Processing

MapReduce is a programming model designed for processing large-scale datasets across distributed clusters by dividing tasks into map and reduce functions, enabling parallel data processing. Apache Spark enhances distributed data processing by offering in-memory computation, faster iterative algorithms, and support for complex workflows beyond MapReduce's batch processing limitations. Spark's capabilities significantly reduce latency and improve performance in big data analytics compared to traditional MapReduce frameworks.

Overview of MapReduce Architecture

MapReduce architecture consists of two primary phases: Map and Reduce, designed for processing and generating large data sets with a parallel, distributed algorithm on a cluster. The Map function processes input key-value pairs to produce intermediate key-value pairs, while the Reduce function merges all intermediate values associated with the same intermediate key. This architecture emphasizes fault tolerance, data locality, and scalability, making it suitable for batch processing but often less efficient than Apache Spark for iterative algorithms and in-memory computations.

Understanding Apache Spark Framework

Apache Spark framework excels in in-memory data processing, offering faster performance than traditional MapReduce by minimizing disk I/O operations. Spark's Resilient Distributed Datasets (RDDs) enable fault-tolerant, parallel computations across clusters, enhancing efficiency for iterative algorithms in data science. Its integrated libraries for machine learning, graph processing, and streaming provide versatile tools that streamline complex big data workflows.

Key Differences Between MapReduce and Spark

MapReduce processes data through a two-stage disk-based model, causing higher latency and slower execution for iterative tasks, while Spark employs in-memory computing to deliver faster processing speeds and real-time analytics capabilities. Spark supports a wider range of workloads with integrated libraries for machine learning, streaming, and graph processing, unlike MapReduce's rigid batch processing framework. Fault tolerance in MapReduce relies on job re-execution upon failure, whereas Spark uses resilient distributed datasets (RDDs) to recover data efficiently without rerunning entire jobs.

Performance Comparison: MapReduce vs Spark

Spark outperforms MapReduce by executing data processing tasks in-memory, significantly reducing disk I/O latency and accelerating iterative algorithms common in machine learning and data analytics. MapReduce relies on batch processing with heavy disk reads and writes between map and reduce phases, causing higher latency in complex workflows. Spark's DAG execution engine optimizes task scheduling and resource utilization, delivering up to 100x faster performance in memory and 10x faster on disk compared to MapReduce.

Ease of Use and Programming Complexity

MapReduce requires extensive boilerplate code and complex configuration, making it less user-friendly for data scientists. Spark offers a more intuitive API with high-level libraries like Spark SQL and DataFrames, significantly reducing programming complexity. Its in-memory processing and concise syntax enable faster development and easier debugging compared to MapReduce's batch-oriented paradigm.

Data Processing Speed and Efficiency

Spark outperforms MapReduce in data processing speed and efficiency by utilizing in-memory computation and DAG execution, enabling faster iterative algorithms and real-time analytics. MapReduce relies on disk-based storage between each processing step, which introduces latency and reduces overall efficiency, especially for complex workflows. Spark's ability to cache datasets in memory significantly accelerates processing times compared to the batch-oriented nature of MapReduce.

Fault Tolerance and Data Recovery Mechanisms

MapReduce ensures fault tolerance through its task re-execution model, where failed tasks are automatically retried on different nodes, leveraging data replication in HDFS for recovery. Apache Spark improves fault tolerance by using Resilient Distributed Datasets (RDDs), enabling lineage-based recomputation instead of relying solely on data replication, which reduces recovery time and overhead. Spark's in-memory processing combined with its DAG execution model allows faster data recovery and better fault tolerance compared to MapReduce's batch-oriented approach.

Real-World Use Cases: MapReduce vs Spark

MapReduce excels in batch processing large-scale log analysis and data warehousing in Hadoop ecosystems, providing reliable fault tolerance and scalability for structured data. Spark outperforms with real-time stream processing, iterative machine learning algorithms, and graph analytics, leveraging in-memory computation to deliver faster results in dynamic data environments. Enterprises use MapReduce for cost-effective, long-term data storage analysis while Spark powers advanced analytics and predictive modeling in sectors like finance, e-commerce, and telecommunications.

Choosing the Right Framework for Your Data Science Project

MapReduce excels in processing large-scale batch data through its distributed computing model, making it suitable for tasks with minimal interactivity and strict fault tolerance needs. Apache Spark offers in-memory computing and faster processing speeds, ideal for iterative algorithms, real-time data analysis, and machine learning workflows. Selecting between MapReduce and Spark depends on project requirements such as latency tolerance, data volume, complexity of transformations, and the need for dynamic data processing capabilities.

map-reduce vs spark Infographic

techiny.com

techiny.com