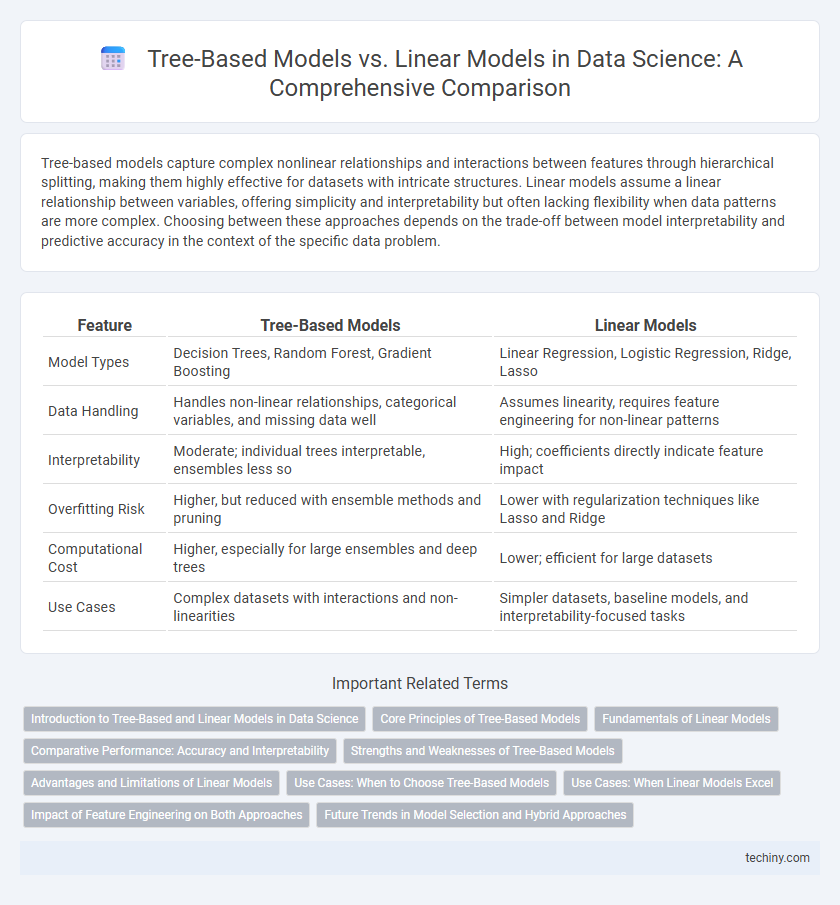

Tree-based models capture complex nonlinear relationships and interactions between features through hierarchical splitting, making them highly effective for datasets with intricate structures. Linear models assume a linear relationship between variables, offering simplicity and interpretability but often lacking flexibility when data patterns are more complex. Choosing between these approaches depends on the trade-off between model interpretability and predictive accuracy in the context of the specific data problem.

Table of Comparison

| Feature | Tree-Based Models | Linear Models |

|---|---|---|

| Model Types | Decision Trees, Random Forest, Gradient Boosting | Linear Regression, Logistic Regression, Ridge, Lasso |

| Data Handling | Handles non-linear relationships, categorical variables, and missing data well | Assumes linearity, requires feature engineering for non-linear patterns |

| Interpretability | Moderate; individual trees interpretable, ensembles less so | High; coefficients directly indicate feature impact |

| Overfitting Risk | Higher, but reduced with ensemble methods and pruning | Lower with regularization techniques like Lasso and Ridge |

| Computational Cost | Higher, especially for large ensembles and deep trees | Lower; efficient for large datasets |

| Use Cases | Complex datasets with interactions and non-linearities | Simpler datasets, baseline models, and interpretability-focused tasks |

Introduction to Tree-Based and Linear Models in Data Science

Tree-based models, such as decision trees, random forests, and gradient boosting machines, excel at capturing non-linear relationships and interactions between features in data science tasks. Linear models, including linear regression and logistic regression, assume a linear relationship between input variables and the target, offering simplicity and interpretability. Choosing between tree-based and linear models depends on data complexity, the need for feature interactions, and the importance of model explainability in predictive analytics.

Core Principles of Tree-Based Models

Tree-based models operate by recursively partitioning the feature space into distinct regions, creating a tree structure where each node represents a decision based on feature thresholds; this non-linear approach captures complex interactions without requiring feature scaling. Unlike linear models that assume additive relationships, tree-based methods inherently model variable interactions and handle categorical variables with ease, enhancing flexibility in representing diverse data patterns. The core principle relies on greedy algorithms like CART to optimize splits based on impurity metrics such as Gini index or entropy, enabling robust performance in classification and regression tasks.

Fundamentals of Linear Models

Linear models establish relationships between input features and the target variable through weighted sums, emphasizing interpretability and simplicity in data structure. Unlike tree-based models, they assume linearity and additive effects, which can limit their ability to capture complex, non-linear patterns. Their core advantage lies in efficient parameter estimation, often using methods like ordinary least squares or gradient descent, making them foundational for understanding predictive modeling.

Comparative Performance: Accuracy and Interpretability

Tree-based models often outperform linear models in accuracy by capturing complex nonlinear relationships and interactions within data, making them suitable for intricate datasets. However, linear models provide greater interpretability through straightforward coefficient analysis, allowing easier understanding of feature importance and effect direction. Balancing accuracy and interpretability depends on the specific use case, with tree-based models favored for predictive power and linear models preferred when model transparency is critical.

Strengths and Weaknesses of Tree-Based Models

Tree-based models excel in capturing complex, non-linear relationships and interactions between features without requiring extensive data preprocessing or feature scaling. They are robust to outliers and can naturally handle both numerical and categorical data, but they tend to overfit on small datasets and may suffer from instability due to high variance. Ensemble methods like Random Forests and Gradient Boosting mitigate overfitting and improve predictive accuracy by combining multiple trees.

Advantages and Limitations of Linear Models

Linear models offer simplicity and interpretability, making them ideal for datasets with a clear linear relationship among features. They perform efficiently on high-dimensional data and require less computational power compared to tree-based models. However, linear models struggle with capturing complex nonlinear patterns and interactions, leading to potential underfitting when relationships in data are nonlinear or multifaceted.

Use Cases: When to Choose Tree-Based Models

Tree-based models excel in handling complex, non-linear relationships and can automatically capture feature interactions without extensive preprocessing, making them ideal for tasks like fraud detection, customer churn prediction, and risk assessment. Their robustness to outliers and ability to work with mixed data types suit applications in finance, healthcare, and marketing where data heterogeneity is common. For problems requiring interpretability combined with high predictive performance on structured datasets, models like Random Forests and Gradient Boosting Machines outperform traditional linear models.

Use Cases: When Linear Models Excel

Linear models excel in scenarios with a clear linear relationship between features and target variables, such as predicting housing prices or risk assessment in finance. They perform well on high-dimensional datasets with sparse features, commonly found in text classification and bioinformatics. Their interpretability and efficiency make them ideal for real-time predictions and situations requiring straightforward model explanations.

Impact of Feature Engineering on Both Approaches

Feature engineering profoundly impacts the performance of tree-based models and linear models by shaping the input data representation. Tree-based models like random forests and gradient boosting naturally handle complex feature interactions and non-linear relationships, often requiring less extensive feature transformations. Linear models depend heavily on carefully engineered features such as polynomial terms or interaction variables to capture non-linearity, making feature engineering critical for their predictive accuracy.

Future Trends in Model Selection and Hybrid Approaches

Tree-based models such as Random Forests and Gradient Boosting Machines demonstrate superior performance in handling complex, non-linear relationships compared to traditional linear models, which excel in interpretability and computational efficiency. Emerging trends in data science emphasize hybrid approaches combining tree-based and linear algorithms to leverage the strengths of both, enhancing predictive accuracy and model robustness. Advances in automated machine learning (AutoML) platforms increasingly facilitate optimal model selection by incorporating ensemble techniques and real-time adaptation to dynamic datasets.

tree-based models vs linear models Infographic

techiny.com

techiny.com