Bag-of-words represents text by counting the frequency of individual words, ignoring word order and context, which simplifies text processing but can miss semantic nuances. In contrast, n-grams capture sequences of n consecutive words, preserving some contextual information and improving the ability to detect phrases and word dependencies. Choosing between bag-of-words and n-grams depends on the trade-off between model complexity and capturing textual structure for better predictive performance.

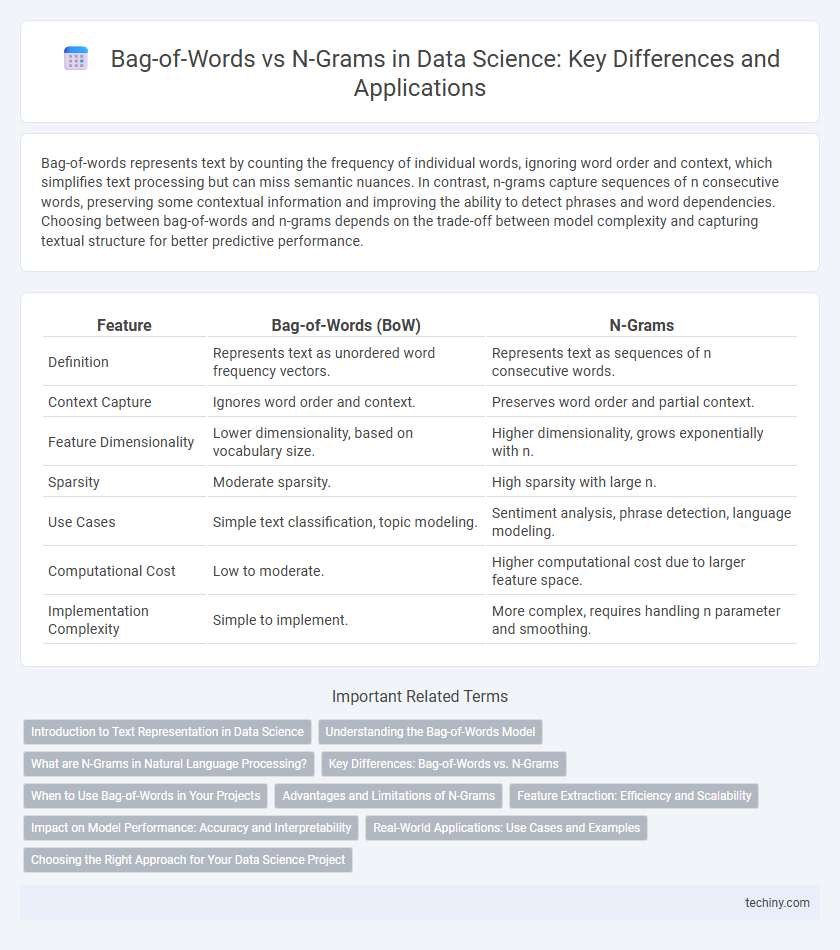

Table of Comparison

| Feature | Bag-of-Words (BoW) | N-Grams |

|---|---|---|

| Definition | Represents text as unordered word frequency vectors. | Represents text as sequences of n consecutive words. |

| Context Capture | Ignores word order and context. | Preserves word order and partial context. |

| Feature Dimensionality | Lower dimensionality, based on vocabulary size. | Higher dimensionality, grows exponentially with n. |

| Sparsity | Moderate sparsity. | High sparsity with large n. |

| Use Cases | Simple text classification, topic modeling. | Sentiment analysis, phrase detection, language modeling. |

| Computational Cost | Low to moderate. | Higher computational cost due to larger feature space. |

| Implementation Complexity | Simple to implement. | More complex, requires handling n parameter and smoothing. |

Introduction to Text Representation in Data Science

Bag-of-words (BoW) is a fundamental text representation method that transforms text into fixed-length vectors by counting word frequencies without considering word order. N-grams extend BoW by capturing contiguous sequences of n words, preserving some contextual information and improving performance in language models. Both techniques serve as the basis for feature extraction in natural language processing tasks, with n-grams offering enhanced semantic representation at the cost of increased computational complexity.

Understanding the Bag-of-Words Model

The Bag-of-Words model represents text by counting the frequency of individual words while ignoring grammar and word order, enabling straightforward feature extraction for machine learning tasks. It simplifies documents into fixed-length vectors based on a predefined vocabulary, making it computationally efficient but sometimes less effective in capturing context compared to n-grams. This approach is particularly useful for text classification and sentiment analysis where individual word occurrences significantly impact the model's predictive power.

What are N-Grams in Natural Language Processing?

N-grams in Natural Language Processing are contiguous sequences of n items from a given text, commonly words or characters, used to capture context and word order information. Unlike the Bag-of-Words model, which ignores word order, n-grams retain some syntactic structure by considering combinations like bigrams or trigrams, enhancing the understanding of phrase patterns. This approach improves tasks such as text classification, language modeling, and sentiment analysis by incorporating the sequential nature of language.

Key Differences: Bag-of-Words vs. N-Grams

Bag-of-Words (BoW) represents text by counting individual word occurrences, ignoring word order and context, which simplifies feature extraction but loses semantic meaning. N-Grams extend BoW by capturing contiguous sequences of n words, preserving local word order and context, enhancing the model's ability to understand phrases and subtle language nuances. The key difference lies in BoW's focus on single words versus N-Grams' focus on word combinations, impacting the granularity and expressiveness of text representation in machine learning models.

When to Use Bag-of-Words in Your Projects

Bag-of-words is ideal for projects where interpretability and simplicity are key, such as basic text classification or sentiment analysis with smaller datasets. It performs well when the word order is less important, and the focus is on word frequency rather than context. Use bag-of-words when computational efficiency and straightforward feature extraction outweigh the need for capturing semantic nuances.

Advantages and Limitations of N-Grams

N-grams capture contextual information by encoding contiguous sequences of words, improving text representation beyond individual terms as seen in bag-of-words. This approach enhances performance in language modeling and sentiment analysis by preserving phrase-level meaning, yet it drastically increases feature dimensionality and sparsity, leading to higher computational costs. Effectiveness depends on choosing the optimal n, since larger n-grams provide richer context but exacerbate data sparsity and model complexity.

Feature Extraction: Efficiency and Scalability

Bag-of-words (BoW) models offer efficient feature extraction by representing text as sparse vectors based on word frequency, making them scalable for large datasets with straightforward computational requirements. N-grams enhance context capture by incorporating contiguous word sequences but increase feature space dimensionality exponentially, leading to higher computational cost and potential sparsity challenges. Balancing the trade-off between BoW's efficiency and n-grams' nuanced representation is crucial for scalable data science applications in natural language processing.

Impact on Model Performance: Accuracy and Interpretability

Bag-of-words captures individual word frequencies but often ignores context, leading to simpler models with moderate accuracy and higher interpretability. N-grams incorporate sequences of words, enhancing context understanding and improving accuracy in complex language tasks while increasing model complexity and reducing interpretability. Choosing between bag-of-words and n-grams depends on the trade-off between desired accuracy gains and the need for model transparency in specific data science applications.

Real-World Applications: Use Cases and Examples

Bag-of-words models excel in document classification tasks such as spam detection by capturing word frequency without word order, making them computationally efficient. N-grams enhance language modeling and sentiment analysis by incorporating contiguous word sequences, which capture context and phrase-level meaning critical in social media monitoring and chatbot development. Real-world applications like machine translation and predictive text leverage n-grams to improve accuracy by understanding word dependencies beyond single tokens.

Choosing the Right Approach for Your Data Science Project

Choosing between bag-of-words and n-grams depends on the complexity and context sensitivity of the dataset. Bag-of-words is effective for simpler, frequency-based tasks, while n-grams capture word sequences and context, improving performance in tasks like sentiment analysis and language modeling. Evaluating the trade-off between model complexity and computational cost ensures the selection aligns with project goals and dataset characteristics.

bag-of-words vs n-grams Infographic

techiny.com

techiny.com