Accuracy measures the proportion of correct predictions, providing a straightforward assessment of model performance, while log loss evaluates the uncertainty of probabilistic predictions, penalizing confident but incorrect guesses more heavily. Optimizing for accuracy alone may overlook the quality of predicted probabilities, whereas minimizing log loss encourages models to be well-calibrated. Balancing accuracy and log loss is essential for robust predictive modeling, especially in scenarios requiring reliable probability estimates.

Table of Comparison

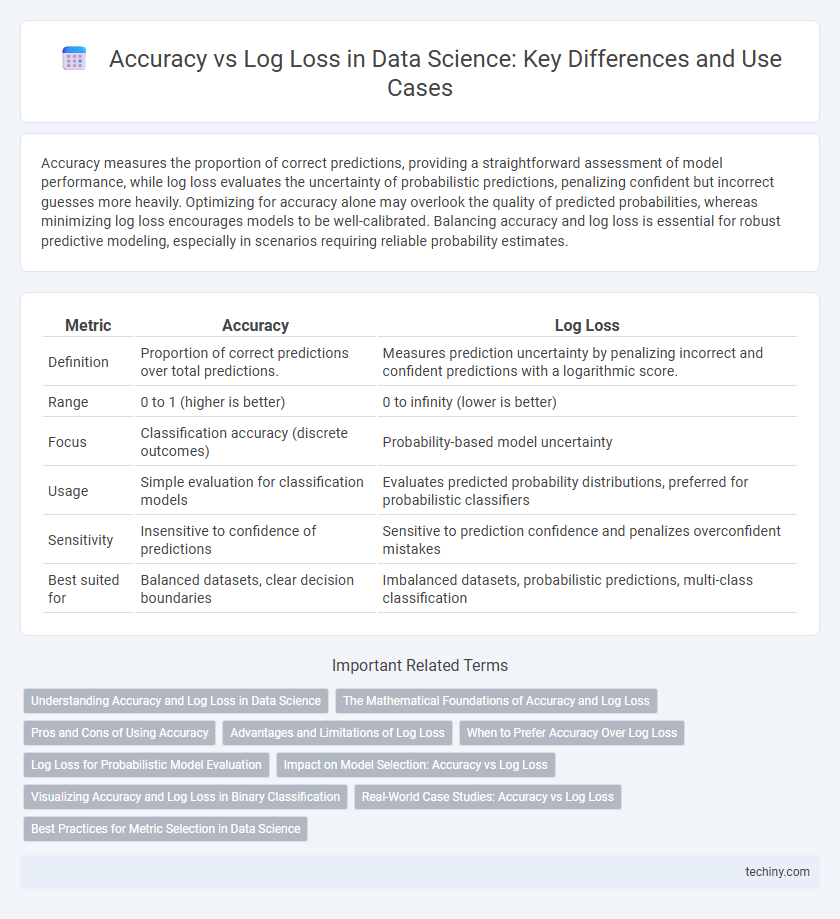

| Metric | Accuracy | Log Loss |

|---|---|---|

| Definition | Proportion of correct predictions over total predictions. | Measures prediction uncertainty by penalizing incorrect and confident predictions with a logarithmic score. |

| Range | 0 to 1 (higher is better) | 0 to infinity (lower is better) |

| Focus | Classification accuracy (discrete outcomes) | Probability-based model uncertainty |

| Usage | Simple evaluation for classification models | Evaluates predicted probability distributions, preferred for probabilistic classifiers |

| Sensitivity | Insensitive to confidence of predictions | Sensitive to prediction confidence and penalizes overconfident mistakes |

| Best suited for | Balanced datasets, clear decision boundaries | Imbalanced datasets, probabilistic predictions, multi-class classification |

Understanding Accuracy and Log Loss in Data Science

Accuracy measures the proportion of correctly predicted instances, providing an intuitive metric for model performance in classification tasks. Log loss, or cross-entropy loss, evaluates the uncertainty of predictions by penalizing incorrect and overconfident predictions, thus offering a nuanced assessment of probabilistic models. Emphasizing log loss can lead to models with better calibrated probabilities, while accuracy remains valuable for straightforward classification success rates.

The Mathematical Foundations of Accuracy and Log Loss

Accuracy represents the proportion of correctly classified instances out of the total predictions, mathematically defined as the ratio of true positives and true negatives to all samples, emphasizing discrete classification outcomes. Log loss, or cross-entropy loss, quantifies the uncertainty of a probabilistic classifier by penalizing the negative logarithm of predicted probabilities corresponding to true labels, thereby capturing the confidence of predictions. The fundamental difference lies in accuracy's binary evaluation of correctness versus log loss's continuous measure of prediction quality rooted in probability theory and information entropy.

Pros and Cons of Using Accuracy

Accuracy measures the proportion of correctly classified instances and is easy to interpret, making it useful for balanced datasets with clear class boundaries. However, accuracy can be misleading for imbalanced datasets since it ignores the severity of misclassifications and does not provide insights into prediction confidence. Unlike log loss, accuracy fails to account for probabilistic predictions and overall model calibration, limiting its usefulness in nuanced decision-making contexts.

Advantages and Limitations of Log Loss

Log loss measures the performance of classification models by penalizing incorrect predictions with a focus on confidence levels, offering a more nuanced evaluation than accuracy. It advantages include its sensitivity to probability estimates, enabling better calibration in probabilistic models, and its suitability for imbalanced datasets by reflecting prediction uncertainty. However, log loss can be heavily influenced by extreme probabilities and is less intuitive to interpret compared to accuracy, which may complicate model assessment and communication.

When to Prefer Accuracy Over Log Loss

Accuracy is preferred over log loss when the primary goal is to measure the overall correctness of categorical predictions in balanced datasets without emphasizing the confidence of predictions. In scenarios where model interpretability and straightforward performance metrics are critical, such as binary classification with equal class distributions, accuracy provides a clear and intuitive measure. Accuracy is especially useful when misclassification costs are uniform and the decision boundary is more important than probability calibration.

Log Loss for Probabilistic Model Evaluation

Log Loss measures the performance of probabilistic classification models by quantifying the uncertainty of predictions and penalizing incorrect classifications with high confidence more severely than accuracy. Unlike accuracy, which only considers the proportion of correct predictions, Log Loss evaluates the predicted probability distributions, making it essential for models where predicting probabilities accurately is critical, such as in logistic regression and neural networks. Optimizing Log Loss improves calibration and helps select models that better capture the uncertainty inherent in complex datasets.

Impact on Model Selection: Accuracy vs Log Loss

Accuracy measures the proportion of correctly classified instances, providing a straightforward evaluation metric, but it can be misleading in imbalanced datasets by ignoring the confidence of predictions. Log loss, also known as cross-entropy loss, captures the uncertainty in probabilistic outputs, penalizing models for overconfident incorrect predictions and rewarding well-calibrated probability estimates. Models selected based on log loss tend to perform better in real-world applications requiring reliable probability estimates, while accuracy-focused selection may favor models that perform well only on the majority class.

Visualizing Accuracy and Log Loss in Binary Classification

Visualizing accuracy and log loss in binary classification reveals different aspects of model performance, where accuracy measures the proportion of correct predictions and log loss quantifies the uncertainty of predictions by penalizing incorrect confident guesses more heavily. Plotting accuracy alongside log loss over training epochs provides insights into model convergence, highlighting cases where accuracy may plateau while log loss continues to improve due to better probabilistic predictions. Effective visualization techniques such as line graphs or heatmaps enable clear comparison and diagnostic analysis of model calibration and reliability in binary classification tasks.

Real-World Case Studies: Accuracy vs Log Loss

Real-world case studies demonstrate that accuracy alone can be misleading in imbalanced classification tasks, where log loss provides a more nuanced evaluation by penalizing false confident predictions. For example, in fraud detection, models with similar accuracy scores often exhibit significantly different log loss values, highlighting the importance of probabilistic confidence calibration. These insights underscore that log loss is essential for optimizing model performance in scenarios demanding reliable probability estimates.

Best Practices for Metric Selection in Data Science

Selecting the appropriate metric in data science hinges on the specific problem context, where accuracy excels in balanced classification scenarios but may be misleading with imbalanced datasets. Log loss provides a nuanced evaluation by penalizing false classifications with varying confidence levels, making it ideal for probabilistic models and risk-sensitive applications. Best practices recommend leveraging log loss for model calibration and fine-grained performance insights, while accuracy serves as a complementary, straightforward metric in initial model assessments.

accuracy vs log loss Infographic

techiny.com

techiny.com