Hyperparameter tuning involves selecting the best set of external configurations for a machine learning model to improve its performance, such as learning rate or number of trees, which are not learned from the data. Parameter tuning, on the other hand, refers to optimizing the internal parameters that the model learns during training, like weights and biases in neural networks. Effective hyperparameter tuning can significantly enhance model accuracy by guiding the training process, while parameter tuning fine-tunes the model's predictions based on the given dataset.

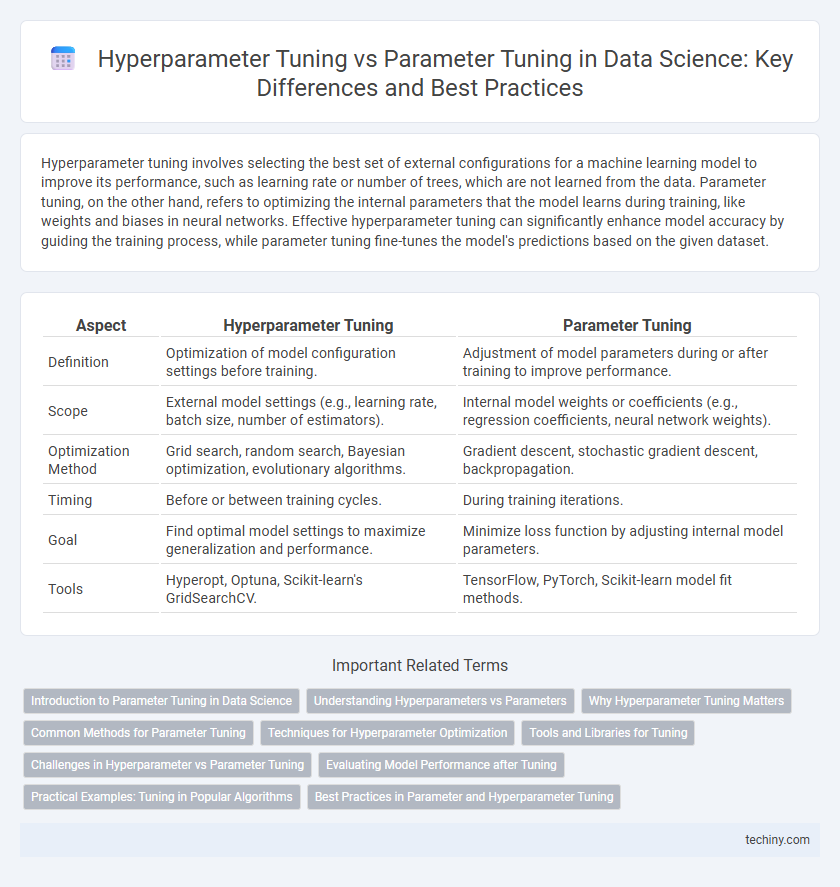

Table of Comparison

| Aspect | Hyperparameter Tuning | Parameter Tuning |

|---|---|---|

| Definition | Optimization of model configuration settings before training. | Adjustment of model parameters during or after training to improve performance. |

| Scope | External model settings (e.g., learning rate, batch size, number of estimators). | Internal model weights or coefficients (e.g., regression coefficients, neural network weights). |

| Optimization Method | Grid search, random search, Bayesian optimization, evolutionary algorithms. | Gradient descent, stochastic gradient descent, backpropagation. |

| Timing | Before or between training cycles. | During training iterations. |

| Goal | Find optimal model settings to maximize generalization and performance. | Minimize loss function by adjusting internal model parameters. |

| Tools | Hyperopt, Optuna, Scikit-learn's GridSearchCV. | TensorFlow, PyTorch, Scikit-learn model fit methods. |

Introduction to Parameter Tuning in Data Science

Parameter tuning in data science involves adjusting the internal variables of a machine learning model to optimize its performance on a specific task. Unlike hyperparameter tuning, which focuses on external configuration settings such as learning rate or number of trees, parameter tuning directly refines the model's weights or coefficients based on training data. Effective parameter tuning enhances predictive accuracy by minimizing error and improving model generalization.

Understanding Hyperparameters vs Parameters

Hyperparameters are configuration settings set before the learning process begins, controlling the model's overall behavior and complexity, such as learning rate, batch size, or number of trees in a random forest. Parameters are internal model components learned from data during training, like weights in neural networks or coefficients in linear regression. Effective hyperparameter tuning optimizes these external settings to improve model performance, while parameter tuning involves adjusting the model itself based on the training data.

Why Hyperparameter Tuning Matters

Hyperparameter tuning is essential in data science because it directly influences the model's learning process and generalization capabilities by setting values like learning rate, number of trees, or regularization strength before training begins. Unlike parameter tuning, which adjusts model weights based on data to minimize error, hyperparameter tuning controls the model's architecture and training behavior, significantly impacting performance and avoiding overfitting. Optimizing hyperparameters leads to improved model accuracy, faster convergence, and better predictive power in real-world applications.

Common Methods for Parameter Tuning

Common methods for parameter tuning in data science include grid search, random search, and Bayesian optimization, each systematically exploring hyperparameter values to enhance model performance. Grid search exhaustively evaluates combinations, while random search samples parameters randomly, often finding effective settings more efficiently. Bayesian optimization uses probabilistic models to predict promising hyperparameters, balancing exploration and exploitation for optimized tuning outcomes.

Techniques for Hyperparameter Optimization

Hyperparameter tuning involves optimizing external model configurations such as learning rate, batch size, and number of trees, which significantly impact model performance and generalization. Techniques for hyperparameter optimization include grid search, random search, Bayesian optimization, and gradient-based methods, each offering different trade-offs between computational cost and search efficiency. Parameter tuning, on the other hand, adjusts internal model weights during training through algorithms like gradient descent, directly minimizing loss functions to improve accuracy.

Tools and Libraries for Tuning

Hyperparameter tuning involves optimizing model settings like learning rate and regularization strength, typically using tools such as Optuna, Hyperopt, and Scikit-learn's GridSearchCV and RandomizedSearchCV for automated search across hyperparameter spaces. Parameter tuning focuses on adjusting model parameters during training, commonly managed through algorithms embedded in libraries like TensorFlow, PyTorch, and XGBoost that update weights based on gradient descent or boosting techniques. These specialized tools streamline both tuning processes, enhancing model performance and predictive accuracy in data science workflows.

Challenges in Hyperparameter vs Parameter Tuning

Hyperparameter tuning involves selecting the best model settings, such as learning rate and batch size, which require extensive computational resources and can lead to overfitting if not properly managed. Parameter tuning, on the other hand, adjusts internal model weights during training but is generally handled by optimization algorithms like gradient descent, posing fewer manual challenges. The main difficulty in hyperparameter tuning lies in the combinatorial explosion of possible configurations, necessitating efficient search methods like grid search, random search, or Bayesian optimization to balance model performance and training time.

Evaluating Model Performance after Tuning

Evaluating model performance after hyperparameter tuning involves assessing metrics such as accuracy, precision, recall, or F1-score on validation or test datasets to ensure the model generalizes well to unseen data. Parameter tuning focuses on optimizing the internal weights of the model through training algorithms like gradient descent, while hyperparameter tuning adjusts external settings like learning rate, batch size, or tree depth that control the training process. Effective model evaluation combines these approaches to balance bias and variance, preventing overfitting and achieving robust predictive performance.

Practical Examples: Tuning in Popular Algorithms

Hyperparameter tuning involves adjusting settings like learning rate and batch size in algorithms such as Random Forests and Gradient Boosting Machines to optimize model performance before training begins. Parameter tuning, by contrast, refers to refining model coefficients or weights during the training process, as seen in linear regression or neural networks. Practical examples include using grid search for hyperparameter tuning in Support Vector Machines and stochastic gradient descent for parameter optimization in deep learning models.

Best Practices in Parameter and Hyperparameter Tuning

Best practices in parameter and hyperparameter tuning emphasize systematic searching methods such as grid search, random search, and Bayesian optimization to enhance model performance. Parameters are internal model variables learned from training data, while hyperparameters are external configurations set prior to learning that significantly influence the optimization process. Leveraging cross-validation techniques ensures robust evaluation of tuning strategies, minimizing overfitting and improving generalization accuracy in machine learning models.

hyperparameter tuning vs parameter tuning Infographic

techiny.com

techiny.com