Hyperparameter tuning focuses on optimizing the algorithm's parameters to improve model performance without changing the data, while feature engineering involves creating or transforming input variables to enhance the model's predictive power. Effective feature engineering can provide more meaningful data representations, often resulting in greater performance gains than hyperparameter tuning alone. Combining both strategies ensures robust model optimization by refining data quality and algorithmic settings simultaneously.

Table of Comparison

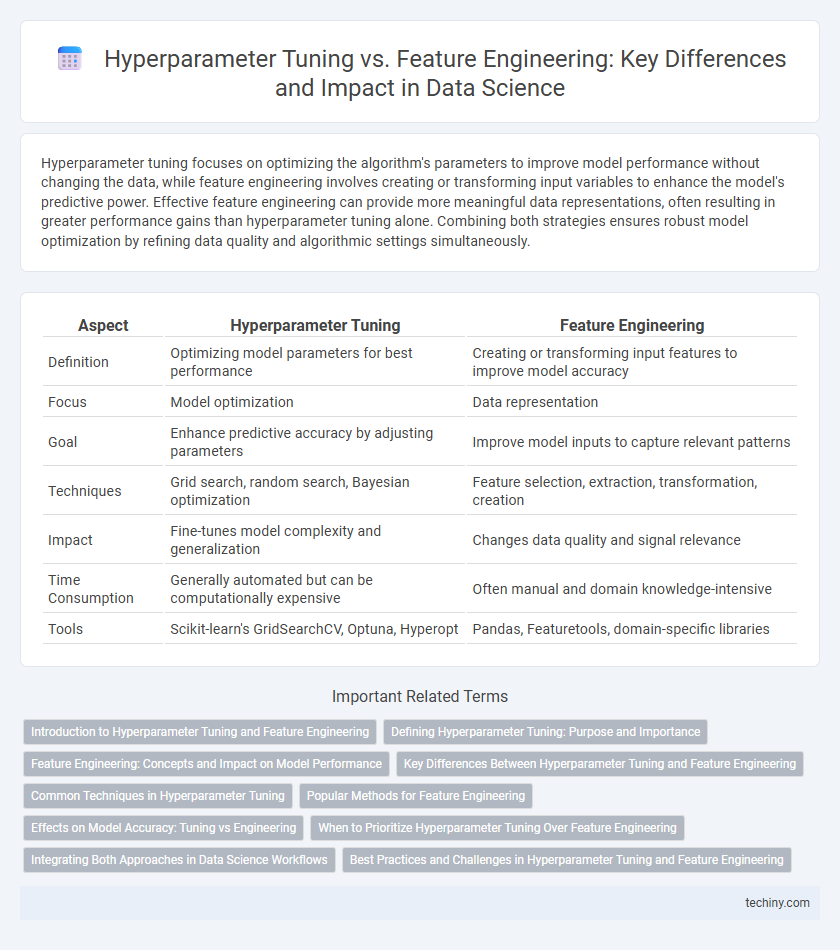

| Aspect | Hyperparameter Tuning | Feature Engineering |

|---|---|---|

| Definition | Optimizing model parameters for best performance | Creating or transforming input features to improve model accuracy |

| Focus | Model optimization | Data representation |

| Goal | Enhance predictive accuracy by adjusting parameters | Improve model inputs to capture relevant patterns |

| Techniques | Grid search, random search, Bayesian optimization | Feature selection, extraction, transformation, creation |

| Impact | Fine-tunes model complexity and generalization | Changes data quality and signal relevance |

| Time Consumption | Generally automated but can be computationally expensive | Often manual and domain knowledge-intensive |

| Tools | Scikit-learn's GridSearchCV, Optuna, Hyperopt | Pandas, Featuretools, domain-specific libraries |

Introduction to Hyperparameter Tuning and Feature Engineering

Hyperparameter tuning focuses on optimizing the settings of machine learning algorithms to improve model performance, involving techniques like grid search, random search, and Bayesian optimization. Feature engineering transforms raw data into meaningful features through processes such as normalization, encoding, and extraction, enhancing the predictive power of models. Both hyperparameter tuning and feature engineering are crucial for building high-accuracy data science models by ensuring the algorithm is well-configured and the input data is well-represented.

Defining Hyperparameter Tuning: Purpose and Importance

Hyperparameter tuning involves systematically optimizing model parameters that are set before the learning process to enhance predictive performance and generalization. It plays a crucial role in improving algorithm accuracy by adjusting parameters such as learning rate, regularization strength, and tree depth based on validation metrics. Effective hyperparameter tuning complements feature engineering by maximizing model potential without altering input data characteristics.

Feature Engineering: Concepts and Impact on Model Performance

Feature engineering in data science involves transforming raw data into meaningful features that enhance model accuracy and predictive power. It includes techniques such as normalization, encoding categorical variables, and creating interaction terms, which directly influence model performance by improving data representation. Effective feature engineering often yields greater improvements than hyperparameter tuning alone, as it addresses the quality and relevance of the input data before model training.

Key Differences Between Hyperparameter Tuning and Feature Engineering

Hyperparameter tuning optimizes the configuration settings of machine learning algorithms to improve model performance, while feature engineering involves creating, modifying, or selecting input variables to better represent the underlying data patterns. Hyperparameter tuning directly alters the model's learning process, including parameters like learning rate and tree depth, whereas feature engineering focuses on transforming raw data into meaningful features that enhance model interpretability and predictive power. Understanding these distinctions is crucial for effective model development and achieving higher accuracy in data science workflows.

Common Techniques in Hyperparameter Tuning

Common techniques in hyperparameter tuning include grid search, random search, and Bayesian optimization, each optimizing model performance by systematically exploring hyperparameter spaces. Grid search exhaustively evaluates predefined parameter combinations, while random search samples hyperparameters randomly, potentially finding optimal values more efficiently. Bayesian optimization uses probabilistic models to predict promising hyperparameters, balancing exploration and exploitation to improve tuning efficiency in complex data science models.

Popular Methods for Feature Engineering

Popular methods for feature engineering in data science include scaling techniques like Min-Max Scaling and Standardization, which normalize feature ranges to improve model convergence. Dimensionality reduction methods such as Principal Component Analysis (PCA) help reduce feature space complexity while retaining essential information. Encoding categorical variables with one-hot encoding or target encoding transforms qualitative data into machine-readable formats, enhancing model interpretability and performance.

Effects on Model Accuracy: Tuning vs Engineering

Hyperparameter tuning fine-tunes model parameters such as learning rate and regularization to optimize performance, often yielding significant accuracy improvements without altering the data itself. Feature engineering transforms raw data into meaningful features, enhancing the model's ability to capture patterns and relationships, which can lead to more substantial gains in predictive accuracy compared to hyperparameter tuning alone. Combining both approaches typically results in the highest model accuracy, as engineered features provide richer input while tuned hyperparameters optimize the learning process.

When to Prioritize Hyperparameter Tuning Over Feature Engineering

Prioritize hyperparameter tuning over feature engineering when existing features are already well-constructed and the model's performance plateau suggests optimization within the algorithm's parameters can yield improvements. Algorithms like gradient boosting machines and neural networks often benefit more from tuning hyperparameters such as learning rate, depth, or regularization strength when feature space quality is stable. In scenarios with robust datasets and limited feature extraction potential, refining hyperparameters accelerates convergence and enhances model generalization effectively.

Integrating Both Approaches in Data Science Workflows

Integrating hyperparameter tuning and feature engineering enhances model performance by optimizing both the algorithm parameters and input data quality simultaneously. Automated machine learning platforms increasingly support joint optimization processes, leveraging techniques such as Bayesian optimization and feature selection algorithms. Combining these approaches streamlines data science workflows, leading to more robust predictive models and improved generalization on unseen datasets.

Best Practices and Challenges in Hyperparameter Tuning and Feature Engineering

Hyperparameter tuning requires careful selection of parameter ranges and efficient search algorithms like Bayesian optimization to balance model complexity and computational cost, while feature engineering demands domain expertise for creating meaningful variables and managing multicollinearity. Both processes face challenges such as overfitting during hyperparameter optimization and risk of noise introduction in feature transformation, necessitating robust validation techniques like cross-validation. Best practices involve iterative experimentation, automation through tools like AutoML for hyperparameter tuning, and leveraging data visualization alongside statistical tests to refine features effectively.

Hyperparameter Tuning vs Feature Engineering Infographic

techiny.com

techiny.com