Training data is used to teach machine learning models by allowing them to learn patterns and relationships within the dataset. Testing data evaluates the model's performance on unseen examples to measure its accuracy and generalization capabilities. Balancing quality and quantity in both training and testing datasets is crucial for building robust and reliable predictive models.

Table of Comparison

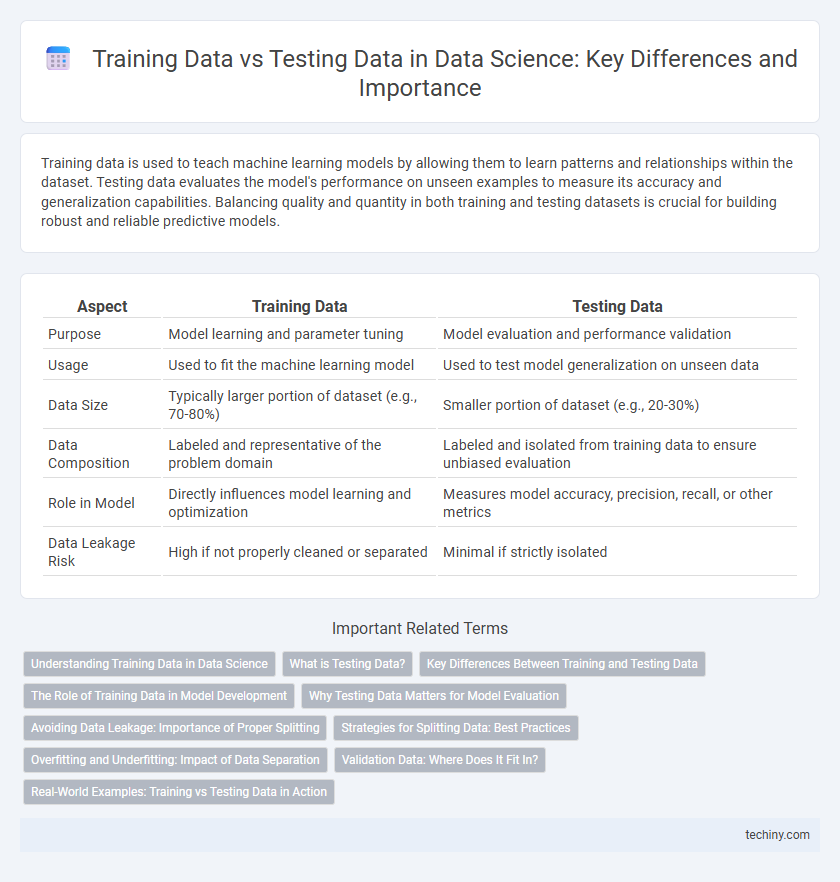

| Aspect | Training Data | Testing Data |

|---|---|---|

| Purpose | Model learning and parameter tuning | Model evaluation and performance validation |

| Usage | Used to fit the machine learning model | Used to test model generalization on unseen data |

| Data Size | Typically larger portion of dataset (e.g., 70-80%) | Smaller portion of dataset (e.g., 20-30%) |

| Data Composition | Labeled and representative of the problem domain | Labeled and isolated from training data to ensure unbiased evaluation |

| Role in Model | Directly influences model learning and optimization | Measures model accuracy, precision, recall, or other metrics |

| Data Leakage Risk | High if not properly cleaned or separated | Minimal if strictly isolated |

Understanding Training Data in Data Science

Training data in data science is a labeled dataset used to teach machine learning models to identify patterns and relationships within the data. It enables algorithms to generalize from examples by adjusting model parameters iteratively to minimize prediction errors. Properly curated training data ensures robust model accuracy and generalizability across unseen scenarios.

What is Testing Data?

Testing data is a subset of the dataset used to evaluate the performance and generalization ability of a trained machine learning model. It consists of unseen examples that the model has not encountered during the training phase, allowing for an unbiased assessment of accuracy, precision, recall, and other relevant metrics. Properly structured testing data helps identify overfitting and ensures the model's robustness on real-world data.

Key Differences Between Training and Testing Data

Training data is used to teach machine learning models by allowing them to learn patterns and features, while testing data evaluates the model's performance on unseen examples to measure generalization. Key differences include the training data's role in model fitting and parameter tuning, whereas testing data remains untouched during training to provide an unbiased assessment. The accuracy and reliability of machine learning predictions largely depend on the clear separation and appropriate use of these two data sets.

The Role of Training Data in Model Development

Training data serves as the foundational input for machine learning models, enabling them to learn patterns, relationships, and underlying structures within the dataset. It directly influences model accuracy and generalization, as the quality and diversity of training data determine how well the model adapts to new, unseen data. Effective model development depends on well-prepared, representative training data to reduce bias and improve predictive performance across various real-world applications.

Why Testing Data Matters for Model Evaluation

Testing data is crucial for model evaluation as it provides an unbiased assessment of a model's performance on unseen information, ensuring generalizability beyond the training dataset. By evaluating metrics like accuracy, precision, recall, and F1 score on testing data, data scientists can detect overfitting and validate the model's real-world applicability. Proper use of testing data ultimately guides improvements, model selection, and reliable deployment in production environments.

Avoiding Data Leakage: Importance of Proper Splitting

Properly splitting data into training and testing sets is crucial for avoiding data leakage, which can lead to overly optimistic model performance. Ensuring that testing data remains completely unseen during training preserves the integrity of model evaluation. Techniques like stratified sampling and time-based splitting help maintain representative and independent datasets for reliable predictive analytics.

Strategies for Splitting Data: Best Practices

Effective strategies for splitting data in data science involve allocating distinct subsets for training and testing to ensure model generalization and prevent overfitting. Common best practices include using stratified sampling to maintain class distribution, applying an 80/20 or 70/30 split ratio, and leveraging cross-validation techniques like k-fold to maximize data utility and reliability. Balancing data representativeness and independence between training and testing sets is crucial for accurate performance evaluation and robust predictive modeling.

Overfitting and Underfitting: Impact of Data Separation

Training data enables the model to learn patterns and relationships, but excessive reliance on it can lead to overfitting, where the model performs well on training data but poorly on unseen data. Testing data serves as an independent benchmark to evaluate model generalization and detect underfitting, indicating insufficient learning from the training set. Proper separation of training and testing data is crucial to balance bias and variance, ensuring the model accurately captures underlying trends without memorizing noise.

Validation Data: Where Does It Fit In?

Validation data serves as a critical intermediary between training data and testing data in data science workflows, enabling model tuning and hyperparameter optimization without biasing the evaluation results. It provides a separate dataset from training to validate model performance during development, preventing overfitting by ensuring the model generalizes well on unseen data. This distinct partition helps accurately assess the model before the final evaluation on testing data, improving reliability and robustness in predictive analytics.

Real-World Examples: Training vs Testing Data in Action

Training data shapes machine learning models by providing patterns from labeled datasets, such as customer purchase histories for recommendation systems. Testing data evaluates model performance using unseen instances like new loan applications to predict credit risk accurately. Real-world applications highlight the split's importance in avoiding overfitting and ensuring generalization across diverse scenarios.

training data vs testing data Infographic

techiny.com

techiny.com