TF-IDF quantifies the importance of words in a document relative to a corpus, emphasizing term frequency and inverse document frequency without capturing semantic meanings. Word2Vec generates dense vector embeddings by learning word contexts in large text datasets, effectively encoding semantic relationships and similarities. Choosing between TF-IDF and Word2Vec depends on the task: TF-IDF suits keyword extraction and sparse representations, while Word2Vec excels in capturing context and meaning for advanced natural language processing.

Table of Comparison

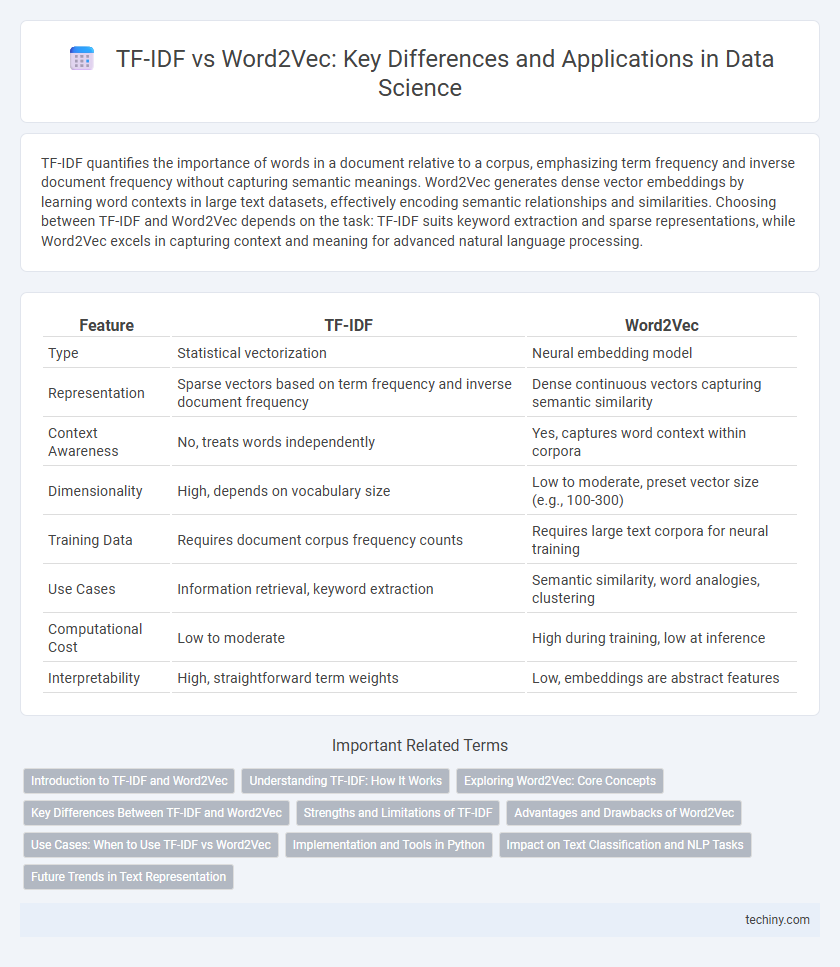

| Feature | TF-IDF | Word2Vec |

|---|---|---|

| Type | Statistical vectorization | Neural embedding model |

| Representation | Sparse vectors based on term frequency and inverse document frequency | Dense continuous vectors capturing semantic similarity |

| Context Awareness | No, treats words independently | Yes, captures word context within corpora |

| Dimensionality | High, depends on vocabulary size | Low to moderate, preset vector size (e.g., 100-300) |

| Training Data | Requires document corpus frequency counts | Requires large text corpora for neural training |

| Use Cases | Information retrieval, keyword extraction | Semantic similarity, word analogies, clustering |

| Computational Cost | Low to moderate | High during training, low at inference |

| Interpretability | High, straightforward term weights | Low, embeddings are abstract features |

Introduction to TF-IDF and Word2Vec

TF-IDF (Term Frequency-Inverse Document Frequency) quantifies the importance of words in a document relative to a corpus, optimizing information retrieval by highlighting distinctive terms. Word2Vec leverages neural networks to generate dense vector representations of words, capturing semantic relationships and contextual similarity through continuous embedding spaces. These foundational techniques enable advanced natural language processing by transforming text into analyzable numerical formats.

Understanding TF-IDF: How It Works

TF-IDF (Term Frequency-Inverse Document Frequency) quantifies the importance of a word in a document relative to a corpus by balancing word frequency with its rarity across documents. It assigns higher weights to terms that appear frequently in a specific document but infrequently in the entire collection, highlighting distinctive keywords. This numeric representation enables efficient feature extraction for text classification, information retrieval, and natural language processing tasks.

Exploring Word2Vec: Core Concepts

Word2Vec utilizes neural network models to generate dense vector representations of words, capturing semantic relationships based on contextual usage in large text corpora. Unlike TF-IDF, which relies on term frequency and inverse document frequency to assess word importance, Word2Vec encodes continuous vector spaces where similar words lie close together, enabling nuanced understanding of word meaning and analogy tasks. This approach enhances text analysis by leveraging distributed representations to reflect semantic and syntactic word similarities effectively.

Key Differences Between TF-IDF and Word2Vec

TF-IDF measures the importance of a word in a document relative to a corpus, based on frequency statistics, while Word2Vec generates dense vector representations capturing semantic meanings through neural network models. TF-IDF produces sparse vectors that highlight keyword relevance, whereas Word2Vec captures contextual relationships and word similarities in continuous vector space. The key difference lies in TF-IDF's focus on word occurrence and Word2Vec's ability to understand semantic context through embedding learning.

Strengths and Limitations of TF-IDF

TF-IDF excels at highlighting important words in a document by quantifying their frequency relative to their occurrence in a corpus, making it effective for keyword extraction and document classification. It struggles with capturing semantic relationships and contextual meanings because it treats each word independently and ignores word order. TF-IDF works best in applications requiring simple text representation, but it falls short for tasks needing deeper understanding of language nuances or word similarities.

Advantages and Drawbacks of Word2Vec

Word2Vec excels in capturing semantic relationships and contextual similarities between words by generating dense vector representations, making it highly effective for tasks like clustering and recommendation systems. However, it requires substantial training data and computational resources compared to TF-IDF, and it may struggle with rare or out-of-vocabulary words due to its dependence on context. Unlike TF-IDF's simplicity and interpretability, Word2Vec's embeddings are less transparent, which can complicate model explainability in data science applications.

Use Cases: When to Use TF-IDF vs Word2Vec

TF-IDF excels in scenarios requiring straightforward keyword extraction and frequency analysis, such as document classification and information retrieval, where understanding the importance of individual terms is crucial. Word2Vec is ideal for capturing semantic relationships and contextual meaning in text, making it suitable for tasks like sentiment analysis, text similarity, and recommendation systems. Choose TF-IDF for interpretability and simplicity, while Word2Vec is preferable for nuanced language understanding and complex pattern recognition in large corpora.

Implementation and Tools in Python

TF-IDF implementation in Python typically leverages libraries like Scikit-learn, which provides straightforward functions such as TfidfVectorizer for converting text into weighted term frequency vectors. Word2Vec models, on the other hand, are commonly implemented using the Gensim library, enabling efficient training and usage of continuous vector representations through algorithms like Skip-gram or CBOW. Both approaches benefit from extensive community support and integration with tools like NLTK or SpaCy for preprocessing, making them accessible choices for text feature extraction in Data Science workflows.

Impact on Text Classification and NLP Tasks

TF-IDF effectively captures term importance by measuring word frequency relative to its inverse document frequency, enhancing text classification by weighting rare but relevant words more heavily. Word2Vec generates dense vector embeddings that capture semantic relationships between words, significantly improving performance in complex NLP tasks like sentiment analysis and named entity recognition. Combining TF-IDF's explicit term relevance with Word2Vec's contextual understanding yields more robust and accurate models for various text classification challenges.

Future Trends in Text Representation

Future trends in text representation emphasize the integration of TF-IDF and word2vec techniques to enhance semantic understanding and contextual relevance. Advances in neural embedding models are driving the evolution from traditional TF-IDF frequency-based methods towards more sophisticated word2vec-based vector representations, capturing deeper linguistic nuances. Emerging hybrid models leverage the strengths of both approaches to improve accuracy in natural language processing tasks like sentiment analysis and information retrieval.

TF-IDF vs word2vec Infographic

techiny.com

techiny.com