Cosine similarity measures the cosine of the angle between two vectors, emphasizing their directional alignment rather than magnitude, making it ideal for text analysis and high-dimensional data. Euclidean distance calculates the straight-line distance between two points in space, reflecting absolute differences in magnitude, which suits continuous, low-dimensional datasets. Choosing between cosine similarity and Euclidean distance depends on whether the focus is on orientation or actual distances in the feature space.

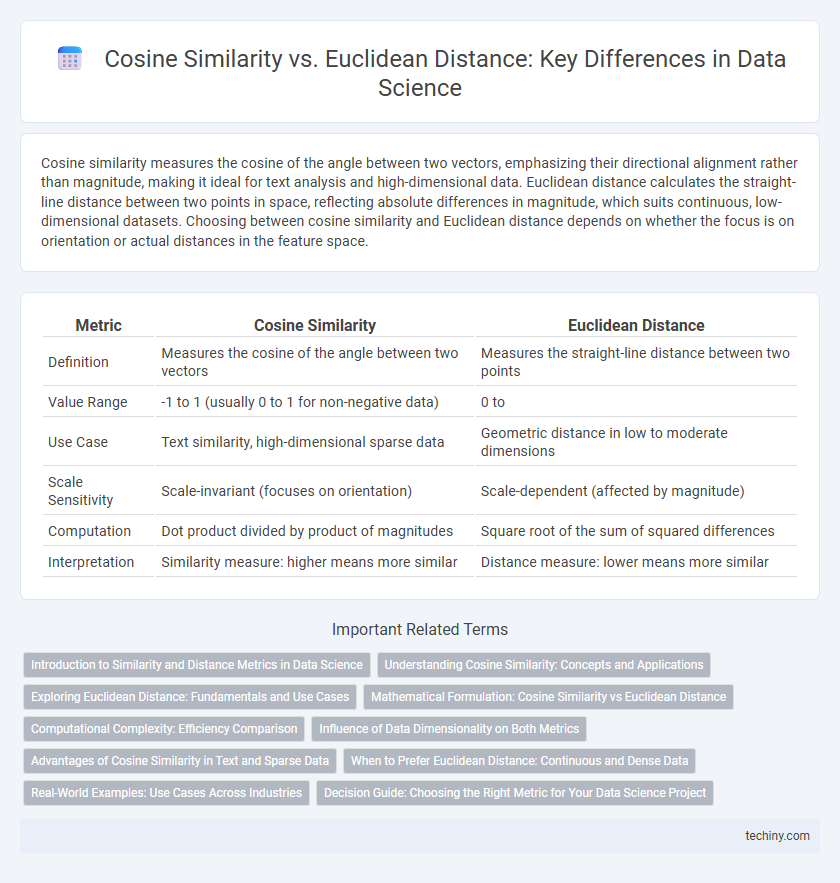

Table of Comparison

| Metric | Cosine Similarity | Euclidean Distance |

|---|---|---|

| Definition | Measures the cosine of the angle between two vectors | Measures the straight-line distance between two points |

| Value Range | -1 to 1 (usually 0 to 1 for non-negative data) | 0 to |

| Use Case | Text similarity, high-dimensional sparse data | Geometric distance in low to moderate dimensions |

| Scale Sensitivity | Scale-invariant (focuses on orientation) | Scale-dependent (affected by magnitude) |

| Computation | Dot product divided by product of magnitudes | Square root of the sum of squared differences |

| Interpretation | Similarity measure: higher means more similar | Distance measure: lower means more similar |

Introduction to Similarity and Distance Metrics in Data Science

Cosine similarity measures the cosine of the angle between two non-zero vectors, capturing orientation rather than magnitude, making it ideal for high-dimensional sparse data like text documents. Euclidean distance calculates the straight-line distance between points in continuous space, emphasizing absolute differences in feature values. Both metrics serve crucial roles in clustering, classification, and recommendation systems by quantifying relationships and patterns within datasets.

Understanding Cosine Similarity: Concepts and Applications

Cosine similarity measures the cosine of the angle between two vectors, capturing their directional alignment regardless of magnitude, making it ideal for text analysis and recommendation systems. It quantifies how similar two documents or data points are based on the orientation of their feature vectors, rather than their absolute values. This approach excels in high-dimensional spaces typical of natural language processing, where Euclidean distance may be less effective due to scale sensitivity.

Exploring Euclidean Distance: Fundamentals and Use Cases

Euclidean distance measures the straight-line distance between two points in a multidimensional space, making it fundamental for clustering algorithms like K-means and hierarchical clustering in data science. Its simplicity and effectiveness in quantifying spatial relationships make it ideal for datasets where magnitude differences are crucial. Use cases include image recognition, anomaly detection, and any scenario requiring accurate metric space analysis.

Mathematical Formulation: Cosine Similarity vs Euclidean Distance

Cosine similarity measures the cosine of the angle between two non-zero vectors by dividing their dot product by the product of their magnitudes, mathematically expressed as cosine similarity = (A * B) / (||A|| ||B||). Euclidean distance calculates the straight-line distance between two points in n-dimensional space using the formula sqrt(S (Ai - Bi)2), reflecting the geometric distance magnitude. While cosine similarity captures orientation and is scale-invariant, Euclidean distance focuses on absolute spatial separation, making each metric suitable for different analytical scenarios in data science.

Computational Complexity: Efficiency Comparison

Cosine similarity typically requires fewer computational resources since it involves calculating the dot product and magnitudes of vectors, resulting in O(n) complexity for n-dimensional data. Euclidean distance demands calculating the square root of the sum of squared differences, which can increase processing time, especially for large datasets. In high-dimensional spaces, cosine similarity often proves more efficient due to its focus on angular differences rather than absolute distances.

Influence of Data Dimensionality on Both Metrics

Cosine similarity remains effective in high-dimensional spaces by measuring the angle between vectors, reducing sensitivity to magnitude and data sparsity common in text and recommendation systems. Euclidean distance, however, suffers from the curse of dimensionality, as distances tend to become less discriminative and converge, diminishing its usefulness in distinguishing data points. High dimensionality increases noise and reduces the contrast between distances, making cosine similarity a preferred metric in many high-dimensional data science applications.

Advantages of Cosine Similarity in Text and Sparse Data

Cosine similarity effectively captures the orientation of high-dimensional text vectors, making it ideal for comparing documents with varying lengths and sparse word occurrences. It mitigates the influence of vector magnitude, which is crucial in natural language processing tasks involving TF-IDF representations or one-hot encoded vectors. This results in more meaningful similarity measures for text data compared to Euclidean distance, which can be skewed by the sparsity and scale of features.

When to Prefer Euclidean Distance: Continuous and Dense Data

Euclidean distance is preferred for measuring similarity in continuous and dense data because it captures the absolute magnitude of differences between points in a multidimensional space. This metric effectively reflects spatial relationships when data features are on the same scale, making it ideal for applications like image recognition and clustering of numerical datasets. Euclidean distance provides intuitive geometric interpretations that are valuable in contexts where the actual distance between points represents meaningful dissimilarity.

Real-World Examples: Use Cases Across Industries

Cosine similarity enhances recommendation systems in streaming platforms by measuring the angle between user preference vectors, enabling personalized content suggestions even when magnitudes differ. Euclidean distance is pivotal in healthcare for clustering patient data based on biometrics, facilitating diagnosis and treatment plans by quantifying absolute differences in measurements. In finance, both metrics optimize fraud detection systems; cosine similarity identifies unusual transaction patterns irrespective of scale, while Euclidean distance detects anomalies based on raw numerical differences.

Decision Guide: Choosing the Right Metric for Your Data Science Project

Cosine similarity measures the cosine of the angle between two vectors, making it ideal for text analysis and high-dimensional sparse data where direction matters more than magnitude. Euclidean distance calculates the straight-line distance between two points, best suited for continuous numeric data where absolute differences are critical. Select cosine similarity when focusing on orientation and pattern recognition in feature space, and Euclidean distance for clustering or regression tasks that depend on actual value differences.

cosine similarity vs Euclidean distance Infographic

techiny.com

techiny.com