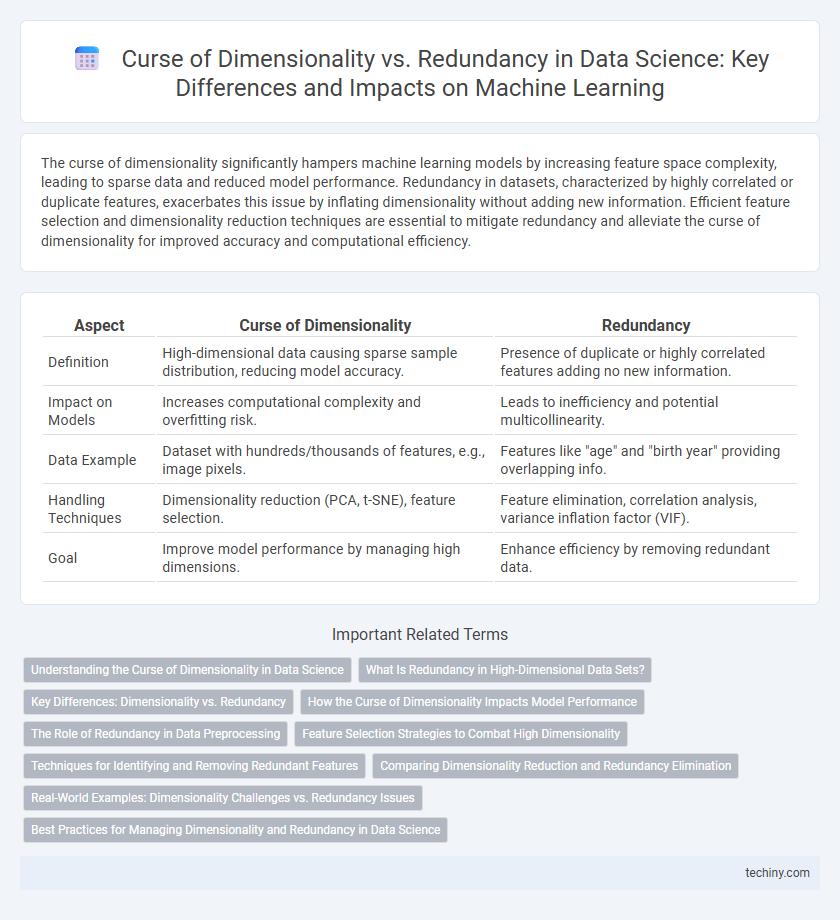

The curse of dimensionality significantly hampers machine learning models by increasing feature space complexity, leading to sparse data and reduced model performance. Redundancy in datasets, characterized by highly correlated or duplicate features, exacerbates this issue by inflating dimensionality without adding new information. Efficient feature selection and dimensionality reduction techniques are essential to mitigate redundancy and alleviate the curse of dimensionality for improved accuracy and computational efficiency.

Table of Comparison

| Aspect | Curse of Dimensionality | Redundancy |

|---|---|---|

| Definition | High-dimensional data causing sparse sample distribution, reducing model accuracy. | Presence of duplicate or highly correlated features adding no new information. |

| Impact on Models | Increases computational complexity and overfitting risk. | Leads to inefficiency and potential multicollinearity. |

| Data Example | Dataset with hundreds/thousands of features, e.g., image pixels. | Features like "age" and "birth year" providing overlapping info. |

| Handling Techniques | Dimensionality reduction (PCA, t-SNE), feature selection. | Feature elimination, correlation analysis, variance inflation factor (VIF). |

| Goal | Improve model performance by managing high dimensions. | Enhance efficiency by removing redundant data. |

Understanding the Curse of Dimensionality in Data Science

The curse of dimensionality in data science refers to the exponential increase in data volume and computational complexity as the number of features grows, which often leads to sparse and less informative datasets. Redundancy in high-dimensional data occurs when multiple features convey overlapping or duplicated information, decreasing model efficiency and interpretability. Effective dimensionality reduction techniques, such as Principal Component Analysis (PCA) and t-Distributed Stochastic Neighbor Embedding (t-SNE), help mitigate these issues by preserving essential data characteristics while minimizing redundancy and complexity.

What Is Redundancy in High-Dimensional Data Sets?

Redundancy in high-dimensional data sets refers to the presence of highly correlated or duplicate features that convey overlapping information, which can obscure patterns and degrade model performance. Unlike the curse of dimensionality that emphasizes data sparsity and increased computational complexity, redundancy inflates the feature space unnecessarily, leading to overfitting and inefficiency. Effective feature selection or dimensionality reduction techniques help mitigate redundancy by identifying and removing irrelevant or repetitive attributes, enhancing the interpretability and accuracy of data science models.

Key Differences: Dimensionality vs. Redundancy

Curse of dimensionality refers to the exponential increase in data sparsity and computational complexity as feature dimensions grow, severely impacting model performance and generalization. Redundancy involves the presence of highly correlated or duplicate features that add no new information, causing inefficiencies and potential overfitting. While dimensionality measures the number of features, redundancy assesses the informational overlap within those features, highlighting distinct challenges in data preprocessing and feature selection.

How the Curse of Dimensionality Impacts Model Performance

The curse of dimensionality significantly degrades model performance by increasing data sparsity and noise, which complicates learning and decreases generalization. High-dimensional datasets often contain redundant features that do not contribute meaningful information, causing overfitting and inflated computational costs. Effective dimensionality reduction techniques like PCA and feature selection algorithms mitigate these issues by enhancing model accuracy and efficiency.

The Role of Redundancy in Data Preprocessing

Redundancy in data preprocessing plays a crucial role in mitigating the curse of dimensionality by reducing feature space without significant loss of information. Techniques such as feature selection and extraction identify and eliminate redundant variables, enhancing model performance and computational efficiency. Efficient redundancy management preserves data quality while addressing high-dimensional challenges in machine learning and data analysis.

Feature Selection Strategies to Combat High Dimensionality

Feature selection strategies address the curse of dimensionality by identifying and retaining the most informative features, thus reducing redundancy and enhancing model performance. Techniques like mutual information, recursive feature elimination, and LASSO regularization help isolate relevant variables by minimizing noise and irrelevant data. Effective feature selection not only improves computational efficiency but also prevents overfitting in high-dimensional datasets.

Techniques for Identifying and Removing Redundant Features

Techniques for identifying and removing redundant features in data science include correlation analysis, mutual information, and principal component analysis (PCA). Correlation analysis detects linear dependencies, while mutual information captures nonlinear relationships between features. PCA reduces dimensionality by transforming correlated variables into a smaller set of uncorrelated components, effectively mitigating the curse of dimensionality and improving model performance.

Comparing Dimensionality Reduction and Redundancy Elimination

Dimensionality reduction techniques such as Principal Component Analysis (PCA) and t-SNE tackle the curse of dimensionality by transforming high-dimensional data into lower-dimensional spaces while preserving essential patterns. Redundancy elimination methods focus on identifying and removing duplicate or highly correlated features to streamline datasets without altering the original feature space. Comparing the two, dimensionality reduction offers more comprehensive complexity reduction at the cost of interpretability, whereas redundancy elimination maintains feature interpretability but may be less effective in addressing data sparsity and model overfitting.

Real-World Examples: Dimensionality Challenges vs. Redundancy Issues

High-dimensional datasets in image recognition often suffer from the curse of dimensionality, where the sparse sampling of feature space can degrade model performance, while redundancy in sensor data, such as IoT measurements, leads to correlated features that increase computational complexity without adding value. In recommendation systems, dimensionality challenges arise from the vast number of user-item interactions, whereas redundancy manifests when similar user profiles or item attributes create overlapping information. Efficient feature selection and dimensionality reduction techniques like PCA help mitigate both high dimensionality and redundancy by identifying informative features and removing irrelevant or correlated inputs.

Best Practices for Managing Dimensionality and Redundancy in Data Science

Effective management of dimensionality and redundancy in data science involves techniques such as feature selection, principal component analysis (PCA), and autoencoders to reduce the feature space while preserving essential information. High-dimensional data often introduces sparsity and noise, exacerbating the curse of dimensionality, so applying dimensionality reduction improves model performance and interpretability. Regularly evaluating feature importance and correlation matrices helps identify redundant variables, enabling the creation of more efficient and robust predictive models.

curse of dimensionality vs redundancy Infographic

techiny.com

techiny.com